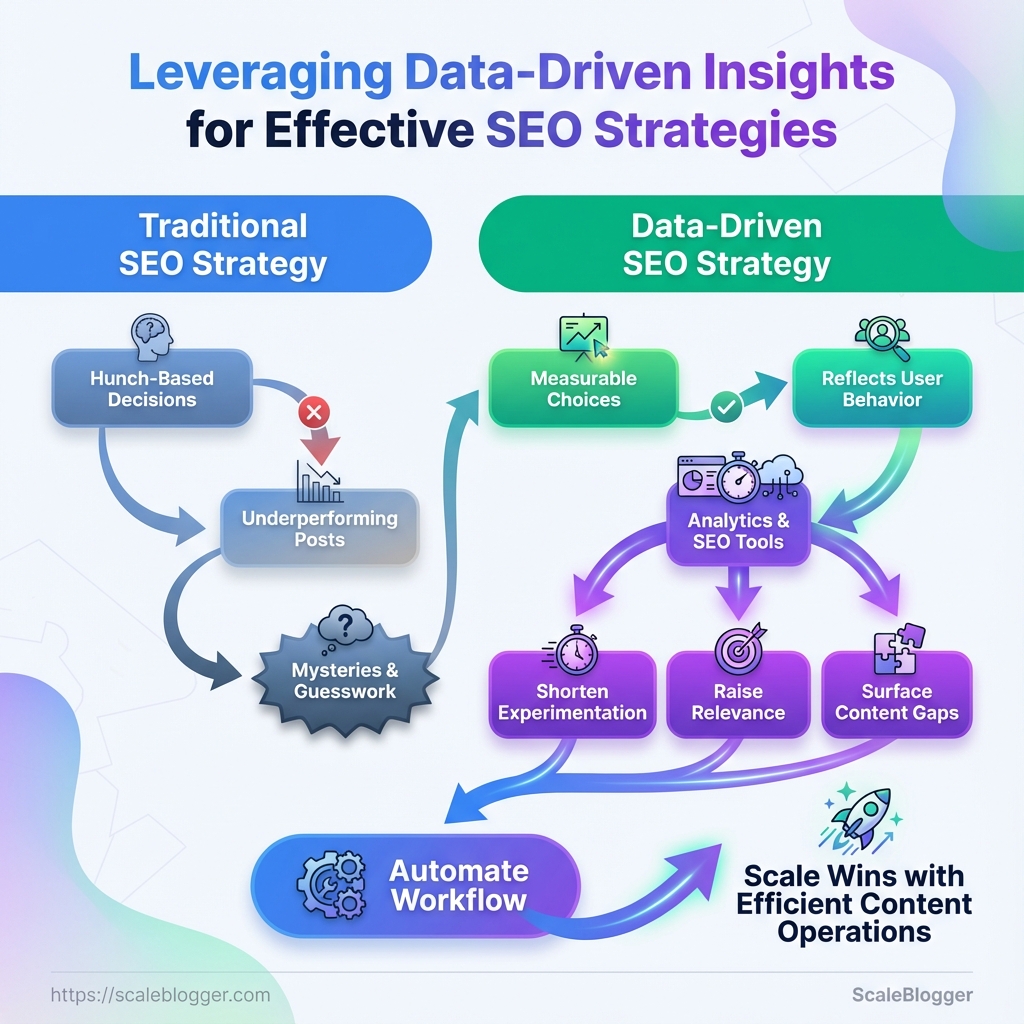

Content teams still publish by hunch: a headline that felt right, a topic that “should” rank, and hope traffic follows. Months later the post underperforms and the answer sits in raw logs. Shifting that guesswork into data-driven SEO turns those mysteries into measurable choices, so every title, page change, and internal link reflects user behavior, not optimism.

When decisions are guided by analytics and modern SEO tools, traffic signals reveal what to double down on and what to prune. That clarity shortens experimentation cycles, raises relevance for target queries, and surfaces content gaps worth owning. To scale those wins, use Scaleblogger to automate your analytics pulls and content ops.

What You’ll Need (Prerequisites & Tools)

Start with clean access and a tight toolset. Before any data-driven SEO work begins, confirm analytics ownership and the right permission levels, pick an SEO platform that fits budget and goals, and set up reliable exports and storage so nothing disappears when you need it. Those steps make audits faster, reporting reproducible, and experiments safe.

Analytics accounts & permission levels: Ensure Google Analytics 4 and Google Search Console are owned by a team admin.

SEO tool access: Service accounts or user seats for an SEO platform (Ahrefs, SEMrush) with export rights.

Data export & storage: Centralized storage (Google Drive, AWS S3, or enterprise storage) and an automated export schedule.

Skillset baseline: Familiarity with GA4 events, basic SQL or spreadsheet formulas, and on-page SEO concepts.

Grant permissions in this order:

1. Add a team admin in Google Search Console.
2. Add an Editor or Administrator role in GA4 for the person running reports.
3. Create an API key or service user for your SEO tool and test one sample export.

Tools & materials

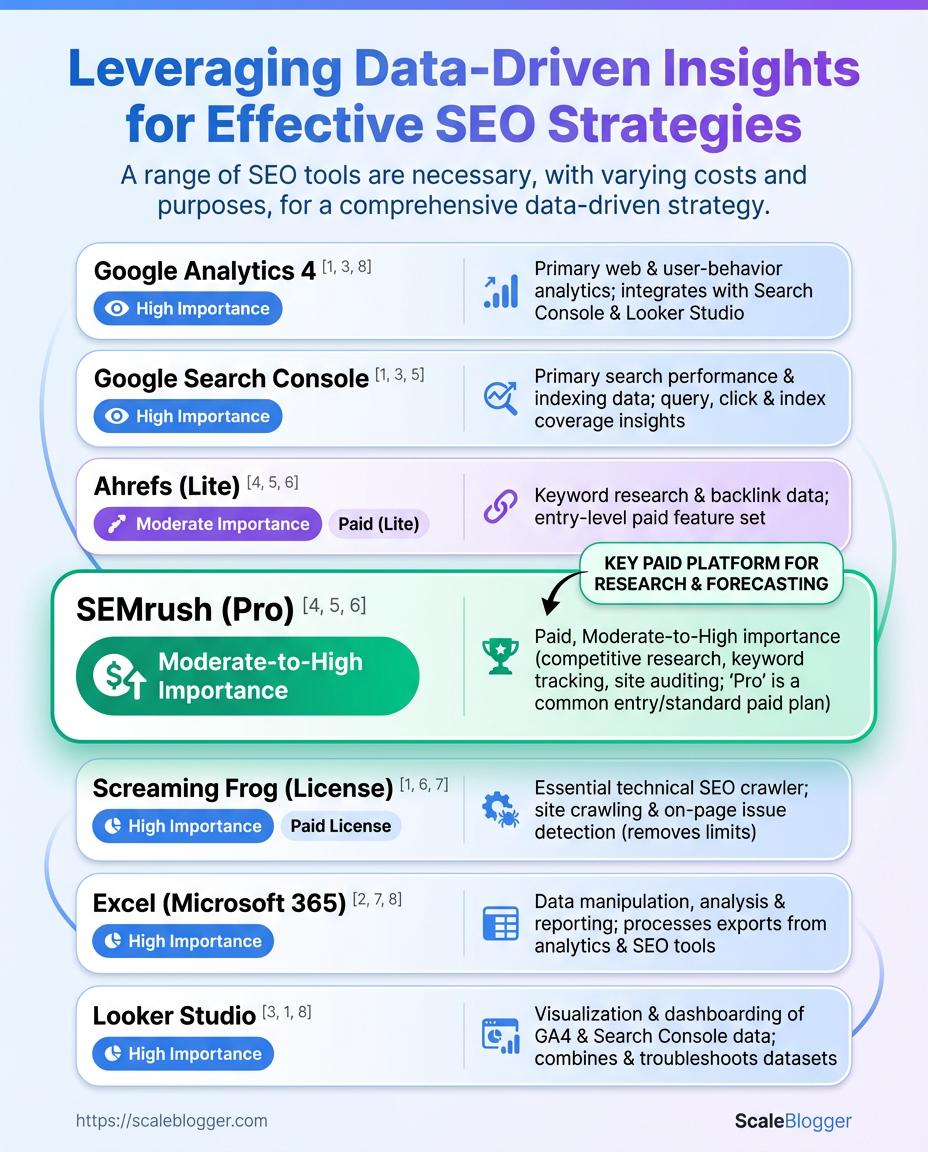

- Google Analytics 4: Core behavioral data and conversion tracking.

- Google Search Console: Search presence, index coverage, and query data.

- SEO platform (Ahrefs/SEMrush): Keyword research, backlink analysis, and site-level visibility.

- Crawl tool (Screaming Frog): Technical crawl data and URL-level metadata.

- Spreadsheet/BI tool (Excel/Looker Studio): Data modeling, dashboards, and visualizations.

Necessary tools, why they’re needed, and recommended plan tiers

| Tool | Purpose | Minimum Plan | Prep Action |
|---|---|---|---|
| Google Analytics 4 | Behavioral metrics, events, conversion tracking | Free | Create property, define events, verify data stream |
| Google Search Console | Search queries, index status, URL inspection | Free | Verify site, submit sitemap, assign owners |
| Ahrefs | Keyword research, backlinks, organic traffic estimates | Lite $99/mo | Create project, connect GSC, run site audit |
| SEMrush | Keyword tracking, competitor insights, content tools | Pro $119.95/mo | Add domain, configure position tracking, schedule exports |
| Screaming Frog | Full-site crawl, redirect chains, metadata issues | Free (500 URLs), License £219/yr | Install desktop app, set crawl config, export CSVs |
| Excel / Looker Studio | Data modeling, reporting, dashboards | Excel via Microsoft 365 $69.99/yr; Looker Studio free | Build templates, connect GA4/GSC, automate refreshes |

Key insight: Aligning permissions, export routines, and a mix of behavioral + crawl + keyword tools creates a reliable pipeline for data-driven SEO. Start with free Google tools, add an SEO platform for competitive depth, and use a crawl tool plus BI spreadsheets to turn data into action.

Confirming access and exports up front saves days later when chasing missing metrics. With these tools and the few setup steps above, the team can run repeatable audits, score content, and measure impact reliably.

For help automating the export-to-dashboard flow or scaling this stack, consider using an AI content automation partner like Scaleblogger.com to speed up pipelines and reporting.

Time Estimate & Difficulty Level

For a mid-sized data-driven SEO project, expect the full cycle to take roughly 4–8 weeks from initial data collection to the first actionable monitoring loop. Complexity scales with site size and technical debt: smaller blogs finish at the low end; enterprise sites with custom platforms push toward the high end. Below are realistic per-step time budgets and why each task varies in difficulty.

- Scope drivers: number of pages, CMS flexibility, crawl budget, existing analytics quality.

- Skill drivers: familiarity with SQL or BigQuery, experience running technical audits, and capacity to implement site changes.

- Tooling: using automated pipelines (for example, an AI-powered content automation platform) cuts the manual hours significantly.

- Data collection and access are often delayed by permission issues and instrumentation gaps.

- Keyword and SERP analysis depends on API limits and how many queries you run.

- On-page opportunity work is fast when templates are editable; slower when each page requires manual edits.

- Technical audits vary with platform complexity—headless or custom CMSes add hours.

- Execution and monitoring pace depends on whether changes are deployable via CI/CD or need tickets and developer sprints.

Breakdown of time estimates and difficulty per major step

| Step | Estimated Time | Difficulty | Who should do it |
|---|---|---|---|
| Data collection & access | 8–24 hours | Medium (permissions + instrumentation) | Analytics engineer / SEO analyst |
| Keyword & SERP analysis | 12–36 hours | Medium (tooling + API limits) | SEO strategist / keyword researcher |
| On-page opportunity analysis | 16–40 hours | Medium-high (content mapping + templates) | Content strategist / SEO analyst |
| Technical SEO audit | 20–60 hours | High (complex sites, JavaScript rendering) | Technical SEO / DevOps |
| Execution & monitoring | 2–8 weeks (iterative) | Medium (dependent on deployment process) | Dev team + SEO analyst |

Key insight: The table shows where bottlenecks typically appear — technical audits and execution are the most time-variable. Investing in automation and better instrumentation moves hours from manual analysis into repeatable pipelines.

For teams wanting to shorten the calendar, automating the data pipeline and using templated content updates reduces per-cycle time dramatically (this is where an AI content automation platform becomes cost-effective). Focus first on clearing access and analytics gaps; everything else moves faster once reliable data is available.

Step-by-Step Implementation: Collect & Clean Data

Start by treating data collection and cleaning like building a reliable foundation — the rest of the content work sits on top of this. Collect exact exports, normalize fields, run quality checks, and produce a single master table you can trust for analysis and automation.

Data sources: Verified access to Google Search Console (GSC), a GA4 property, and a site crawl tool export (CSV).

Tools & materials: Spreadsheet or database (BigQuery/Postgres), scripting environment (Python or R), and a crawl tool CSV (Screaming Frog, Sitebulb).

Time estimate: 2–6 hours for small sites; 1–2 days for large sites with iterative validation.

1. Export exact datasets and date ranges
   - Export GSC: Performance → Search results with `page`, `query`, `clicks`, `impressions`, `ctr`, `position` for the target date range (recommended: last 90 days).
   - Export GA4: Acquisition → Traffic acquisition with `page_path`, `sessions`, `engagement_time`, `conversions` for the same date range.
   - Export crawl CSV: a full crawl with `status_code`, `canonical`, `meta_robots`, `page_url`.
2. Normalize and map columns
   - Standardize URLs: remove trailing slashes, force lowercase, strip UTM/query strings (keep important query params if needed).
   - Map `page` (GSC) and `page_path` (GA4) to a canonical `page_url` using a single normalization function in code, e.g. `normalize_url(url)`.
   - Convert date fields to ISO (`YYYY-MM-DD`) and numeric metrics to integers/floats.
3. Quality checks and validation
   - Row counts: confirm GSC rows ≈ expected unique page-query pairs; flag sudden drops.
   - Value ranges: `clicks >= 0`, `impressions >= clicks`, `ctr <= 1`.
   - Cross-source joins: sample 100 URLs and validate that GSC clicks roughly align with GA4 session trends (not exact matches).
4. Build the master dataset and rules
   - Join on `page_url` with preference order: canonical redirect resolution → crawl data → GSC → GA4.
   - Create flags: `is_indexable` (from `status_code` and `meta_robots`), `has_content` (word count > 200), `high_traffic` (top 10% by impressions).
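The normalization and value-range rules above can be sketched with Python's standard library. This is a minimal sketch under stated assumptions: `KEEP_PARAMS` is a hypothetical allow-list of content-changing parameters, `https://example.com` is a placeholder origin used to resolve GA4-style paths, and `normalize_url`/`validate_row` are illustrative helpers rather than part of any SEO tool.

```python
from urllib.parse import parse_qsl, urlencode, urljoin, urlsplit, urlunsplit

# Hypothetical allow-list: query parameters that change page content and
# should survive normalization. Everything else (utm_*, session IDs) is dropped.
KEEP_PARAMS = {"page"}


def normalize_url(url: str, base: str = "https://example.com") -> str:
    """Lowercase, drop non-allow-listed params and fragments, remove trailing slash.

    `base` is a placeholder origin that resolves GA4 paths such as "/blog/post/".
    """
    parts = urlsplit(urljoin(base, url.strip()))
    path = parts.path.lower().rstrip("/") or "/"
    # Keep allow-listed params only, sorted so equivalent URLs compare equal.
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k.lower() in KEEP_PARAMS)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), ""))


def validate_row(row: dict) -> list:
    """Return rule violations for one GSC row; an empty list means the row is clean."""
    problems = []
    if row["clicks"] < 0:
        problems.append("clicks < 0")
    if row["impressions"] < row["clicks"]:
        problems.append("impressions < clicks")
    if not 0 <= row["ctr"] <= 1:
        problems.append("ctr outside [0, 1]")
    return problems


print(normalize_url("https://Example.com/Blog/Post/?utm_source=news&page=2"))
# https://example.com/blog/post?page=2
```

Running every source through the same function before joining is what makes `page` (GSC) and `page_path` (GA4) land on identical keys.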

Sample schema for the master dataset after cleaning (columns, data types, source)

| Column Name | Data Type | Source | Transformation Rule |
|---|---|---|---|
| page_url | string | GSC / GA4 / crawl CSV | Normalize: lowercase, remove UTM, remove trailing slash |
| query | string | GSC | Trim, dedupe common stopwords, map synonyms if needed |
| clicks | integer | GSC | Cast to int, replace negative/null with 0 |
| impressions | integer | GSC | Cast to int, aggregate duplicates by page_url+query |
| crawl_status_code | integer | crawl CSV | Map to canonical set (200, 301, 404, 410), infer is_indexable |
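The transformation rules in this schema can be applied with plain dictionaries. A minimal sketch, assuming GSC and crawl rows arrive as dicts (for example from `csv.DictReader`) whose `page_url` values are already normalized; the helper names are hypothetical, and the indexability check only looks for a `200` status and the absence of `noindex`.

```python
from collections import defaultdict


def to_int(value) -> int:
    """Cast to int; null, unparseable, or negative values become 0."""
    try:
        return max(int(float(value)), 0)
    except (TypeError, ValueError, OverflowError):
        return 0


def is_indexable(status_code: int, meta_robots: str) -> bool:
    """Treat a URL as indexable only if it returned 200 and has no noindex directive."""
    return status_code == 200 and "noindex" not in (meta_robots or "").lower()


def build_master(gsc_rows: list, crawl_rows: list) -> list:
    """Aggregate GSC rows by page_url+query and attach crawl status flags."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in gsc_rows:
        key = (row["page_url"], row["query"].strip())  # trim query whitespace
        totals[key]["clicks"] += to_int(row.get("clicks"))
        totals[key]["impressions"] += to_int(row.get("impressions"))

    crawl = {r["page_url"]: r for r in crawl_rows}
    master = []
    for (page_url, query), metrics in totals.items():
        crawl_row = crawl.get(page_url, {})
        status = to_int(crawl_row.get("status_code"))  # 0 = URL not found in crawl
        master.append({
            "page_url": page_url,
            "query": query,
            "clicks": metrics["clicks"],
            "impressions": metrics["impressions"],
            "crawl_status_code": status,
            "is_indexable": is_indexable(status, crawl_row.get("meta_robots", "")),
        })
    return master
```

Aggregating before joining matters because several raw URL variants often collapse into one normalized `page_url`, and their metrics should be summed rather than duplicated.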

Key insight: This schema keeps strings and metrics separated, prioritizes canonical URLs from the crawl, and ensures numeric hygiene so downstream models and dashboards aren't poisoned by bad types or inconsistent URL formats.

For automation and scaling, pipe this workflow into a reproducible script or an ETL job. Tools like Scaleblogger.com can help automate content pipelines once the master dataset is reliable. Getting the collection and cleaning right saves hours later and makes analysis actionable.

Step-by-Step Implementation: Analyze & Prioritize Opportunities

Start by mapping intent and SERP features across your content — this quickly separates low-hanging wins from long-term plays. Then apply a repeatable competitor-gap methodology and a numeric opportunity score so decisions aren’t opinions but measurable trade-offs.

- Catalog intent segmentation and SERP feature mapping

- Perform competitor gap analysis

- Calculate opportunity scores with sample formulas

- Rank and bucket pages into priority lanes

- Create execution plan with estimated effort and metrics

Intent segmentation: Break queries into informational, commercial, transactional, and navigational buckets so optimizations match user expectations.

SERP feature mapping: Record whether a query returns featured snippets, People Also Ask, video, shopping, or local packs — these features change the tactics and expected traffic uplift.
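A rule-based first pass at intent segmentation can be sketched as modifier matching. This is a heuristic, not how search engines classify intent; the modifier lists and `BRAND_TERMS` are hypothetical placeholders to tune per site, and anything landing in the default bucket is worth checking against the live SERP.

```python
import re

# Hypothetical modifier lists; tune per niche. Checked in order, first match wins.
INTENT_RULES = [
    ("transactional", {"buy", "price", "pricing", "discount", "coupon", "order", "cheap"}),
    ("commercial", {"best", "vs", "review", "reviews", "compare", "comparison", "alternatives"}),
    ("informational", {"how", "what", "why", "guide", "tutorial", "tips", "examples"}),
]
BRAND_TERMS = {"acme"}  # hypothetical: your own brand and product names


def classify_intent(query: str) -> str:
    """Heuristic intent bucket for a query based on modifier words."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    if tokens & BRAND_TERMS:
        return "navigational"
    for intent, modifiers in INTENT_RULES:
        if tokens & modifiers:
            return intent
    return "informational"  # default when no modifier matches; review these manually
```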

Competitor gap methodology

Start with cleaned exports and fresh SERP snapshots, then:

- Baseline content audit: Identify pages with traffic but weak rankings (positions 6–20).

- Competitor content pull: Save top-10 competitor URLs and extract word count, headings, schema presence, and backlink counts.

- Gap signals: Flag missing formats (video, tables), missing intent matches, and absent schema as prime upgrade opportunities.

- Action mapping: Pair each gap with the simplest fix (add FAQ schema, restructure headings, or create a comparison table).
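The gap-signal step can be expressed as a comparison between your page and the saved competitor set. A minimal sketch, assuming you have already extracted features per URL into dicts; the feature names, the 50% adoption threshold, and the 70%-of-median word-count rule are illustrative assumptions.

```python
from statistics import median


def gap_signals(page: dict, competitors: list, share: float = 0.5) -> list:
    """Flag features that most top-10 competitors have and the page lacks.

    Boolean features (e.g. has_video, has_table, has_faq_schema: hypothetical
    names) count as a gap when at least `share` of competitors have them.
    """
    if not competitors:
        return []
    gaps = []
    features = sorted({k for c in competitors for k, v in c.items() if isinstance(v, bool)})
    for feature in features:
        adoption = sum(bool(c.get(feature)) for c in competitors) / len(competitors)
        if adoption >= share and not page.get(feature, False):
            gaps.append(feature)
    # Depth check: 70% of the competitor median is an arbitrary placeholder threshold.
    counts = [c["word_count"] for c in competitors if "word_count" in c]
    if counts and page.get("word_count", 0) < 0.7 * median(counts):
        gaps.append("word_count_below_competitor_median")
    return gaps
```

Each returned flag can then be paired with the simplest fix from the action-mapping step (for example, `has_faq_schema` → add FAQ schema).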

Opportunity scoring and sample formulas

Use a simple, transparent formula that weighs impact and effort. Example:

- Opportunity Score = (Traffic Potential × Click-Through Modifier × Conversion Value) / Effort

Implement with concrete proxies:

- Traffic Potential = Search volume × (1 − current CTR)
- Click-Through Modifier = 1.0 for organic, 1.3 if the page can gain a featured snippet
- Conversion Value = estimated revenue per conversion (or lead score)
- Effort = hours to update + production time

Example numeric substitution: Opportunity Score = (2,000 × 0.7 × 0.5) / 10 = 70
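The formula and the worked substitution translate directly into code. A minimal sketch; the function names are illustrative, and the inputs are the proxies defined above.

```python
def traffic_potential(search_volume: float, current_ctr: float) -> float:
    """Traffic Potential = search volume × (1 − current CTR)."""
    return search_volume * (1 - current_ctr)


def opportunity_score(potential: float, ctr_modifier: float,
                      conversion_value: float, effort_hours: float) -> float:
    """(Traffic Potential × Click-Through Modifier × Conversion Value) / Effort."""
    if effort_hours <= 0:
        raise ValueError("effort_hours must be positive")
    return potential * ctr_modifier * conversion_value / effort_hours


# Worked example from the text: (2,000 × 0.7 × 0.5) / 10
print(opportunity_score(2000, 0.7, 0.5, 10))  # 70.0
```

Keeping the formula in one function is what makes the "score consistently" rule enforceable: every page goes through the same arithmetic.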

Practical steps to keep this operational

- Automate exports: Schedule weekly keyword and rank pulls.

- Score consistently: Use the same proxies across pages to compare apples-to-apples.

- Triage quickly: Move anything scoring above your historical mean into an execution sprint.
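The triage rule can be sketched as a filter against the historical mean plus a sort. A minimal sketch; ordering the selected pages by score per effort hour is an assumption added here, not a rule from this guide, and the sample values are hypothetical.

```python
from statistics import mean


def triage(pages: list, historical_scores: list) -> list:
    """Keep pages scoring above the historical mean, best score-per-hour first."""
    threshold = mean(historical_scores)
    selected = [p for p in pages if p["score"] > threshold]
    # Assumption: within the sprint, favor the most score per hour of effort.
    return sorted(selected, key=lambda p: p["score"] / p["effort_hours"], reverse=True)


pages = [  # hypothetical scored pages
    {"name": "converting-post", "score": 120, "effort_hours": 8},
    {"name": "thin-page", "score": 40, "effort_hours": 6},
    {"name": "rich-results-page", "score": 150, "effort_hours": 14},
]
print([p["name"] for p in triage(pages, historical_scores=[60, 80, 100])])
```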

Prioritization matrix comparing Opportunity Score, Effort, and Expected Impact

| Page / Query | Opportunity Score | Effort (hrs) | Priority |
|---|---|---|---|
| Top converting blog post | 120 | 8 | High |
| High-impression non-ranking page | 95 | 12 | High |
| Thin pages with crawl issues | 40 | 6 | Medium |
| Pages with rich results potential | 150 | 14 | High |
| High-value product pages | 110 | 20 | Medium |

Key insight: The matrix groups pages by realistic effort and upside so teams can assign sprints that move KPIs quickly. Pages with rich result potential and already-converting posts frequently offer the fastest lift when paired with schema and intent-aligned rewrites.

Where helpful, surface these scores into a shared dashboard or feed them into an automated pipeline like Scaleblogger.com to turn prioritized opportunities into scheduled work and measurable wins. Taking a data-driven, repeatable approach keeps the team focused on impact rather than busywork.

Step-by-Step Implementation: Execute Changes & Measure

Start by making targeted, reversible edits in the CMS and codebase, then run a tight QA loop before publishing. Changes should be small, tracked, and measurable so that any uplift (or regression) ties back to a specific action. Below are the practical steps, necessary edits, testing flows, and monitoring cadence for Steps 11–15.

Access: Admin access to CMS, repo, staging environment, and analytics account.

Tools & materials

Version control: Git with feature branches.

Staging environment: Identical to production.

Monitoring: Analytics (GA4), Search Console, server logs, and an uptime monitor.

Editor/IDE: For code edits (VS Code or equivalent).

Deployment pipeline: CI/CD configured for atomic deploys.

- Step 11: Exact CMS and code edits

Update the meta title in WordPress via a theme file or SEO plugin; example `functions.php` snippet:

```php
// get_field() is provided by the Advanced Custom Fields (ACF) plugin.
add_filter('pre_get_document_title', function ($title) {
    if (is_singular('post')) return get_field('seo_title') ?: $title;
    return $title;
});
```

For head tags in Next.js, use:

```jsx
import Head from 'next/head';
// Pages Router only; the App Router uses the Metadata API instead.
```

Add JSON-LD for articles (insert it in the `<head>`).

- Step 12: 301 redirects and canonical fixes

For Apache `.htaccess`:

```apache
Redirect 301 /old-url/ https://example.com/new-url/
```

For Nginx:

```nginx
rewrite ^/old-url/?$ https://example.com/new-url/ permanent;
```

- Step 13: Testing and QA flows before publishing

  - Create a QA checklist: visual check, structured data validator, mobile-friendly test, Lighthouse performance, link crawl.
  - Run structured data through the Rich Results Test and validate `canonical` and `hreflang` where applicable.
  - Smoke deploy to staging, verify critical pages, then merge to the main branch.

- Step 14: Monitoring metrics and cadence

  - Traffic baseline: Record pre-deploy 90-day average sessions and impressions.
  - Immediate checks (0–48 hrs): Indexing errors in Search Console, 4xx/5xx server errors, crawl budget spikes.
  - Short term (1–4 weeks): Organic impressions, clicks, and ranking movement.
  - Medium term (4–12 weeks): Engagement metrics (CTR, time on page), conversion lifts, indexation changes.

- Step 15: Iteration and rollback plan

- Tag the release and keep a rollback runbook. If regressions appear, revert the feature branch and run a post-mortem. Use A/B testing or staged rollouts for high-risk changes.
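The Step 14 baseline can feed a simple before/after comparison that also serves as a rollback trigger. A minimal sketch, assuming daily metric values exported from GA4 or Search Console as lists; the -10% regression threshold is a hypothetical default, and the comparison does not control for seasonality or algorithm updates, so treat a flag as a prompt to investigate rather than proof of impact.

```python
from statistics import mean


def percent_change(baseline_daily: list, post_daily: list) -> float:
    """Percent change of the post-deploy daily average vs. the pre-deploy baseline."""
    base = mean(baseline_daily)
    if base == 0:
        raise ValueError("baseline average is zero; percent change is undefined")
    return (mean(post_daily) - base) / base * 100


def flag_regression(baseline_daily: list, post_daily: list,
                    threshold_pct: float = -10.0) -> bool:
    """True when the metric fell by at least |threshold_pct| percent (hypothetical default)."""
    return percent_change(baseline_daily, post_daily) <= threshold_pct
```

Running it per page group and per metric (sessions, impressions, clicks) keeps one strong page from masking a regression elsewhere.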

Implementation checklist mapping change types to verification steps and expected lag time for SEO impact

| Change Type | Implementation Action | Verification Check | Expected Impact Lag |
|---|---|---|---|
| Meta/title optimization | Update CMS fields, push to templates | Validate title tag, Search Console inspect | 2–6 weeks |
| Structured data addition | Insert JSON-LD in head or CMS block | Rich Results Test, Search Console reports | 1–8 weeks |
| 301 redirects / canonical fixes | Configure .htaccess/Nginx or CMS redirects | Link audit, crawl logs, no 404s | 1–12 weeks |
| Server/hosting performance fixes | Improve caching, upgrade CDN, optimize images | Lighthouse score, Time-to-First-Byte | 1–8 weeks |
| Content rewrites and expansions | Publish updated pages, use content blocks | On-page audits, content diff checks | 4–12+ weeks |
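For the structured-data row, an Article JSON-LD tag can be generated instead of hand-written. A minimal sketch using schema.org `Article` properties (`headline`, `author`, `datePublished`, `dateModified`); the `article_jsonld` helper and sample values are hypothetical, and the output should still go through the Rich Results Test.

```python
import json


def article_jsonld(headline: str, author: str, published: str, modified: str) -> str:
    """Return a <script> tag containing Article JSON-LD for the page <head>."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date, e.g. "2024-05-01"
        "dateModified": modified,
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


print(article_jsonld("Data-driven SEO", "Jane Doe", "2024-05-01", "2024-05-02"))
```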

Key insight: prioritize fixes that unblock indexing (redirects, canonical) and publish measurable changes (titles, structured data). Performance and content changes usually take longer to influence rankings but are essential for sustained gains.

A controlled rollout, thorough QA, and a disciplined monitoring cadence turn guesses into reliable optimization paths. Treat each change as an experiment: deploy, monitor, learn, and iterate until performance goals are met. For teams looking to automate parts of this pipeline—editing templates, scheduling deploys, and tracking impact—Scaleblogger.com can plug into that workflow and reduce manual overhead.

These steps make changes safe, measurable, and repeatable so the team can prove which edits actually move the needle.

Troubleshooting Common Issues

Start by treating each SEO failure like a bug in a pipeline: isolate where data or signals stop, verify with a repeatable test, then apply a minimum-change fix before broader rollouts. That approach reduces flip-flopping and gives clear escalation triggers when a quick fix doesn't hold.

Common diagnostics and quick workflow

- Reproduce the problem locally or in a staging view.

- Capture a timestamped checklist: crawl, index, analytics, page-level signals.

- Change one variable at a time and verify results within 24–72 hours for obvious issues; allow longer for ranking signals to stabilize.

Common failures and targeted fixes

Missing GSC Pages: Pages not showing in Google Search Console can mean crawl budget limits or indexing blocks. 1. Check the Page indexing report (formerly Coverage) and request indexing for representative URLs. 2. Confirm noindex meta tags and robots.txt rules. 3. Run a live URL inspection to confirm Googlebot can fetch the page. Escalation trigger: No change after 7 days and live inspection shows blocked crawl.

Data sampling in GA4: Sampled Explorations skew performance insights (standard reports are not sampled). 1. Validate data streams and measurement IDs. 2. Use BigQuery export for unsampled queries. 3. Compare GA4 with server-side logs for discrepancies. Escalation trigger: Business decisions depend on the sampled metric and the BigQuery export is still inconsistent.

Duplicate content due to parameters: URL parameters create canonical confusion. 1. Audit query-string variants and check rel=canonical. 2. Implement canonicalization on-site (Search Console's URL Parameters tool was retired in 2022). 3. Consolidate internal linking to preferred URLs. Escalation trigger: Crawled pages exceed site limits and canonical signals conflict.
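To audit query-string variants, group crawled URLs by their parameter-free form; any group with more than one member is a candidate for canonical consolidation. A minimal sketch using only the standard library; the input would come from a crawl export, and the sample URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def parameter_variants(urls: list) -> dict:
    """Map each parameter-free URL to the distinct variants seen in the crawl."""
    groups = defaultdict(set)
    for url in urls:
        p = urlsplit(url)
        base = urlunsplit((p.scheme, p.netloc, p.path, "", ""))  # drop query and fragment
        groups[base].add(url)
    # Keep only bases reached through more than one distinct URL.
    return {base: sorted(v) for base, v in groups.items() if len(v) > 1}


crawl = [  # hypothetical crawl export
    "https://example.com/shoes",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?sort=price",
    "https://example.com/hats",
]
print(parameter_variants(crawl))
```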

Unexpected ranking drops: Algorithmic or technical causes both possible. 1. Check recent site changes, manual actions, and competitors’ moves. 2. Use rank-history and SERP-feature shifts to correlate timing. 3. Revert recent template or content changes as an A/B diagnosis. Escalation trigger: Drop persists >30 days without technical issues identified.

Blocked by robots.txt: Accidental blocking is common during deployments. 1. Inspect robots.txt and check the robots.txt report in Search Console (the legacy robots.txt Tester has been retired). 2. Remove or adjust disallow rules and request re-crawl. 3. Validate that sitemap paths are reachable. Escalation trigger: Staging rules propagated to production and affect >5% of high-value pages.
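A quick guard against staging rules reaching production is to parse the live robots.txt and test a list of high-value URLs against it. A minimal sketch using Python's `urllib.robotparser`; it parses a string to avoid a network call, and because this parser does simple prefix matching (no `*` or `$` wildcard support, unlike Googlebot), confirm anything surprising in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list, agent: str = "Googlebot") -> list:
    """Return the URLs that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


# Example: a blanket staging rule that leaked into production.
robots = "User-agent: *\nDisallow: /\n"
print(blocked_urls(robots, ["https://example.com/pricing", "https://example.com/blog/"]))
```

Wired into CI, a non-empty result for the high-value list can fail the deploy before the rule ever reaches Googlebot.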

Map issue → likely cause → quick diagnostic → corrective action

| Issue | Likely Cause | Diagnostic Step | Corrective Action |
|---|---|---|---|
| Missing GSC Pages | Crawl/index blocks or budget | Use Live URL inspection in GSC | Remove noindex/update robots.txt, request indexing |
| Data sampling in GA4 | Sampling limits in Explorations | Compare GA4 reports to BigQuery export | Enable BigQuery export, use unsampled queries |
| Duplicate content due to parameters | Query-string proliferation | Crawl sample URLs and check canonical headers | Add rel=canonical, consolidate parameters |
| Unexpected ranking drops | Algorithm updates or site changes | Correlate drop with change logs and SERP shifts | Revert recent changes or draft a recovery plan |
| Blocked by robots.txt | Deployment copied staging rules | Check the robots.txt report in Search Console | Fix rules, update sitemap, request re-crawl |

Key insight: The most efficient fixes are those that identify whether the root cause is technical, data, or content-related. Prioritize reproducible diagnostics and escalate when fixes don’t show measurable improvement within the expected window.

When recurring issues become routine, automate the repeatable checks and integrate them into the content pipeline—tools and workflows that automate monitoring make these problems far less painful and speed up recovery. Scale your content workflow to include automated checks that catch regressions before they affect traffic.

Tips for Success & Pro Tips

Start by treating analytics and experiments like engineering work: instrument, test, observe, iterate. Clean data and tightly scoped tests let small content changes reveal real lift instead of noise, and cross-functional alignment makes those wins repeatable.

Analytics hygiene and validation

Good measurement begins before publishing.

Event taxonomy: Define consistent event names, properties, and required fields for every content type.

Data retention rules: Keep raw event logs long enough to validate experiments and reprocess if tracking changes.

Sampling checks: Compare aggregate metrics against raw logs weekly to catch dropped events or broken tags.

Practical example: if article_read sometimes lacks author_id, queries will undercount author-level performance — add a required author_id field and backfill when possible.
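The `author_id` example generalizes into a check you can run on a sample of exported events. A minimal sketch; `REQUIRED_FIELDS` is a hypothetical taxonomy, and events are assumed to be flat dicts (for example, GA4 BigQuery export rows after unnesting `event_params`).

```python
from collections import Counter

# Hypothetical taxonomy: required parameters per event name.
REQUIRED_FIELDS = {
    "article_read": {"author_id", "content_type"},
    "newsletter_signup": {"form_id"},
}


def missing_field_counts(events: list) -> Counter:
    """Count (event_name, missing_field) pairs across a sample of events."""
    counts = Counter()
    for event in events:
        required = REQUIRED_FIELDS.get(event.get("event_name"), set())
        for field in required:
            if event.get(field) in (None, ""):  # absent or empty both count as missing
                counts[(event["event_name"], field)] += 1
    return counts
```

A non-empty result after a release is a natural trigger for the automated alerts described below.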

- Use consistent UTM conventions: standardize campaign and source values.

- Validate post-implementation: check a random sample of sessions for expected events within 48 hours.

- Automate alerts: set thresholds for sudden drops in pageview or conversion events.

Experimentation and measuring lift

Run experiments with the same rigor as product A/B tests.

- Define the metric hierarchy: primary KPI, guardrail metrics, and secondary outcomes.

- Estimate detectable effect sizes using baseline variance; if your traffic is low, prioritize high-impact changes or longer windows.

- Segment by intent and channel: a headline change may lift organic CTR but not paid traffic.

Example: a template change increased time-on-page by 12% for organic visitors but reduced referral conversions — splitting results by channel revealed the trade-off.
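To estimate a detectable effect size before launching a CTR test, the standard normal approximation for two proportions is a reasonable starting point. A minimal sketch assuming equal traffic per arm, a two-sided test, and independent impressions (which search data only approximates); `alpha=0.05` and `power=0.8` are conventional defaults, not values from this guide.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(baseline_rate: float, n_per_arm: int,
                              alpha: float = 0.05, power: float = 0.8) -> float:
    """Smallest absolute change in a rate (e.g. CTR) detectable with n per arm."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)          # about 0.84 for power = 0.8
    return (z_alpha + z_power) * sqrt(2 * baseline_rate * (1 - baseline_rate) / n_per_arm)
```

With a 3% baseline CTR and 10,000 impressions per arm, this returns roughly 0.0068, about 0.68 percentage points or a 22.5% relative lift, which is why low-traffic pages need bigger changes or longer windows.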

- Pre-register hypotheses: state expected direction and magnitude before testing.

- Use holdouts for seasonality: keep a small, stable control group across experiments.

- Measure cumulative lift: track how individual wins compound in the funnel.

Cross-functional workflows

Make experiments operational, not optional.

Content QA: Editors validate SEO and analytics hooks before publish.

Dev handoff: Use feature branches with automated tests that assert analytics events fire.

Growth syncs: Weekly 30-minute cadence between content, analytics, and engineering to review failures and wins.

When tooling helps, tie it to workflows—automated checks that fail a deploy when critical events are missing save hours of firefighting. For teams looking to scale execution and measurement, consider platforms that automate pipelines and benchmarking like Scaleblogger.com.

Small measurement improvements compound into reliable decisions; invest in hygiene, run thoughtful tests, and keep collaboration tight so insights actually turn into higher-performing content.


Measurement & Reporting Templates

Start by separating what executives need from what the team needs. Executives want concise trend signals and outcomes; the team needs diagnostic detail and action items. Design two linked dashboards: a high-level executive view (weekly/monthly snapshots) and an operational workspace (daily/real-time widgets) that maps directly to PR, product, and engineering owners.

Executive metrics: Short list of outcome-focused KPIs that show direction and risk.

Operational metrics: Detailed diagnostics that explain why a trend moved and where to act.

Dashboard design essentials

Clear ownership: Assign one person per widget.

Single source of truth: Prefer GA4 for session/goal data, GSC for query-level impressions/CTR, crawl exports for technical issues, and your conversion platform for final attribution.

Actionability: Every widget should suggest a next step or responsible owner.

Visibility rules: Executive dashboard emailed to leadership; operational dashboards pushed to Slack or the workflow tool.

- Map widgets to data sources.

- Define refresh cadence and distribution list.

- Build alerts and annotation rules.

Widget and data-source mapping works best when written down and versioned. Below is a practical blueprint to copy into any BI tool or Google Sheet.

Map dashboard widgets to data sources and update cadence

| Widget | Data Source | Purpose | Update Frequency |
|---|---|---|---|
| Top pages by impressions | GA4 & GSC | Surface pages driving visibility and where to prioritize optimization | Daily |
| CTR by query cluster | GSC & crawl exports | Detect content vs meta issues across topic clusters | Weekly |
| Organic conversions | GA4 & conversion platform | Tie organic traffic to revenue or goal completions | Daily |
| Technical issues by severity | crawl exports | Track site health, crawl errors, indexability blockers | Real-time / On crawl |
| A/B experiment results | conversion platform & GA4 | Measure lift and determine rollout decisions | At experiment completion / Weekly interim |

Key insight: This blueprint forces discipline — every widget lists a data source and cadence, which reduces “who owns this” debates. Daily refreshes for conversions and top pages keep the team nimble, while weekly query-cluster and experiment reviews balance signal stability with speed.

Practical reporting cadence and distribution:

- Daily: Operational alerts (technical failures, conversion dips) to owners via Slack.
- Weekly: Team sync with detailed diagnostics and action items.
- Monthly: Executive summary emailed with 3–5 strategic signals and resource asks.
- Quarterly: Deep-dive performance review with experiments and roadmap adjustments.

For a faster setup, plug this blueprint into your BI tool or use an automation partner to wire GA4, GSC, crawl data, and your conversion platform. Tools that automate these connectors save hours and keep dashboards honest — for example, implementing an AI-driven pipeline that standardizes metrics and schedules reports dramatically reduces manual churn; learn more at Scaleblogger.com.

Good measurement keeps the team aligned and prevents firefights over data. When widgets are mapped, cadences set, and owners named, reporting becomes a decision-support system instead of noise.

Appendix: Example Queries, Snippets, and Templates

Below are copy-paste ready assets for generating, testing, and scaling content tasks. Each asset includes input assumptions, expected outputs, and a short note on how to adapt it for different intents.

Tools: Access to an LLM (API or UI), a spreadsheet or CSV for inputs, basic familiarity with curl or a script runner.

Assumptions: Input rows contain topic, keyword_intent, and target_audience. Outputs are a title, 3 subheadings, a 150-word intro, and 5 meta tags.

- SEO content brief generator (prompt template)

- High-conversion meta description and title snippet

- FAQ extractor from an article (regex + prompt combo)

SEO content brief generator:

```text
Create a content brief for the topic "{topic}" with keyword intent "{keyword_intent}" aimed at "{target_audience}". Output JSON with keys: title, headings (array of 3), intro (150 words), meta_tags (array of 5), target_cta.
```

Input example: topic="data-driven SEO", keyword_intent="informational", target_audience="marketing managers"

Expected output: JSON brief ready to feed to writers or a content pipeline.

How to adapt: Change the intro length or add a word_count parameter for long-form vs short-form.

Meta description and title snippet:

Given title: "{title}", write: 1) SEO title (≤60 chars) 2) Meta description (≤155 chars) 3) Social preview (≤120 chars). Return as plain text labelled lines.

Input assumptions: The title exists and contains the primary keyword.

Expected output: A three-line snippet ready for the CMS.

FAQ extractor:

```bash
# simple curl to LLM endpoint (pseudo)
curl -X POST https://api.your-llm -d '{"text":"
```

Output: A list of 5–8 question-answer pairs suitable for schema markup.

Adaptation: Increase max_faqs to 10 for pillar pages.
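Once the extractor returns question-answer pairs, they can be wrapped in schema.org `FAQPage` markup. A minimal sketch; `faq_jsonld` is a hypothetical helper whose `max_faqs` argument mirrors the adaptation note above. Google shows FAQ rich results for only a limited set of sites, so valid markup may not produce a visible SERP feature.

```python
import json


def faq_jsonld(pairs: list, max_faqs: int = 8) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs, capped at max_faqs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs[:max_faqs]
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)
```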

- Content scoring quick-check (pseudo-algorithm)

- Email outreach template (personalize tokens)

Content scoring quick-check:

Score factors: keyword_density, headings_count, readability_grade, internal_links

- If keyword_density is between 0.8% and 1.2%, add +2.
- If headings_count >= 3, add +2.
- If readability_grade <= 10, add +2.
- If internal_links >= 2, add +2.

Input: content text plus counts. Expected output: score 0–8 and quick improvement tips.
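The quick-check rules translate directly into a function. A minimal sketch, assuming the four inputs are computed elsewhere (keyword density as a percentage, readability as a grade level such as Flesch-Kincaid) and that the density range is inclusive; the tip wording is illustrative.

```python
def content_score(keyword_density: float, headings_count: int,
                  readability_grade: float, internal_links: int) -> tuple:
    """Return (score 0-8, tips): +2 per passing rule, one tip per failed rule.

    keyword_density is a percentage, e.g. 1.0 means 1%.
    """
    checks = [
        (0.8 <= keyword_density <= 1.2, "Adjust keyword density toward 0.8-1.2%."),
        (headings_count >= 3, "Add subheadings (aim for at least 3)."),
        (readability_grade <= 10, "Simplify sentences to reach grade 10 or lower."),
        (internal_links >= 2, "Add at least 2 internal links."),
    ]
    score = sum(2 for passed, _ in checks if passed)
    tips = [tip for passed, tip in checks if not passed]
    return score, tips
```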

Email outreach template:

```text
Subject: Quick question about your article on {article_topic}

Hi {name},

Saw your piece on {article_topic}. Loved the section on {specific_point}. I wrote a short guide that complements it: {link}. Would you be open to linking or sharing it?

Best,
{your_name}
```

How to adapt: Swap the outreach intent to "collab" or "guest post" and adjust the CTA.

Tips for scaling: Batch inputs in CSV, map tokens to columns, and feed the brief generator. For automating publish and scheduling, consider integrating with an AI content pipeline like Scaleblogger.com to move from brief to live post automatically.
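Batching from CSV is mostly token substitution. A minimal sketch, assuming a CSV whose column names match the template tokens (`topic`, `keyword_intent`, `target_audience`); it only builds prompt strings and leaves the LLM call to whichever client you use.

```python
import csv
import io

BRIEF_TEMPLATE = (
    'Create a content brief for the topic "{topic}" with keyword intent '
    '"{keyword_intent}" aimed at "{target_audience}". Output JSON with keys: '
    "title, headings (array of 3), intro (150 words), meta_tags (array of 5), target_cta."
)


def build_prompts(csv_text: str) -> list:
    """Fill the brief template once per CSV row; column names must match the tokens."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [BRIEF_TEMPLATE.format(**row) for row in reader]


sample = "topic,keyword_intent,target_audience\ndata-driven SEO,informational,marketing managers\n"
for prompt in build_prompts(sample):
    print(prompt)
```

For a file on disk, read it with `open(path, newline="", encoding="utf-8")` first; a missing column raises `KeyError`, which surfaces mapping mistakes before any API calls are made.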

These templates get work out of the way and keep creative energy where it matters—crafting better angles and improving distribution.

Conclusion

This process is about swapping guesswork for signals: start by getting your analytics clean, then use that clean dataset to prioritize pages where small changes move the needle. Teams that ran a focused clean-data sprint, reworked metadata on high-traffic pages, and tracked results saw measurable uplifts within a few weeks — proof that disciplined analysis beats intuition alone. Expect the work to follow a rhythm: clean the data first, prioritize high-impact pages, and measure before and after so decisions stay evidence-led rather than hopeful.

If you’re wondering how long this takes, plan for an initial 4–8 week cycle to collect baseline metrics and test changes; if you’re asking which tools to use, combine your analytics platform with modern SEO tools for anomaly detection and keyword gaps; and if you’re unclear what counts as success, track organic clicks, rankings for prioritized queries, and the conversion actions that matter to your business. For teams looking to automate these steps and reduce manual overhead, platforms that tie analytics to content ops can save weeks of work. To streamline execution and move from analysis to automation, consider using Scaleblogger to automate your SEO workflow; it’s one practical next step toward consistent, data-driven SEO.