Most teams recognize the problem before the meeting starts: campaigns drifted from the original voice, personalization felt tacked on, and scaling meant losing nuance. AI storytelling isn’t a magic pen that writes brand copy for you; it’s a way to map audience signals to narrative patterns, surface authentic moments, and keep tone consistent across formats without multiplying review cycles. That shift turns repetitive chores into creative fuel and makes iteration measurable.

When used thoughtfully, AI-guided brand storytelling in content marketing creates repeatable processes for emotional resonance instead of templated messages. Models can suggest narrative arcs, test headlines, and surface micro-stories tied to real customer behavior, freeing human writers to refine meaning rather than churn drafts. Explore Scaleblogger’s AI content strategy services.

Prerequisites & What You’ll Need

Start here: this work demands a blend of hands-on writing instincts, basic technical chops, and the right assets so the automation actually scales instead of creating noise. Expect to spend time up front mapping roles and grabbing a few essential tools; that investment makes the content pipeline predictable and repeatable.

Skills you must have

Content craft: Familiarity with long-form writing, persuasive headlines, and brand storytelling so prompts produce usable drafts.

SEO fundamentals: Comfort with keyword intent, internal linking, and on-page signals — enough to validate AI output and guide optimization.

Basic technical literacy: Ability to use a CMS, connect APIs, and edit HTML for structured data or canonical tags.

Data interpretation: Read simple analytics reports and content scoring output to decide what to iterate or pause.

Recommended team roles

Content owner: Oversees strategy, approves topic clusters, and maintains brand voice.

Writer/editor: Refines AI drafts, enforces style, and ensures factual accuracy.

SEO/data analyst: Maps search intent, monitors performance, and tunes content scoring.

Developer/automation lead: Implements publishing workflows, connects the CMS to automation, and manages scheduling.

Essential assets to have on hand

- Editorial style guide: Defines voice, grammar rules, and preferred examples.

- Content brief template: Standardizes inputs for AI prompts and human writers.

- Topic cluster map: Lists pillar pages and supporting posts, aligned to search intent.

- Access to analytics: Google Analytics and Search Console, or equivalent reporting.

- Reusable prompt library: High-quality prompts for outlines, intros, and meta descriptions.

- Confirm credentials and access for everyone who will publish.

- Seed the system with 10–20 proven pieces of content to train expectations.

- Set measurable KPIs for traffic, time-to-publish, and engagement before full rollout.

Automation and AI support the process, but they don’t replace editorial judgment. With the right skills, clearly defined roles, and those core assets in place, the content engine becomes a repeatable growth tool rather than an experiment. Consider exploring Scaleblogger.com for frameworks that connect content scoring and automated pipelines if you want a ready-made starting point.

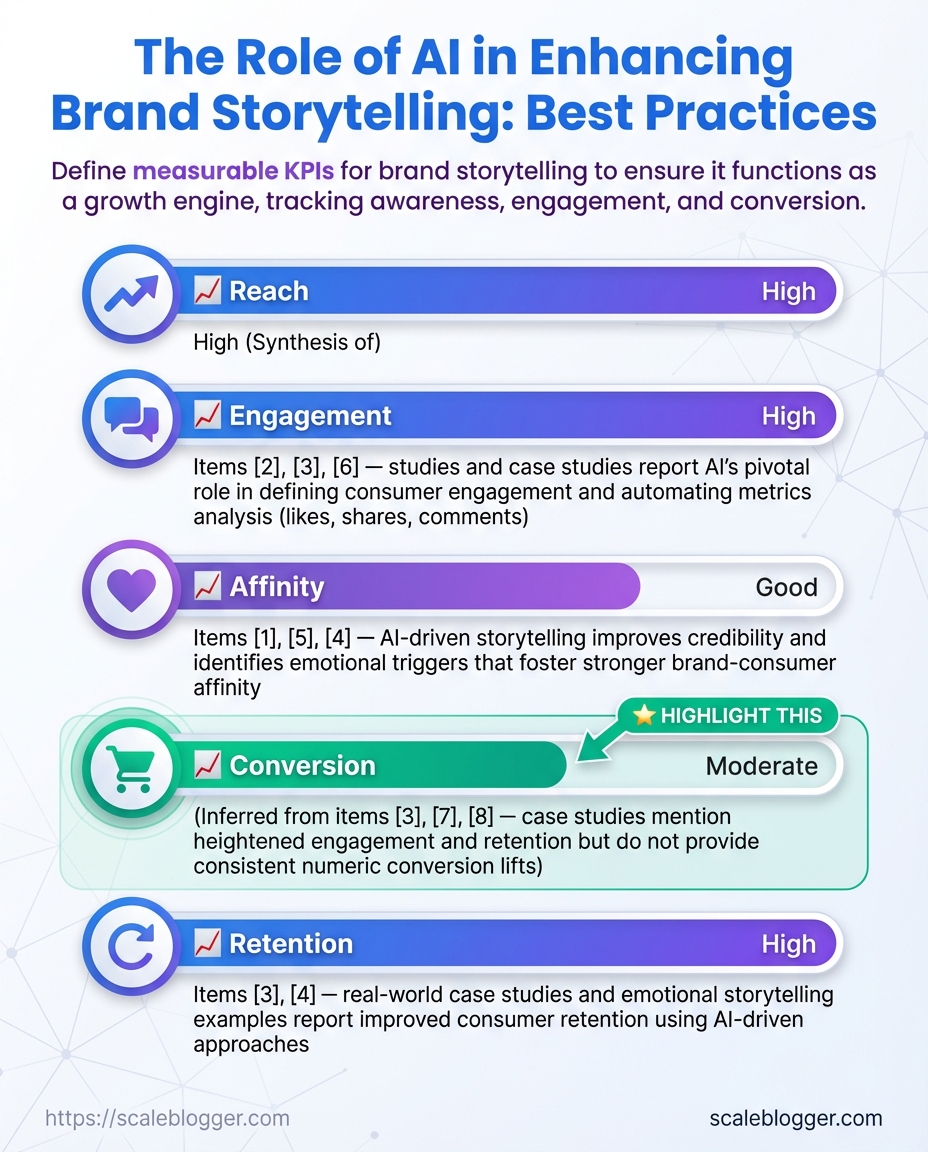

Define Your Brand Story Objectives

Start by naming exactly what the story should accomplish. A brand story without measurable goals is a pleasant read, not a growth engine. Focus on outcomes you can track back to storytelling—awareness, trust, action—and tie each to an audience segment so the story speaks to a real person, not an abstract market.

Set measurable KPIs tied to storytelling

- Reach: Unique pageviews or impressions for story-led content.

- Engagement: Average time on page, scroll depth, or social shares per story.

- Affinity: Increase in brand sentiment or newsletter signups from story traffic.

- Conversion: Lead form completions, trial starts, or product purchases attributed to story pieces.

- Retention: Return visitor rate and repeat-engagement within 30–90 days.

Create a one-line narrative objective using this template: For [audience segment], our story will [primary emotional & rational benefit] so they [desired action].

- For early-stage founders, our story will normalize bootstrapped growth tactics so they subscribe to our newsletter.

- For marketing leads at mid-market companies, our story will demonstrate repeatable content systems so they request a demo.

- For content teams evaluating automation, our story will reduce uncertainty about AI workflows so they trial our tools.

Audience linkage

- Founders: Prefer practical case studies and quick wins.

- Marketing leaders: Want benchmarks, frameworks, and ROI logic.

- Content operators: Look for how-to detail and automation shortcuts.

- Decide primary audience segment for this story.

- Choose 1–2 KPIs you’ll optimize for first (pick one engagement metric + one conversion metric).

- Draft the one-line narrative objective and get stakeholder buy-in.

- Define the attribution method (UTM + landing page + follow-up form) to measure outcomes.

Practical example: choose engagement (avg. time on page) and conversion (newsletter signups). Write the one-line objective, publish a multi-format story, and measure results at 30 and 90 days.

If automation or content pipelines are part of the plan, consider tools that track story performance end-to-end; Scaleblogger.com can help automate the pipeline and benchmark outcomes. Clear objectives turn good writing into repeatable business results—set them upfront and the rest of the process gets focused fast.

Audit Existing Content & Identify Story Threads

Start by treating the audit like archaeology: dig up everything, label the layers, then look for the narratives that keep repeating. A focused inventory plus thematic tagging makes it possible to spot which topics already resonate, where gaps exist, and which threads are worth amplifying with AI-generated sequences.

Access to analytics: GA4/Search Console and CMS analytics login.

Spreadsheet or content tool: A Google Sheet or Airtable ready to capture fields.

Stakeholder alignment: A short list of primary personas and business KPIs.

- Export essential metrics into a spreadsheet

- Pull URLs, titles, organic traffic (30d), avg. time on page, backlinks count, and conversion KPI from GA4/Search Console/CMS.

- Add owner and publish date from your CMS so responsibility and recency are clear.

- Identify repeating story threads

- Scan tags and titles for recurring themes (3–5 threads max).

- Prioritize threads that combine decent traction (traffic or time-on-page) with strategic fit to personas.

Tag content by theme, persona, and format so future automation can group and sequence pieces. Tagging example: Theme: AI storytelling | Persona: Content Director | Format: How-to.

Practical examples:

- Thread A (AI storytelling principles): several how-tos and case studies, medium traffic, high time-on-page.

- Thread B (Automation workflows): a couple of process articles with good conversion but thin depth.

- Thread C (SEO for writers): many short posts; opportunity to cluster into a cornerstone guide.

Sample content-inventory template to copy into your spreadsheet:

| URL | Title | Primary Persona | Theme/Story Thread | Traffic (30d) | Avg. Time on Page | Primary KPI |
|---|---|---|---|---|---|---|
| example.com/post-1 | How AI Shapes Brand Storytelling | Content Director | AI storytelling | 1,420 | 3:12 | Email signups (120) |
| example.com/post-2 | Automating Your Blog Pipeline | Head of Ops | Automation workflows | 980 | 2:45 | Demo requests (18) |
| example.com/post-3 | SEO Habits for Busy Writers | SEO Manager | SEO for writers | 2,300 | 2:05 | Organic conversions (65) |
| example.com/post-4 | Case Study: AI Storytelling in SaaS | Product Marketer | AI storytelling | 640 | 4:00 | Trial starts (22) |
| example.com/post-5 | Content Scoring Framework | Content Strategist | Automation workflows | 410 | 3:30 | Strategy downloads (40) |

This template captures the fields needed to prioritize and group content effectively. Use the Theme/Story Thread column to collapse multiple posts into 3–5 threads that an AI pipeline can expand into series or cornerstone pieces.
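As a rough illustration of collapsing posts into threads, the sketch below groups inventory rows by the Theme/Story Thread column and ranks threads by total 30-day traffic. The rows copy the sample table; the field names (`thread`, `traffic_30d`) and the choice to rank by traffic alone are assumptions made for the example, not a prescribed scoring method.

```python
from collections import defaultdict

# Rows mirror the sample table; in practice they would come from your spreadsheet export.
inventory = [
    {"url": "example.com/post-1", "thread": "AI storytelling", "traffic_30d": 1420},
    {"url": "example.com/post-2", "thread": "Automation workflows", "traffic_30d": 980},
    {"url": "example.com/post-3", "thread": "SEO for writers", "traffic_30d": 2300},
    {"url": "example.com/post-4", "thread": "AI storytelling", "traffic_30d": 640},
    {"url": "example.com/post-5", "thread": "Automation workflows", "traffic_30d": 410},
]


def summarize_threads(rows):
    """Return (thread, stats) pairs sorted by total 30-day traffic, highest first."""
    totals = defaultdict(lambda: {"posts": 0, "traffic_30d": 0})
    for row in rows:
        totals[row["thread"]]["posts"] += 1
        totals[row["thread"]]["traffic_30d"] += row["traffic_30d"]
    return sorted(totals.items(), key=lambda kv: kv[1]["traffic_30d"], reverse=True)


for thread, stats in summarize_threads(inventory):
    print(thread, stats)
```

Traffic is only one signal; a fuller version would likely weigh time on page and the primary KPI as well before deciding which threads to scale.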

Once threads are chosen, consider using content workflow tools to automate tagging and scheduling. Pin the threads that both serve high-value personas and show engagement signals; those are the best candidates to scale with AI-driven outlines and sequenced publishing.

Craft AI Prompts to Generate Story Variants

Start by treating prompts like replicable recipes: state the desired narrative shape, the audience voice, and the constraints up front so outputs are predictable and editable. Good prompts speed iteration and make A/B-ready story variants that a human editor can polish quickly.

Editorial brief: Target audience, tone, length, CTA, and brand dos/don’ts.

Source assets: Original article, quotes, data points, and keyword targets.

Tools & materials

Model access: A large language model with temperature and max tokens control.

Prompt editor: A text editor or prompt-management tool to save templates.

- Draft a base prompt that anchors the brand voice and objective.

- Create format-specific wrappers: short-form social, newsletter, long-form intro.

- Save each template with the expected output length and examples.

Prompt templates (copy-ready)

- Story arc template: “Rewrite this article into a 5-paragraph story that opens with a surprising fact, follows with a human example, explains the problem, presents the solution, and ends with a clear next step. Use [brand voice], keep it under 450 words, and include one quote from the source.”

- Social variant template: “Create three social captions (≤200 characters) for LinkedIn that extract the article’s main insight, include one stat from the source, and end with an action-oriented question.”

- Newsletter blurb template: “Write a 50–75 word newsletter blurb summarizing the article, with one bold sentence for the hook and a short reading-time estimate.”

Model parameter recommendations

- Temperature: `0.2–0.5` for factual, on-brand variants; raise to `0.7` for creative spins.

- Top-p: `0.9` to balance diversity and coherence.

- Max tokens: Set to cover output needs; e.g., `512` for long intros.

- Stop sequences: Provide `\n\n` or custom tokens to prevent run-on outputs.
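One way to keep these settings consistent across runs is to store them as named presets. The sketch below is a plain dictionary, not tied to any provider's API; the key names (`temperature`, `top_p`, `max_tokens`, `stop`) and the `factual`/`creative` labels are illustrative assumptions that would need mapping to whatever your model provider actually calls them.

```python
import copy

# Hypothetical presets; key names are illustrative, not a specific vendor's API.
PRESETS = {
    "factual": {"temperature": 0.3, "top_p": 0.9, "max_tokens": 512, "stop": ["\n\n"]},
    "creative": {"temperature": 0.7, "top_p": 0.9, "max_tokens": 512, "stop": ["\n\n"]},
}


def params_for(mode, **overrides):
    """Return an independent copy of the preset for `mode`, with overrides applied."""
    if mode not in PRESETS:
        raise ValueError(f"unknown mode: {mode!r}")
    params = copy.deepcopy(PRESETS[mode])
    params.update(overrides)
    return params


print(params_for("factual"))
print(params_for("creative", max_tokens=256))
```

Saving the preset name alongside each prompt template makes it easier to reproduce an output later.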

Iteration workflow

- Run the base prompt and review three outputs.

- Score each output against the QA checklist and pick the best two.

- Refine prompt with constructive constraints (e.g., “shorten the hook”, “use active verbs”).

- Re-run with adjusted parameters, compare, and finalize.

QA checklist (each line item for quick scoring)

Accuracy: Facts, quotes, and numbers match the source.

Alignment: Tone and brand rules followed.

Originality: No verbatim copying beyond short quotes.

Clarity: Sentences read easily and flow logically.

Actionability: Contains a clear next step or CTA when required.
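The "score each output and pick the best two" step can be sketched as a small ranking helper. The 0–2 scale per checklist item and the variant names are assumptions for illustration; the checklist itself does not specify a scale.

```python
# Checklist items from the QA list, lowercased for use as dictionary keys.
CRITERIA = ("accuracy", "alignment", "originality", "clarity", "actionability")


def top_variants(scored, keep=2):
    """Rank variants by total checklist score and return the best `keep` names."""
    totals = {name: sum(scores[c] for c in CRITERIA) for name, scores in scored.items()}
    return sorted(totals, key=totals.get, reverse=True)[:keep]


# Illustrative reviewer scores on an assumed 0-2 scale per item.
scores = {
    "variant_a": {"accuracy": 2, "alignment": 1, "originality": 2, "clarity": 2, "actionability": 1},
    "variant_b": {"accuracy": 2, "alignment": 2, "originality": 2, "clarity": 1, "actionability": 2},
    "variant_c": {"accuracy": 1, "alignment": 1, "originality": 2, "clarity": 1, "actionability": 1},
}

print(top_variants(scores))
```

A team might prefer to treat Accuracy as a hard gate rather than one of five summed scores; that would be a small change to the helper.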

Using repeatable prompt templates and a tight iteration loop turns one article into polished, platform-ready story variants quickly—ideal for scaling content with human oversight and automation like the systems showcased at Scaleblogger.com. Keep templates versioned so improvements compound across campaigns.

Human-in-the-Loop Editing & Brand Alignment

Human review turns competent AI drafts into content that actually represents the brand and survives public scrutiny. Start by treating AI output as a first draft: edit for clarity and accuracy, fact-check claims and sources, then route the piece through a defined approval flow that enforces voice, legal, and SEO checks. That three-stage habit — Edit, Fact-Check, Approve — keeps speed without sacrificing trust.

Why this step matters

AI accelerates creation but doesn’t inherit brand judgment. A consistent brand-voice rubric and a short, well-defined approval path prevent tone drift, factual errors, and compliance slips. The process also creates measurable SLAs so marketing, legal, and product know when to expect reviews and signoffs.

Brand-voice rubric: A one-page checklist that codifies tone, vocabulary, sentence length, and taboo phrases.

Fact-check standard: Minimum of one primary source per claim and verification of any quoted statistics.

Approval SLA: Maximum 48 hours for editorial review, 72 hours for legal review on regulated topics.

Step-by-step process

- Edit for readability and brand voice.

- Fact-check claims, links, and statistics.

- Run SEO and accessibility checks.

- Route to subject-matter expert (SME) for technical signoff.

- Final approval by editor or brand manager within SLA.

Practical editing pointers

- Tone: Use the rubric to fix tone quickly; prefer verbs over nominalizations.

- Phrasing: Replace passive constructions and AI-stilted phrasing.

- Naming: Standardize product names, trademarks, and capitalization.

Fact-checking tactics

- Verify original source for every factual claim.

- Confirm dates, figures, and direct quotes against the primary publication.

- Use `Ctrl+F` to find original phrasing on source pages before citing.

Roles, responsibilities, and SLAs

Editor: Edits for voice and SEO; SLA — 24–48 hours.

SME: Confirms technical accuracy; SLA — 48–72 hours.

Legal/Compliance: Reviews regulated language; SLA — 72 hours.

Publisher: Final QC and scheduling; SLA — 24 hours.

Example workflows

Small team: Editor does edit + fact-check; SME on demand; publisher approves.

Enterprise: Parallel reviews—editorial and legal concurrently—then final publisher signoff.

Integrating a tool like Scaleblogger.com can automate routing, track SLAs, and surface content-score signals so editors focus on higher-value judgments rather than status chasing. When the loop is tight and responsibilities clear, output quality rises while cycle time stays fast.

Personalize & Distribute Story Variants at Scale

Start by matching each story variant to the audience slice and channel where it will perform best. Think of this as a routing map: not every angle belongs on every channel. When personalization is done right, engagement rises and wasted impressions fall.

Audience segments: Clear definitions for segments (e.g., new visitors, returning readers, paying customers).

Story variants: A finished set of headlines, intros, and hooks for each persona.

Delivery tools: A CMS or marketing automation platform that supports dynamic content blocks and personalization tokens.

Channel mapping and routing

- Map each variant to one channel and segment at first.

- Use high-intent variants for email (welcome series, cart recovery), conversational variants for chatbots, and visually rich variants for social and paid ads.

- Reserve long-form, research-heavy variants for blog and LinkedIn where time-on-page matters.

- Channel fit: Match format to consumption behavior.

- Segment fit: Prioritize segments by value and likely intent.

- Frequency cap: Avoid blasting the same persona with every variant.

Automation setup and technical patterns

Start with reusable building blocks: {{first_name}}, {{industry}}, {{last_article_read}}. Place them inside dynamic content blocks so a single template can render dozens of personalized variants.

- Personalization tokens: Insert `{{user.city}}` or `{{company_size}}` where it changes the message tone.

- Dynamic content blocks: Swap entire paragraphs, CTAs, or images based on segment rules.

- Fallback rules: Always include a default block when token data is missing.
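To make the token-plus-fallback pattern concrete, here is a minimal sketch of a renderer that substitutes `{{token}}` placeholders and falls back to a default when the data is missing or empty. It is not the syntax of any particular CMS or email platform; the `render` function and the default values are assumptions for illustration.

```python
import re

# Matches {{token}} placeholders, allowing dotted names such as {{user.city}}.
TOKEN = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def render(template, data, defaults):
    """Replace placeholders with data values, using defaults when a value is missing or empty."""
    def substitute(match):
        key = match.group(1)
        value = data.get(key) or defaults.get(key, "")
        return str(value)

    return TOKEN.sub(substitute, template)


template = "Hi {{first_name}}, here is a story for {{industry}} teams."
defaults = {"first_name": "there", "industry": "growing"}

print(render(template, {"first_name": "Ana", "industry": "SaaS"}, defaults))
print(render(template, {"first_name": ""}, defaults))
```

Writing the default copy so it reads naturally on its own ("Hi there") keeps the fallback from looking like a broken merge field.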

Simple A/B validation

- Pick one hypothesis (e.g., “Benefit-driven headline improves click-through”).

- Run the variant against the control for a fixed sample size and time window.

- Compare primary metric (CTR or conversion) and secondary signals (time on page, scroll depth).

- Test only one variable at a time.

- Keep samples large enough to be meaningful.

- Automate winner promotion into the main pipeline.
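For the comparison step, one common approach is a two-proportion z-test on click or conversion counts. The sketch below uses only the standard library and assumes samples large enough for the normal approximation; the counts in the example are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts: control converts 120 of 2,400; variant converts 156 of 2,400.
z, p = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Deciding the sample size and significance threshold before launch, rather than after looking at results, keeps the comparison honest.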

Practical tools to consider include an automation-friendly CMS or an AI content pipeline that can push variants into schedules; Scaleblogger.com is an example of a service that ties AI-driven variants to publish and scheduling workflows. Implementing this map-and-test rhythm turns experimentation into predictable lift — and keeps content working harder without increasing headcount.

Measure Impact & Iterate

Start by treating measurement as part of the creative process: if you can’t attribute outcomes to specific content moves, you can’t improve with confidence. Focus on three linked practices — tracking, attribution, and a repeatable learning loop — so every campaign produces usable knowledge.

Tracking: instrument precisely and consistently

- Tag variants: Use `utm_content`, `campaign`, and `variant` to differentiate headline, hero image, and CTA tests.

- Event coverage: Track page views, scroll depth, time on page, CTA clicks, and conversion events on every variant.

- Consistency: Standardize naming conventions in a tagging playbook so metrics are comparable across months.

Example: tag an email variant as utm_campaign=spring_launch&utm_medium=email&utm_content=subjectA_variant1 so opens, clicks, and on-site behavior tie back cleanly.
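Building tagged links by hand invites typos, so a small helper can enforce the naming convention. This is a minimal sketch using Python's standard `urllib.parse`; the `add_utm` function name and the example URL are assumptions for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url, campaign, medium, content, source=None):
    """Return `url` with UTM parameters added, keeping any existing query parameters."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_campaign": campaign, "utm_medium": medium, "utm_content": content})
    if source:
        query["utm_source"] = source
    return urlunsplit(parts._replace(query=urlencode(query)))


print(add_utm("https://example.com/story", "spring_launch", "email", "subjectA_variant1"))
```

Generating every campaign link through one function is a simple way to keep the tagging playbook consistent across months.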

Attribution: isolate what moved the needle

- Use a primary attribution model (first-click, last-click, or multi-touch) and document it.

- Create control groups by withholding the creative change from a randomly selected audience segment.

- Segment results into cohorts by acquisition source and behavior window (0–7 days, 8–30 days).

Control group example: send the new AI-storytelling lead magnet to 70% of the list and hold 30% as a control. Compare conversion lift while normalizing for list segment.
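A randomized 70/30 split like the one described can be sketched in a few lines. The fixed seed is an assumption added so the assignment is reproducible for later audits; the addresses are placeholders.

```python
import random


def split_holdout(recipients, treatment_share=0.7, seed=42):
    """Shuffle recipients reproducibly and split them into (treatment, control) lists."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    cutoff = round(len(shuffled) * treatment_share)
    return shuffled[:cutoff], shuffled[cutoff:]


emails = [f"user{i}@example.com" for i in range(1000)]
treatment, control = split_holdout(emails)
print(len(treatment), len(control))
```

To normalize for list segment, one option is to run the same split separately within each segment so both groups have the same mix.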

Learning Loop: institutionalize insights

Insights Log: Record hypothesis, test setup, outcomes, and recommended action for each experiment.

Decision cadence: Run a biweekly review where product, content, and analytics teams decide whether to kill, scale, or iterate on a variant.

Playbook updates: When a test result is repeatable across two cohorts, update templates and the tagging playbook.

Practical checklist for a single experiment:

- Define a measurable hypothesis and primary KPI.

- Implement tags and events in analytics and QA tracking.

- Launch with a randomized control group.

- Monitor results in cohort windows and validate statistical significance.

- Log findings in the insights repository and update content templates.

Two cautions apply to every setup: avoid changing more than one major variable per experiment, and watch for seasonal confounders that can mask real lift. Tools that automate reporting and cohort comparisons speed this up; when automation is used, ensure the tagging playbook is machine-readable.

Measuring like this turns guesses into reliable inputs for content planning. Keep the loop tight: the faster you test and record what worked, the quicker you build a content machine that actually improves traffic and conversions.

Troubleshooting Common Issues

When a model starts behaving off-brand, inventing facts, or producing weak results, the fastest path back to reliable content is a disciplined diagnose-and-fix loop. Below are practical checks and corrective steps that work across generation tools and workflows.

Tone drift: diagnose and correct

Tone drift shows up when output slowly moves away from brand voice — more casual, too formal, or inconsistent across pieces. Diagnose by sampling recent outputs and comparing to a baseline voice sample.

- Quick check: Compare three recent outputs to a canonical brand piece.

- Fix: Tighten the prompt with explicit voice anchors (`voice: confident, conversational, 2nd-person`), add a short brand style excerpt, and constrain temperature to `0.2–0.4`.

- If persistent: Retrain or fine-tune on 20–50 in-brand examples, or add a post-generation pass that applies consistent phrasing rules.

Hallucinations: detect and stop them

Hallucinations are invented facts or attributions. Always assume any factual claim needs validation.

- Detect: Run a targeted fact-check: name, date, statistic, or claim.

- Fix: Add a verification step where the model must return a `sources:` list or `confidence:` flag. Reject outputs where `confidence < 0.6` or `sources:` are missing.

- When to escalate: For legal, medical, or financial content, require human sign-off before publication.

Sources required: Always verify external claims with the original publisher or a primary source before publishing.
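The rejection rule can be expressed as a small validator. This sketch assumes the model's response has already been parsed into a dictionary with `sources` and `confidence` keys; that structure and the `validate_output` name are assumptions, and a self-reported confidence value is not a substitute for checking the sources themselves.

```python
def validate_output(output, min_confidence=0.6):
    """Return a list of rejection reasons; an empty list means the output passes this gate."""
    problems = []
    sources = output.get("sources") or []
    if not sources:
        problems.append("missing sources")
    confidence = output.get("confidence")
    if confidence is None:
        problems.append("missing confidence")
    elif confidence < min_confidence:
        problems.append(f"confidence {confidence} below {min_confidence}")
    return problems


draft = {"text": "Example claim.", "sources": [], "confidence": 0.4}
print(validate_output(draft))
```

Passing this gate only means the fields are present and above threshold; a human still needs to confirm the cited sources support the claim.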

Low performance: root causes and recovery

Low performance can mean poor engagement metrics, low relevancy, or slow throughput.

- Common causes: weak prompts, mismatched intent, poor topic selection, or pipeline bottlenecks.

- Fix: Run an A/B test between the latest prompt and a control prompt, measuring click-through and time-on-page for two weeks. Use shorter prompts and clearer intent statements, and make sure the prompt supplies the most recent context the model needs.

Step-by-step rollback and re-run process

- Identify underperforming variants and mark their timestamps.

- Revert the content pipeline to the last known-good prompt and model checkpoint.

- Re-run generation with improved validation rules and a small human review batch.

- Deploy the corrected variant to a subset (10–20%) and monitor KPIs for 7–14 days.

How to validate AI output before publishing

- Checklist: confirm facts, check tone consistency, ensure SEO intent match, run plagiarism scan, and verify links.

- Automation tip: Add automated validators that flag missing `sources:` or `confidence:` fields.

When the issue involves scale or automation, consider integrating an AI content governance tool or an AI-powered content pipeline like Scaleblogger.com to enforce prompts, checkpoints, and rollbacks. Small fixes here stop big reputational problems down the line, and make the content process actually dependable.

Tips for Success & Pro Tips

Start by treating AI as a teammate, not a magician. Systems that win consistently combine tight processes, small controlled experiments, and human judgment baked into the loop. That means building repeatable assets (prompts, templates, rubrics), running lots of low-risk tests, and scoring every output against a brand-aware metric.

Set up the basics (fast wins)

- Create a centralized prompt and template library.

- Define a compact human scoring rubric for brand voice, factual accuracy, and SEO intent.

- Run short A/B experiments on headlines, intros, and CTAs at scale.

- Tag each item by purpose, tone, and performance signal (CTR, dwell time, conversions).

- Version-control high-performing prompts and archive experiments that didn't move metrics.

- Run a prompt variation that adds a customer quote to intros and compare time-on-page.

- Score ten generated drafts with the rubric; iterate the prompt until median Voice ≥4.

How to build the centralized library

- Inventory existing prompts, templates, and content fragments into one searchable repo.

Operational best practices

- Maintain a prompt library: Store canonical prompts, variations, and context examples so collaborators reuse consistent voice.

- Prefer micro-experiments: Run many 1–2 week tests instead of a few multi-month bets to learn faster.

- Score outputs with humans: Use a short rubric that rates voice fit, accuracy, and search intent match.

- Automate repeatable steps: Use CI-like automation for publishing and tagging to reduce human error.

- Document failure modes: Track when the model hallucinates, slips tone, or produces SEO-unfriendly copy.

Practical scoring rubric (three quick fields)

- Voice (1–5): Does the piece sound like the brand?

- Accuracy (1–5): Are claims verifiable or flagged for review?

- Intent match (1–5): Does the content satisfy the target query?

Small experiments to try this week

- Swap two headline formulas across 50 pages and measure CTR for seven days.

For teams wanting to scale the whole workflow, adopt an AI content pipeline that automates scheduling, scoring, and version control—tools like Scaleblogger.com specialize in that integration. These practices shrink risk, speed learning, and keep brand integrity intact. Keep experiments small, keep the human rubric central, and the system will reliably get better week over week.

Compliance, Ethics, and Data Privacy

AI-assisted storytelling can massively speed content creation, but it also raises real questions about consent, attribution, and personal data. Treat compliance and ethics as design constraints: they shape how content gets produced, who appears in it, and what data is safe to use. Below are practical guardrails, anonymization techniques, and ready-to-use disclosure language to put into production.

Ensure legal review and a data inventory are in place before using personal data or training models.

Consent: when and how to ask

- Ask consent early. Obtain explicit permission before using someone’s words, likeness, or private data in any AI-generated narrative.

- Make the ask specific. State what will be used, how the AI will transform it, where the output will appear, and how long it will be stored.

- Use layered consent. Provide a short checkbox for immediate approval and a link to a short policy for details.

- Present a clear consent banner with three bullets: purpose, AI involvement, retention period.

- Provide a one-click consent option plus a “learn more” link to the full policy.

- Log consent with timestamp and scope; store hashes rather than raw signatures.
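A consent log entry along these lines might look like the sketch below. The field names and the `consent_record` function are assumptions for illustration; the record keeps a SHA-256 digest of the signature text rather than the text itself.

```python
import hashlib
from datetime import datetime, timezone


def consent_record(subject_id, scope, signature_text):
    """Build a consent log entry with a UTC timestamp, scope, and a hash of the signature."""
    return {
        "subject_id": subject_id,
        "scope": sorted(scope),
        "granted_at": datetime.now(timezone.utc).isoformat(),
        # Store only the digest, never the raw signature text.
        "signature_sha256": hashlib.sha256(signature_text.encode("utf-8")).hexdigest(),
    }


record = consent_record("subject-001", ["quote", "first name"], "Jane Example")
print(record)
```

A plain hash of a short, guessable value can be reversed by brute force, so a keyed hash with a secret kept outside the log may be worth considering; that choice belongs with your legal and security reviewers.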

Practical anonymization strategies

Pseudonymization: Replace direct identifiers with consistent pseudonyms so longitudinal analysis remains possible.

Data minimization: Keep only fields strictly necessary for the story—drop exact addresses, precise timestamps, and unique IDs.

Differential masking: Aggregate or fuzz small counts and rare attributes so individuals can’t be re-identified from context.
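Consistent pseudonyms can be produced with a keyed hash, so the same person always maps to the same label without the label revealing who they are. HMAC is one common way to do this and is an assumption here, not a method the text prescribes; the `pseudonym` function, the prefix, and the key are all illustrative.

```python
import hashlib
import hmac


def pseudonym(identifier, secret_key, prefix="person"):
    """Map an identifier to a stable pseudonym using HMAC-SHA256 with a secret key."""
    normalized = identifier.strip().lower().encode("utf-8")
    digest = hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:10]}"


# Illustrative key only; a real key should come from a secrets manager, not source code.
key = b"replace-with-a-secret-key"
print(pseudonym("jane@example.com", key))
print(pseudonym("Jane@Example.com ", key))  # same person, same pseudonym
```

Anyone holding the key can re-link pseudonyms to identifiers they already know, so the key needs the same protection as the original data.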

> Industry analysis shows regulators expect clarity on AI usage and reasonable steps to prevent re-identification.

Example step-by-step consent flow

Example disclosure text for AI-assisted narratives

Disclosure: This story was written with the assistance of an AI system that helped draft and refine language. Personal details have been anonymized; any quotations are used with permission or paraphrased to protect privacy.

Practical tips for teams

- Audit regularly: Run quarterly checks on datasets and model outputs for leaking PII.

- Train staff: Make attribution and privacy part of onboarding for writers and editors.

- Use tooling: Automate redaction checks and consent logs where possible—services that integrate with the editorial pipeline reduce human error.

For organizations building AI content at scale, consider integrating these policies into your CMS or using an AI content automation partner like Scaleblogger.com to enforce consent and anonymization workflows. Handling ethics and privacy up-front prevents legal headaches and preserves reader trust, which is the whole point of sustainable storytelling.

Appendix: Prompt Library & Templates

Practical, copy-ready prompts speed up content production and make reviews predictable. Below are templates that work for blog drafts, outlines, meta descriptions, and idea expansion, plus a human-review rubric and the CSV headers to export audits into a spreadsheet.

Copy-ready prompt templates

- Blog outline (SEO-first): `Write a detailed blog outline on {topic} with target keyword "{keyword}", 1,500–2,000 words, H2/H3 structure, and suggested internal links.`

- Draft expansion (voice-preserving): `Expand the outline into a first draft in the voice of {brand_voice}, keep paragraphs ≤3 sentences, include examples and a data-driven claim.`

- Title and meta set: `Generate 10 headline variations and 5 meta descriptions under 155 characters for {topic}. Prioritize CTR and clarity.`

- Content brief for writers: `Create a brief with audience, intent, required links, references, and primary/secondary keywords for {topic}. Include target word count and CTA.`

- Repurpose block: `Turn the H2 "X" into a 30-60 second LinkedIn post and a 3-tweet thread summarizing the point.`

How to use these templates

- Paste the template into your prompt editor.

- Replace placeholders like `{topic}` and `{brand_voice}`.

- Add constraints: tone, citations, or `do not invent statistics`.

- Review the output against the rubric below and iterate.
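The placeholder-and-constraint steps can be automated with Python's built-in string formatting. In this sketch the `fill_prompt` helper and the way constraints are appended are assumptions; the template text is the blog outline prompt from the list of templates.

```python
from string import Formatter

OUTLINE_TEMPLATE = (
    'Write a detailed blog outline on {topic} with target keyword "{keyword}", '
    "1,500–2,000 words, H2/H3 structure, and suggested internal links."
)


def fill_prompt(template, constraints=(), **values):
    """Fill {placeholders} in a template and append optional constraints."""
    needed = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = needed - set(values)
    if missing:
        raise KeyError(f"missing placeholder values: {sorted(missing)}")
    prompt = template.format(**values)
    if constraints:
        prompt += " Constraints: " + "; ".join(constraints) + "."
    return prompt


print(fill_prompt(
    OUTLINE_TEMPLATE,
    constraints=["do not invent statistics"],
    topic="AI storytelling",
    keyword="brand storytelling",
))
```

Failing loudly on a missing placeholder is deliberate: a prompt sent with a literal `{topic}` in it tends to produce confusing output.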

Human-review rubric (score each criterion 0–4)

Clarity: How readable and skimmable is the piece?

Accuracy: Are facts and claims correct and cited where needed?

Intent Match: Does the content satisfy the search intent?

Originality: Is the angle or examples distinctive?

SEO Optimization: Proper headings, keyword use, and meta elements?

Actionability: Are there clear next steps or takeaways for readers?

Scoring bands: 0–1 = Reject, 2 = Major revisions, 3 = Minor edits, 4 = Publish. Tally scores and require a minimum average (e.g., 3.0) for publishing.
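A publishing gate based on these bands could be sketched as follows. Two choices here are assumptions rather than rules from the rubric: any single criterion scored 0 or 1 rejects the piece outright, and anything below the minimum average goes back for revision.

```python
from statistics import mean

CRITERIA = ("clarity", "accuracy", "intent_match", "originality", "seo_optimization", "actionability")


def publish_decision(scores, min_average=3.0):
    """Return 'publish', 'revise', or 'reject' for a dict of 0-4 rubric scores."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores: {missing}")
    values = [scores[c] for c in CRITERIA]
    if any(not 0 <= v <= 4 for v in values):
        raise ValueError("scores must be between 0 and 4")
    if min(values) <= 1:  # assumed rule: one failing criterion rejects the piece
        return "reject"
    return "publish" if mean(values) >= min_average else "revise"


draft = {"clarity": 4, "accuracy": 3, "intent_match": 3,
         "originality": 2, "seo_optimization": 3, "actionability": 4}
print(publish_decision(draft))
```

Whichever thresholds you choose, recording them next to the decision makes later audits easier.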

CSV header example for audit export

```csv
id,title,topic,author,word_count,readability_score,clarity,accuracy,intent_match,originality,seo_score,actionability,publish_decision,tags,published_date
```

- Tip: Keep `id` as a UUID and `readability_score` from a consistent tool (e.g., Flesch).

- Warning: Don’t rely only on automated scores; human checks catch nuance.
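Writing the audit export with Python's standard `csv` module might look like the sketch below. The column order follows the header row above; the `audit_rows_to_csv` helper, the sample row, and the choice to generate a UUID when no `id` is supplied are assumptions for illustration.

```python
import csv
import io
import uuid

HEADERS = [
    "id", "title", "topic", "author", "word_count", "readability_score",
    "clarity", "accuracy", "intent_match", "originality", "seo_score",
    "actionability", "publish_decision", "tags", "published_date",
]


def audit_rows_to_csv(rows):
    """Serialize audit rows to CSV text, filling blanks and generating a UUID id if absent."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=HEADERS, restval="")
    writer.writeheader()
    for row in rows:
        # A row's own "id" (if present) overrides the generated UUID.
        writer.writerow({"id": str(uuid.uuid4()), **row})
    return buffer.getvalue()


csv_text = audit_rows_to_csv([
    {"title": "How AI Shapes Brand Storytelling", "topic": "AI storytelling", "clarity": 4},
])
print(csv_text)
```

`DictWriter` raises an error if a row contains a column that is not in `HEADERS`, which helps catch misspelled field names early.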

Integrate these prompts into your content pipeline and map the rubric thresholds to publishing gates in your workflow. If automation is being added, the templates above slot directly into scheduling and review tools; for end-to-end scaling, consider blending them with an AI content workflow like Scaleblogger.com to automate generation and benchmarking. These assets make quality predictable and reviews faster, which is exactly what speeds up consistent, high-performing publishing.

Conclusion

Bringing AI into brand storytelling pays off when the pieces work together: clear objectives, a thorough content audit, prompt-driven story variants, and a human-in-the-loop to keep voice and compliance intact. The prompt library and templates in the appendix show how teams can spin dozens of on-brand drafts from a single brief, and the troubleshooting section suggests that most problems are process gaps rather than technical limits. If you’re wondering whether AI will replace writers, the pattern is clear: it amplifies skilled storytellers rather than replacing them. If measurement feels fuzzy, start with engagement lifts and conversion tests tied to the story threads you identified.

Start small, iterate fast, and make governance non-negotiable. Run one pilot campaign using the prompt templates, pair every AI draft with human editing, and measure lift against baseline content. For teams looking to automate this workflow at scale or to accelerate a pilot, Scaleblogger’s AI content strategy services can streamline setup and connect prompts to publishing pipelines. That step turns these practices from a weekend experiment into repeatable outcomes. Pick one campaign, apply the prompts, track results, and refine.