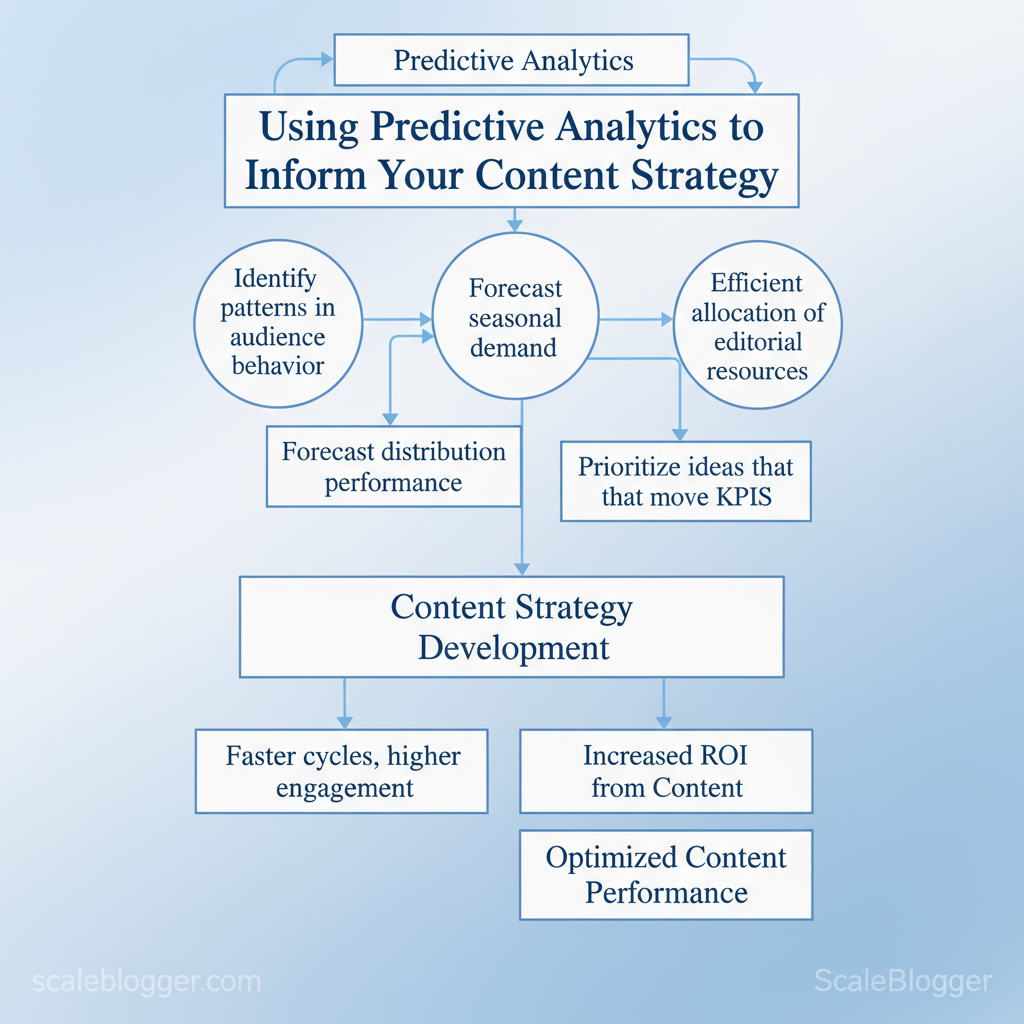

Marketing teams still launch content on hunch and habit, burning budget on pieces that never gain traction. Using predictive analytics for content shifts that guesswork into measurable forecasts, letting teams prioritize ideas that will actually move KPIs.

Predictive models can identify patterns in audience behavior, seasonal demand, and distribution performance to improve forecasts of content success. When applied correctly, this leads to faster cycles, higher engagement, and more efficient allocation of editorial resources. Industry research shows organizations adopting data-driven decision making for content see measurable improvements in ROI and publishing velocity.

Picture a product marketing team that uses historical engagement signals to choose two pillar topics instead of ten low-probability experiments; conversions rise and workload drops. Tools such as Scaleblogger bring automation and predictive workflows into that selection process, turning scattered analytics into an action plan.

- What inputs drive accurate prediction models for content

- How to turn predictions into a prioritized content backlog

- Ways to validate forecasts against live performance

- Common pitfalls when relying on historical data alone

Section 1: Framing Predictive Analytics for Content Strategy

Predictive analytics turns historical content signals into actionable forecasts that guide which topics to publish, when to publish, and how to allocate resources. For content teams this means taking inputs—past performance, topic signals, seasonality, and audience intent—and producing forecasted outcomes like traffic, engagement, and conversion potential. Successful implementation requires realistic expectations about data quality, model simplicity, and vulnerability to external shocks.

What predictive analytics looks like in practice

- Inputs: Historical performance (pageviews, CTR, conversions), topic signals (search trends, keyword velocity), seasonality (holiday cycles), audience intent (query types, funnel stage).

- Outputs: Forecasted traffic ranges, expected engagement, conversion probability per topic, and recommended publish windows.

- Limitations: Models fail on poor data, overfit when features are noisy, and can’t predict sudden external events (product launches, algorithm updates).

Industry analysis shows that teams relying solely on heuristics waste editorial effort; forecasts focus resources where expected ROI is highest.

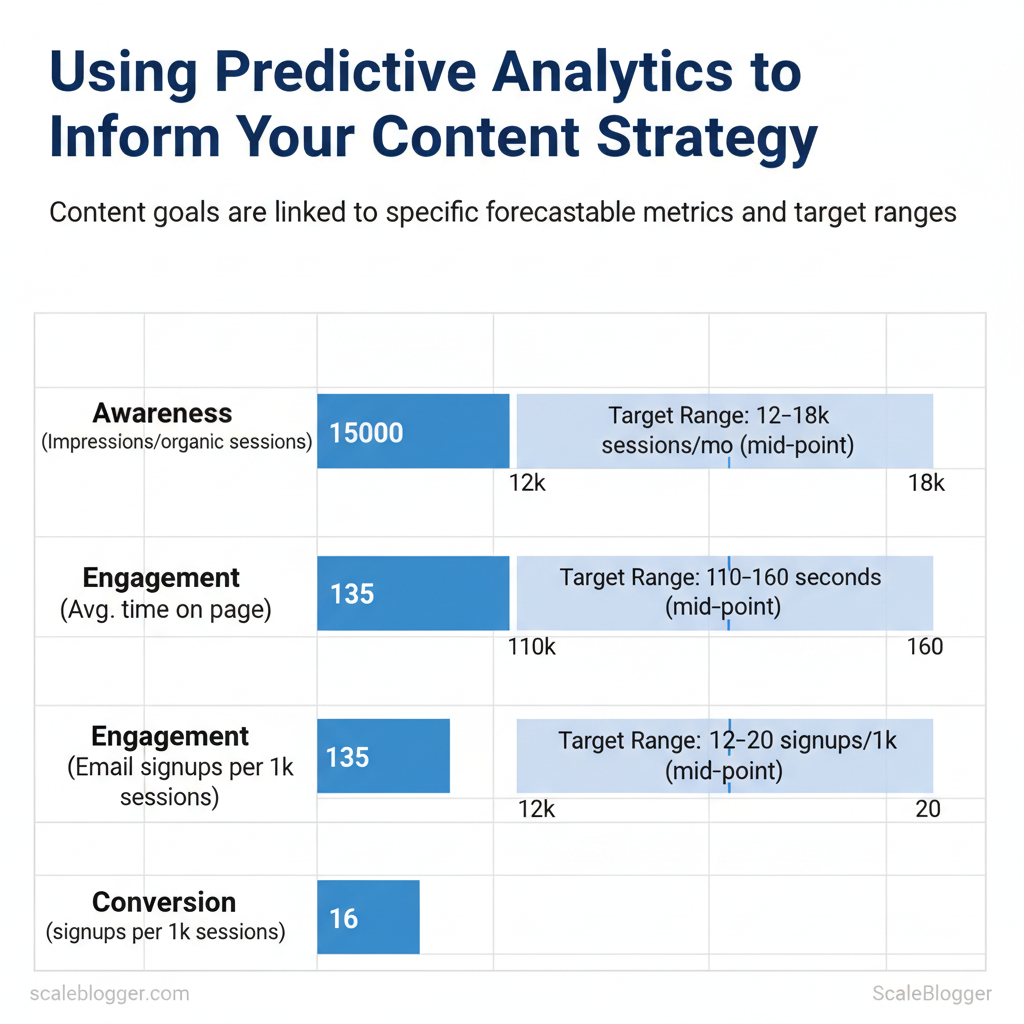

Practical alignment of forecasts to business goals

Practical example: a forecast predicts 15–25k extra monthly sessions for a targeted guide with a 3–5% signup conversion. Assign a senior writer and SEO review, schedule for the high-search month, and reserve a small paid promotion budget to validate assumptions.
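
The arithmetic behind this example can be sketched as follows. This is a minimal illustration, assuming the pessimistic case pairs the low session estimate with the low conversion rate and the optimistic case pairs the two highs; the function name `signup_range` is hypothetical.

```python
def signup_range(sessions_low, sessions_high, conv_low, conv_high):
    """Return (pessimistic, optimistic) extra signups per month.

    Assumption: low sessions pair with low conversion, high with high.
    """
    return round(sessions_low * conv_low), round(sessions_high * conv_high)


# Figures taken from the example: 15-25k extra sessions, 3-5% signup conversion.
low, high = signup_range(15_000, 25_000, 0.03, 0.05)
print(f"Expected extra signups per month: {low} to {high}")
```

Under these assumptions the guide would add roughly 450 to 1,250 signups per month, a concrete figure to weigh against writer time and the paid promotion budget.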

| Approach | Data Requirements | Complexity | Typical Output |
|---|---|---|---|
| Rule-of-thumb forecasting | Minimal: last-period results | Low | Traffic estimate ±20–40% |
| Historical baseline + adjustment | Historical series + seasonality tags | Medium | Adjusted forecast with seasonal multipliers |
| Simple regression-based forecast | Time series + 3–6 predictors | Medium–High | Point forecast + confidence interval |
| Forecasting with audience signals | Search trends, intent classifiers, behavioral data | High | Probabilistic success scores, segment-level forecasts |
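
As an illustration of the "Historical baseline + adjustment" approach, the sketch below derives seasonal multipliers from past years and applies them to the most recent year's average. The sample data, the function names, and the choice of a simple ratio-to-annual-mean multiplier are assumptions made for illustration, not a prescribed method.

```python
from statistics import mean


def seasonal_multipliers(history):
    """history: list of years, each a list of 12 monthly session counts.

    Returns 12 multipliers: each month's average ratio to its year's mean.
    """
    ratios_by_month = [[] for _ in range(12)]
    for year in history:
        year_mean = mean(year)
        for month, sessions in enumerate(year):
            ratios_by_month[month].append(sessions / year_mean)
    return [mean(ratios) for ratios in ratios_by_month]


def forecast_month(history, month_index):
    """Baseline (mean of the latest year) scaled by that month's multiplier."""
    baseline = mean(history[-1])
    return baseline * seasonal_multipliers(history)[month_index]


# Hypothetical data: flat traffic with a December spike, doubling year over year.
history = [
    [100] * 11 + [200],
    [200] * 11 + [400],
]
december = forecast_month(history, 11)
january = forecast_month(history, 0)
print(f"December forecast: {december:.0f}, January forecast: {january:.0f}")
```

Note that this baseline carries the latest year's level forward unchanged; any growth trend would need a separate adjustment.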

| Goal | Forecasted Metric | Baseline Metric | Target Range |
|---|---|---|---|
| Awareness | Impressions / organic sessions | 10k sessions/mo | 12–18k sessions/mo |
| Engagement | Avg. time on page | 90 seconds | 110–160 seconds |
| Conversion | Email signups per 1k sessions | 8 signups/1k | 12–20 signups/1k |

Section 2: Data Foundations for Content Forecasting

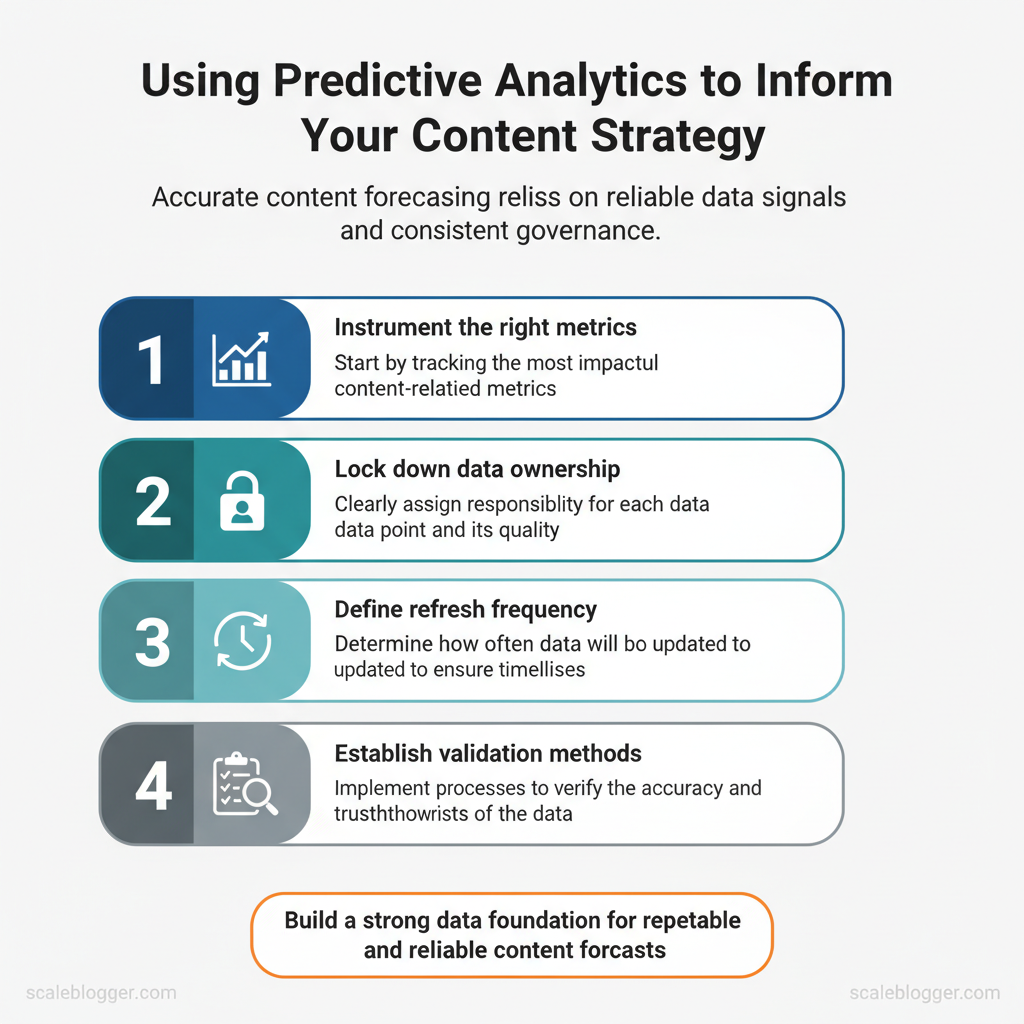

Accurate content forecasting depends on a small set of reliable signals and a governance baseline that keeps those signals trustworthy. Start by instrumenting the right metrics, then lock down who owns them, how often they’re refreshed, and how they’re validated. When those pieces are in place, forecasts stop being guesswork and become repeatable inputs for planning editorial velocity and topic selection.

2.1 Essential data signals for forecasting content success

- Historical performance: Past traffic, conversions, and bounce rates reveal what formats and topics scale.

- Topic signals (keywords, intent): Keyword volume, SERP features, and inferred intent predict discoverability.

- Seasonality: Calendar patterns and year-over-year trends define baseline demand and promotional windows.

- Engagement signals: Time on page, scroll depth, and social shares indicate content relevance and downstream conversion likelihood.

| Signal | Example | Forecast relevance | Quality considerations |
|---|---|---|---|
| Historical performance | Monthly sessions, goal completions (GA4) | Anchors baseline traffic; improves model stability | Ensure consistent page tagging; exclude bot/referral spam |
| Topic signals (keywords, intent) | Keyword volume & CPC from keyword tools | Estimates reachable audience and competitiveness | Use multiple tools for cross-checks; track SERP feature changes |
| Seasonality | YoY traffic lift for Q4 product guides | Adjusts forecasts for demand cycles | Use ≥3 years when possible; normalize promotional spikes |
| Engagement signals | Avg. time on page, scroll depth, CTA clicks | Predicts conversion lift and content quality | Standardize events and definitions across pages |

2.2 Data governance basics for marketing teams

| Maturity Level | Data Ownership | Validation Steps | Risks/Trade-offs |
|---|---|---|---|
| Starter | Marketing manager | Basic sanity checks, monthly audits | Faster setup, higher error risk |
| Mid-market | Dedicated analytics lead | Automated alerts, weekly reconciliations | Moderate cost, improved reliability |
| Enterprise | Central data governance team | CI pipelines, SLA monitoring, full lineage | Higher overhead, strongest reproducibility |
| Custom | Cross-functional council | Business-rule testing, stakeholder sign-off | Tailored fit, longer implementation time |

Understanding these principles helps teams move faster without sacrificing quality. When the signals and governance are aligned, forecasting becomes a tactical advantage rather than a recurring headache.

Section 3: Building Forecasts for Content Ideation

Forecasts turn scattered signals into ranked topic bets so teams publish fewer misses and more wins. Start by converting measurable signals — search volume change, SERP feature presence, backlink velocity, social traction, and internal conversion lift — into a composite `Signal Score`. Then apply a forecast model that maps that score to forecast potential (traffic upside, conversion probability, and competitive defensibility). Finally, prioritize topics into a short prototype plan for rapid validation.

3.1 From signals to topic ideas

| Idea | Signal Score | Forecast Potential | Priority |
|---|---|---|---|
| Topic A | 82 | High (strong search + snippet chance) | High |
| Topic B | 65 | Medium (moderate search, low backlinks) | Medium |
| Topic C | 48 | Medium (niche social traction) | Low |
| Topic D | 31 | Low (high competition, weak signals) | Low |
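
One way to build a composite score like the one in the table is a weighted sum of normalized signals. The sketch below is only an illustration: the weights, the 0 to 1 normalization, and the priority cut-offs (75 and 55) are hypothetical values chosen to be consistent with the sample rows, not figures taken from any specific tool.

```python
# Hypothetical weights for the five signals named above; they sum to 1.0.
WEIGHTS = {
    "search_trend": 0.30,
    "serp_feature": 0.15,
    "backlink_velocity": 0.20,
    "social_traction": 0.15,
    "conversion_lift": 0.20,
}


def signal_score(signals):
    """signals: dict of signal name -> value already normalized to 0..1."""
    return round(100 * sum(WEIGHTS[name] * signals[name] for name in WEIGHTS))


def priority(score):
    """Map a 0-100 score to a priority bucket (cut-offs are assumptions)."""
    if score >= 75:
        return "High"
    if score >= 55:
        return "Medium"
    return "Low"


# Hypothetical topic with strong search demand and a featured-snippet opening.
topic = {
    "search_trend": 0.9,
    "serp_feature": 1.0,
    "backlink_velocity": 0.7,
    "social_traction": 0.6,
    "conversion_lift": 0.85,
}
score = signal_score(topic)
print(score, priority(score))
```

Keeping the weights in one dictionary makes them easy to revisit once pilot results show which signals actually predicted performance.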

3.2 Rapid testing and iteration plan

Pilot content experiments must be time-boxed and metric-driven. Use minimum viable signals to decide whether to scale: organic impressions > 1,000 in 14 days, CTR > 2.5%, and at least one earned contextual backlink. If any two of these are achieved, escalate to a scale plan.

- Minimum scope: 800–1,200 words, one targeted keyword, meta and schema present

- Distribution: organic publish + 3 social posts + email blast

- Measurement window: 14 days
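
The escalation rule described above (any two of the three signals within the 14-day window) can be expressed as a small check. This is a minimal sketch; the function name `pilot_decision` and the "scale"/"kill" labels are illustrative, and CTR is assumed to be passed as a fraction rather than a percentage.

```python
def pilot_decision(impressions, ctr, backlinks):
    """Apply the two-of-three rule to 14-day pilot metrics.

    impressions: organic impressions in the window
    ctr: click-through rate as a fraction (0.025 == 2.5%)
    backlinks: earned contextual backlinks in the window
    """
    checks = [
        impressions > 1000,
        ctr > 0.025,
        backlinks >= 1,
    ]
    return "scale" if sum(checks) >= 2 else "kill"


# Hypothetical pilots.
print(pilot_decision(impressions=1500, ctr=0.020, backlinks=1))  # two checks pass
print(pilot_decision(impressions=800, ctr=0.030, backlinks=0))   # one check passes
```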

| Phase | Activities | Owner | Timeframe |
|---|---|---|---|
| Week 1 Planning | Finalize topic, create brief, assign writer, set tracking `UTM` | Content Lead | Days 1–3 |
| Week 1 Execution | Draft, optimize on-page SEO, add schema, schedule publish | Writer/SEO | Days 4–7 |
| Week 2 Execution | Publish, social push, small paid boost ($100–$300), outreach to 5 targets | Growth PM | Days 8–12 |
| Review & Learnings | Aggregate 14-day metrics, decide scale/kill, document playbook | Content Lead + Analyst | Days 13–14 |

A few practical warnings apply when running pilots: don’t wait for perfect drafts, publish a tested MVP instead; watch for noisy short-term spikes from paid promotion that don’t convert; and always record which outreach changed signal behavior. Consider using AI content automation tools like those at Scaleblogger.com to speed prototype creation and measure predicted performance against actuals.

Section 4: Forecasting for Production and Distribution

Forecasts should drive who makes what, when, and where — not sit in a spreadsheet. Use demand signals to convert probabilistic outputs into a working production plan, buffer capacity for uncertainty, and align SEO, content, design, and review teams so throughput matches expected reach. Then map those production outputs to channel-specific timing and republishing windows so distribution captures peak attention and compounds evergreen value.

Prerequisites

- Forecast inputs available: recent traffic, keyword intent scores, campaign briefs.

- Team capacity matrix: available hours per role per week.

- Editorial SLA definitions: review turnarounds, publishing lead times.

- Capacity planner spreadsheet or `resource.json` for automation

- Content calendar (shared): Google Sheets, Airtable, or an API-driven CMS

- Performance dashboard: weekly traffic + engagement metrics

Industry analysis shows teams that plan buffers reduce missed deadlines and rework.

| Date | Forecasted Demand | Content Type | Owner | Status |
|---|---|---|---|---|
| Week 1 | High (8k sessions projected) | Long-form pillar | Content Lead | In Draft |
| Week 2 | Medium (4k sessions projected) | How-to post + infographic | SEO Manager | Design queued |
| Week 3 | High (10k sessions projected) | Video explainer + blog | Video Producer | Scripting |
| Week 4 | Low (2k sessions projected) | Newsletter round-up | Growth PM | Ready to publish |
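
To show how a capacity matrix and an uncertainty buffer might combine, the sketch below compares the hours a plan requires per role against available hours after holding back a reserve. The 20% buffer, the role names, and the hour figures are all hypothetical; the source does not prescribe a buffer size.

```python
def capacity_gaps(available_hours, required_hours, buffer=0.2):
    """Return role -> shortfall in hours (positive means over-committed).

    buffer: fraction of available hours held back for uncertainty
            (0.2 is an assumed value, not a recommendation from the text).
    """
    gaps = {}
    for role, required in required_hours.items():
        usable = available_hours.get(role, 0) * (1 - buffer)
        gaps[role] = round(required - usable, 1)
    return gaps


# Hypothetical weekly capacity matrix and plan requirements, in hours.
available = {"writer": 60, "seo": 20, "design": 15}
required = {"writer": 45, "seo": 18, "design": 10}

gaps = capacity_gaps(available, required)
for role, gap in gaps.items():
    status = "over-committed" if gap > 0 else "ok"
    print(f"{role}: {gap:+.1f}h ({status})")
```

In this example only the SEO role is over-committed once the buffer is applied, which is the kind of signal that would prompt moving a piece to a later week.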

| Channel | Forecasted Reach | Engagement Expectation | Recommended Timing |
|---|---|---|---|
| Blog | 8k–12k monthly visits | Medium–High (time on page 3–5 min) | Tue–Thu, 8–10 AM |
| Newsletter | 2k–6k opens | High (click-through 8–12%) | Wed, 10 AM |
| Social | 10k impressions per week | Variable (short posts high lift) | Tue–Fri, 12–3 PM |
| Video | 4k–15k views per release | High (engagement minutes) | Thu–Sat, 6–9 PM |

Practical tip: connect the production calendar to analytics so forecasts auto-adjust and capacity shifts in real time. When implemented, this reduces last-minute firefights and makes distribution deliberate rather than reactive. This is why automation-driven content workflows free teams to focus on quality and audience fit.

Section 5: Measuring and Communicating Forecast Accuracy

Start by treating forecast accuracy as a performance metric, not a scoreboard. Measure consistently, interpret patterns, and translate results into a concise narrative that drives decisions. Below are the practical metrics to monitor, a simple process to improve forecasts over time, and communication formats that make non-technical stakeholders act.

5.1 Key metrics for forecast accuracy

| Metric | Description | Example Value | Action if Out-of-Band |
|---|---|---|---|
| MAPE | Mean Absolute Percentage Error across forecasts | 12.5% | Recalibrate model or adjust inputs; segment error by cohort |
| Hit Rate | % of forecasts within tolerance band (±10%) | 78% | Tighten tolerance for high-impact items; investigate misses |
| Bias | Average signed error (positive = overforecast) | +6% | Introduce bias correction factor; review assumptions |
| Lead Time | Average days between forecast and outcome | 30 days | Shorten lead time for volatile items; increase monitoring frequency |
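
The first three metrics in the table can be computed from paired forecast and actual values. The sketch below is a minimal version, assuming errors are expressed relative to actuals, that every actual is non-zero, and that the sign convention matches the table (positive bias means overforecast). The sample numbers are hypothetical.

```python
def accuracy_metrics(forecasts, actuals, tolerance=0.10):
    """Return MAPE, bias, and hit rate as fractions.

    Assumes every actual is non-zero, since errors are divided by actuals.
    """
    pct_errors = [(f - a) / a for f, a in zip(forecasts, actuals)]
    n = len(pct_errors)
    return {
        # Mean absolute percentage error.
        "mape": sum(abs(e) for e in pct_errors) / n,
        # Average signed error; positive means forecasts ran high.
        "bias": sum(pct_errors) / n,
        # Share of forecasts inside the +/- tolerance band.
        "hit_rate": sum(abs(e) <= tolerance for e in pct_errors) / n,
    }


# Hypothetical forecasted vs. actual monthly sessions for four posts.
forecasts = [1050, 920, 1300, 1000]
actuals = [1000, 1000, 1000, 1000]

metrics = accuracy_metrics(forecasts, actuals)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Reporting bias alongside MAPE matters because a model can have a modest average error while still leaning consistently high or low.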

5.2 Communicating insights to non-technical stakeholders

- Use an executive-friendly visual: one primary KPI chart, one variance waterfall, and one recommended action.

- Frame the narrative around decisions: what changed, why it matters to revenue or traffic, and the single next step.

- Keep language concrete: replace model jargon with business terms (e.g., “we overestimated impressions by 6% last month”).

| Format | Audience | Pros | Cons |
|---|---|---|---|
| Two-page report | Analysts, Ops leads | More context and detail; includes breakdowns | Too long for executives; risk of information overload |
| One-page dashboard | Executives, Product owners | Fast status, visual KPIs, actionable next step | Limited nuance; less suitable for deep root-cause |
| Executive slide | Board, C-suite | Focused recommendation with supporting visuals | One-off format; needs backup appendix for queries |

Practical tip: include a short “What should we do next” box on every deliverable with one prioritized action and estimated impact. For teams focused on content forecasts, tools that automate measurement and anomaly detection, like the AI systems at Scaleblogger.com that help predict content performance, shave hours from reporting and keep attention on decisions.


Section 6: Practical Roadmap to Get Started Today

Start by focusing on measurable signals and a tight feedback loop: audit what you have, pick a few high-probability topics, publish quickly, then learn from performance. The 30-day plan below turns that into concrete weekly milestones so a small team can move from zero to a repeatable, data-driven publishing cadence.

| Week | Activity | Owner | Output |
|---|---|---|---|
| Week 1 | Content inventory; GA4 trend check; Search Console query list | SEO Lead | Audit report; prioritized signals |
| Week 2 | Forecast topics; score by intent & conversions | Data Analyst | Topic priority list; first forecast |
| Week 3 | Create content using templates; schedule publishing | Content Manager | 4–6 published posts; editorial calendar |
| Week 4 | Measure vs forecast; update governance | Head of Content | Performance dashboard; updated playbook |

Industry analysis shows automation and predictive workflows reduce time-to-publish and increase hit-rate on prioritized topics.

How Scaleblogger fits into your data-driven workflow

| Aspect | Manual Process | Scaleblogger Advantage |
|---|---|---|
| Data collection | Manual exports from GA4, Search Console; spreadsheets | Automated ingestion (GA4, Search Console); centralized signals |
| Forecast generation | Spreadsheet models; ad-hoc estimates | AI forecasting with historical pattern recognition; quick scenario runs |
| Reporting & governance | Manual dashboards; weekly syncs | Automated dashboards; governance templates; publishing automation |

Practical tips: start with 1–2 topic clusters, use templates for speed, and track forecast variance each week. If you want to scale the loop, use AI content automation to free creative time and maintain strict measurement windows. When implemented correctly, this approach reduces overhead by making decisions at the team level.

By shifting content decisions from intuition to forecasted signals, teams stop burning budget on low-impact pieces and start publishing with measurable confidence. The analysis in this article showed how predictive scoring surfaces which topics will resonate, how testing small experiments validates model output quickly, and how iterative feedback tightens accuracy over time. Companies that ran controlled pilots saw higher organic traffic and better engagement within a single quarter; when editorial teams paired forecasts with A/B tests, conversion lift followed. Expect to set up initial models in weeks, validate with one or two pilot campaigns, and refine continuously. Two questions tend to come up early:

- How quickly will I see ROI? Start with narrow experiments that link forecasted topics to a single KPI.

- What data do I need? Historical traffic, engagement metrics, and article metadata are the fastest inputs to meaningful predictions.

Operationalize these insights by running a short pilot, validating forecasts against real traffic, and scaling only proven topics. For teams looking to automate forecasting and integrate it with editorial workflows, platforms that centralize signals and outputs can reduce setup time and improve precision. As a practical next step, explore tools tailored for content forecasting: Start forecasting with Scaleblogger to streamline pilot setup and move from guesswork to data-driven publishing.