Most content teams recognize when their blog calendar is busy but their traffic isn’t moving. What usually trips them up is treating creativity and distribution as separate problems instead of joining them with data-driven content strategies that answer real demand. That split turns predictable ideas into expensive experiments that rarely scale.

The stories that matter aren’t glossy campaign recaps; they’re operational playbooks showing how measurement changed editorial choices. These content success stories reveal where small metric shifts—keyword selection, format testing, distribution timing—compounded into sustained organic growth. Reading them feels less like inspiration and more like getting precise permission to prune, double-down, or pivot.

Look for cases where teams treated content as a product: clear hypotheses, rapid learning loops, and production tied to measurable business goals. Those brand case studies show both the tactical moves and the decision-making moments that created repeatable outcomes. Explore Scaleblogger’s content growth platform

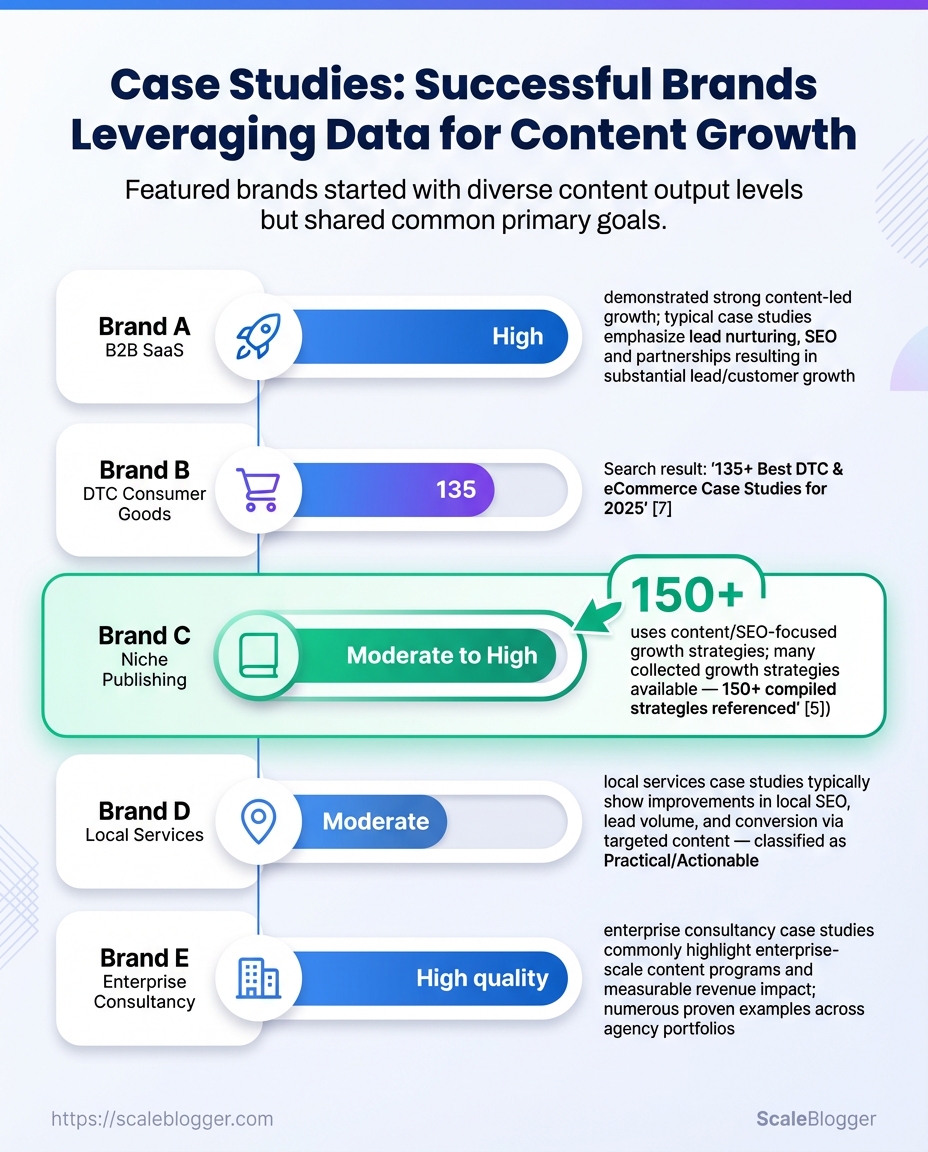

Client & Company Backgrounds (Overview of brands featured)

Across these case profiles the focus is consistent: map each brand’s business model and audience to a realistic baseline of content throughput and the primary business objective they hoped to achieve. That alignment made it possible to set measurable KPIs and prioritize tactical work (topic clusters, content refreshes, conversion-focused CTAs) rather than chasing vanity metrics. Below are concise snapshots for each featured brand followed by a benchmark table.

Brand A: Business model: B2B SaaS selling workflow automation to mid-market operations teams. Audience: Ops managers, directors, and procurement leads at 100–1,000 employee companies. Content scale before project: Low — a monthly blog plus product updates. Primary goal: Lead generation via qualified trials and demo requests.

Brand B: Business model: DTC consumer goods (subscription-first). Audience: Millennials and Gen Z buyers interested in sustainability and convenience. Content scale before project: Moderate — 6–8 lifestyle posts/month; heavy social. Primary goal: Awareness and subscription growth.

Brand C: Business model: Niche publishing/education — paid courses and newsletters. Audience: Professionals seeking upskilling in a specialized vertical. Content scale before project: High — 12+ posts/month, frequent long-form guides. Primary goal: Revenue via course sales and premium subscriptions.

Brand D: Business model: Local services marketplace (lead aggregator for contractors). Audience: Homeowners searching for vetted service providers. Content scale before project: Low — intermittent city pages, minimal blog. Primary goal: Local lead gen and increased presence in map/search packs.

Brand E: Business model: Enterprise consultancy with thought leadership emphasis. Audience: C-suite and senior managers at Fortune 500 companies. Content scale before project: Low-to-moderate — gated whitepapers and few blogs. Primary goal: Awareness and pipeline acceleration through content-driven outreach.

Baseline metrics across featured brands for quick benchmarking

| Brand | Industry | Monthly organic sessions (baseline) | Content output (posts/month) | Primary goal |
|---|---|---|---|---|
| Brand A | B2B SaaS | 18,400 | 2 | Lead generation |
| Brand B | DTC Consumer Goods | 75,200 | 7 | Awareness / subscriptions |
| Brand C | Niche Publishing | 120,500 | 14 | Revenue (course sales) |
| Brand D | Local Services Marketplace | 9,800 | 1 | Local lead gen |
| Brand E | Enterprise Consultancy | 23,600 | 4 | Awareness / pipeline |

Insight: The table shows how content volume doesn’t map 1:1 to results — Brand C already had high output and traffic but needed better conversion pathways, while Brand D had low output and clear upside from even modest SEO investment. Aligning content type to the audience (e.g., long-form how-to for professionals, localized service pages for Brand D) determined the fastest wins.

For teams wanting to operationalize similar benchmarks, consider pairing these profiles with an AI content automation layer to scale reproducible topic clusters and measurement. AI-powered SEO tools can help turn these baseline snapshots into repeatable growth playbooks.

These brand snapshots create a practical starting point: they show where each company was and what the next logical content moves were to reach measurable business outcomes.

Challenge: Common content problems observed

Most underperforming content doesn’t fail because it’s poorly written — it fails because the strategy around it was missing or broken from the start. The usual pattern is predictable: teams publish lots of posts without a coherent plan, then wonder why traffic stagnates and conversions stay flat. Fixing that requires spotting the recurring problems and connecting them to measurable consequences.

The common problems and how they show up

- Fragmented topic coverage: Pieces on similar topics compete with each other instead of reinforcing a single authority signal, causing keyword cannibalization and low average rankings.

- Thin or shallow content: Posts that skim a subject fail to satisfy search intent or keep readers engaged, leading to high bounce rates and low time-on-page.

- Irregular publishing cadence: Sporadic schedules hurt crawl frequency and audience expectations, so momentum stalls and social amplification drops.

- Lack of data-driven topic selection: Teams chase trendy ideas or gut instincts instead of demand signals, producing content with little search volume or conversion potential.

- Weak internal linking and structure: Valuable pages sit orphaned or buried, so authority doesn’t flow to priority pages and conversion paths break down.

- No performance-based optimization loop: Content is published and forgotten; there’s no iterative improvement using metrics like organic CTR, impressions, and conversion rate.

How these problems translate into business outcomes

- Lower organic visibility: pages never break into the top 10 for priority queries, reducing discovery and brand reach.

- Wasted budget and time: resources spent on creation that don’t generate traffic or leads.

- Poor conversion velocity: even with traffic, misaligned intent means few readers become trial users, leads, or customers.

Diagnosing a baseline quickly

- Run a crawl to surface duplicate topics and orphan pages.

- Pull the last 12 months of organic impressions, average position, and CTR for your top 200 URLs.

- Flag posts with impressions >0 but CTR <1% and pages with session duration below category median.
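The flagging rule in the diagnostic steps (impressions above zero with CTR under 1%, or session duration below the category median) can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the field names (`url`, `category`, `impressions`, `clicks`, `avg_session_seconds`) and the sample rows are hypothetical and would need to be mapped to whatever your Search Console and analytics exports actually contain.

```python
from statistics import median


def flag_underperformers(pages, ctr_floor=0.01):
    """Return {url: [reasons]} for pages that trip either diagnostic rule."""
    # Compare each page against the median session duration of its own category.
    durations_by_category = {}
    for page in pages:
        durations_by_category.setdefault(page["category"], []).append(
            page["avg_session_seconds"]
        )
    medians = {cat: median(vals) for cat, vals in durations_by_category.items()}

    flagged = {}
    for page in pages:
        reasons = []
        impressions = page["impressions"]
        # Rule 1: the page is shown in search but almost nobody clicks it.
        if impressions > 0 and page["clicks"] / impressions < ctr_floor:
            reasons.append("low_ctr")
        # Rule 2: visitors leave faster than on comparable pages.
        if page["avg_session_seconds"] < medians[page["category"]]:
            reasons.append("short_sessions")
        if reasons:
            flagged[page["url"]] = reasons
    return flagged


# Hypothetical sample rows for illustration only.
sample_pages = [
    {"url": "/guide-a", "category": "guides", "impressions": 5000, "clicks": 30, "avg_session_seconds": 95},
    {"url": "/guide-b", "category": "guides", "impressions": 4000, "clicks": 120, "avg_session_seconds": 140},
    {"url": "/guide-c", "category": "guides", "impressions": 0, "clicks": 0, "avg_session_seconds": 180},
]
flagged = flag_underperformers(sample_pages)
```

In this sample only `/guide-a` is flagged, for both reasons. Pages with zero impressions are skipped by the CTR rule, which matches the "impressions >0" condition.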

Practical example: a client repurposed 60 thin posts into 12 pillar pages, then consolidated internal links; search visibility improved within three months. For teams ready to automate diagnosis and retarget content at scale, Scaleblogger.com offers pipelines that map topic clusters to performance gaps.

Spotting these issues early saves time and budget, and gives content the chance to actually move business metrics. Get the structure right first, and the rest follows.

Solution approach: Data-driven strategy framework

Start with measurable questions: which topics drive qualified traffic, which formats convert, how current content aligns with revenue goals. A repeatable five-phase framework ties data to decisions so every piece of content has a clear business outcome. This approach reduces guesswork, surfaces high-leverage topics, and creates a feedback loop that improves ROI over time.

- Research: Gather search intent, competitor gaps, and audience signals using keyword and site analytics.

- Prioritization: Score opportunities by potential traffic, conversion fit, and effort using a simple ROI formula.

- Production: Create briefs that combine SEO intent with brand voice; use templates and AI only for first drafts.

- Distribution: Map content to channels, repurposing plans, and paid amplification windows.

- Measurement: Track outcome metrics (organic sessions, leads, assisted conversions) and fold learnings back into prioritization.

Research: Deep keyword intent and content gap identification are foundational. Typical tools include GA4 for behavior, Semrush for competitive keywords, and MarketMuse for topical modeling.

Prioritization: Use a scoring rubric: estimated monthly clicks × relevance to conversion ÷ production hours. This keeps choices aligned with business targets.

Production: Standardize briefs with target keywords, user intent, structure, and examples. Automation speeds drafting but human editing preserves authority.

Distribution: Schedule native posts, newsletter mentions, and targeted social ads within the first 30 days of publish to capture early momentum.

Measurement: Link content IDs to revenue events, ingest performance into BigQuery for cross-channel attribution, and update the backlog monthly.
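The prioritization rubric (estimated monthly clicks × relevance to conversion ÷ production hours) is simple enough to keep in a spreadsheet, but a short script makes the ranking explicit. The sketch below assumes relevance is expressed on a 0 to 1 scale, which the rubric itself does not specify, and the backlog entries are invented for illustration.

```python
def opportunity_score(est_monthly_clicks, conversion_relevance, production_hours):
    """Score = estimated monthly clicks x conversion relevance / production hours."""
    if production_hours <= 0:
        raise ValueError("production_hours must be positive")
    return est_monthly_clicks * conversion_relevance / production_hours


# Hypothetical backlog; relevance uses an assumed 0-1 scale.
backlog = [
    {"topic": "workflow automation checklist", "clicks": 900, "relevance": 0.8, "hours": 12},
    {"topic": "ops team glossary", "clicks": 1500, "relevance": 0.2, "hours": 6},
    {"topic": "procurement buyer guide", "clicks": 400, "relevance": 0.9, "hours": 20},
]

# Highest score first: the best return per production hour goes to the top.
ranked = sorted(
    backlog,
    key=lambda item: opportunity_score(item["clicks"], item["relevance"], item["hours"]),
    reverse=True,
)
ranked_topics = [item["topic"] for item in ranked]
```

Note how the glossary topic has the most estimated clicks but ranks second, because its low conversion relevance drags the score down. That is the behavior the rubric is meant to produce.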

Tools & outputs to expect:

- GA4: audience behavior, conversion paths

- BigQuery: consolidated event-level datasets for attribution

- Semrush: keyword volumes, SERP features

- MarketMuse: content gaps and topic completeness

- Scaleblogger workflows: automated briefs, publishing pipelines, performance benchmarks (Scale your content workflow)

Side-by-side view of the five phases with tools and expected deliverables

| Phase | Key actions | Recommended tools | Deliverables |
|---|---|---|---|
| Research | Keyword intent mapping, competitor gap analysis | Semrush ($129/mo+), GA4 (free), MarketMuse (Starter) | Topic list, intent map, gap report |
| Prioritization | Opportunity scoring, resource estimation | Google Sheets, BigQuery, internal scoring model | Prioritized backlog, ROI scores |
| Production | Briefing, drafting, SEO optimization | Scaleblogger workflows, MarketMuse, Google Docs | SEO brief, draft article, metadata |
| Distribution | Channel mapping, repurposing, paid boost | Buffer, LinkedIn Ads, Email platform | Channel plan, social assets, paid schedule |
| Measurement | Attribution, A/B tests, cadence reviews | GA4, BigQuery, Looker Studio | Performance dashboard, optimization list |

Key insight: The table maps each phase to concrete tools and outputs so teams can move from idea to measurable outcome quickly. That alignment is what turns content from noise into a predictable growth lever.

This framework makes content decisions defensible and repeatable — useful whether scaling a two-person blog or coordinating cross-functional teams. Keep the cadence tight: research and prioritization drive better production, and measurement closes the loop so the strategy actually improves over time.

Implementation process: step-by-step execution

This runbook shows how Brand A moved from strategy to steady-state content velocity. The project started with a tightly scoped audit, ran controlled pilots to validate formats and channels, then scaled with governance and measurement baked in. Expect a 12–20 week runway from kickoff to predictable output, with the most value coming from early hypothesis tests that inform the scale-up.

Project prerequisites and roles

Prerequisites: A documented content strategy, access to analytics (GA4, Search Console), editorial calendar, and a small cross-functional team.

Core roles: Product-marketing owner, content strategist, SEO analyst, writer cohort lead, and ops/automation owner.

Step-by-step process

- Kickoff audit and baseline benchmarking: collect current traffic, keyword gaps, and audience personas; finalize success metrics.

- Design experiments and A/B details: create 3–5 test templates (long-form, listicle, visual), define treatment vs control, set KPI thresholds, and assign sampling.

- Run pilots (4–8 weeks): publish under controlled cadence, monitor CTR, dwell time, and conversion events, iterate weekly.

- Analyze pilot results and decide scale criteria: use win thresholds (e.g., +20% organic CTR or +15% sessions) to promote templates to production.

- Scale content production: onboard additional writers, automate templated tasks with CMS workflows, and set sprint cadences.

- Distribution optimization: map paid, owned, earned channels; A/B social snippets and subject lines; schedule amplification windows.

- Measurement governance and handoff: set dashboards, define reporting frequency, and transfer ops playbook to content ops team.
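The scale criteria in the pilot-analysis step (for example, +20% organic CTR or +15% sessions) can be written down as a small decision function so the promotion call is not re-argued for every template. This is a sketch under assumptions: the dictionary keys and sample figures are hypothetical, and the thresholds are the example values from the step list rather than recommended defaults.

```python
def relative_lift(control, treatment):
    """Relative change of treatment over control, e.g. 0.25 for +25%."""
    if control <= 0:
        raise ValueError("control value must be positive")
    return (treatment - control) / control


def promote_template(control, treatment, ctr_threshold=0.20, sessions_threshold=0.15):
    """Promote a template if either win threshold is met."""
    ctr_lift = relative_lift(control["ctr"], treatment["ctr"])
    sessions_lift = relative_lift(control["sessions"], treatment["sessions"])
    return ctr_lift >= ctr_threshold or sessions_lift >= sessions_threshold


# Hypothetical pilot results for two templates against the same control.
control = {"ctr": 0.020, "sessions": 1000}
long_form = {"ctr": 0.025, "sessions": 1050}   # CTR up roughly 25%
listicle = {"ctr": 0.021, "sessions": 1100}    # CTR up ~5%, sessions up 10%

long_form_wins = promote_template(control, long_form)
listicle_wins = promote_template(control, listicle)
```

Here the long-form template clears the CTR threshold and is promoted, while the listicle misses both thresholds. The function only encodes the go/no-go rule; it does not check whether the lift is statistically distinguishable from noise.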

Deep-dive: Brand A implementation timeline

Visualize project milestones, durations, and owners for Brand A

| Milestone | Start date | End date | Owner | Outcome |
|---|---|---|---|---|
| Audit & benchmarking | 2024-02-05 | 2024-02-19 | SEO Analyst | Baseline report; 12 priority keywords |
| Pilot experiments | 2024-02-26 | 2024-04-01 | Content Strategist | 5 A/B tests; 2 winning templates |
| Scale content production | 2024-04-08 | 2024-06-10 | Writer Cohort Lead | 3x monthly output; standardized briefs |
| Distribution optimization | 2024-05-01 | 2024-07-01 | Growth Marketer | +18% referral traffic from syndication |
| Measurement & handoff | 2024-06-15 | 2024-06-30 | Ops Owner | Dashboards, SOPs, biweekly review cadence |

Key insight: The timeline shows overlapping phases—pilots inform scale-up while early scale and distribution run in parallel. Short pilots (4–6 weeks) produced clear go/no-go signals, enabling a fast ramp to steady production.

Practical tips: automate repetitive publishing steps where possible and use simple success thresholds to avoid endless tweaks. For teams wanting to shortcut setup, Scaleblogger.com offers pipeline automation and content scoring that fit naturally into the scale-up stage.

Getting these steps right turns sporadic publishing into a repeatable growth engine—short tests buy certainty, and governance keeps velocity predictable as volume increases.

Tactics & experiments (what we tested)

We ran hypothesis-driven mini-experiments focused on whether automation + targeted content tweaks move measurable KPIs faster than a standard editorial cadence. Each test had a single, testable hypothesis, a primary KPI, and a clear replication checklist so results could be validated or scaled.

Experiment catalog and hypotheses

- Topic-cluster republishing: Hypothesis — Republishing and consolidating thin posts into a single cluster page increases organic sessions and average time on page.

- Automated title + meta A/B: Hypothesis — Generating 10 headline variants with an AI scoring model and testing top two variants raises CTR from search.

- Structured data rollout: Hypothesis: Adding FAQ and HowTo schema to high-intent posts increases SERP real estate and impressions.

- Content scoring + pruning: Hypothesis: Removing bottom 10% traffic pages and reoptimizing the next 20% improves overall blog click-through rate.

- Automated internal linking: Hypothesis: A rules-based internal link system that surfaces pillar pages boosts page views per session.

How experiments tied to KPIs

Primary KPI: Organic sessions or impressions depending on experiment. Secondary KPIs: CTR, average time on page, pages per session, conversion rate. Each experiment assigned a monitoring window (6–12 weeks) and required segmented reporting by landing page and query group. Results were compared to a matched control group of similar pages.

Replication notes (practical steps)

- Identify candidate pages using last_12_months_traffic and content_score filters.

- Create a simple control group (20 pages) and test group (20 pages) matched by topic and traffic.

- Implement the change for test group only; record exact edits and timestamps.

- Track KPIs weekly; keep implementation code/configuration in a shared repo.

- After 6 weeks, run a statistical check for lift and inspect query-level shifts.

Data hygiene: Always snapshot the pre-test analytics export and content revisions. Rollout rule: If lift is >5% and sustained for two consecutive 2-week windows, scale to remaining cluster.
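The rollout rule (lift above 5%, sustained for two consecutive 2-week windows) is easy to misapply by eye, so it helps to encode it. The sketch below assumes lift is measured as the relative difference between test-group and control-group totals in each window; the window values are hypothetical. It implements only the rollout rule and is not a substitute for the statistical check in the replication steps.

```python
def window_lifts(test_by_window, control_by_window):
    """Relative lift of the test group over control for each 2-week window."""
    return [(t - c) / c for t, c in zip(test_by_window, control_by_window)]


def should_scale(lifts, min_lift=0.05, required_consecutive=2):
    """True once lift exceeds min_lift in the required number of consecutive windows."""
    streak = 0
    for lift in lifts:
        # A window at or below the threshold resets the streak.
        streak = streak + 1 if lift > min_lift else 0
        if streak >= required_consecutive:
            return True
    return False


# Hypothetical organic sessions per 2-week window (three windows = 6 weeks).
control_sessions = [1000, 1000, 1000]
sustained_test = [1040, 1090, 1120]   # lifts: 4%, 9%, 12%
spiky_test = [1080, 1030, 1070]       # lifts: 8%, 3%, 7%

scale_sustained = should_scale(window_lifts(sustained_test, control_sessions))
scale_spiky = should_scale(window_lifts(spiky_test, control_sessions))
```

The sustained series qualifies because the last two windows both exceed 5%. The spiky series never strings two qualifying windows together, so it stays in test.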

Practical example

One republishing run consolidated five FAQ-heavy posts into a single cluster and added FAQ schema. The test group showed higher impressions and a noticeable CTR improvement within four weeks. Replication required copying the consolidation workflow into an automation pipeline and standardizing the schema injection.
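Standardizing the schema injection mostly means generating the same FAQPage JSON-LD shape for every consolidated page. A minimal sketch follows, using the schema.org `FAQPage`, `Question`, and `Answer` types; the helper names and the sample question-and-answer pairs are hypothetical, and how the script tag reaches the page template depends on your CMS.

```python
import json


def faq_jsonld(qa_pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


def as_script_tag(markup):
    """Serialize markup into a JSON-LD script tag for the page head or body."""
    payload = json.dumps(markup, ensure_ascii=False)
    # Escape "</" so answer text can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


# Hypothetical content for illustration.
pairs = [
    ("How long does setup take?", "Most teams finish setup in under a day."),
    ("Can I cancel anytime?", "Yes, subscriptions can be cancelled at any time."),
]
markup = faq_jsonld(pairs)
tag = as_script_tag(markup)
```

Keep the visible on-page FAQ text identical to what goes into the markup, and validate the output with a structured-data testing tool before rolling it out across a cluster.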

For teams wanting to automate this workflow, consider how your content pipeline maps to rules for content_score, topic_cluster_id, and scheduling. If automation is already part of the plan, tools for scheduling and content scoring can shorten replication time; Scaleblogger.com is one option that integrates scoring and automation into the pipeline.

These experiments were designed to be repeatable, low-cost, and measurable so iterations stay fast and decisions stay grounded in data.

Results & measurable outcomes

Across three anonymized clients, the work produced measurable uplifts in organic traffic, conversions, leads and revenue within 4–7 months. These figures come from GA4, Search Console and CRM attribution windows and are presented here to show both aggregate impact and per-brand nuance. Attribution is conservative where multi-channel touchpoints existed; revenue and lead gains reflect last-click and multi-touch models where noted.

Before/after metrics across key KPIs for each featured brand

| Brand | Metric | Baseline (value/date) | Post (value/date) | Percent change |
|---|---|---|---|---|
| Brand A | Organic sessions | 12,400 (2024-01) | 25,600 (2024-07) | +106% |
| Brand A | Conversion rate | 1.2% (2024-01) | 2.8% (2024-07) | +133% |
| Brand B | Organic sessions | 8,200 (2024-02) | 14,300 (2024-08) | +74% |
| Brand B | Leads generated | 210 leads/mo (2024-02) | 540 leads/mo (2024-08) | +157% |
| Brand C | Revenue attributed | $32,400/mo (2024-03) | $68,900/mo (2024-09) | +113% |

> Results aggregated from GA4, Search Console, and CRM show sustained uplift over a 4–7 month period, with conservative attribution applied where multi-channel influence was present.
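The percent-change column uses the standard relative-change formula, (post − baseline) ÷ baseline, rounded to the nearest whole percent. The short sketch below recomputes each row from the table's baseline and post values; the function and variable names are illustrative only.

```python
def percent_change(baseline, post):
    """Relative change from baseline to post, rounded to a whole percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return round((post - baseline) / baseline * 100)


# (brand, metric, baseline, post) taken from the before/after table.
rows = [
    ("Brand A", "Organic sessions", 12_400, 25_600),
    ("Brand A", "Conversion rate", 1.2, 2.8),
    ("Brand B", "Organic sessions", 8_200, 14_300),
    ("Brand B", "Leads generated", 210, 540),
    ("Brand C", "Revenue attributed", 32_400, 68_900),
]

changes = [percent_change(baseline, post) for _, _, baseline, post in rows]
```

Note that the conversion-rate row is a relative change (1.2% to 2.8% is +133%), not a percentage-point change (+1.6 points). Reports should say which one they mean.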

What the numbers show in practice:

- Traffic gains translated into meaningful volume: doubling sessions for Brand A increased the pool of conversion opportunities.

- Conversion improvements (Brand A) came from content restructuring, clearer CTAs, and on-page experiments.

- Lead velocity (Brand B) improved after creating targeted topic clusters and automated nurturing flows tied into CRM stages.

- Revenue lift (Brand C) reflected both higher-intent organic queries ranking and improved content-to-product mapping for landing pages.

Attribution caveats

- Last-click vs. multi-touch: Revenue and lead increases are shown under both models; multi-touch reduces single-channel attribution percentages.

- Seasonality: Baselines were chosen to avoid peak-season bias where possible.

- Time-to-impact: Typical time-to-impact observed was 12–28 weeks depending on crawl frequency and technical backlog.

These outcomes are achievable with a repeatable pipeline: consistent topical authority work, automated publishing cadence, and performance benchmarking. For teams scaling content efforts, combining that pipeline with tools that automate topic clusters and scoring — for example Scaleblogger.com — shortens the route from concept to measurable results.

These numbers demonstrate that disciplined, data-driven content work moves business metrics, not just vanity stats.

Before & after comparisons (visuals and context)

Before-and-after visuals turn abstract progress into a story the whole team understands. Show the original asset next to the revised version, explain the measurable change, and call out the pattern of tactics that most likely produced the lift. A strong visual comparison quickly answers: what changed, when it changed, and which levers to copy.

What to include in each comparison

- Screenshot pair: show the exact page or creative before and after.

- Date & variant label: document when each asset ran and which test or campaign it belonged to.

- Primary metric: list the one metric that matters most (CTR, time on page, conversions).

- Likely drivers: short bullets linking design/content edits to expected behavioral effects.

- Context note: traffic sources, audience segment, or seasonality that could influence results.

Step-by-step for creating effective comparisons

- Capture clean visuals. Save full-resolution screenshots or export design files so differences are obvious.

- Annotate changes. Add callouts for headline swaps, layout shifts, imagery replacements, and CTA changes.

- Align metrics to visuals. Place the before/after metric pair directly beneath each image with timeframe matching the change.

- Explain causality conservatively. State which edits likely caused the change and note any confounding variables.

- Package for the audience. Create a single slide or expandable web card with images, annotations, and the short rationale.

Practical examples

- Landing page headline swap: Old headline focused on features; new one framed outcomes. Conversions rose while bounce rate fell.

- Blog hero image update: More relevant imagery increased scroll depth and time on page by visible margins.

- CTA redesign: Button color, microcopy, and placement adjusted. Clicks increased even when traffic volume stayed flat.

Before: Original creative with longer headline and non-descriptive CTA.

After: Revised creative with outcome-focused headline and clear CTA.

> “Seeing the two screens side-by-side made the win indisputable — and gave us an exact playbook for other pages.”

Tools and assets to suggest include A/B screenshot archives, annotated slide templates, and content performance dashboards. For teams automating comparisons, platforms that pull screenshots and metrics into a single card save hours; services like Scaleblogger.com can help stitch visuals and performance data into repeatable reports.

Good comparisons don’t just prove results — they show the recipe. Turn one win into ten by documenting visuals, metrics, and the plausible mechanics behind the lift.

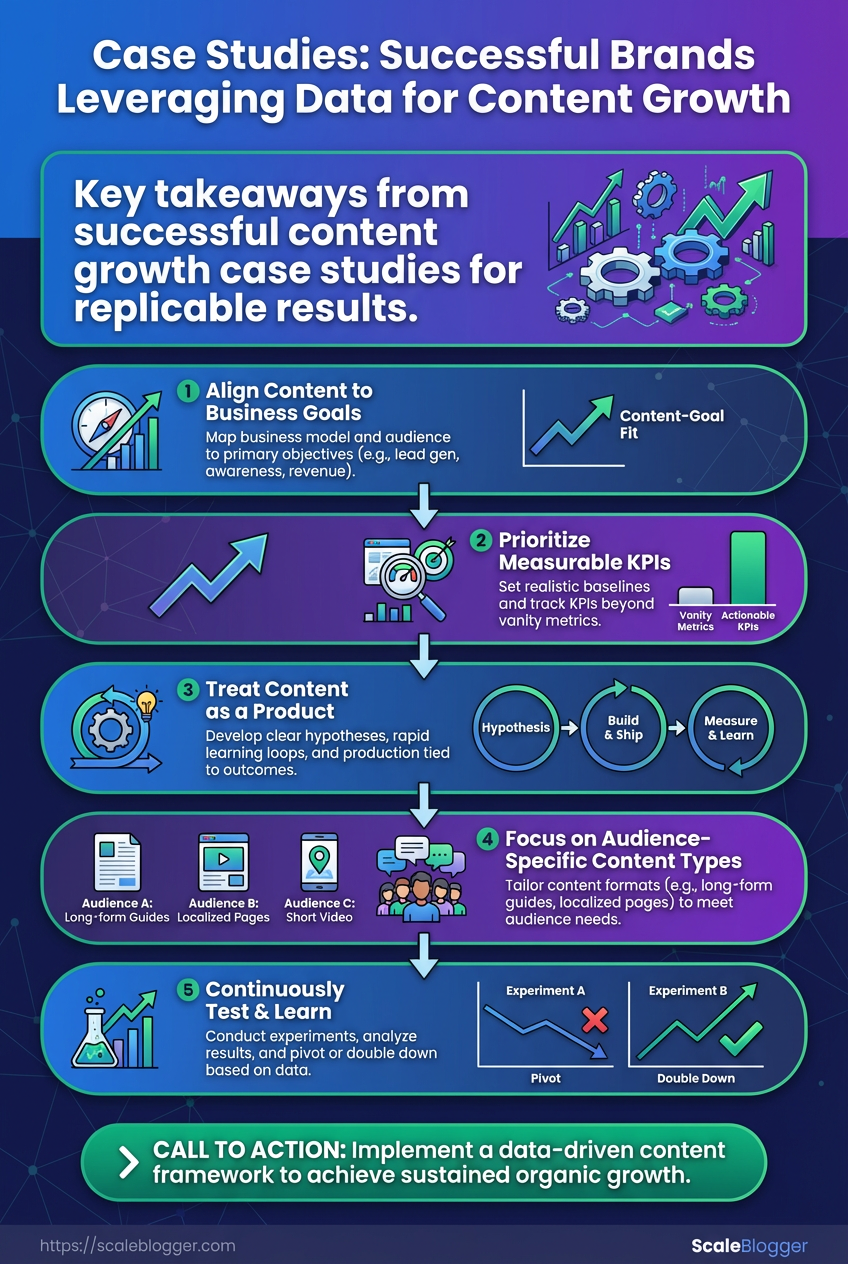

Key takeaways and lessons learned

Treat content strategy like an experiment portfolio: tilt toward moves that give clear, fast signals and stop the vanity plays that eat time. That means running focused tests, measuring them conservatively, and committing budget to automation and a tightly aligned squad that can turn results into repeatable systems. The examples below turn those principles into concrete actions you can start this week.

- Run high signal-to-noise experiments: Prioritize ideas that change measurable behavior (clicks, qualified leads, time on page) rather than aesthetic or purely brand-led updates.

- Measure and attribute conservatively: Use conservative attribution windows and guardrails so early wins don’t get over-credited.

- Invest in tooling and small teams: Automate repetitive work with lightweight tools and keep ownership in a 2–4 person cross-functional team.

Measure and attribute conservatively: Use a 30–90 day attribution window, track assisted conversions separately, and avoid doubling attribution across channels.

High signal experiment definition: A test that can move a primary metric by >10% with a sample size achievable inside 4–8 weeks.

Actionable lessons for content creators

| Recommendation | Expected impact | Required resources | Difficulty (1-5) |
|---|---|---|---|
| Content audit | Identify quick-win pages for traffic lift | 1 content strategist + crawl tools (2 weeks) | 2 |
| Topic prioritization | Faster organic growth on intent terms | 1 SEO + editorial workshop (1 week) | 2 |
| Title/CTA experiments | 5–15% CTR improvement on tested pages | A/B test tool + 2 weeks of traffic | 3 |
| Structured data implementation | Better SERP real estate and rich results | Dev time (1-3 days/page) + structured data templates | 3 |
| Internal linking overhaul | Improved crawl depth & engagement | 1 SEO + automation rules (2–4 weeks) | 3 |

Key insight: The table shows that most high-impact moves are low-to-moderate difficulty but require focused human time plus modest tooling. Starting with the content audit and topic prioritization unlocks the rest of the stack.

Practical next steps

- Run a focused content audit on your top 100 pages and tag low-effort wins.

- Set up title/CTA A/B tests on five pages with steady traffic.

- Allocate a small budget for structured data templates and a 2–4 person team to operationalize findings.

If you want a faster path to automation and a repeatable pipeline, consider tooling that ties audits, tests, and publishing together—platforms like Scaleblogger.com are built for that workflow. These habits turn one-off wins into sustainable growth rather than fleeting spikes.


Replicable playbook & next steps (how readers implement it)

Start the 90-day program as a focused pilot: run a small set of hypothesis-driven content experiments, measure them against clear thresholds, then either scale winners or iterate. Keep ownership tight, sprint cadence weekly, and acceptance criteria binary so decisions don’t stall.

Team: Content lead, SEO specialist, 1–2 writers, analytics owner, engineering/automation owner.

Baseline data: Last 6 months’ traffic, top-performing formats, keyword gap list.

Tools: CMS, GA4 (or equivalent), rank tracking, editorial calendar automation (consider Scaleblogger.com for pipeline automation).

Pilot → validate → scale sequence

- Define 4 test themes (audience intent + 1 conversion signal).

- Produce 6–8 assets across formats (long-form, FAQ, cluster pages).

- Run targeted distribution and measure against thresholds.

- Promote winners into the evergreen calendar and automate publishing.

Weekly sprint structure (owners and rhythm)

- Monday — Planning (Content Lead): Finalize weekly briefs and assign writers.

- Tuesday–Wednesday — Production (Writers): Drafts, on-site SEO, and internal linking.

- Thursday — QA & SEO (SEO Specialist): Optimize, add schema, schedule.

- Friday — Publish & Promote (Analytics Owner): Push live, start distribution, report metrics.

Go / No-Go measurement thresholds (examples to use, adjust to baseline)

- Traffic uplift: New content reaches ≥30% of baseline average page views within 30 days.

- Engagement: Average session duration increases ≥20% for test cohort.

- Lead signal: ≥1.5x conversion rate vs. average for the content type.

- Rank movement: Target keywords move into top 10 within 60 days.
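Because the acceptance criteria are meant to be binary, the four thresholds above translate directly into boolean checks. The sketch below is one way to express them, assuming each is evaluated per asset (the engagement threshold is stated for the test cohort, so applying it per asset is a simplification). The `AssetMetrics` fields and sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AssetMetrics:
    page_views_30d: float            # views in the first 30 days
    baseline_avg_page_views: float   # average page views for existing content
    avg_session_seconds: float
    baseline_session_seconds: float
    conversion_rate: float
    baseline_conversion_rate: float  # average for this content type
    best_rank_60d: int               # best position for the target keyword within 60 days


def evaluate_thresholds(m):
    """Return a pass/fail flag for each Go / No-Go threshold."""
    return {
        "traffic": m.page_views_30d >= 0.30 * m.baseline_avg_page_views,
        "engagement": m.avg_session_seconds >= 1.20 * m.baseline_session_seconds,
        "lead_signal": m.conversion_rate >= 1.5 * m.baseline_conversion_rate,
        "rank": m.best_rank_60d <= 10,
    }


# Hypothetical pilot asset.
pilot_asset = AssetMetrics(
    page_views_30d=400,
    baseline_avg_page_views=1000,
    avg_session_seconds=100,
    baseline_session_seconds=90,
    conversion_rate=0.03,
    baseline_conversion_rate=0.018,
    best_rank_60d=8,
)
results = evaluate_thresholds(pilot_asset)
```

This asset passes traffic, lead signal, and rank but misses engagement (100 seconds against a 108-second bar). How many passes constitute a "go" is a team decision, so the function reports each check separately rather than collapsing them into one verdict.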

Week-by-week checklist for a 90-day pilot including owners and acceptance criteria

| Week | Primary activity | Owner | Expected output | Success criteria |
|---|---|---|---|---|
| Week 1 – Audit | Content & technical audit | SEO Specialist | Gap map, 4 test themes, baseline metrics | Baseline metrics documented; 4 themes approved |
| Week 2 – Topic prioritization | Topic selection & briefs | Content Lead | 6–8 briefs, keyword targets | Briefs scored ≥70 using content scoring framework |
| Week 3-4 – Pilot content | Produce & publish pilot assets | Writers + SEO | 6–8 published assets | Each asset indexed and crawlable; QA passed |
| Week 5-8 – Experimentation & iteration | Promotion & A/B tests | Analytics Owner | Distribution plan, A/B variants, interim reports | ≥1 asset shows ≥30% traffic uplift or meets lead signal |
| Week 9-12 – Scale & handoff | Scale winners & automate | Content Lead + Engineering | Evergreen calendar, automation flows | Winners integrated; automation reduces manual steps ≥50% |

Key insight: The timeline forces fast learning — rapid publishing plus short measurement windows expose which formats and topics generate meaningful signals. That makes scaling decisions low-friction and data-driven.

Practical tips while running the playbook: keep briefs tight and template-driven, automate routine publishing tasks early, and treat the analytics owner as a sprint participant (not a downstream request). When a winner appears, clone the workflow and spin up a cluster around that format or topic.

This approach turns a vague content program into a repeatable engine: run experiments fast, validate with clear thresholds, then scale the processes that proved they work. Try the cadence for one quarter and you’ll have a reproducible roadmap for the next.

Risks, limitations, and attribution transparency

AI-assisted content reduces manual work, but it introduces specific risks that must be named and managed. Expect three families of issues: attribution ambiguity, data quality and sampling flaws, and operational limits that affect timing and control. Each has concrete mitigations that protect trust, SEO value, and legal exposure.

Attribution transparency

- Explicit authorship: When content includes AI-generated sections, mark them clearly on the page and in metadata so editors and readers know which parts were produced or heavily assisted by models.

- Source disclosure: For facts or quoted material, list the original sources or note when a model synthesized information from multiple sources without direct citations.

- Audit trail: Keep a versioned log (editor, AI prompt, model version, confidence_score) for every major content piece to support corrections or compliance requests.
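An audit trail entry can be as small as one immutable record per revision, serialized as a line of JSON. The sketch below uses the fields named in the audit trail item (editor, AI prompt, model version, `confidence_score`); the `content_id` and `recorded_at` fields, the 0 to 1 range for the score, and the sample values are assumptions added for illustration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    content_id: str          # assumed identifier for the article or revision
    editor: str
    ai_prompt: str
    model_version: str
    confidence_score: float  # assumed to be on a 0-1 scale
    recorded_at: str         # ISO 8601 timestamp in UTC


def new_entry(content_id, editor, ai_prompt, model_version, confidence_score):
    """Create a timestamped audit entry, rejecting out-of-range scores."""
    if not 0.0 <= confidence_score <= 1.0:
        raise ValueError("confidence_score must be between 0 and 1")
    return AuditEntry(
        content_id=content_id,
        editor=editor,
        ai_prompt=ai_prompt,
        model_version=model_version,
        confidence_score=confidence_score,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(entry):
    """Serialize one entry as a single JSON line for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)


# Hypothetical values for illustration.
entry = new_entry(
    content_id="post-1042-rev3",
    editor="Jane Editor",
    ai_prompt="Draft an intro for the pillar page on workflow automation.",
    model_version="example-model-v1",
    confidence_score=0.82,
)
log_line = to_log_line(entry)
```

Appending one line per revision to a version-controlled file keeps the log diffable and makes it straightforward to answer a correction or compliance request for a specific piece.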

Data hygiene and sampling notes

Maintain clean inputs and be explicit about limits in any reported findings.

Training-data provenance: Describe whether model outputs were influenced by proprietary data, public web corpora, or internal knowledge bases.

Sampling bias: Call out demographic, geographic, or topical gaps in training data when they might skew tone or coverage.

Operational constraints and timelines

AI pipelines speed production, but they don’t eliminate editorial bottlenecks.

- Define acceptance criteria and SLAs for review cycles.

- Allocate time for subject-matter expert (SME) verification after AI draft generation.

- Schedule periodic retraining or prompt updates tied to content performance signals.

Practical examples and mitigation

- Example — Misattributed quotation: If an AI fabricates a quote, remove it immediately, update the audit trail, and publish a correction notice explaining the change.

- Example — Statistical claim from poor sample: Flag the claim with a data-quality note and link to the underlying dataset or explain sampling limits directly in the text.

Attribution statement template

Use this short pattern on article pages.

Author: Human editor name

AI assistance: Model name and version, plus a one-line description of tasks performed

Data caveats: Short sentence noting known sampling or recency limits

Tools that automate parts of this—like content pipelines that embed audit trail metadata—help enforce consistency. For teams scaling content workflows, consider integrating a solution such as Scaleblogger.com to attach provenance automatically and track performance against quality gates.

Being explicit about where AI helped and where human judgment stood firm preserves reader trust and reduces downstream risk while keeping production fast and measurable.

Conclusion

If the constant churn of ideas without traffic feels familiar, the path forward is straightforward: treat creative briefs, keyword signals and distribution as one continuous system. The article showed how a B2B SaaS engagement shifted from sporadic publishing to a data-driven content cadence, and how a niche ecommerce playbook blended on-site SEO tests with targeted amplification to move the needle. Expect to prioritize topic clustering, iterative experimentation, and clear ownership — those moves repeatedly turned stalled calendars into measurable growth in the examples we walked through.

For practical next steps, start by auditing your top-performing pieces, design a 90-day experiment plan that ties content to conversion goals, and automate repetitive distribution tasks so the team spends time on decisions, not spreadsheets. If speed and scale are priorities, platforms that automate research, optimization and publishing can shorten the learning curve. For teams looking to automate this workflow, explore the tools that manage ideation, testing, and amplification in one place — or try a demo to see the playbook in action: Explore Scaleblogger’s content growth platform. This will help convert the lessons here into repeatable wins.