Marketing teams waste creative momentum when the same message sounds like different brands across channels. As content expands into audio, video, chat, and written formats, keeping brand voice consistent gets harder. That inconsistency costs trust, reduces conversion, and creates extra review cycles.

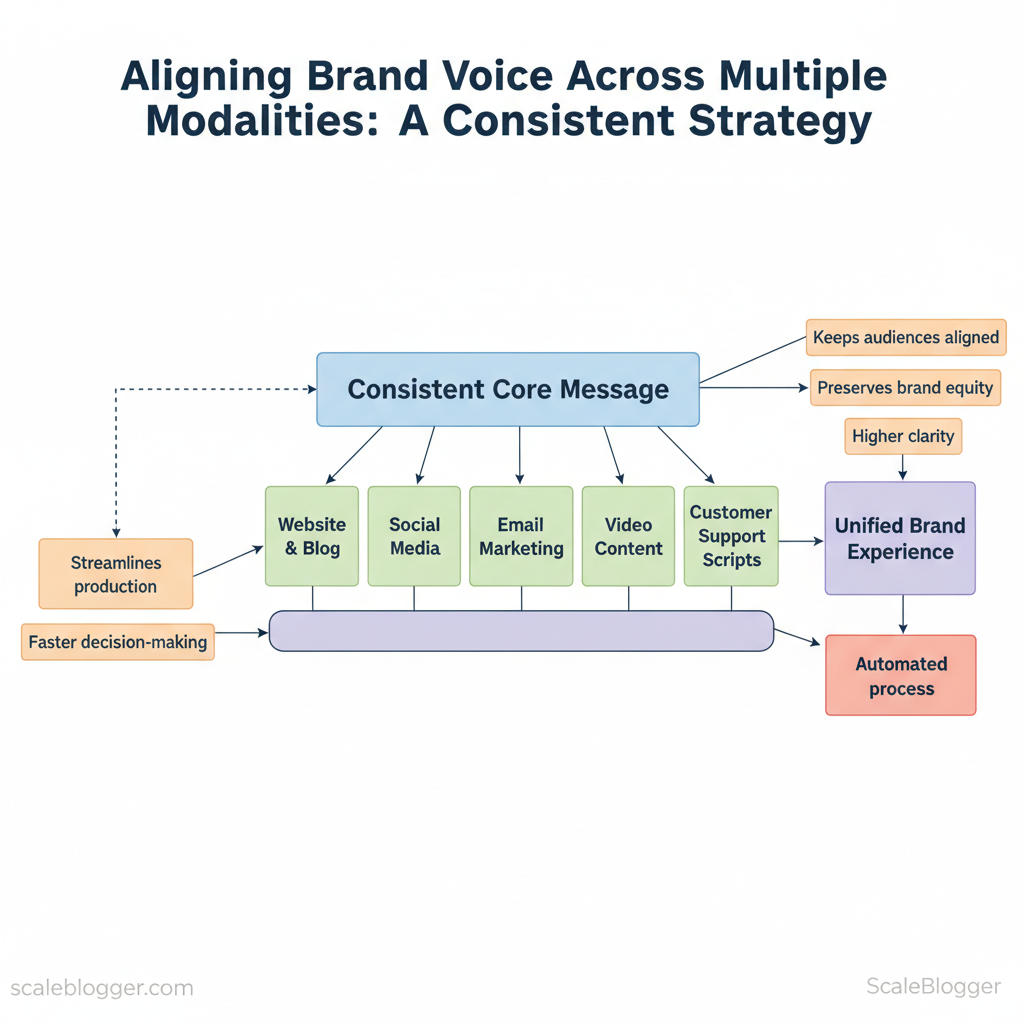

A consistent approach to multi-modal branding streamlines production, keeps audiences aligned, and preserves brand equity. Practical frameworks make it possible to translate a single brand persona into short videos, long-form articles, voice assistants, and social captions without losing nuance. Content coherence becomes an operational advantage rather than a creative burden.

Picture a product launch where the hero message reads the same in a press release, explainer video, and conversational chatbot response; the result is higher clarity and faster decision-making across teams. Picture that process automated so editors and creators spend time on craft, not rework. The following guidance shows how to design that repeatable system, measure its effect, and scale it across channels with minimal friction.

- How to map a single brand persona into distinct modality playbooks

- Templates and checks that preserve tone without stifling format-specific creativity

- Measurement approaches that prove improved content coherence and reduced review time

- Automation tactics to enforce rules across editorial and production workflows

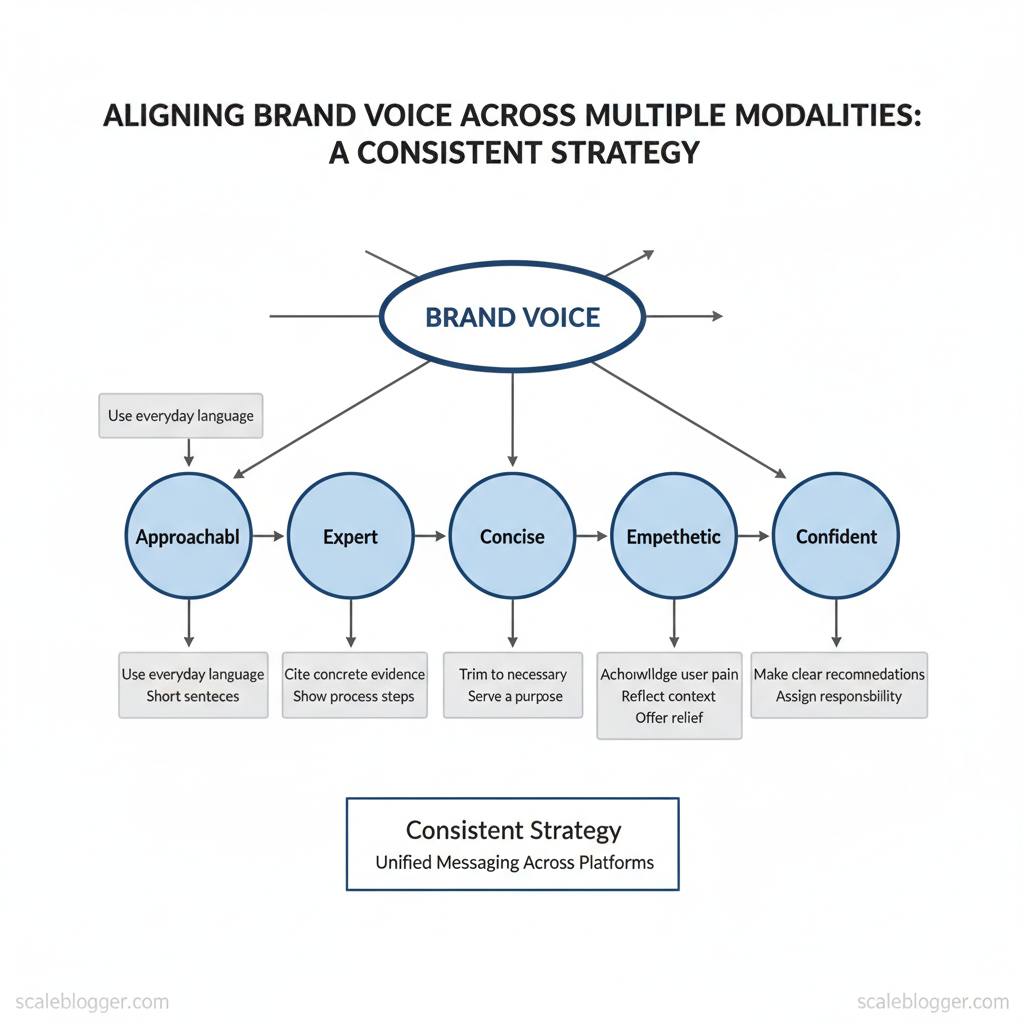

Define the Core Brand Voice Pillars

Start by naming behavioral pillars that map directly to how people should feel and act when they encounter content. These pillars become testable rules applied across formats (blog, video, AI-generated drafts) so every team member — human or automated system — can make the same voice call. Below are five pragmatic pillars, their behavioral definitions, and cross-modal examples you can use immediately.

| Voice Pillar | Behavioral Definition | Text Example (on/off) | Video/Audio Delivery Notes |
|---|---|---|---|
| Approachable | Use everyday language, short sentences, and open invitations to engage. | On: “Try this 3-step checklist today.” Off: “Utilize the following methodology…” | Warm tone, moderate pace, occasional conversational asides. |
| Expert | Cite concrete evidence, show process steps, and anticipate pushback. | On: “We use a three-part test to validate topics.” Off: “We know what works.” | Calm authority, measured cadence, visual overlays with data. |
| Concise | Trim to necessary information; each sentence must serve a purpose. | On: “Publish weekly; promote twice.” Off: “There are many strategies you could consider…” | Tight edits; on-screen captions for key facts. |
| Empathetic | Acknowledge user pain, reflect context, offer actionable relief. | On: “If deadlines are tight, use this template.” Off: “Here’s a perfect solution.” | Softer vocal tone, pause after user-framing statements. |
| Confident | Make clear recommendations and assign responsibility for outcomes. | On: “Do this first, then measure.” Off: “Maybe try something.” | Assertive delivery, decisive cutaways, clear CTA. |

- Prerequisites: stakeholder alignment on brand values, a sample content audit, and access to the CMS and any AI tools.

- Tools: a style guide doc, content scoring templates, and an `AI content pipeline` for automated checks.

- Time estimate: 2–4 days to draft rules; 1–2 weeks to pilot.

Enforcement snippet (copy into style guide):

```text
Rule: Empathetic Tone (Priority: High)
If opening includes user pain → pass
If no user framing in first 50 words → flag for rewrite
```
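
The Empathetic Tone rule above can also run as an automated check. The following is a minimal sketch, not a definitive implementation: the cue phrases in `USER_PAIN_CUES` and the function name `check_empathetic_opening` are assumptions for illustration, and a real style guide would supply its own list of user-framing signals.

```python
import re

# Hypothetical cue phrases that suggest the opening frames a user problem.
# Replace these with the signals from your own style guide.
USER_PAIN_CUES = re.compile(
    r"\b(if you|when you|struggling|deadlines?|tight on|short on|frustrat\w*|overwhelm\w*)\b",
    re.IGNORECASE,
)


def check_empathetic_opening(text: str, window: int = 50) -> str:
    """Return "pass" if a user-framing cue appears in the first `window` words, else "flag"."""
    opening = " ".join(text.split()[:window])
    return "pass" if USER_PAIN_CUES.search(opening) else "flag"
```

A keyword match is a rough proxy for empathy, so treat a "flag" result as a prompt for an editor to look, not as a verdict.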

Use these pillars to configure editorial SOPs and automated checks (for example, in Scaleblogger’s AI-powered content pipeline) so decisions happen at the content layer, not in every meeting. When teams adopt behavioral pillars, content quality and consistency improve while review cycles get shorter.

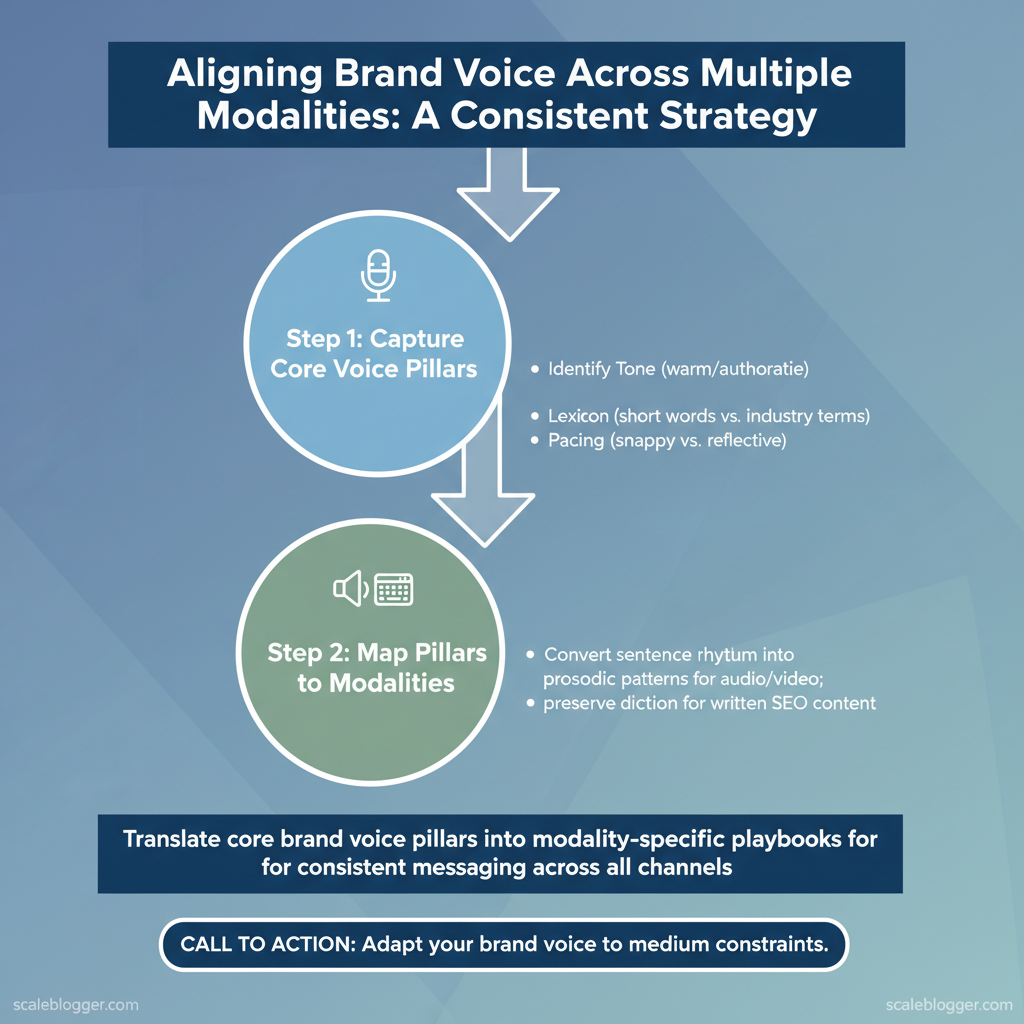

Translate Voice into Modality-Specific Playbooks

Voice translates differently across text, audio, and video; treat voice as a living rulebook that adapts to medium constraints rather than a single template. For written SEO content, preserve diction and cadence inside title tags, meta copy, and paragraph length rules so the brand feels consistent in search results and on-page. For audio and video, convert sentence rhythm into prosodic patterns and visual staging so listeners perceive the same personality through tone, pauses, and visual framing.

Written Content & SEO playbook

- Sentence rhythm: keep sentences 14–18 words average for conversational brands; 18–24 for authoritative technical content.

- Paragraph length: cap at 2–3 sentences for scannability; use one-sentence leads for SERP-friendly intros.

- Title tags/meta: include `primary keyword` + brand voice modifier within 50–60 characters.

- Editor checklist: see table and checklist below.

Audio & Video playbook

- Spoken vocabulary: prefer monosyllabic verbs for clarity, reserve jargon for expert episodes.

- Prosody notes: pause on commas, hold 300–500ms before next sentence for emphasis.

- Host/director cues: stage visual beats every 6–12 seconds; cut to B-roll on concept shifts.

- Sample script snippet:

Table: Voice application across blog elements (headline, lead, body, meta) with examples and checks

| Element | On-Brand Example | Off-Brand Example | Editor Checkpoint |
|---|---|---|---|
| Headline | Scale content without burnout: a practical system | How to post more articles fast | Check: ≤12 words; contains keyword + voice modifier |
| Lead Paragraph | Start with a one-sentence promise, then a 1-line context | Long-winded history of the field | Check: 1–2 sentences; promise-first; active voice |
| Body Paragraph | Short, actionable steps with examples and `code` snippets | Dense theoretical exposition | Check: max 3 sentences; include example or data point |
| Meta Description | Learn an AI content pipeline that frees writers (150 chars) | Article about content strategy and AI tools | Check: 120–155 chars; callout value + verb |
| Call-to-Action | Build your first task pipeline with our template | Click here for more info | Check: imperative verb; benefit-driven; one line |

Editor compliance checklist (quick)

- Voice matches brief: ✓ tone, lexicon, pacing

- SEO-friendly: ✓ title/meta length, keyword in first 100 words

- Readability: ✓ average sentence length within target

- Cross-modality reuse: ✓ script snippet & caption-ready copy included
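
Several of the written-content checks above are mechanical enough to script. The sketch below uses the thresholds quoted in this section (meta description of 120–155 characters, keyword in the first 100 words, average sentence length of 14–18 words for conversational brands). Two things are assumptions: the function names are hypothetical, and the title rule is read as an upper bound of 60 characters.

```python
import re


def average_sentence_length(text: str) -> float:
    """Mean words per sentence, splitting after '.', '!' or '?' followed by whitespace."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)


def check_written_item(title, meta, body, keyword, sentence_range=(14, 18)):
    """Return a dict mapping each check name to True (pass) or False (fail)."""
    first_100_words = " ".join(body.split()[:100]).lower()
    low, high = sentence_range
    return {
        "title_length": len(title) <= 60,  # assumption: 60 is treated as a hard cap
        "meta_length": 120 <= len(meta) <= 155,
        "keyword_in_first_100_words": keyword.lower() in first_100_words,
        "sentence_length": low <= average_sentence_length(body) <= high,
    }
```

For authoritative technical content, pass `sentence_range=(18, 24)` to match the longer target given in the playbook.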

Operationalize Across Teams and Tools

Start by assigning a single owner for the brand voice and making governance operational: designate who approves copy, who trains models, and how the CMS should surface approved assets. This prevents mixed signals, speeds reviews, and keeps AI outputs aligned with brand pillars.

Prerequisites

- Staffing: Head of Content, Content Manager, Brand Product Manager, Legal reviewer, SEO Lead, Content Creators

- Tools: CMS/DAM with tagging, an AI prompt library, task tracker (e.g., Jira), style guide doc

- Time estimate: 2–6 weeks to onboard team + build initial integrations

- Prompt template (aligned to voice pillars): use `voice_pillar: approachable, concise; tone: confident; target: mid-market B2B`, then provide the article brief.

- CMS tagging: tag assets with `voice:approved`, `audience:pm`, `pillar:growth`, and `model_prompt:v1` so editors can filter approved content.

- Automation: schedule nightly checks that validate `voice:approved` presence and flag drafts missing required tags.
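
The nightly tag check described above could look like the following sketch. It assumes drafts can be exported from the CMS as a mapping of draft ID to tag list. That export format, the `REQUIRED_TAG_PREFIXES` tuple, and the function name are all hypothetical; only the tag names themselves come from this section.

```python
# Tag families every draft is expected to carry (taken from the tagging bullet above).
REQUIRED_TAG_PREFIXES = ("voice:", "audience:", "pillar:", "model_prompt:")


def nightly_tag_check(drafts):
    """Return {draft_id: [problems]} for drafts that lack required tags or voice approval.

    `drafts` maps a draft ID to its list of tag strings.
    """
    flagged = {}
    for draft_id, tags in drafts.items():
        problems = [
            f"missing {prefix} tag"
            for prefix in REQUIRED_TAG_PREFIXES
            if not any(tag.startswith(prefix) for tag in tags)
        ]
        if "voice:approved" not in tags:
            problems.append("voice:approved not set")
        if problems:
            flagged[draft_id] = problems
    return flagged
```

Scheduling is left to whatever job runner the team already uses; the function only decides which drafts to surface.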

Troubleshooting tips

- If outputs drift, refresh prompts with recent approved examples and add a short `do_not_say` list.

- If approvals bottleneck, shift minor edits to creators and reserve the Head of Content for final sign-off only.
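
One way to keep prompts consistent is to assemble them from structured fields, so that a `do_not_say` list and recent approved examples can be added without hand-editing every prompt. The sketch below is an assumption about how such a helper might look; `build_prompt` and its parameters are hypothetical, and the output is plain text that would be passed to whichever model the team uses.

```python
def build_prompt(brief, voice_pillars, tone, target, do_not_say=(), approved_examples=()):
    """Assemble a prompt header from voice settings, then append the article brief."""
    lines = [
        f"voice_pillar: {', '.join(voice_pillars)}",
        f"tone: {tone}",
        f"target: {target}",
    ]
    if do_not_say:
        lines.append("do_not_say: " + "; ".join(do_not_say))
    # Recent approved copy gives the model concrete on-brand references.
    for i, example in enumerate(approved_examples, start=1):
        lines.append(f"approved_example_{i}: {example}")
    lines.append("")
    lines.append(f"Brief: {brief}")
    return "\n".join(lines)
```

Storing the field values in the prompt library, rather than finished prompt strings, makes a drift fix a one-line change.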

| Task | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| Voice Pillar Definition | Brand PM | Head of Content | Marketing Leadership, Legal | All Teams |
| Editorial Review | Content Manager | Head of Content | SEO Lead, Creators | Marketing Team |
| AI Prompt Design | Content Ops Specialist | Content Manager | Brand PM, AI Engineer | Creators |
| Brand Training | Head of Content | CMO | HR, Brand PM | All Employees |
| Quarterly Voice Audit | Content Ops Specialist | Head of Content | Analytics, SEO Lead, Legal | Executive Team |

Suggested assets: a one-page style guide, a prompt library, CMS tag schema, and a quarterly audit checklist. Scaleblogger.com’s AI content automation services can accelerate building this pipeline and integrating prompt governance. Understanding these principles helps teams move faster without sacrificing quality.

Testing and Measurement for Cross-Modal Coherence

Cross-modal coherence is measurable: start by combining structured qualitative audits with a small set of quantitative signals, then iterate using lightweight A/B tests. A repeatable audit process and a clear voice scorecard expose where a brand’s tone fractures across blog, video, social, and ads; mapping engagement, retention, and sentiment back to specific voice hypotheses shows which changes actually move the needle.

Qualitative audits and voice scorecards

- Sample broadly: pull five representative items across modalities — long-form blog, short video, social post, ad creative, podcast segment — to avoid sampling bias.

- Prioritize fixes: convert low scores into triaged action items (rewrite, brief template, voice training).

Quantitative signals: engagement, retention, sentiment

- Engagement: map likes, shares, click-through-rate to modality — CTR matters most for ads and social, scroll depth for blogs, watch-through for video.

- Retention: use `time-on-page`, `average watch time`, and episode drop-off points to detect where voice or pacing causes abandonment.

- Sentiment: run short sentiment analyses on comments and transcripts to detect mismatches between intended tone and perceived tone.

Practical examples and artifacts to build

- Voice scorecard template (see table below) for repeat audits.

- Checklist for publishing briefs to ensure consistent lexicon.

- A/B test plan with sample size calc using `baseline_rate` and desired uplift.
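
The sample-size calculation in the A/B test plan can be sketched with the standard two-proportion formula. This is a minimal sketch under stated assumptions: a two-sided test, equal-sized variants, and the desired uplift expressed relative to `baseline_rate`. The function name and the default `alpha` and `power` values are illustrative choices, not figures from this article.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_uplift, alpha=0.05, power=0.80):
    """Visitors needed in each variant to detect the uplift with a two-sided z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)
```

Small uplifts on low baseline rates need large samples, which is worth checking before committing a low-traffic channel to a voice experiment.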

| Content Item | Pillar | Score (0-5) | Notes / Action |
|---|---|---|---|
| Blog Post #1 | Clarity | 4 | Tighten intro, add TL;DR |
| Video Ep. #5 | Authority | 3 | Add expert citation + lower filler words |
| AI-generated Ad Copy | Brand Lexicon | 2 | Replace generic verbs with brand terms; create template |
| Podcast Episode #2 | Empathy | 3 | Shorten monologue; add listener questions |
| Social Post #7 | Conciseness | 4 | Format for scannability; add CTA variant |
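
Once scores are recorded, triage can be automated. The sketch below loads the sample scorecard rows from the table and lists the items that need action, lowest score first. The cutoff of 3 and the function names are assumptions for illustration; pick a threshold that matches your own audit standard.

```python
from statistics import mean

# Rows copied from the sample scorecard: (content item, pillar, score out of 5).
SCORECARD = [
    ("Blog Post #1", "Clarity", 4),
    ("Video Ep. #5", "Authority", 3),
    ("AI-generated Ad Copy", "Brand Lexicon", 2),
    ("Podcast Episode #2", "Empathy", 3),
    ("Social Post #7", "Conciseness", 4),
]


def items_needing_action(rows, max_score=3):
    """Return rows scoring at or below `max_score`, lowest score first."""
    return sorted((row for row in rows if row[2] <= max_score), key=lambda row: row[2])


def average_score(rows):
    """Mean score across all audited items."""
    return mean(row[2] for row in rows)
```

Tracking `average_score` across quarterly audits gives a single trend line, while `items_needing_action` feeds the rewrite backlog.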

When implemented at the workflow level, cohesive testing and measurement keep voice decisions evidence-driven and low-friction.

Scaling Consistency with Automation and AI

Automating consistency means building guardrails that catch deviations before publication and giving writers reusable building blocks so output scales without fragmenting the brand voice. Start with a few lightweight checks that run fast, then add deeper evaluators that blend rules and model-based scoring; pair them with templated content blocks stored and versioned in the CMS so teams can produce at scale while preserving style, SEO intent, and factual reliability.

Automated checks: linters, regex, and prompt evaluators

- High-impact checks to implement first

- Sample regex rules

- Prompt-evaluator logic (simple, actionable)
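
For the regex rules, a minimal sketch follows. The patterns are drawn from the off-brand examples earlier in this article ("Utilize the following methodology…", "Maybe try something.", "Click here for more info", "We know what works."). The rule names and the `lint_copy` function are hypothetical, and a production rule set would be longer and tuned against real drafts.

```python
import re

# Each rule name maps to a compiled pattern for one off-brand habit.
BANNED_PATTERNS = {
    "inflated_verb": re.compile(r"\butiliz(e|es|ed|ing)\b", re.IGNORECASE),
    "hedged_advice": re.compile(r"\bmaybe try\b", re.IGNORECASE),
    "generic_cta": re.compile(r"\bclick here\b", re.IGNORECASE),
    "vague_overclaim": re.compile(r"\bwe know what works\b", re.IGNORECASE),
}


def lint_copy(text):
    """Return a list of (rule_name, matched_text) for every banned pattern found."""
    findings = []
    for name, pattern in BANNED_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.group(0)))
    return findings
```

Regex rules only catch exact surface patterns, which is why they suit banned phrases and leave tone judgments to linters, model-based scoring, or human review.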

Templates and content blocks for rapid scaling

CMS storage and versioning best practices

- Store blocks as reusable `content snippets` with metadata: version, last-tested date, owner.

- Use semantic naming (e.g., `howto_stepper_v2`) and enforce semantic versioning for templates.

- Automate deployment: feature-flag new template versions to a subset of writers first.

- Audit trail: require change reasons and approval stamps for template edits.

- Assign a template owner responsible for quarterly reviews.

- Define update triggers: algorithm drift, brand refresh, or SEO algorithm changes.

- Schedule human review windows for any automated rule that exceeds a 15% false-positive rate.
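
The 15% threshold can be monitored from reviewer decisions. The sketch below assumes each automated flag is later marked by a reviewer as overturned or upheld, and it treats the false-positive rate as the share of a rule's flags that were overturned. Both the log format and that reading of the term are assumptions, and the function name is hypothetical.

```python
def rules_needing_review(review_log, max_rate=0.15):
    """Return {rule_name: rate} for rules whose overturned-flag share exceeds `max_rate`.

    `review_log` maps a rule name to a list of booleans, one per flag raised,
    where True means a reviewer judged the flag to be a false alarm.
    """
    over_threshold = {}
    for rule, outcomes in review_log.items():
        if not outcomes:
            continue  # no flags reviewed yet, so nothing to measure
        rate = sum(outcomes) / len(outcomes)
        if rate > max_rate:
            over_threshold[rule] = rate
    return over_threshold
```

Rules returned here are candidates for a rewrite or a temporary switch to advisory-only mode until the review window closes.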

| Method | Implementation Effort | Accuracy (typical) | Best Use Case |
|---|---|---|---|
| Simple Regex Rules | Low (hours) | 60–80% | Catch specific patterns, banned phrases |
| Open-source Linters (e.g., Vale) | Medium (days) | 70–85% | Style and grammar enforcement with custom rules |
| ML Classifier (custom) | High (weeks–months) | 75–90% | Semantic checks, complex voice alignment |
| Prompt-based Evaluators (LLM scoring) | Medium (days–weeks) | 65–88% | Flexible scoring, contextual alignment checks |
| Human Spot Checks | Variable (ongoing) | 90–99% | Final quality assurance and edge cases |

Consider integrating these capabilities into an AI content automation workflow to scale reliably — for example, use an automated pipeline to run checks, attach template snippets from your CMS, and surface only flagged items for human review. Learn how to scale your content workflow with proven AI systems at Scaleblogger.com.


Governance, Training, and Continuous Improvement

Establish governance and training first, then build rapid feedback loops that turn performance data into voice and process updates. Start with clear ownership, a 90-day training-and-pilot plan, and compact iteration cadences so creators and reviewers can learn fast without interrupting publishing velocity.

Practical prerequisites and tools

- Prerequisite — Executive sponsor: assign a single owner (e.g., Head of Content) with authority for scope and budget.

- Prerequisite — Core tooling: ensure `CMS`, `analytics (GA4)`, and a versioned content repository are available.

- Tool suggestions: Scaleblogger.com for automation pipelines, an editorial calendar, and content scoring; a lightweight project board (`Trello`, `Asana`); and `Slack` for real-time feedback.

90-Day rollout plan and training agenda

| Week | Milestone | Owner | Outcome |
|---|---|---|---|
| Weeks 1-2 | Kickoff & governance RACI | Head of Content | Aligned owners, documented RACI |
| Weeks 3-4 | Role-based training workshops | Training Lead | Staff certified on templates |
| Weeks 5-8 | Pilot content batch (6-8 posts) | Content Lead | Pilot published; baseline metrics |
| Weeks 5-8 | A/B metadata experiments | SEO Manager | Early CTR and ranking data |
| Weeks 9-12 | Scale publishing cadence | Editorial Ops | Automated scheduling, stable cadence |
| Weeks 9-12 | Process documentation | Content Ops | Playbooks for handoffs |
| Quarterly Review | KPI deep-dive | Head of Content | Strategy adjustments, voice updates |
| Quarterly Review | Stakeholder demo | Product/Marketing | Exec buy-in, budget decisions |

Feedback loops and iteration cadence

- Rapid feedback mechanism: use `Slack` + a short Google Form to capture editor and creator feedback within 48 hours of publish.

- Weekly review: editorial triage meeting to surface urgent fixes and prioritize quick wins.

- Biweekly analytics sync: SEO Manager reviews CTR, impressions, and topic cluster performance; assign voice updates.

- Monthly content retro: score content with a `content scoring framework` and prioritize a backlog of voice or structural changes.

Understanding these governance rhythms and rapid feedback cycles lets teams move faster while protecting quality and the brand voice. When implemented correctly, this reduces review overhead and surfaces the highest-value changes for creators.

Conclusion

Keeping a single, recognizable brand voice across channels requires deliberate processes, not hope. Consolidate style rules into a shared playbook, assign clear ownership for voice across teams, and automate repetitive transformations so creators spend time on strategy instead of rewriting. The product-launch scenario from the introduction is the target state: one hero message that reads the same in a press release, an explainer video, and a chatbot response. Teams that reach it can expect faster approvals, fewer tone-related errors, and steadier audience recognition.

If you are wondering where to start, map the high-frequency content types, set three non-negotiable voice signals, and pilot automation on one workflow to measure lift. For teams needing practical templates and rollout steps, the brand voice playbook walks through a phased implementation with sample prompts and governance checkpoints. When you are ready to scale that pilot into production, consider tools that integrate with content pipelines; they reduce context-switching and enforce consistency across platforms. Start by documenting your voice, then automate the most repetitive conversion tasks.