Marketing teams are drowning in content work that feels urgent but delivers little strategic value, bogged down by repetitive drafts, inconsistent voice, and slow approvals. Industry conversations now focus on balancing speed with quality, and on turning automation from a cost saver into a growth engine. That tension defines the AI marketing landscape: more tools, more data, and more choices about where to invest.

Scaleblogger helps teams convert those friction points into scalable workflows, connecting creative direction with AI automation to protect brand equity while accelerating output.

- What common AI content challenges derail execution and how to fix them

- Practical ways to capture measurable opportunities in AI marketing

- Workflow changes that reduce manual hours without sacrificing tone or accuracy

- Metrics to track so automation truly drives business outcomes

Current State of AI in Content Marketing

AI has moved from experimental to operational across the content lifecycle: teams use models to generate drafts, automate repetitive tasks, surface audience signals, and personalize experiences at scale. Today’s focus is less on “can we?” and more on “how do we integrate AI so it speeds delivery without hollowing out quality?” Practical benefits show up as faster production, consistent brand voice, and richer personalization across channels.

- Content ideation & drafting: rapid topic expansion and first drafts, freeing senior writers for strategy.

- SEO optimization: automated keyword research, content briefs, and on-page suggestions.

- Personalization: dynamic content modules and copy rewritten for specific audience segments.

- Distribution & testing: automated scheduling, multivariate copy testing, and optimized send times.

- Analytics & prediction: forecasting performance and surfacing underused opportunities.

Tools typically integrate through `API` calls, webhooks, or platform plugins. Larger platforms offer native connectors to CMS, analytics, and marketing platforms, while point solutions usually depend on middleware such as `Zapier` or custom scripts. Assembling many point tools gets you best-of-breed features but increases integration overhead; integrated platforms reduce orchestration work but can limit flexibility.

| Use Case | Typical Benefit | Example Industry | Adoption Level (Low/Medium/High) |
|---|---|---|---|
| Content generation (blog, product copy) | Faster draft production, consistent tone | Tech SaaS, e-commerce | High |
| Personalization (dynamic content) | Higher engagement, better conversion | Media, retail | Medium-High |
| SEO optimization (topic/research) | Improved discoverability, faster briefs | Agencies, B2B | High |
| Content distribution (scheduling, A/B testing) | Better reach, optimized timing | Publishing, e-commerce | Medium |
| Analytics & insights (performance prediction) | Smarter prioritization, ROI forecasting | Enterprise marketing | Medium |

If you want help wiring these pieces together, consider workflows that start with AI-driven briefs and feed into an orchestration layer. Scaleblogger can help you build that pipeline and measure lift. Implemented well, AI accelerates work and lets creators focus on strategy and quality.

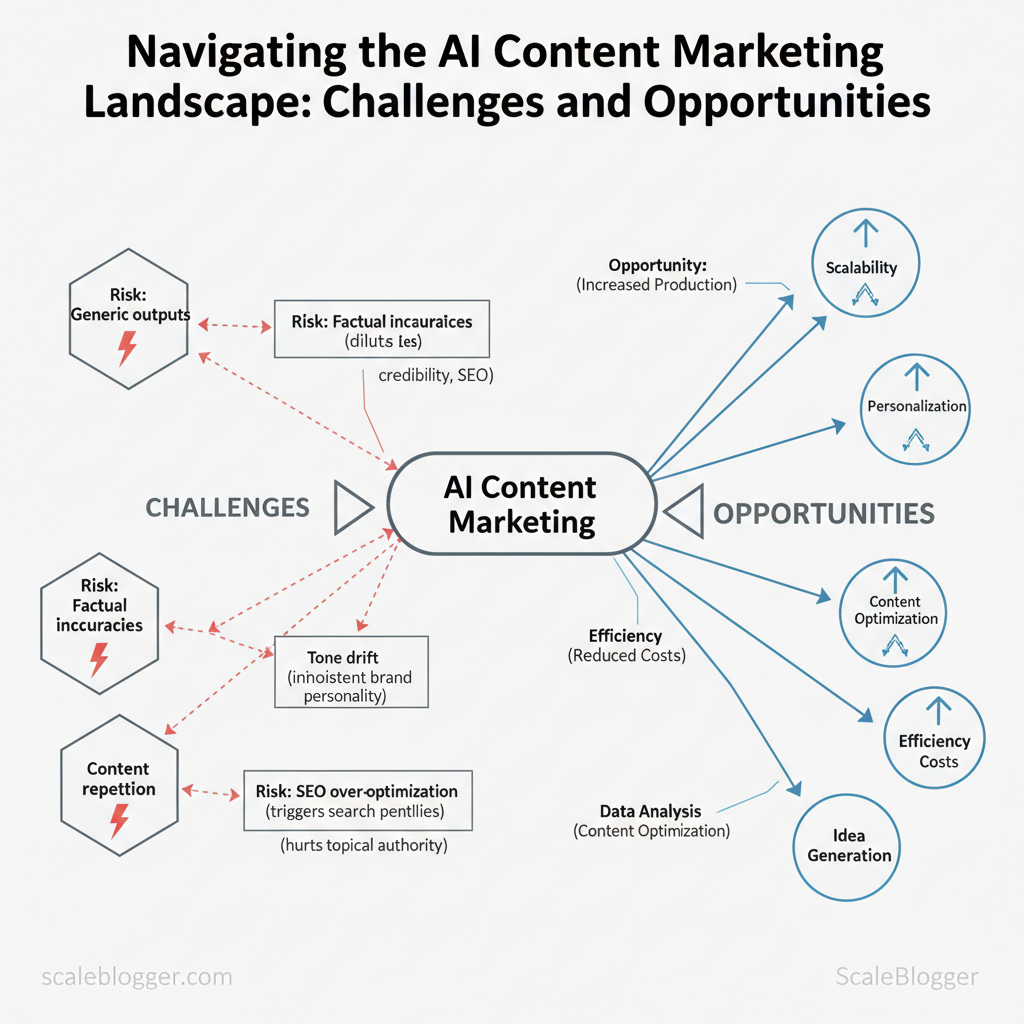

Key Challenges in AI-Driven Content

AI can accelerate production, but it introduces specific risks that erode trust if they are not managed. Models tend toward safe, high-probability phrasing, which produces generic, bland content that dilutes brand differentiation. They also hallucinate facts or misattribute sources, and the resulting inaccuracies damage credibility and SEO performance. Addressing these problems requires both process controls and editorial discipline.

- Generic outputs: Models favor common patterns; result: copy that sounds like every other page. Watch for repeated sentence structures and catchphrases across posts.

- Factual inaccuracies: LLMs can invent details, and model output is no substitute for primary sourcing. Verify dates, numbers, and quotes.

- Tone drift: AI may fail to maintain your brand’s personality across formats; compare against brand voice guidelines for each asset.

- SEO over-optimization: Prompting for heavy keywords can produce keyword-stuffed content that triggers search penalties.

- Content repetition: Reused prompts can produce articles that echo earlier pieces, which weakens topical authority and muddles internal linking.

In practice, content quality issues like these are a common reason AI-generated pages fail to rank or convert.

Ethical, Legal, and Compliance Concerns

- Copyright risks: Models trained on public text can reproduce copyrighted phrasing; avoid verbatim lifts and run similarity checks.

- Attribution and IP: When content synthesizes third-party ideas, decide whether citation or permission is required.

- Regulatory exposure: In regulated verticals (health, finance, legal), unvetted claims can create liability—apply `human_signoff` for any compliance-sensitive claim.

- Synthetic-media ethics: Deepfakes, AI images, or fabricated testimonials carry reputational risk; disclose synthetic assets where appropriate.

- Privacy and data handling: Feeding proprietary data or PII into third-party APIs can violate contracts or laws; use on-premises models or redact sensitive data before sending it.
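As a rough illustration of the redaction option, the sketch below masks email addresses and phone-like numbers before text is sent to an external API. The function name `redact_pii` and both regex patterns are hypothetical. They miss names, addresses, and account IDs, and the phone pattern will also catch some dates, so treat this as a pre-filter that sits alongside contractual and legal review, not as a compliance control.

```python
import re

# Hypothetical patterns: they cover common email and phone formats only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Optional "+", then 9+ characters of digits, spaces, dots, dashes, or parentheses,
# starting and ending with a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace emails and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


if __name__ == "__main__":
    brief = "Follow up with jane.doe@example.com or call +1 (555) 010-2345."
    print(redact_pii(brief))  # Follow up with [EMAIL] or call [PHONE].
```

Running redaction before any prompt leaves your systems keeps the control in one place, whichever model vendor sits downstream.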

| Failure Mode | Likely Cause | Quick Remediation | Monitoring Metric |
|---|---|---|---|
| Generic/vanilla content | Prompt/temperature defaults; over-reliance on templates | Add specificity to prompts; inject brand examples | Unique phrasing rate (%) |
| Factual inaccuracy | Model hallucination; no source verification | Fact-check pipeline; mark claims for review | Fact-check fail count |
| Tone mismatch with brand | Missing voice guidelines in prompts | Provide tone examples; enforce style checklist | Brand voice compliance (%) |
| Over-optimization for SEO (keyword stuffing) | Prompted emphasis on keywords | Rebalance prompts; use semantic keywords | Keyword density outliers |
| Repetition across articles | Reuse of prompts; no central content inventory | Centralize topic/asset index; diversify prompts | Duplicate content instances |
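Two of the monitoring metrics above, duplicate content instances and keyword density outliers, can be approximated with plain text processing. The sketch below is one possible approach and assumes each article is available as plain text keyed by an ID. It compares 3-word shingles with Jaccard similarity to flag near-duplicates, and it measures how much of each article a target phrase occupies. The function names and the 0.5 similarity and 3% density thresholds are illustrative assumptions, not industry standards. Tune them against pages your editors have already judged.

```python
import re
from itertools import combinations


def words(text: str) -> list[str]:
    """Lowercase word tokens with punctuation stripped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """All overlapping k-word sequences in the text."""
    w = words(text)
    return {tuple(w[i:i + k]) for i in range(len(w) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Intersection size over union size; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def duplicate_pairs(articles: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Article ID pairs whose shingle similarity meets the threshold."""
    sh = {aid: shingles(body) for aid, body in articles.items()}
    pairs = []
    for a, b in combinations(sorted(sh), 2):
        score = jaccard(sh[a], sh[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the text's words taken up by occurrences of the keyword phrase."""
    w, kw = words(text), words(keyword)
    if not w or not kw:
        return 0.0
    n = len(kw)
    hits = sum(1 for i in range(len(w) - n + 1) if w[i:i + n] == kw)
    return hits * n / len(w)


def density_outliers(articles: dict[str, str], keyword: str, max_density: float = 0.03) -> list[str]:
    """IDs of articles where the keyword density exceeds max_density."""
    return [aid for aid, body in sorted(articles.items())
            if keyword_density(body, keyword) > max_density]
```

Counting the pairs returned by `duplicate_pairs` over your content inventory gives a number for the duplicate-content metric, and the length of `density_outliers` for each target keyword gives the density metric. Pairwise comparison is quadratic, so very large inventories would need an indexing technique such as MinHash instead.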

Understanding these principles helps teams move faster without sacrificing quality. When you pair automation with clear review gates and measurable metrics, AI becomes a force multiplier rather than a liability.

Opportunities AI Unlocks for Content Marketers

AI changes work from “write more” to “write smarter.” Teams can automate repetitive tasks across the content lifecycle, freeing creators for strategy and high-impact storytelling while scaling experiments that reveal what actually moves metrics. Two areas deliver the clearest, fastest value: efficiency at scale (from briefing to distribution) and deeper personalization that raises engagement.

- Faster topic discovery — AI speeds up `keyword` and trend scans so teams can validate ideas in hours instead of days.

- Draft generation — Models produce first drafts, outlines, and meta content, cutting initial writer time by 40–70% in typical workflows.

- SEO automation — Automated headline testing, on-page suggestions, and schema generation improve indexability without manual tagging.

- Localization at scale — Machine translation + cultural tuning lets brands publish region-specific variants quickly.

- Automated distribution — Scheduling, A/B copy variations, and channel-specific formatting reduce operational overhead and keep cadence steady.

- Behavioral personalization — Use session data and content consumption signals to serve variant headlines or CTAs.

- CRM-driven content — Merge `CRM` attributes to tailor content for lifecycle stage, industry, or account value.

- Segmented recommendations — AI-driven recommendation engines increase time-on-site and downstream conversions.

- Adaptive landing pages — Dynamically swap hero copy based on referrer or ad creative.
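The adaptive landing page and CRM-driven patterns above both come down to choosing a variant from visitor context. The sketch below swaps in simple fixed rules for the AI-driven selection the text describes. It assumes the page receives a referrer URL and, for known contacts, a lifecycle stage from the CRM. The field names, rule tables, and copy are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass
class VisitorContext:
    referrer: str = ""                     # full referrer URL; may be empty
    lifecycle_stage: Optional[str] = None  # hypothetical CRM field, e.g. "lead"


# Hypothetical copy variants; in practice these would live in the CMS.
HERO_BY_STAGE = {
    "lead": "Get the playbook your peers are using",
    "customer": "See what's new in your plan",
}
HERO_BY_REFERRER = {
    "www.linkedin.com": "Built for B2B content teams",
    "www.google.com": "Publish better content, faster",
}
DEFAULT_HERO = "Scale your content without losing your voice"


def pick_hero(ctx: VisitorContext) -> str:
    """Choose hero copy: CRM stage first, then referrer domain, then a default."""
    # Assumption: a known lifecycle stage is a stronger signal than the referrer.
    if ctx.lifecycle_stage in HERO_BY_STAGE:
        return HERO_BY_STAGE[ctx.lifecycle_stage]
    domain = urlparse(ctx.referrer).netloc.lower()
    return HERO_BY_REFERRER.get(domain, DEFAULT_HERO)


if __name__ == "__main__":
    print(pick_hero(VisitorContext(referrer="https://www.linkedin.com/feed/")))
```

Fixed rules are easy to audit and make a reasonable baseline. A recommendation model can later replace `pick_hero` without changing how pages call it.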

| Workflow Stage | Automation Example | Typical Outcome | Expected Impact (Low/Med/High) |
|---|---|---|---|
| Topic research | Trend scraping, `keyword clustering` | Faster idea validation, prioritized briefs | High |
| Draft generation | Long-form draft + outlines | 50–70% faster first drafts | High |
| SEO optimization | Automated meta, schema, internal linking suggestions | Improved indexability, faster QA | Med |
| Localization | Neural MT + tone adjustments | Multi-market variants with lower cost | Med |
| Distribution/scheduling | Channel-specific formatting, A/B copy scheduling | Consistent cadence, higher engagement | High |

If you want to operationalize these gains, start by mapping time-intensive tasks and piloting small automation wins. When implemented well, AI-driven workflows let teams spend more time creating work that actually grows audiences and revenue.


Practical Governance and Workflow Strategies

When teams bring AI into content production, governance needs to be explicit: who owns decisions, how outputs are verified, and where automated steps stop and human judgment starts. Define an editorial governance framework that assigns clear roles, enforces labeling and approval gates, and embeds launch and audit checklists so AI becomes a predictable part of the content pipeline rather than a black box.

- Editorial owner: Designate a single accountable person for topic strategy and final sign-off.

- AI operator: Writes and maintains prompts and templates, and documents model versions.

- Fact-checker / SME: Verifies claims, sources, and technical accuracy.

- Legal reviewer: Flags IP, regulatory, and brand-risk items.

- Analytics owner: Tracks performance and feeds learnings back into the model prompts.

Teams that define explicit human checkpoints are better positioned to catch factual errors and brand risk while keeping throughput high.

Quality assurance and measurement must be baked into the workflow. Track both content health and process health so improvements are measurable.

- Human-in-the-loop checkpoints: initial prompt review, pre-publish SME check, monthly content audits.

- Iteration cadence: weekly QA for new content, monthly performance review, quarterly model/prompt tuning.

- Testing methodologies: A/B test headlines and meta descriptions, experiment with content length/format, use holdout pages to measure lift.
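For headline A/B tests and holdout comparisons, a common check is the relative lift in click-through rate plus a two-proportion z-test. The sketch below assumes clicks and impressions are counted per variant and that visitors were randomly assigned. The function name is hypothetical, and teams running many simultaneous tests may prefer a statistics library or a sequential-testing method.

```python
from math import erf, sqrt


def lift_and_p_value(clicks_a: int, views_a: int, clicks_b: int, views_b: int):
    """Relative lift of variant B over control A, with a two-sided two-proportion z-test.

    Returns (lift, z, p_value); lift is a fraction, e.g. 0.3 means +30%.
    """
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    if p_a == 0:
        raise ValueError("control rate is zero; relative lift is undefined")
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function; two-sided tail probability.
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    lift = (p_b - p_a) / p_a
    return lift, z, p_value


if __name__ == "__main__":
    # Made-up counts: control headline vs. new headline, 1,000 impressions each.
    lift, z, p = lift_and_p_value(100, 1000, 130, 1000)
    print(f"lift={lift:+.0%} z={z:.2f} p={p:.3f}")
```

With these made-up counts the lift is +30% and p lands near 0.035. With a tenth of the traffic, the same lift would not clear a 0.05 threshold, which is why the sample size per variant should be fixed before the test starts.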

| Governance Element | Freelancer/Small Business | Medium Business | Enterprise |
|---|---|---|---|
| Editorial owner | Solo founder or editor (single person) | Content manager + rotating owners | Dedicated head of content + editorial councils |
| Fact-checking process | Self-checks, occasional SME hire | Internal reviewer team, freelance SMEs | Centralized QA team, third-party verification |
| Legal compliance review | Ad hoc; external lawyer (billed hourly) when needed | Standard checklist + legal team liaison | Embedded legal review, compliance workflow, approvals |
| Tooling & automation | Off-the-shelf tools (ChatGPT free/paid) | Mid-tier tools (Jasper, SurferSEO) + automation scripts | Enterprise platforms, CMS integrations, CI/CD for content |
| Audit frequency | Quarterly personal review | Monthly audits + spot checks | Continuous monitoring, quarterly formal audits |

When implemented correctly, this approach reduces overhead because most decisions can be made within the team rather than escalated.

Implementation Roadmap and Best Practices

Start by treating the next 90 days as an experiment: set precise objectives, limit scope, and instrument everything so decisions are data-driven. A focused pilot reduces risk while revealing whether automation and AI genuinely lift traffic, conversion, or efficiency.

| Week Range | Objective | Key Tasks | Success KPI |
|---|---|---|---|
| Weeks 1–2 | Align goals and baseline | Audit top 30 pages, set KPI targets, select tools, train team | Baseline traffic, avg. time on page, `content scoring` baseline |
| Weeks 3–6 | Create & publish pilot content | Produce 8–12 AI-assisted posts, optimize metadata, schedule publishing | +10% organic sessions on pilot pages, CTR uplift |
| Weeks 7–10 | Measure, iterate, and expand formats | A/B headline tests, repurpose top post into newsletter/short-form, refine prompts | Improved avg. rank for target keywords, lower bounce rate |
| Weeks 11–12 | Consolidate learnings & decision checkpoint | Run ROI model, document workflows, train additional staff | Clear ROI signal (e.g., lower CAC or higher LTV), scale decision (yes/no) |
| Post-pilot review | Plan scale and governance | Roadmap for 6–12 month rollout, governance rules, editorial guardrails | Template-ready processes, projected monthly content throughput |

Work through the phases in the table in order, using each phase's success KPI to decide whether to move on to the next.

Operational best practices and scaling signals

- Consistency signals to scale: sustained KPI improvement across 4+ weeks, decreasing edit time per draft, and stable brand voice scores.

- Staffing considerations: hire or reskill for roles in `prompt engineering`, SEO editing, and analytics rather than only expanding writer headcount.

- Tooling checklist: automation for scheduling, version control, SEO integrations, and a central content dashboard. Consider combining vendor tools with services like Scaleblogger.com to accelerate workflow automation and benchmarking.

- Maintain brand differentiation: lock in a voice guide, require a unique value proposition section in briefs, and keep a human-in-the-loop for all first drafts to preserve nuance.

- Continuous optimization: set weekly retros, rotate experiments (formats, CTAs), and run quarterly content refreshes.
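Once weekly numbers are logged, the scaling signals in the first bullet can be checked mechanically. The sketch below is minimal and assumes one reading per week for the KPI, average edit minutes per draft, and a brand voice score. The function name, the four-week window, and the 5-point voice tolerance are hypothetical choices that mirror the bullet rather than a standard.

```python
from typing import Sequence


def ready_to_scale(
    weekly_kpi: Sequence[float],
    baseline: float,
    weekly_edit_minutes: Sequence[float],
    weekly_voice_scores: Sequence[float],
    min_weeks: int = 4,
    voice_tolerance: float = 5.0,
) -> bool:
    """True only if all three scaling signals hold over the last `min_weeks` weeks."""
    series = (weekly_kpi, weekly_edit_minutes, weekly_voice_scores)
    if any(len(s) < min_weeks for s in series):
        return False  # not enough history to call the trend sustained
    kpi = weekly_kpi[-min_weeks:]
    edits = weekly_edit_minutes[-min_weeks:]
    voice = weekly_voice_scores[-min_weeks:]
    sustained_kpi = all(v > baseline for v in kpi)      # every recent week beats baseline
    edit_time_falling = edits[-1] < edits[0]            # edit time lower than at window start
    voice_stable = max(voice) - min(voice) <= voice_tolerance
    return sustained_kpi and edit_time_falling and voice_stable
```

Requiring all three signals at once is deliberately conservative. A KPI gain that comes with rising edit time or drifting voice is a reason to keep iterating, not to scale.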

Simple KPI template
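The roadmap does not spell out the template's fields, so the following is a hypothetical minimal structure: a baseline from weeks 1–2, a target, and the latest reading, plus a direction flag for metrics such as bounce rate where lower is better. The class and field names are illustrative, and the sample numbers in the usage note are made up.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    name: str
    baseline: float             # measured during weeks 1-2
    target: float               # goal set during the baseline phase
    current: float              # latest reading
    higher_is_better: bool = True

    def pct_change(self) -> float:
        """Percent change from baseline to the latest reading."""
        if self.baseline == 0:
            raise ValueError("baseline must be non-zero")
        return (self.current - self.baseline) / self.baseline * 100

    def on_track(self) -> bool:
        """Whether the latest reading has reached the target in the right direction."""
        if self.higher_is_better:
            return self.current >= self.target
        return self.current <= self.target


def report(kpis: list[KPI]) -> list[str]:
    """One status line per KPI, suitable for a weekly pilot update."""
    lines = []
    for k in kpis:
        status = "on track" if k.on_track() else "behind"
        lines.append(f"{k.name}: {k.pct_change():+.1f}% vs baseline ({status})")
    return lines
```

For example, `KPI("Organic sessions (pilot pages)", 2000, 2200, 2300)` reports "+15.0% vs baseline (on track)" against the +10% target from the roadmap, while a bounce rate that moved from 62 to 60 against a target of 58 reports as behind.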

When implemented correctly, the roadmap makes it straightforward to decide whether to scale and how to keep your brand distinct as output grows.

Future Outlook and Strategic Recommendations

AI and automation will continue reshaping how teams plan, create, and measure content. Expect tools to move from assistance to orchestration: models will recommend topics, drafts, distribution windows, and even experiment designs. At the same time, regulatory scrutiny and platform policy changes will push teams to add provenance, human review, and stronger ethical guardrails. Early movers will treat AI as a systems-level capability — not a single tool — and redesign processes around rapid, safe iteration.

Emerging trends to watch

- Orchestrated pipelines: Platforms that connect `topic discovery → draft generation → SEO scoring → scheduling` will reduce manual handoffs.

- Explainable outputs: Demand for traceability and edit history will rise as compliance and trust concerns grow.

- Content personalization at scale: Dynamic templates and modular content blocks let teams serve multiple intents without rewriting.

- Policy-driven publishing: Content governance rules will be enforced programmatically (metadata flags, embargo controls).

- Human-in-the-loop oversight: Editors will shift from line-editing to quality assurance, ethical review, and strategy.
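Policy-driven publishing can be as simple as a gate that reads a draft's metadata before the CMS schedules it. The sketch below assumes drafts carry boolean flags and an optional timezone-aware embargo timestamp. The key names (`fact_checked`, `human_signoff`, `legal_approved`, `embargo_until`, and so on) are hypothetical, chosen to mirror the review gates and disclosure practices described earlier.

```python
from datetime import datetime, timezone
from typing import Any

# Hypothetical metadata keys; map them to whatever fields your CMS exposes.
REQUIRED_FLAGS = ("fact_checked", "human_signoff")


def publish_blockers(meta: dict[str, Any], now: datetime) -> list[str]:
    """Return the reasons a draft cannot go live yet; an empty list means it may publish."""
    blockers = [f"missing {flag}" for flag in REQUIRED_FLAGS if not meta.get(flag)]
    if meta.get("compliance_sensitive") and not meta.get("legal_approved"):
        blockers.append("legal review required")
    embargo = meta.get("embargo_until")  # expected to be a timezone-aware datetime
    if embargo is not None and now < embargo:
        blockers.append(f"embargoed until {embargo.isoformat()}")
    if meta.get("synthetic_media") and not meta.get("disclosure_added"):
        blockers.append("synthetic media not disclosed")
    return blockers


if __name__ == "__main__":
    draft = {
        "fact_checked": True,
        "compliance_sensitive": True,
        "embargo_until": datetime(2030, 1, 1, tzinfo=timezone.utc),
    }
    for reason in publish_blockers(draft, datetime.now(timezone.utc)):
        print(reason)
```

Returning every blocker at once, rather than stopping at the first, gives editors the full list of what a draft still needs in a single pass.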

Five strategic recommendations

| Recommendation | Rationale | First Step | Expected Impact |
|---|---|---|---|
| Run a controlled 90-day pilot | Reduces risk, produces measurable ROI | Define KPIs + select 8–12 pieces | Faster validation; lower cost of failure |
| Implement editorial governance | Ensures compliance and brand safety | Create approval matrix document | Reduced legal risk; consistent quality |
| Invest in training & human oversight | Humans catch nuance and bias | Run prompt-design workshops | Higher content accuracy; trust maintained |
| Measure and iterate with experiments | Data-driven scaling avoids guesswork | Launch A/B tests on headlines/formats | Continuous improvement; lift detection |
| Monitor legal and ethical developments | Regulations evolve quickly | Assign policy owner + monthly digest | Proactive compliance; fewer surprises |

Conclusion

We covered how to stop firefighting content and start running a repeatable, measurable program: streamline briefs, automate drafts, enforce a single brand voice, speed approvals, and measure impact. Automated templates and lightweight review gates tend to shorten turnaround and make ROI easier to see, largely by cutting revision cycles and raising publish velocity. Three practical takeaways to act on now:

- Standardize briefs: A short, mandatory brief cuts back-and-forth and aligns stakeholders quickly.

- Automate drafts and checks: Use automation for first drafts and editorial checks to free senior writers for strategy.

- Measure what matters: Track lead signals tied to content, not just output metrics.

If you want a low-risk way to test this approach, start small with one channel and one workflow to prove impact. For teams looking to automate these workflows and scale faster, a practical next step is to start a pilot with Scaleblogger.