You know the moment: a homepage reads like a resume, a social post feels hollow, and neither sparks a real connection. That gap often traces back to a poorly articulated brand mission that sounds strategic on paper but doesn’t trigger recognition or trust in the people you want to reach.

A crisp mission statement isn’t a slogan to polish—it’s a narrow promise that guides decisions and creates shorthand for audiences. When it lands, customers sense alignment before they read a single case study, and creators stop wasting time on content that doesn’t move the needle.

Getting there means stripping jargon, anchoring language in real audience struggles, and testing phrasing until it behaves like a filter for every piece of content. The result is not just clearer messaging but sustained brand authenticity that shows up in search, socials, and customer conversations.

What You’ll Need (Prerequisites)

Start with clarity about who the content is for and what the brand stands for. Successful content pipelines lean on three inputs: a clear audience profile, a concise brand mission and assets, and a small, accountable stakeholder group that can approve direction quickly. Collecting these up front saves weeks of rework and keeps AI-generated copy aligned with actual business goals.

Audience research: At minimum, a one-paragraph persona or GA4 access to view behavior signals.

Current brand assets: Taglines, voice guidelines, mission statement drafts, logo files, and a short list of brand dos/don’ts.

Stakeholder list: Name, role, approval scope — typically founder, marketing lead, product lead.

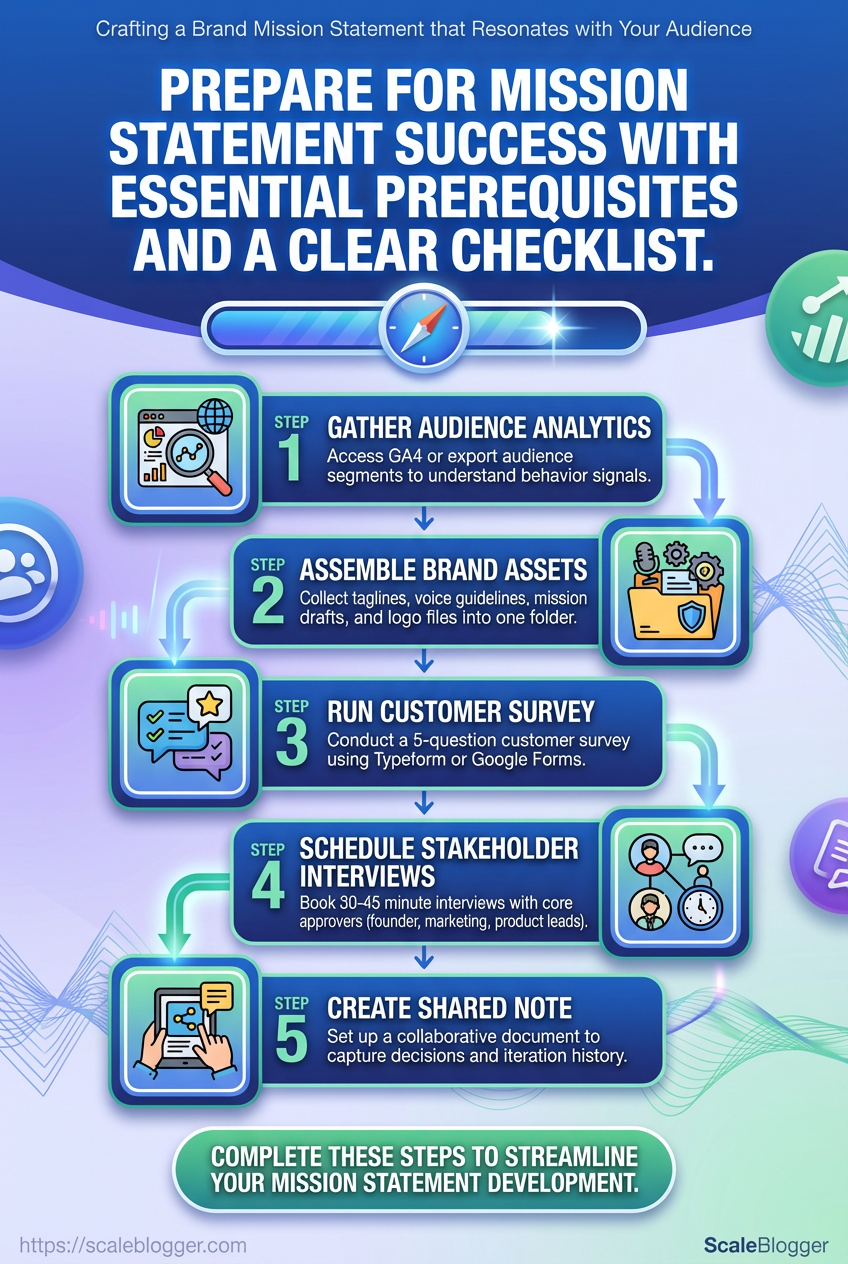

Follow this quick checklist to prepare:

- Gather analytics access (GA4) or export audience segments from your analytics tool.

- Assemble brand assets into a single collaborative folder (BrandAssets/).

- Run a five-question survey for customers using a simple form.

- Schedule 30–45 minute stakeholder interviews with the three core approvers.

- Create a shared note in your preferred tool to capture decisions and iteration history.

- Use a collaborative doc for shared edits and version control.

- Keep one clean copy of the mission statement for reference.

- Record stakeholder decisions as timestamped notes.

Quick reference of required tools and why each matters

| Tool/Resource | Purpose | Estimated Cost | When to Use |

|---|---|---|---|

| Google Analytics (GA4) | Audience behavior, traffic sources, conversion signals | Free | Initial research, monthly check-ins |

| Typeform / Google Forms | Collect qualitative feedback from customers | Typeform: Free tier / $25+ month; Google Forms: Free | Pre-interview surveys, validation |

| Google Docs / Notion | Collaborative content briefs, approval tracking | Free / $4+ user month | Central workspace for assets and notes |

| Brand values inventory (spreadsheet) | Map values to messaging examples | Free (internal template) | During brand workshop and brief creation |

| Stakeholder interview guide (template) | Standardize interviews and capture decisions | Free (internal template) | Before stakeholder interviews |

Key insight: The mix of analytics, quick surveys, and a single collaborative doc creates a repeatable intake flow. Treat templates (brand inventory and interview guide) as living files so future cohorts reuse them easily.

Having these inputs in place turns content planning from guesswork into a predictable process. Once the folder and templates exist, the team can iterate much faster and measure impact against the mission. Consider automating parts of this intake later with tools like Scaleblogger.com to speed up the pipeline and reduce manual handoffs.

Step-by-step Process Overview (Time & Difficulty)

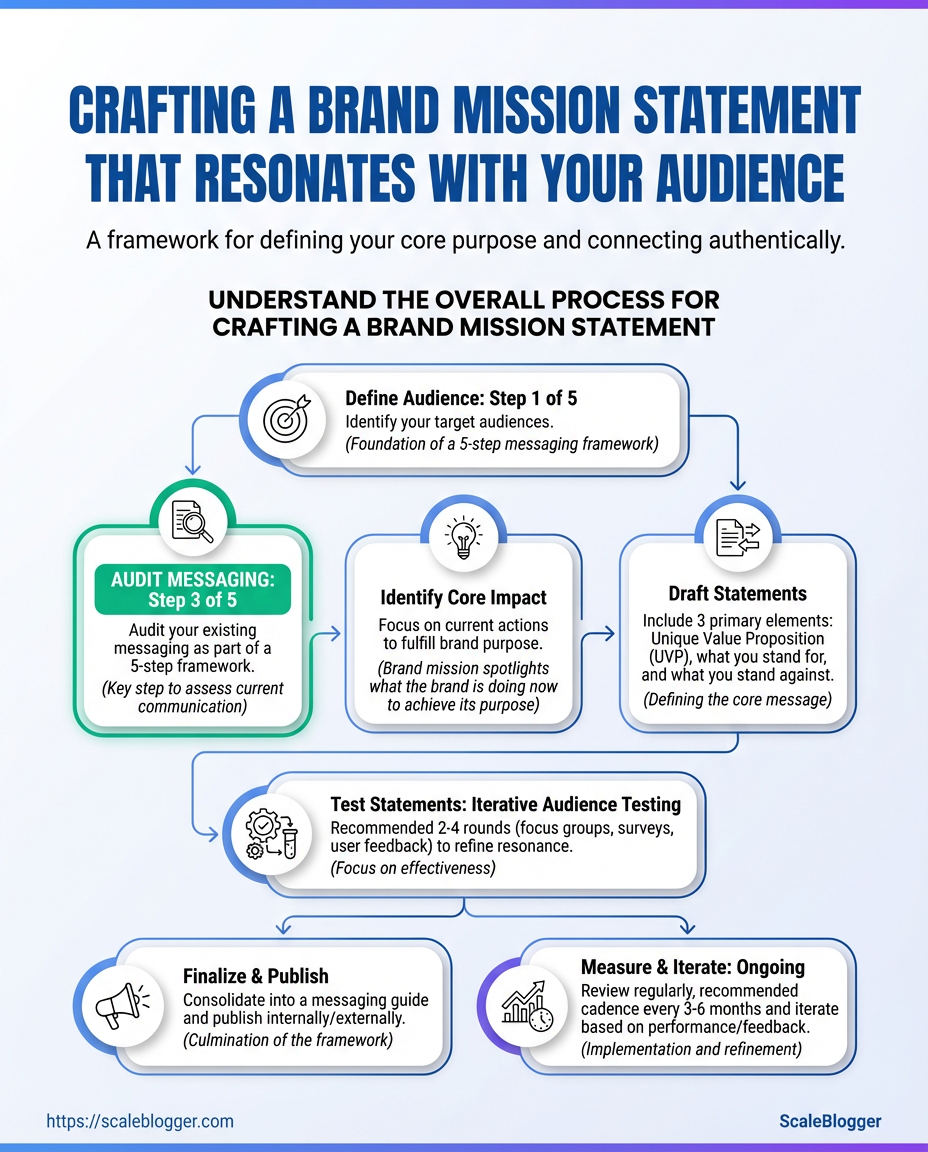

Start by mapping the high-level steps so the team knows what to expect: audits, audience work, defining impact, drafting, testing, finalizing, and publishing. Each stage has a different rhythm—some are fast and tactical, others require cross-functional alignment and iteration. Below is a clear sequence, realistic time ranges, difficulty labels, and guidance on when to loop in stakeholders.

- Audit current messaging

- Identify audience needs

- Define core impact

- Draft mission options

- Test and iterate

- Finalize and document

- Publish and measure

When to involve stakeholders

- Product & leadership: Involve early, during Identify audience needs and Define core impact, to ensure strategic alignment.

- Marketing & comms: Involve during Draft mission options and Test and iterate for tone and channel fit.

- Legal/compliance: Consult before Finalize and document if regulated claims are involved.

Practical tips while working through stages

- Start with evidence: Use analytics or customer interviews during the audit to avoid opinion-driven changes.

- Timebox drafts: Limit each draft cycle to prevent endless polishing.

- Use small experiments: Validate wording with A/B tests or short surveys before full rollout.

Estimated time and difficulty for each major step at-a-glance

| Step | Objective | Time Estimate | Difficulty |

|---|---|---|---|

| Step 1 — Audit current messaging | Inventory current copy, channels, and performance | 4–10 hours | Low |

| Step 2 — Identify audience needs | Map personas and pain points using research | 1–3 weeks | Medium |

| Step 3 — Define core impact | Articulate what the brand changes for customers | 1–2 weeks | High |

| Step 4 — Draft mission options | Create 3–5 candidate mission statements | 8–24 hours | Medium |

| Step 5 — Test and iterate | Run surveys, A/B tests, stakeholder reviews | 2–6 weeks | High |

| Step 6 — Finalize and document | Approve wording, usage guidelines, and training | 1–2 weeks | Medium |

| Step 7 — Publish and measure | Publish across channels and track KPIs | 2–12 weeks (ongoing) | Medium |

Key insight: The calendar shows a compact front-loaded effort (audit → audience → core impact) that consumes most strategic time, followed by iterative testing and a longer measurement phase. Expect the whole cycle to take 6–12 weeks depending on team bandwidth and testing depth.
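As a rough cross-check on that estimate, the time ranges in the table can be summed directly. The sketch below is illustrative only: it assumes a 40-hour work week to convert hours into weeks, treats the steps as strictly sequential, and leaves out Step 7 because its range is marked as ongoing. The names `HOURS_PER_WEEK`, `STEPS`, and `total_range` are hypothetical.

```python
HOURS_PER_WEEK = 40  # assumption: one full-time work week

# (step, low, high, unit) copied from the table above.
# Step 7 is omitted because its range is marked as ongoing.
STEPS = [
    ("Audit current messaging", 4, 10, "hours"),
    ("Identify audience needs", 1, 3, "weeks"),
    ("Define core impact", 1, 2, "weeks"),
    ("Draft mission options", 8, 24, "hours"),
    ("Test and iterate", 2, 6, "weeks"),
    ("Finalize and document", 1, 2, "weeks"),
]


def to_weeks(value: float, unit: str) -> float:
    """Convert an estimate to weeks, using the assumed work-week length."""
    return value / HOURS_PER_WEEK if unit == "hours" else float(value)


def total_range(steps):
    """Sum the low and high ends of every step, assuming no overlap."""
    low = sum(to_weeks(lo, unit) for _, lo, _, unit in steps)
    high = sum(to_weeks(hi, unit) for _, _, hi, unit in steps)
    return low, high


low, high = total_range(STEPS)
print(f"Sequential total for steps 1-6: {low:.2f} to {high:.2f} weeks")
```

Under these assumptions the sequential total runs from a little over 5 weeks to just under 14 weeks, which brackets the 6–12 week figure. Overlapping steps or a shorter testing phase would pull the upper end down.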

Closing thought: Treat this as a product sprint—define success metrics up front, timebox work, and use the testing phase to make the mission tangible. That approach keeps momentum and ensures the final mission statement actually moves the needle.

Audit Your Current Brand Messaging

Start by gathering every piece of public-facing copy so nothing hides in a forgotten landing page or campaign draft. A messaging audit is literal inventory work: collect assets, log the claims they make, check whether those claims match product reality, and flag any contradictions that could erode brand authenticity or confuse buyers.

- Crawl and collect assets.

- Extract core claims, benefits, and tone for each asset.

- Verify claims against product/features and customer evidence.

- Score clarity and consistency; prioritize fixes.

What to collect and why

- Website homepage: Often carries the primary mission statement and hero claims; it sets expectations for first-time visitors.

- Product page: Contains feature-level claims that must match engineering reality.

- About page / mission content: Anchors brand mission and long-form authenticity.

- Top-performing blog post: Reveals the tone that actually converts and how thought leadership aligns with product promises.

- Recent email campaign: Shows how promises are reiterated (or exaggerated) in direct outreach.

How to log claims and evidence

- Asset log: Record asset name, URL or location, publish date, and owner.

- Messaging excerpt: Copy the exact sentence(s) that make claims about outcomes, benefits, or differentiators.

- Evidence check: Note product docs, feature matrix, or customer quotes that support or contradict each claim.

- Tone note: Mark whether the voice is formal, playful, technical, or aspirational.

Structure the audit with columns for asset, messaging excerpt, channel, performance metric, and clarity score

| Asset | Messaging Excerpt | Channel | Performance Metric | Clarity / Consistency |

|---|---|---|---|---|

| Website homepage | “Scale content with AI-driven workflows” | Web | Sessions/mo: 28,000 | 7/10 |

| Product page | “Automated content scheduling and publishing” | Web | Conversion rate: 2.1% | 8/10 |

| About page | “Transforming content strategy through automation” | Web | Time on page: 2:05 | 6/10 |

| Top-performing blog post | “Build topic clusters that drive organic traffic” | Blog | Organic sessions: 9,400 | 9/10 |

| Recent email campaign | “Publish 3x faster with our pipeline” | Email | Open rate: 21% | 5/10 |

Analysis: The homepage and product page make aligned operational promises, but the About page uses broader mission language that isn’t strongly tied to measurable outcomes. The email campaign exaggerates speed without on-page evidence — that’s a clarity mismatch that can reduce trust. Prioritize tightening claims where performance metrics are weak or clarity scores fall below 7.

Practical next steps

- Triage assets with clarity ≤6 for immediate rewrite.

- Create a one-page claim map tying each public claim to a source of truth (product doc, customer quote, or benchmark).

- Update the highest-traffic pages first, then align email templates and blog CTAs.
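The triage step can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed tool: it assumes the audit lives in a simple list of rows, uses the sample values from the table above, and flags anything with a clarity score of 6 or lower. The `AuditRow` class and `triage` function are hypothetical names.

```python
from dataclasses import dataclass

# Assumption: clarity is the 1-10 "Clarity / Consistency" score from the audit table.
TRIAGE_THRESHOLD = 6  # assets scoring at or below this are rewritten first


@dataclass
class AuditRow:
    asset: str
    excerpt: str
    channel: str
    clarity: int


def triage(rows: list[AuditRow], threshold: int = TRIAGE_THRESHOLD) -> list[AuditRow]:
    """Return rows at or below the threshold, lowest clarity first."""
    flagged = [row for row in rows if row.clarity <= threshold]
    return sorted(flagged, key=lambda row: row.clarity)


# Sample rows taken from the audit table above.
rows = [
    AuditRow("Website homepage", "Scale content with AI-driven workflows", "Web", 7),
    AuditRow("Product page", "Automated content scheduling and publishing", "Web", 8),
    AuditRow("About page", "Transforming content strategy through automation", "Web", 6),
    AuditRow("Top-performing blog post", "Build topic clusters that drive organic traffic", "Blog", 9),
    AuditRow("Recent email campaign", "Publish 3x faster with our pipeline", "Email", 5),
]

for row in triage(rows):
    print(f"{row.clarity}/10  {row.asset}: {row.excerpt}")
```

With the sample data, the email campaign and the About page are flagged, which matches the analysis above. A fuller version might also record the source of truth for each claim, but that column is left out here to avoid inventing evidence.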

A clean audit turns vague promises into verifiable claims, which protects brand authenticity and improves conversion. For teams that want to automate the crawl and scoring, consider tools that integrate CMS exports and analytics, or use an AI-powered content pipeline such as Scaleblogger.com to speed up the mapping and evidence checking. This foundational work saves time downstream and makes every message you publish work harder.

Research and Define Your Audience

Start by confirming who actually engages with your content and why. Use a mix of quantitative signals to map behavior and qualitative conversations to understand motivations; the two together turn vague demographics into usable audience segments and messaging that resonates.

Where to start (quick checklist)

- Use analytics: Pull top pages, traffic sources, and engagement metrics to see who finds you and how they behave.

- Run a 5-question survey: Ask about goals, problems, and decision criteria to capture motivations.

- Interview representative users: Record 30–45 minute conversations to surface language and context.

- Synthesize into insight statements: Write 1–2 sentence audience insight statements that guide tone and topics.

- Iterate: Re-check analytics three months after changes to validate assumptions.

5-question survey (example)

Step-by-step process

- Pull engagement data from analytics dashboards (top pages, referral channels, bounce rate, time on page).

- Deploy the 5-question survey on high-traffic pages and email lists; aim for 100+ responses if possible.

- Schedule 6–8 customer interviews with representative users from different traffic sources.

- Synthesize findings into 2–4 audience segments and write one 1–2 sentence insight statement per segment.

- Map topics and content formats back to those insight statements and prioritize the next 12-week editorial plan.

Quantitative sources (analytics) vs qualitative sources (surveys/interviews) and what each reveals

| Source | What it reveals | Best use | Limitations |

|---|---|---|---|

| Web analytics | Traffic patterns, referral sources, high-performing pages | Diagnose where attention is coming from and content gaps | Lacks intent and emotional drivers |

| On-site survey | Self-reported goals, preferred formats, friction points | Quick quantitative check on motivations and format preferences | Sampling bias; short answers lack depth |

| Customer interviews | Language, decision context, unmet needs | Deep qualitative insight for messaging and product-market fit | Time-consuming; small sample size |

| Support tickets | Frequently asked questions and recurring friction | Idea pipeline for troubleshooting content and FAQs | Reactive signals; skewed toward problems |

| Social listening | Public sentiment, trending topics, competitor mentions | Spot emerging questions and informal language to mirror | Noise, sarcasm, and short context can mislead |

Key insight: Quantitative data shows where attention is; qualitative research explains why people show up and what they expect—use both to craft audience-focused mission statements and content that converts.

Writing short, specific insight statements makes editorial decisions trivial and keeps messaging consistent across channels. If streamlining research or automating data pipelines would help, consider tools that integrate analytics, surveys, and interview notes into a single content brief—those systems save hours each week.

Identify Your Core Impact (Why You Exist)

Start with a tight sentence that forces clarity: X helps Y do Z so they can A. Saying your impact this way turns vague mission-speak into a testable statement you can measure and communicate.

Make these definitions specific and emotionally resonant rather than lofty and generic. Swap out “help people grow” for a precise outcome and who benefits. Use multiple variations, then test which one resonates with customers and supports metrics.

How to build and test impact statements

- Write one clear “X helps Y do Z so they can A” statement.

- Create three tighter variations: one measurable, one emotional, one operational.

- Run quick tests: customer interviews, a short landing page, or A/B social copy.

- Choose the version that delivers clear metrics (engagement, conversion, retention) and aligns with team decisions.

Example impact statements

Example 1: X helps Y do Z so they can A

- X: coaching platform

- Y: new managers

- Z: run one-on-one meetings that lead to clear next steps

- A: build confident teams faster

Example 2: X helps Y do Z so they can A

- X: blog templates and automation

- Y: small marketing teams

- Z: publish weekly SEO-focused articles without hiring writers

- A: increase organic traffic and lead volume

Example 3: X helps Y do Z so they can A

- X: analytics dashboard

- Y: e-commerce founders

- Z: spot product-level churn signals within days

- A: reduce churn and protect monthly recurring revenue
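If the variations are kept in a spreadsheet or script, the template can be filled in mechanically so every candidate has the same shape. The snippet below is only a sketch of that idea; the `ImpactStatement` class is a hypothetical helper, and the leading articles (“A”, “An”) are added here for readability and are not part of the examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImpactStatement:
    x: str  # who or what is helping (product, team, or brand)
    y: str  # the audience being helped
    z: str  # what the audience gets done
    a: str  # the outcome that follows

    def render(self) -> str:
        """Fill the 'X helps Y do Z so they can A' template."""
        return f"{self.x} helps {self.y} {self.z} so they can {self.a}."


example_1 = ImpactStatement(
    x="A coaching platform",
    y="new managers",
    z="run one-on-one meetings that lead to clear next steps",
    a="build confident teams faster",
)
example_3 = ImpactStatement(
    x="An analytics dashboard",
    y="e-commerce founders",
    z="spot product-level churn signals within days",
    a="reduce churn and protect monthly recurring revenue",
)

for statement in (example_1, example_3):
    print(statement.render())
```

Keeping the four parts separate makes it easy to swap one element at a time, for instance testing two different outcomes (A) against the same audience (Y).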

Use these quick heuristics when choosing language

- Be measurable: Prefer outcomes you can track (visits, conversions, retention).

- Be specific about the who: Narrow audience definition beats “businesses.”

- Be outcome-focused: Focus on what changes for the customer, not features.

- Avoid jargon: Replace buzzwords with concrete benefits and timeframes.

- Test variations: Small experiments reveal which framing drives action.

For content teams building mission-driven content, this approach helps prioritize topics and craft lead magnets that match real outcomes. If automation matters, consider tools that scale publication while keeping the impact statement central to every headline and CTA. For example, an AI content workflow can map each article to the chosen impact line so the messaging stays consistent.

Define your impact precisely, then let it steer decisions across content, product, and sales; it’s the compass for everything you publish.

Draft Clear, Resonant Mission Statements

A mission statement works best when it’s short, honest, and immediately useful to the audience. Start by producing lots of takes quickly: quantity creates options, and options let you tune tone, specificity, and emotional pull. Then evaluate each draft against a simple rubric—clarity, relevance, authenticity, and brevity—and iterate until three contenders emerge for audience testing.

Why rapid drafts matter

Rapid drafting forces choices. The versions that survive a fast round are the ones that reveal trade-offs between being inspiring and being actionable. Aim for at least eight distinct drafts before narrowing the field; different word rhythms and verbs surface different brand personalities.

Tools & materials

- Drafting tool: Any text editor or content workspace (Google Docs, Notion, or your CMS).

- Evaluation sheet: Simple spreadsheet with the rubric columns below.

- Audience test channel: Email list, social poll, or 5–10 customer interviews.

Step-by-step process

- Draft 8–12 short mission statements quickly, each ≤20 words.

- Create a scoring sheet with column headers: Clarity, Relevance, Authenticity, Brevity.

- Score each draft 1–5 across those four dimensions and calculate the average.

- Remove jargon and passive language; replace with one clear benefit sentence.

- Shortlist the top 3 by average score for small-scale audience testing.

- Iterate the winning draft based on feedback and finalize a version that’s memorable and defensible.

Practical editing moves:

- Replace jargon: swap industry shorthand for plain outcomes.

- Sharpen the beneficiary: name who benefits in 2–3 words.

- Lead with action: begin with a verb or a clear role.

- Trim modifiers: remove weak words like “innovative” unless supported by specifics.

Evaluation rubric table for scoring drafts across criteria

| Draft Statement | Clarity (1-5) | Relevance (1-5) | Authenticity (1-5) | Brevity (1-5) | Average Score |

|---|---|---|---|---|---|

| Draft A: Help small businesses get found with smarter content | 5 | 5 | 4 | 5 | 4.8 |

| Draft B: Transforming content strategy through AI and automation | 4 | 5 | 4 | 4 | 4.3 |

| Draft C: We build beautiful stories for brands that care | 4 | 3 | 4 | 4 | 3.8 |

| Draft D: Automate blog workflows to boost organic traffic | 5 | 5 | 5 | 4 | 4.8 |

| Draft E: Making marketing easier with data-driven creativity | 4 | 4 | 4 | 4 | 4.0 |

Key insight: Drafts that name the beneficiary and the concrete outcome score highest. Phrases that emphasize process (automation, AI) land well with B2B buyers when paired with a clear benefit like “get found” or “boost organic traffic.”
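The scoring and shortlisting steps are simple enough to script. The sketch below assumes the four rubric scores are entered by hand and uses the sample drafts from the table above; `average_score` and `shortlist` are hypothetical helper names, and ties keep their original order.

```python
from statistics import mean

CRITERIA = ("clarity", "relevance", "authenticity", "brevity")

# Scores copied from the rubric table above, in CRITERIA order.
drafts = {
    "Draft A: Help small businesses get found with smarter content": (5, 5, 4, 5),
    "Draft B: Transforming content strategy through AI and automation": (4, 5, 4, 4),
    "Draft C: We build beautiful stories for brands that care": (4, 3, 4, 4),
    "Draft D: Automate blog workflows to boost organic traffic": (5, 5, 5, 4),
    "Draft E: Making marketing easier with data-driven creativity": (4, 4, 4, 4),
}


def average_score(scores: tuple[int, ...]) -> float:
    """Average the four rubric scores after checking they are valid 1-5 values."""
    if len(scores) != len(CRITERIA) or not all(1 <= s <= 5 for s in scores):
        raise ValueError("expected one 1-5 score per rubric criterion")
    return mean(scores)


def shortlist(candidates: dict[str, tuple[int, ...]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k highest-scoring drafts with their unrounded averages."""
    ranked = sorted(candidates.items(), key=lambda item: average_score(item[1]), reverse=True)
    return [(text, average_score(scores)) for text, scores in ranked[:k]]


for text, avg in shortlist(drafts):
    print(f"{avg:.2f}  {text}")
```

The averages are printed to two decimals because one-decimal rounding can hide ties: Drafts A and D both average 4.75, which the table shows as 4.8.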

Applying results and testing

Run the top three in quick micro-tests: A/B subject lines, short landing page variants, or five-minute interviews. Track clarity (how quickly someone can paraphrase it), relevance (does the statement match their problem), and memorability. Use those results to finalize language.

For teams building repeatable workflows, integrate the rubric into content planning tools or an automation pipeline; platforms built to scale content workflows often speed up this testing phase.

Final note: invest time in iteration—every extra draft sharpens what the brand actually promises, and that clarity pays back in stronger alignment across messaging and product decisions.

Test Mission Statements with Your Audience

Start by treating mission statements like hypotheses: they need measurable tests, not opinions. Rapid, mixed-method validation reduces risk and surfaces which wording actually moves people. Use quick surveys to check clarity and emotional resonance, an A/B landing page test to observe real behavior, and a handful of moderated interviews to capture nuance. Finish by applying explicit decision rules so the winner isn’t “what feels right” but “what meets these thresholds.”

Tools & materials: short survey tool (Typeform/Google Forms), A/B test tool or simple landing page swap, screen-recording for interviews, analytics (GA4 or similar).

Validation Methods and Decision Rules

- Micro-survey: Use 3–5 questions that measure clarity, distinctiveness, and emotional pull.

- A/B landing page test: Put two mission variants on identical pages and measure click-throughs, sign-ups, or time-on-page.

- Moderated interviews: 6–10 sessions to explore language associations and missing nuance.

- Social listening/poll: Quick social poll for broader sentiment and shareability signals.

- Email preference test: Send mission variants to a segmented list and track opens, clicks, and replies.

- Define metrics and thresholds before testing.

- Run micro-surveys to eliminate obviously unclear options.

- Launch A/B test on a live landing page for behavioral validation.

- Conduct moderated interviews for edge-case objections and unexpected interpretations.

- Apply decision rule: choose the variant that meets at least two behavioral wins and one qualitative endorsement.

Testing methods: speed, cost, sample size needed, and what each measures

| Method | Speed | Cost | Best For | Sample Size Guidance |

|---|---|---|---|---|

| Micro-survey | 1–3 days | Low ($0–$50) | Clarity, emotional resonance | 100–300 responses for reliable signals |

| A/B landing page test | 1–4 weeks | Medium ($0–$200 for traffic) | Behavioral validation (signups/CRO) | 1,000+ visitors or 200+ conversions recommended |

| Moderated interviews | 1–2 weeks | Medium–High ($0–$1,000) | Deep nuance, language framing | 6–12 interviews for thematic saturation |

| Social listening/poll | 1–7 days | Low ($0–$50) | Shareability, sentiment, quick feedback | 200–1,000 impressions or votes |

| Email preference test | 3–14 days | Low–Medium (email platform fees) | Preference among engaged users | 500+ recipients for stable open/click differences |

Key insight: combine fast, low-cost quantitative methods to narrow options, then confirm with behavioral A/B testing and a few interviews. The table shows trade-offs: surveys are quick and cheap but hypothetical; A/B tests reveal what users actually do.

Practical decision rules prevent endless dithering: require a statistically meaningful lift in behavior (or a 10–20% relative improvement on a key metric) plus clear qualitative support from interviews. If results conflict, prioritize observable behavior over stated preference.
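One way to make the decision rule explicit is to encode it. The sketch below is an assumption-laden illustration: it uses the lower end of the 10–20% range as the bar for a behavioral win, counts interview endorsements as a simple integer, and does not check statistical significance. The metric names and numbers are hypothetical.

```python
MIN_RELATIVE_LIFT = 0.10  # assumption: lower end of the 10-20% range above


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


def passes_decision_rule(
    behavioral_lifts: dict[str, float],
    qualitative_endorsements: int,
    min_lift: float = MIN_RELATIVE_LIFT,
) -> bool:
    """At least two behavioral wins plus at least one qualitative endorsement."""
    wins = sum(1 for lift in behavioral_lifts.values() if lift >= min_lift)
    return wins >= 2 and qualitative_endorsements >= 1


# Hypothetical results for one mission variant against the control.
lifts = {
    "signup_rate": relative_lift(0.020, 0.024),        # about 20% lift
    "cta_click_through": relative_lift(0.100, 0.115),  # about 15% lift
    "time_on_page": relative_lift(125.0, 128.0),       # about 2.4% lift
}

print(passes_decision_rule(lifts, qualitative_endorsements=1))
```

Writing the rule down before the test runs makes it harder to move the goalposts afterwards. A significance check on each metric would be a sensible addition before treating a lift as a win.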

For teams ready to automate testing workflows and scale content experiments, consider integrating testing with an AI content pipeline like Scaleblogger.com to push variants and track performance automatically. Testing mission language this way turns guesswork into repeatable learning, so the final statement actually earns its role in the brand.

Finalize, Publish, and Operationalize the Mission

Finalize the mission by making it visible, actionable, and measurable so teams and customers can feel it. Publish the statement where people expect to find brand purpose, announce it internally with clear training actions, and lock in a measurement cadence that turns words into ongoing decisions. Practical copy snippets, a rollout checklist, and a measurement plan make this operational rather than aspirational.

Where to publish and sample copy snippets

Homepage hero: Short, bold statement that orients visitors in seconds. “We help growing teams turn content into predictable growth by automating the work and focusing on impact.”

About page (long-form): Context, proof, and specific commitments. “Our mission is to make enterprise-grade content automation accessible to mid-market teams. We publish quarterly benchmarks, open our process, and measure success by customer growth.”

Customer announcement: Warm, benefit-led tone. “We’re aligning our product and content around a single mission: remove the operational friction that slows growth. Expect clearer roadmaps and outcome-focused guides.”

Internal announcement and training steps

- Draft and approve the internal announcement copy with leadership and comms.

- Run a 45-minute launch webinar for all teams, recorded for future hires.

- Publish a one-page playbook and FAQ in the company wiki; require managers to cover it in team standups that week.

- Hold role-specific workshops: Marketing (content workflows), Sales (mission-led pitches), Support (customer-facing language).

Rollout Checklist and Alignment Actions

Rollout timeline showing tasks, owners, and deadlines for publishing the mission

| Task | Owner | Deadline | Success Metric |

|---|---|---|---|

| Homepage update | Head of Product | 2026-02-15 | Homepage CTA CTR +12% |

| About page revision | Content Lead | 2026-02-20 | Time-on-page +20% |

| Team announcement | People Ops | 2026-02-10 | 90% team attendance (recorded) |

| Customer announcement | Head of Customer | 2026-02-22 | Email open rate ≥ 35% |

| Quarterly impact review | Strategy Lead | 2026-05-01 | Actionable insights logged (≥3 items) |

Key insight: This timeline sequences public updates before deeper reviews so external audiences see the mission while internal teams begin measurement. Assigning clear owners and simple, numeric success metrics focuses follow-up work.

Measurement plan (engagement, conversion, qualitative feedback)

- Engagement: track homepage and about page metrics, time on page, and bounce rate changes.

- Conversion: measure trial starts, demo requests, and content-assisted lead attribution.

- Qualitative feedback: collect NPS follow-ups and customer interviews tied to mission perception.

Schedule for quarterly reviews and iteration

- Prepare dashboard snapshot two weeks before quarter-end.

- Convene cross-functional review with Product, Marketing, and Customer on review day.

- Capture three prioritized experiments; assign owners and a 60-day test window.

Operationalizing the mission turns promises into prioritized work, measured outcomes, and repeatable reviews—so the mission stays alive and guides real decisions.

Measure Impact and Iterate

Start by measuring a clear baseline before any rollout and use that as the single source of truth for future decisions. Establish both quantitative signals from GA4, search console, and conversion data, and qualitative signals from surveys, user interviews, and session recordings. Define what counts as a minor tweak versus a major rewrite up front, and lock a periodic review cadence—quarterly reviews work well for most mid-size content programs.

- Decide baselines and targets.

- Instrument tracking in GA4, search console, and your CMS.

- Collect qualitative feedback via short surveys and 5–10 user interviews.

- Run the quarterly review and categorize required work as minor or major.

- Baseline first: Record current metrics and collect 2–4 months of behavior to account for seasonality.

- Dual signals: Use both CTR/conversion data and user feedback to avoid blind spots.

- Threshold rules: Predefine % changes that trigger different actions.

- Quarterly rhythm: Review every quarter; run a lighter monthly health check.

- Owner clarity: Assign a single owner for each KPI to remove delay and ambiguity.

Homepage clarity score: A usability metric from a 3-question survey measuring how quickly users understand your mission statement.

Homepage conversion rate: The percent of visitors completing a key action (signup, purchase) measured in GA4.

Brand search volume: Monthly searches for branded keywords captured in search console.

Customer satisfaction: Typically NPS or CSAT collected quarterly.

Engagement on brand content: Average time on page and scroll depth from analytics.

KPI tracking table showing metric, baseline, target, and owner

| KPI | Baseline | Target | Measurement Frequency | Owner |

|---|---|---|---|---|

| Homepage clarity score | 55% | 75% | Monthly | Product/Marketing |

| Homepage conversion rate | 1.2% | 2.0% | Weekly | Growth |

| Brand search volume | 4,500/mo | 6,500/mo | Monthly | SEO |

| Customer satisfaction | NPS 22 | NPS 40 | Quarterly | Customer Success |

| Engagement on brand content | 1:10 (mm:ss) | 2:00 (mm:ss) | Weekly | Content |

Key insight: The table highlights focus areas: clarity and conversion are owned by product/growth, while long-term reputation metrics like brand search and NPS sit with SEO and CS. Tracking frequency matches rate-of-change—conversion weekly, brand metrics monthly, satisfaction quarterly—to balance signal and noise.

Define iteration thresholds before you review results: changes under 10% can be handled with A/B tests and rolled out as minor updates; changes of 10–30% call for a shortlist of experiments and refactors; changes over 30% suggest a major rewrite or product change. When qualitative feedback contradicts quantitative trends, prioritize user interviews and session replays to understand intent.
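These thresholds translate into a small classifier. The sketch below assumes the percentage refers to the relative change of a KPI against its baseline, in either direction; the label "moderate" for the middle band and the function name `classify_change` are hypothetical.

```python
def classify_change(baseline: float, current: float) -> str:
    """Bucket a KPI movement using the 10% and 30% thresholds."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    change = abs(current - baseline) / abs(baseline)
    if change < 0.10:
        return "minor"     # A/B test and roll out as a small update
    if change <= 0.30:
        return "moderate"  # shortlist several experiments and refactors
    return "major"         # consider a rewrite or product change


# Homepage conversion rate, using the 1.2% baseline from the KPI table
# and hypothetical current readings.
for current in (1.25, 1.0, 0.8):
    print(current, classify_change(1.2, current))
```

Agreeing on the buckets in code, or at least in a shared doc, keeps the quarterly review from reopening the question of how big a change has to be before it matters.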

Automating this pipeline reduces busywork. Tools that automate data pulls and surface anomalies speed decisions; consider platforms that combine analytics and content scoring, or systems like Scaleblogger.com to automate benchmarking and publishing. Keep the review focused, actions prioritized, and owners accountable so iterations actually ship. These reviews become the engine that turns data into growth.

Troubleshooting Common Issues

Start by treating these problems like system bugs: isolate the variable, run a small experiment, and iterate quickly. Below are practical fixes for four recurring content-strategy headaches, each with a compact action plan and real examples you can run this week.

Clear baseline metrics: Have one primary KPI per content stream (e.g., organic sessions, leads).

Access to data: Google Analytics, search console, and any CMS analytics.

Stakeholder map: Names, priorities, and decision rights.

Sharpening vague mission language

Vague mission language stalls execution because teams interpret it differently.

- Define the single-sentence mission: state who, what, and the measurable outcome.

- Break that mission into two role-specific outcomes for marketing and product.

- Convert each outcome into a quarterly objective and one KPI.

Example: Change “increase brand authority” to “help mid-market SaaS marketers reduce churn by 10% through monthly retention playbooks (KPI: churn-related search traffic).”

Resolving stakeholder conflicts quickly

Conflicts escalate when priorities and decision rights are unclear.

- Gather stakeholders for a 30-minute alignment call with an agenda.

- Use a RACI quick-matrix: list decisions, assign Responsible/Accountable/Consulted/Informed.

- Lock the decision for the next 60 days, then review with data.

Practical tip: If two stakeholders insist on different directions, run a two-week pilot for each and let the metrics decide.

Getting reliable audience feedback

Surveys and comments are noisy; structured microtests are cleaner.

- Micro-interviews: Schedule 15-minute calls with five representative users.

- Ask one focused question: “Which article helped you decide X, and why?”

- Test content intent: Use a short in-page poll with 1–2 options and a follow-up field.

Combine qualitative notes with a confidence tag (high/medium/low) to weight responses.

Interpreting noisy A/B test results

Small effects and traffic variance create false alarms.

- Confirm adequate sample size before trusting results.

- Segment results by traffic source and device; inconsistent lifts often hide in a segment.

- Run a second validation test only if the effect is meaningful to your KPI.

If a result flips on validation, treat the original as inconclusive and document learnings.
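To put a number on "adequate sample size", a standard two-proportion power calculation gives a rough target per variant. The sketch below uses the common normal-approximation formula with a two-sided 5% significance level and 80% power; those defaults, the function name, and the example lift are assumptions, and a dedicated testing tool may use a different method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Visitors needed per variant to detect the lift (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if relative_lift == 0 or not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("rates must be between 0 and 1 and the lift non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Example: a 2.1% baseline conversion rate and a hoped-for 15% relative lift.
print(sample_size_per_variant(0.021, 0.15))
```

With a low baseline rate and a modest lift, the required traffic per variant runs into the tens of thousands under these assumptions, which is why small effects on low-traffic pages so often turn out to be noise.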

Use automation to capture experiments and outcomes; tools that automate pipelines reduce human error—consider options for AI content automation like Scaleblogger.com when scaling experimentation.

These fixes keep projects moving and reduce rework, so time spent upfront on crisp mission language, fast alignment, targeted feedback, and rigorous experiment hygiene pays back quickly in clearer priorities and measurable progress.


Tips for Success (Pro Tips)

Start by treating your brand mission like an experiment: short, testable, and easy to remember. A mission that’s crisp — three to seven words at most — travels farther inside the team and into customer messaging. Keep the language active, specific, and framed around the outcome your audience cares about.

- Clear audience: Know who benefits most from your work.

- One primary outcome: Pick the single core result you deliver.

- Team alignment: Make sure 3–5 stakeholders agree on the wording.

Practical, high-leverage tactics

- Use concrete verbs: Swap vague words like “support” for strong actions like “reduce,” “deliver,” or “save.”

- Quantify when possible: If your mission can include scale or time (e.g., cut onboarding time by 50%), do it.

- Avoid jargon: Plain language wins; it is readable at a glance and memorable over coffee.

- Embed the customer payoff: Put the beneficiary or benefit in the sentence, not buried later.

- Make it repeatable: If people can say it in one breath, it sticks.

Step-by-step process to test and adopt a mission

- Draft three short variants that emphasize different outcomes.

- Run quick micro-tests: internal vote, one-on-one customer interviews, and a short A/B test on landing pages.

- Measure signals: engagement, time on page, NPS mentions, and team recall in meetings.

- Iterate based on which phrase produces the strongest behavior change.
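For the landing-page A/B step, a two-proportion z-test is one common way to judge whether one variant's conversion rate really differs from another's. This is a minimal sketch, not a prescribed method: the function name and the visitor counts are hypothetical, and it assumes each variant saw enough traffic for the normal approximation to hold.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: variant A converts 120 of 3000, variant B 156 of 3000.
z, p_value = two_proportion_z_test(120, 3000, 156, 3000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A low p-value only says the difference is unlikely to be chance; whether the lift is large enough to matter is still a judgment against your KPI.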

Language guidance to avoid blandness

- Avoid platitudes: Words like innovative or best-in-class say nothing. Replace them with the specific improvement you make.

- Drop passive voice: Prefer we improve onboarding over onboarding is improved.

- Guard against vagueness: If you can’t explain the outcome in a single sentence, tighten it.

Keeping the mission short and memorable

- Focus on the core promise: One actor + one action + one beneficiary.

- Use rhythm: Short phrases with balanced cadence are easier to recall.

- Test outside the org: If a random person can repeat it after hearing once, it passes the sniff test.
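The language guidance above can be turned into a rough automated check for draft missions. The sketch below is a heuristic, not a grammar checker: the `lint_mission` helper, the extra platitudes beyond innovative and best-in-class, the default word limit, and the passive-voice pattern are all assumptions to adjust for your team.

```python
import re

# "innovative" and "best-in-class" come from the guidance above;
# the other entries are hypothetical additions.
PLATITUDES = {"innovative", "best-in-class", "world-class", "cutting-edge"}

# Crude passive-voice heuristic: a form of "to be" followed by an "-ed" word.
PASSIVE_PATTERN = re.compile(r"\b(?:is|are|was|were|be|been|being)\s+\w+ed\b")


def lint_mission(mission, max_words=12):
    """Return a list of warnings for a draft mission statement."""
    text = mission.lower()
    words = re.findall(r"[a-z0-9'-]+", text)
    warnings = []
    if len(words) > max_words:
        warnings.append(f"Too long: {len(words)} words (max {max_words})")
    for word in words:
        if word in PLATITUDES:
            warnings.append(f"Platitude: {word}")
    match = PASSIVE_PATTERN.search(text)
    if match:
        warnings.append(f"Possible passive voice: '{match.group(0)}'")
    return warnings


for draft in ["We improve onboarding for new teams",
              "Onboarding is improved by our innovative platform"]:
    print(draft, "->", lint_mission(draft) or "no warnings")
```

Treat a clean result as a first pass only; the read-aloud and outside-the-org tests still catch things a pattern match cannot.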

For content teams automating scale, tools that map mission language to topic clusters help maintain consistency; Scaleblogger.com can automate that mapping and surface high-performing phrasing. Try treating mission wording as product copy—iterate fast, measure response, and favor clarity over cleverness.

A tight, test-backed mission becomes the simplest lever for clearer content, faster decisions, and messaging that actually moves people. Keep it short, measurable, and repeatable — and watch it change how work gets prioritized.

Examples and Templates

Start with readable, specific examples that show tone, focus, and how to adapt a mission statement to different audiences. Below are five side-by-side examples with short notes on why each works and exactly how to tweak it for a different stage or audience.

Example mission statements side-by-side with why they work and adaptation tips

| Example Mission | Sector | Why it works | How to adapt |
|---|---|---|---|
| “Help teams ship better software faster by removing friction between design and engineering.” | SaaS startup | Clear beneficiary (teams), clear outcome (ship faster), specific pain (handoff friction). | Swap “software” for your product area; add metric if mature (e.g., “reduce release time by 30%”). |
| “Enable independent creators to build sustainable income from original work.” | Creator-first brand | Human-focused, commercial clarity, emotional resonance with creators. | Shift to a more technical tone for B2B (e.g., “monetization tools for creator platforms”). |
| “Give developers simple, reliable APIs for observability and testing at scale.” | Developer tool | Developer-centric language, concrete capabilities (APIs, observability). | Add technical detail for docs (protocols supported, SDKs) or simplify for marketing. |
| “Connect local learners with mentors to turn curiosity into career-ready skills.” | Community platform | Community + outcome oriented, implies measurable success (career-ready). | Emphasize locality or industry vertical depending on growth strategy. |
| “Provide healthy meals to underserved neighborhoods through community kitchens.” | Non-profit | Clear mission area, delivery mechanism, social impact implied. | Add program metric or partnership language for grant applications. |

Practical templates to draft quickly:

- Start with a one-line structure on a separate line.

- Template A (Outcome-first): Help [who] achieve [measurable outcome] by [how].

- Template B (Value-first): Provide [benefit or product] so that [who] can [end-state].

- Draft three variants: aspirational, operational, and investor-facing.

- Read each aloud to check tone and technical detail.
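If you want to generate drafts quickly, Template A and Template B map directly onto format strings. The sketch below is only a convenience for producing first drafts; the `draft_variants` helper and the sample inputs are hypothetical.

```python
# The two templates from this section, with bracketed slots as named fields.
TEMPLATE_A = "Help {who} achieve {outcome} by {how}."
TEMPLATE_B = "Provide {benefit} so that {who} can {end_state}."


def draft_variants(who, outcome, how, benefit, end_state):
    """Fill both templates and return the drafts as a list."""
    return [
        TEMPLATE_A.format(who=who, outcome=outcome, how=how),
        TEMPLATE_B.format(who=who, benefit=benefit, end_state=end_state),
    ]


# Illustrative inputs only.
variants = draft_variants(
    who="product teams",
    outcome="faster releases",
    how="removing handoff friction",
    benefit="shared design specs",
    end_state="ship without rework",
)
for line in variants:
    print(line)
```

Generated drafts tend to read stiffly, so use them as raw material for the read-aloud check rather than as finished wording.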

Notes on adapting tone and technical depth

Intent: Match audience—use plain language for consumers and domain-specific terms for technical buyers.

Granularity: Early-stage teams keep the mission to roughly 10–12 words. Scaling organizations add a metric or mechanism.

Voice: Use active verbs (“help”, “enable”, “build”) and avoid passive constructions.

Quick checklist before finalizing:

- Audience aligned: Does it speak to users or partners?

- Scalable: Can the mission still be true at 10x scale?

- Measurable element: Is there a way to show progress later?

For teams automating content workflows, Scaleblogger.com can help turn a mission into SEO-aligned pillars and topic clusters that reinforce brand authenticity. Try drafting three mission variants, test them with a small user panel, and pick the one that feels both true and promotable—that balance makes the mission usable day-to-day.

Appendix: Tools, Templates and Resources

This appendix bundles ready-to-use templates and the tools best suited to run surveys, analytics, A/B tests, collaboration, and user interviews—each with short usage steps so teams can act fast. Pick the template that matches your stage (discovery, validation, optimization), wire it into the tool listed, and run one short cycle to gather a signal before scaling.

Downloadable templates & how to use them

- Survey template (persona + intent): Copy into your survey tool, customize 6–8 questions, send to a seeded list of 100 users.
  1. Replace placeholder text with product-specific language.
  2. Pilot with 10 respondents, tweak clarity.
  3. Run broader send and export CSV for analysis.

- Analytics dashboard starter (traffic → conversions): Import into your analytics workspace, connect event streams, check for 7-day data lag.
  1. Map conversion events to funnel steps.
  2. Validate with 10 sample sessions.
  3. Use cohort filters for early insights.

- A/B test brief & hypothesis template: Paste into your experiment tracker and assign owners.
  1. State measurable metric and minimum detectable effect.
  2. Define sample size and exposure.
  3. Launch experiment and freeze changes to variant code.

- Interview script (user problem discovery): Use with scheduled calls, record with consent, tag quotes for patterns.
  1. Warm up with context questions.
  2. Use open prompts, avoid leading language.
  3. Summarize themes within 24 hours.

- Content calendar CSV: Upload to scheduler, attach drafts and target keywords.
  1. Populate topics and deadlines.
  2. Link briefs to drafts.
  3. Automate publishing cadence.

Recommended tools (free vs premium) for surveys, analytics, and A/B testing

| Tool | Free option | Premium features | Typical cost |
|---|---|---|---|
| Google Forms | Free form builder, unlimited responses | Basic integrations, no advanced logic | Free |
| Typeform | Free tier with limited responses | Conditional logic, branding, integrations | $25/month |
| Google Analytics (GA4) | Free property with event tracking | Analytics 360 (enterprise features) | Free / GA360 ~$150k/year |
| Mixpanel | Free tier, limited events | Advanced funnels, retention, cohorts | $25+/month |
| Google Optimize | Discontinued (sunset September 30, 2023) | N/A | N/A (no longer available) |
| Optimizely | Limited trial / demo | Full-stack experimentation, feature flags | Custom (enterprise pricing) |
| Google Docs | Free collaborative docs | Business features in Google Workspace | Free / Workspace $6+/user/mo |
| Notion | Free personal, limited blocks | Team workspaces, templates, permissions | $8+/user/mo |
| Calendly | Free basic scheduling | Automations, team routing | $8+/user/mo |
| Zoom | Free calls up to 40 min | Cloud recording, large meetings | $14.99+/host/mo |

Key insight: The table shows a clear split—core data tools (Google Forms, GA4) are usable at no cost for early validation, while premium tiers (Typeform, Mixpanel, Optimizely) add robustness—conditional logic, advanced cohorting, and enterprise experiment controls—needed as sample sizes and complexity grow.

For teams wanting to automate content and measurement, consider stacking these templates into a repeatable pipeline. Scaling the content workflow makes sense once automation and performance benchmarking become priorities.

These resources get experiments and content moving quickly—pick one survey tool, one analytics source, and one collaboration platform, wire the templates in, and run a single learning loop to validate assumptions before committing further effort.

You started this process to stop sounding like a brochure and start sounding like a movement. Hold onto that clarity: a tight audience definition, a single-sentence statement of impact, and a handful of real-world tests will turn vague language into attention and action. Drafting, testing, and publishing your mission doesn’t have to be a months-long project—run a two-week audit, iterate with one or two audience segments, and watch which lines actually land. If you’re wondering how long it takes, expect iterative sprints rather than a one-off launch; if you’re worried about stakeholder buy-in, start with data from small tests; if leadership ownership is unclear, assign a cross-functional owner and give them one clear measure of success.

Now put the work into motion: finalize a concise mission statement, publish it where your audience sees it, and measure engagement within 30–60 days. For teams looking to automate outreach, content mapping, and repeatable testing, platforms like Scaleblogger can streamline the workflow and free the team to focus on the message itself. Use the templates and examples you already reviewed, run a short experiment, and let real audience response guide the rest. This is how brand authenticity grows from intention into impact.