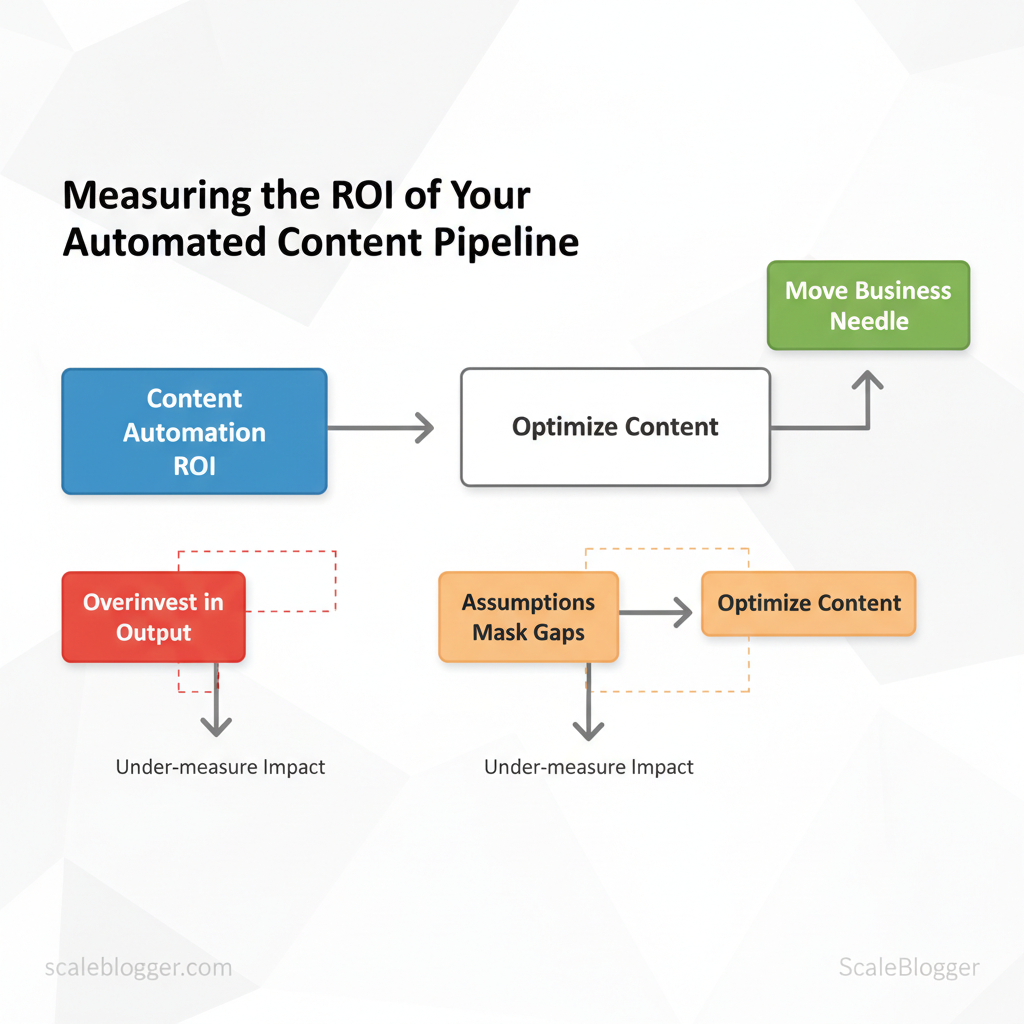

Marketing teams often overinvest in output and under-measure impact, leaving growth opportunities hidden in plain sight. When the pipeline is automated, assumptions about efficiency can mask gaps in actual returns — that’s where content automation ROI becomes the decisive metric rather than simple volume or throughput. By focusing on the right signals, teams stop guessing and start optimizing content that moves the business needle.

Measuring content success depends on mapping workflows to outcomes, choosing the right `KPIs`, and attributing results across channels. That makes content marketing metrics and attribution models essential tools, not afterthoughts. Picture a team reallocating resources from low-performing templates to high-converting topic clusters and increasing qualified traffic without adding headcount.

- What to measure to calculate true ROI from automation

- How attribution changes when publishing scales rapidly

- Practical `KPI` templates for content funnels and lead impact

- Ways to validate experiments without harming baseline performance

- How to use automation data to inform editorial strategy

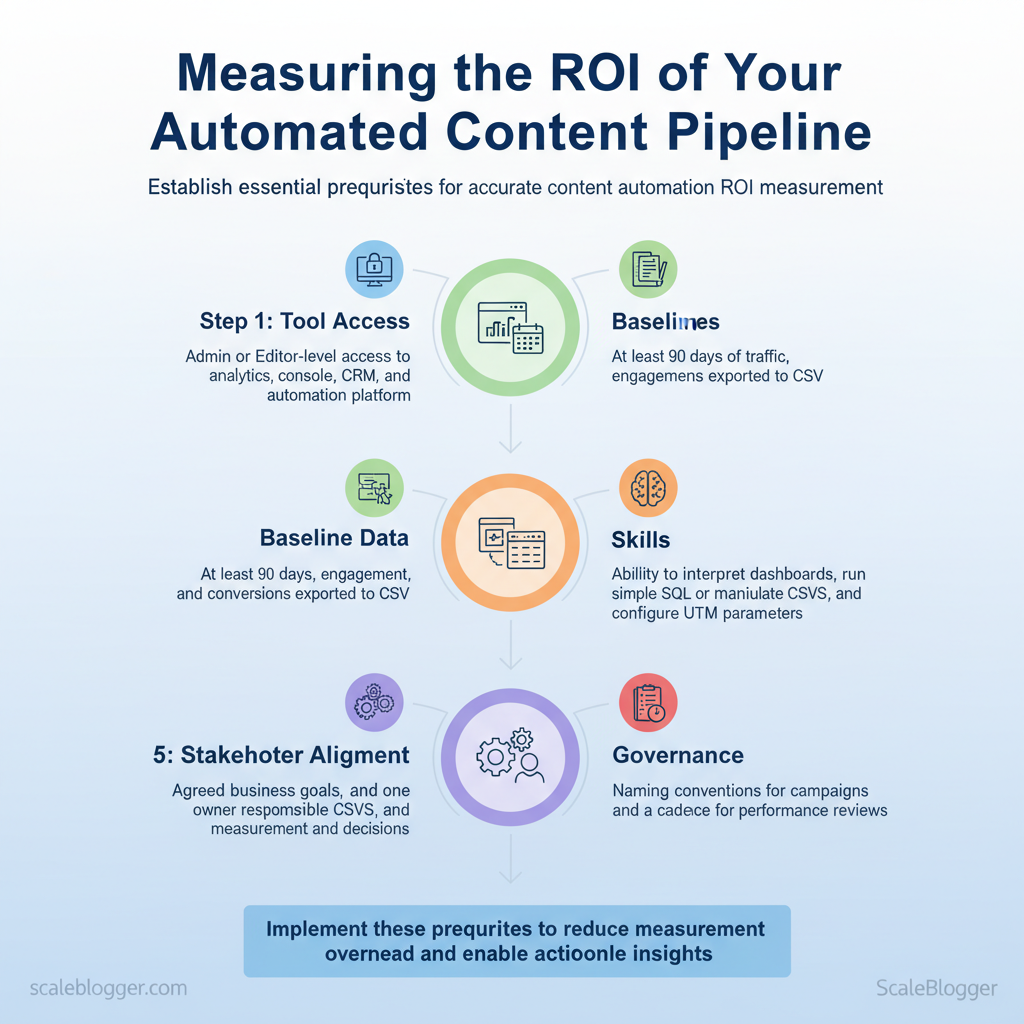

Prerequisites & What You’ll Need

Start with the practical minimum: to measure ROI from content automation you need clean tracking, baseline performance, basic data skills, and aligned goals. Without at least 90 days of historical metrics and Editor- or Admin-level access to analytics and publishing platforms, any automation experiment is effectively run blind. The list below establishes the concrete tools, access levels, and quick setup actions that unlock reliable measurement and iteration.

- Tool access: Admin or Editor-level access to analytics, search console, CRM, CMS, and any automation platform.

- Baseline data: At least 90 days of traffic, engagement, and conversions exported to CSV so you can model seasonality and trends.

- Skills: Ability to interpret dashboards, run simple SQL or manipulate CSVs in `Google Sheets`/`Excel`, and configure UTM parameters.

- Stakeholder alignment: Agreed business goals (e.g., traffic, leads, revenue) and one owner responsible for measurement and decisions.

- Governance: Naming conventions for campaigns and a cadence for performance reviews (weekly for launch, monthly for optimization).

| Tool/Resource | Purpose | Required Access Level | Quick Setup Action |
|---|---|---|---|
| Google Analytics (GA4) | Behavioral metrics, conversion funnels, engagement time; event-based tracking | Editor or Admin with property-level access | Create `conversion` events, enable cross-domain, link to Search Console |
| Google Search Console | Organic discovery, impressions, CTR, index coverage | Owner or Full user for site property | Verify site, submit sitemap, enable URL inspection |
| Marketing Automation / CRM (e.g., HubSpot, Salesforce) | Lead capture, attribution, LTV tracking, multi-touch attribution | Admin with API / integration rights | Map lead lifecycle fields, tag campaigns with UTMs, enable web tracking |
| Content Automation Platform (content pipeline/tooling) | Workflow automation, content generation, scheduling, publish APIs | Project Admin or Integrator | Connect CMS via API, configure publishing templates, set content scoring rules |
| Spreadsheet (Google Sheets / Excel) | Data consolidation, pivot analysis, simple attribution models | Editor access with sharing enabled | Import CSVs, build pivot tables, add `date`, `source`, `campaign` columns |

Understanding these prerequisites avoids noisy experiments, speeds up valid A/B tests, and makes ROI conclusions actionable. When implemented correctly, this setup reduces measurement overhead and lets teams focus on content that actually moves business metrics.

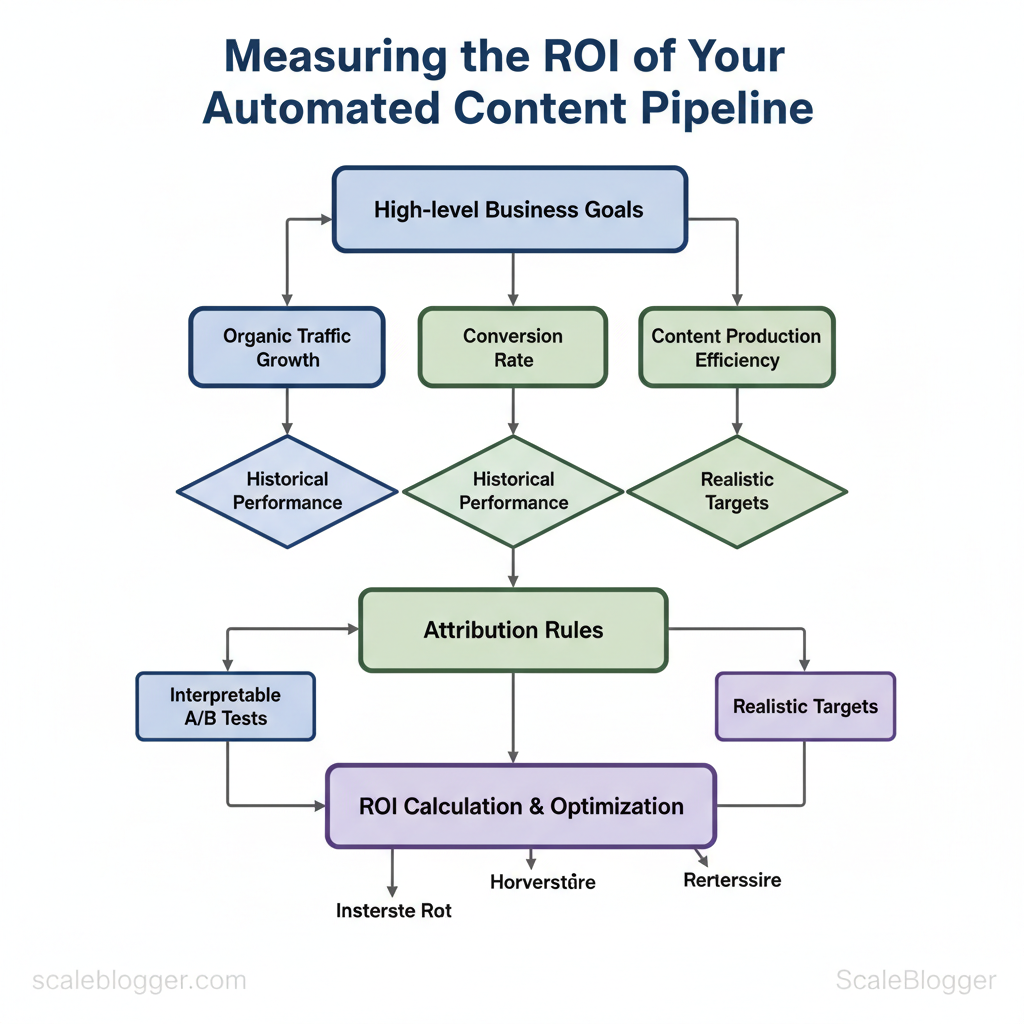

Define Clear Objectives & Success Criteria

Start by translating high-level business goals into a small set of measurable KPIs that guide every content decision. Pick 2–4 KPIs per objective, fix attribution rules up front, and use historical performance to set realistic targets. That discipline prevents chasing vanity metrics and makes A/B tests interpretable.

Why this matters

- Focus: Narrow KPIs keep teams aligned on outcomes rather than outputs.

- Clarity: Attribution rules avoid post-test confusion about which channel ‘deserves’ credit.

- Pacing: Realistic targets reduce churn from repeated failed experiments.

- Primary KPI: The main outcome (e.g., `organic sessions`, `SQLs`, `new revenue`).

- Secondary KPI: Supporting signals (e.g., CTR, bounce rate, assisted conversions).

- Attribution rule: Which touch gets the credit (e.g., `last non-direct click`).

| Business Objective | Primary KPI | Secondary KPIs | Recommended Timeframe |
|---|---|---|---|
| Brand awareness | Impressions / Reach | CTR, social shares, branded search volume | 30–90 days |
| Lead generation | MQLs / Form fills | Conversion rate, CPA, assisted conversions | 30–90 days |
| Direct revenue | Attributed revenue | AOV, conversion rate, repeat purchase rate | 3–6 months |
| User engagement | Engaged sessions / Time on page | Pages per session, scroll depth, bounce rate | 30–60 days |
| SEO growth | Organic sessions | Top-3 keyword count, domain authority, organic CTR | 3–12 months |

Troubleshooting tips

- If targets are routinely missed, revisit baseline data and attribution assumptions.

- If secondary KPIs move opposite the primary KPI, pause and diagnose before scaling.

- If ownership is unclear, assign a single KPI owner responsible for reporting and next steps.
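One way to keep objectives, KPIs, and attribution rules fixed up front is to record them as data and validate them before any experiment starts. The sketch below is illustrative only: the `Objective` class and its field names are hypothetical, and it assumes the "2–4 KPIs per objective" guideline counts the primary KPI plus the secondary ones.

```python
from dataclasses import dataclass, field


@dataclass
class Objective:
    """One business objective with its KPIs and attribution rule (hypothetical schema)."""
    name: str
    primary_kpi: str
    secondary_kpis: list = field(default_factory=list)
    attribution_rule: str = "last non-direct click"
    owner: str = ""

    def validate(self):
        # Assumption: the 2-4 KPI guideline counts primary + secondary KPIs.
        total = 1 + len(self.secondary_kpis)
        if not 2 <= total <= 4:
            raise ValueError(f"{self.name}: expected 2-4 KPIs, got {total}")
        if not self.attribution_rule:
            raise ValueError(f"{self.name}: attribution rule must be set up front")
        if not self.owner:
            raise ValueError(f"{self.name}: assign a single KPI owner")


# Values taken from the "Lead generation" row of the table above; the owner is made up.
lead_gen = Objective(
    name="Lead generation",
    primary_kpi="MQLs / Form fills",
    secondary_kpis=["Conversion rate", "CPA", "Assisted conversions"],
    owner="content-lead",
)
lead_gen.validate()  # raises ValueError if the definition breaks a rule
```

Running the validation in a review checklist or CI job would catch objectives that quietly accumulate extra KPIs or lose their owner.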

Instrument Your Pipeline: Tracking & Attribution Setup

Start by treating tracking as code-first infrastructure: standardize identifiers, capture micro-conversions, and make analytics a single source of truth by tying it to CRM records. This prevents attribution drift, accelerates optimization cycles, and gives content teams reliable signals to prioritize topics and formats.

```text
?utm_source=newsletter&utm_medium=email&utm_campaign=may_launch&utm_content=pillar-2025-seo-guide-v1
```

Practical examples and templates

- UTM template: Use the code snippet above and provide a one-click URL generator in editorial tooling.

- Event payload example: `{"event":"cta_click","content_id":"pillar-2025-seo-guide-v1","user_id":"anon_123","timestamp":…}`

- Mismatch between CRM and analytics: Check for lost `content_id` during redirects or cross-domain flows.

- Low-quality event data: Audit naming inconsistencies and duplicate events at the tag manager level.

- Tag manager: centralize event wiring; analytics: configure custom dimensions; CRM: add custom fields for identifiers. Where relevant, consider an AI-powered orchestration platform such as Scaleblogger.com for automated tagging and publishing.
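The one-click URL generator mentioned for the UTM template could be as small as the following sketch. The function name `build_utm_url` and the example base URL are assumptions for illustration; the parameter values mirror the UTM template shown earlier in this section.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_utm_url(base_url, source, medium, campaign, content_id):
    """Append UTM parameters to base_url, keeping existing non-UTM parameters."""
    parts = urlsplit(base_url)
    # Drop any stale utm_* values so the generated link has one consistent set.
    params = [(k, v) for k, v in parse_qsl(parts.query) if not k.startswith("utm_")]
    params += [
        ("utm_source", source),
        ("utm_medium", medium),
        ("utm_campaign", campaign),
        ("utm_content", content_id),  # carries the canonical content identifier
    ]
    return urlunsplit(parts._replace(query=urlencode(params)))


url = build_utm_url(
    "https://example.com/blog/seo-guide?ref=home",  # hypothetical landing page
    source="newsletter",
    medium="email",
    campaign="may_launch",
    content_id="pillar-2025-seo-guide-v1",
)
print(url)
```

Generating links through one function, rather than by hand, is what keeps `utm_content` aligned with the `content_id` used in event payloads and CRM fields.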

Collect & Normalize Data

Collecting and normalizing data means turning messy exports from multiple systems into a single, trustworthy dataset that informs content decisions. Start by aligning time ranges, canonical identifiers, and timezone references; then deduplicate conversion rows and validate that every event maps to a consistent content ID. The objective is a clean daily dataset that answers: which pieces earned traffic, which converted, and which distribution channels drove value.

Prerequisites

- Access: API or export permissions for Analytics, CRM, CMS, Ad platforms, and your automation tool.

- Standard IDs: A canonical `content_id` or `slug` used across systems.

- Time baseline: Agreement on `timezone` (prefer `UTC`) and reporting window.

- Extraction: platform APIs (GA4, HubSpot, WordPress REST API, Facebook Ads API)

- Normalization: Python/pandas or ETL tools (Airbyte, Fivetran) and a staging database

- Validation: simple SQL queries and checksum scripts

Practical examples

- Example CSV header:

- Normalization rule: Map `utm_campaign` → `campaign_name` and trim to 64 chars.

- Dedupe logic: Keep earliest `event_time` for identical `session_id` + `conversion_type`.
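The normalization rule and dedupe logic above can be sketched in plain Python as follows. The row layout (dictionaries with `session_id`, `conversion_type`, `event_time`, and `utm_campaign` keys) and the sample values are assumptions; a real pipeline would more likely run this in pandas or an ETL tool.

```python
from datetime import datetime


def normalize_row(row):
    """Rename utm_campaign to campaign_name and trim it to 64 characters."""
    out = dict(row)
    out["campaign_name"] = out.pop("utm_campaign", "").strip()[:64]
    return out


def dedupe_conversions(rows):
    """Keep the row with the earliest event_time per (session_id, conversion_type)."""
    earliest = {}
    for row in rows:
        key = (row["session_id"], row["conversion_type"])
        # Replace a trailing "Z" so older Python versions can parse the timestamp.
        ts = datetime.fromisoformat(row["event_time"].replace("Z", "+00:00"))
        if key not in earliest or ts < earliest[key][0]:
            earliest[key] = (ts, row)
    return [row for _, row in earliest.values()]


# Made-up sample rows: the first two are duplicates of the same conversion.
rows = [
    {"session_id": "s1", "conversion_type": "form_fill",
     "event_time": "2025-05-28T14:22:03Z", "utm_campaign": " may_launch "},
    {"session_id": "s1", "conversion_type": "form_fill",
     "event_time": "2025-05-28T14:20:00Z", "utm_campaign": "may_launch"},
    {"session_id": "s2", "conversion_type": "purchase",
     "event_time": "2025-05-28T15:00:00Z", "utm_campaign": "x" * 80},
]
clean = [normalize_row(r) for r in dedupe_conversions(rows)]
print(len(clean), clean[0]["event_time"])
```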

| System | Field | Purpose | Example Value |
|---|---|---|---|
| Analytics | event_time | Timestamp for session-level alignment | 2025-05-28T14:22:03Z |
| Analytics | page_path | Link content to CMS slug | /blog/how-to-scale |
| CRM | contact_id | Tie conversions to user records | 0035a00001LxZ9A |
| CRM | deal_close_date | Revenue attribution timing | 2025-05-30 |
| Automation Platform | campaign_id | Map messaging to content outcomes | camp_72f3 |
| Automation Platform | send_time | Correlate sends to traffic spikes | 2025-05-28T13:00:00Z |
| CMS | content_id | Canonical content identifier | cb-2025-001 |
| CMS | word_count | Content quality signal | 1,450 |
| Ad Platform | ad_id | Ad-level spend attribution | 98234 |
| Ad Platform | cost | Paid spend to include in ROI | $312.45 |

Troubleshooting tips

- If totals don’t match, confirm timezone and `event_time` parsing first.

- If many unmatched rows appear, expand matching keys to include `page_path` and `referrer`.
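Because timezone and `event_time` parsing are the first things to check, it can help to route every timestamp through one conversion function. This is a minimal sketch: the name `to_utc` and the `default_offset_hours` parameter are hypothetical, and a fixed offset ignores daylight saving time, so a production version would use named time zones.

```python
from datetime import datetime, timedelta, timezone


def to_utc(timestamp, default_offset_hours=0):
    """Parse an ISO 8601 timestamp and return a timezone-aware UTC datetime.

    Timestamps without an offset are assumed to use default_offset_hours;
    that assumption should match the exporting system's configured time zone.
    """
    parsed = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone(timedelta(hours=default_offset_hours)))
    return parsed.astimezone(timezone.utc)


# Three representations of the same instant from different hypothetical systems.
a = to_utc("2025-05-28T14:22:03Z")          # analytics export, already UTC
b = to_utc("2025-05-28T16:22:03+02:00")     # CRM export with an explicit offset
c = to_utc("2025-05-28T09:22:03", default_offset_hours=-5)  # naive server log
print(a == b == c)
```

If totals still disagree after every source passes through the same function, the mismatch is more likely in matching keys than in time alignment.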

Calculate ROI: Step-by-Step Formulae

Start by attributing revenue where it’s clearest, then layer in attribution sophistication as data permits. For many content teams, a straight revenue-attribution formula gives quick, defensible ROI; multi-touch or algorithmic models follow when channels and touchpoints multiply. The approach below converts engagement and attribution metrics into business-ready ROI statements that stakeholders understand.

Practical formulae and examples

- Basic revenue attribution: assign revenue from tracked conversions directly to content: `Content Revenue = Tracked Conversions × Average Order Value`

- Normalized ROI: `ROI = (Content Revenue - Total Content Cost) / Total Content Cost`

- Multi-touch weighted revenue: allocate revenue by touchpoint weight: `Weighted Revenue = Σ (Revenue × Touchpoint Weight_i)`

- Lifetime value adjustment: use `LTV` for subscription or repeat-purchase models: `Adjusted Revenue = Tracked Conversions × LTV`

```text
Content Revenue = 120 * $80 = $9,600
ROI = ($9,600 - $6,000) / $6,000 = 0.6 → 60%
```
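The basic, normalized, and LTV-adjusted formulae can be wrapped in small functions so the same arithmetic runs in every report. This is a sketch using the worked numbers above (120 conversions, $80 average order value, $6,000 cost); the function names and the $240 LTV figure are invented for illustration.

```python
def content_revenue(tracked_conversions, average_order_value):
    """Content Revenue = Tracked Conversions x Average Order Value."""
    return tracked_conversions * average_order_value


def adjusted_revenue(tracked_conversions, ltv):
    """Adjusted Revenue = Tracked Conversions x LTV (subscription or repeat purchase)."""
    return tracked_conversions * ltv


def roi(revenue, total_cost):
    """ROI = (Revenue - Total Content Cost) / Total Content Cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (revenue - total_cost) / total_cost


revenue = content_revenue(120, 80)           # 9600
basic_roi = roi(revenue, 6000)               # the worked example: 60%
ltv_roi = roi(adjusted_revenue(120, 240), 6000)  # 240 is a hypothetical LTV
print(f"basic ROI: {basic_roi:.0%}, LTV-adjusted ROI: {ltv_roi:.0%}")
```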

Attribution model comparison for choosing the right method

| Attribution Model | Simplicity | Data Requirements | Best Use Case |
|---|---|---|---|
| Last-Click | Very simple | Minimal: conversion path logs | Short funnel, few touchpoints |
| First-Click | Very simple | Minimal: entry source data | Brand-awareness campaigns |
| Linear | Moderate | Full path data across sessions | Consistent cross-channel influence |
| Time-Decay | Moderate | Timestamped touchpoint sequences | Short sales cycles with recency bias |
| Algorithmic/ML | Complex | Full-funnel signals + modeling | Enterprise multi-channel optimization |
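To make the rule-based models in the table concrete, the sketch below computes touchpoint weights for last-click, first-click, linear, and a simplified time-decay model, then allocates revenue with `Weighted Revenue = Σ (Revenue × Touchpoint Weight_i)`. It rests on several assumptions: weights are normalized to sum to 1, time-decay uses position in the path as a stand-in for real timestamps, and the `half_life` parameter and touchpoint names are hypothetical.

```python
def attribution_weights(n_touches, model, half_life=2.0):
    """Return one weight per touchpoint (oldest first), normalized to sum to 1."""
    if n_touches < 1:
        raise ValueError("need at least one touchpoint")
    if model == "last_click":
        raw = [0.0] * (n_touches - 1) + [1.0]
    elif model == "first_click":
        raw = [1.0] + [0.0] * (n_touches - 1)
    elif model == "linear":
        raw = [1.0] * n_touches
    elif model == "time_decay":
        # A touch's weight halves for every half_life steps before the conversion.
        raw = [0.5 ** ((n_touches - 1 - i) / half_life) for i in range(n_touches)]
    else:
        raise ValueError(f"unknown model: {model}")
    total = sum(raw)
    return [w / total for w in raw]


def allocate_revenue(revenue, touchpoints, model):
    """Split revenue across touchpoints according to the chosen model."""
    weights = attribution_weights(len(touchpoints), model)
    credit = {}
    for touchpoint, weight in zip(touchpoints, weights):
        credit[touchpoint] = credit.get(touchpoint, 0.0) + revenue * weight
    return credit


path = ["blog_post", "newsletter", "webinar", "pricing_page"]  # made-up path
for model in ("last_click", "first_click", "linear", "time_decay"):
    print(model, allocate_revenue(1000.0, path, model))
```

Comparing the four allocations for the same path is a quick way to see how much a reported content ROI depends on the model rather than on the data.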

Practical tips and warnings

- Start simple: run last-click pilots to validate measurement flows before complex attribution.

- Estimate conservatively: when using LTV or multi-touch weights, apply a 10–30% uncertainty margin for decision-making.

- Automate reporting: integrate attribution into dashboards to spot trends quickly; consider workflow tooling such as Scaleblogger to reduce manual reconciliation.
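The conservative-estimate tip can be expressed as an ROI range rather than a single number. This sketch assumes the uncertainty margin is applied to the revenue estimate (the text does not say which quantity to discount), and the function name `roi_range` is hypothetical.

```python
def roi_range(revenue, total_cost, margin=0.2):
    """Return (low, expected, high) ROI with revenue varied by +/- margin."""
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")

    def _roi(rev):
        return (rev - total_cost) / total_cost

    return _roi(revenue * (1 - margin)), _roi(revenue), _roi(revenue * (1 + margin))


# Reusing the worked example: $9,600 revenue, $6,000 cost, 20% margin.
low, expected, high = roi_range(9600, 6000, margin=0.2)
print(f"ROI between {low:.0%} and {high:.0%} (point estimate {expected:.0%})")
```

Decisions that only make sense at the high end of the range are the ones worth validating with a further experiment before scaling.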

Run the Measurement Plan: Step-by-Step Implementation

Begin by putting the plan into motion with a disciplined, repeatable sequence. Execute tracking, validate data quality, run calculations, and present findings so decisions follow evidence rather than intuition. The process below turns measurement design into operational reality and ensures stakeholders can trust the numbers.

Prerequisites

- Access: Admin-level access to analytics, tag manager, and data warehouse.

- Instrumentation checklist: Event names, parameters, and expected values documented.

- Owner alignment: Clear assignment of who owns each metric and cadence for reporting.

- Analytics platform: `GA4`, `Mixpanel`, or `Adobe Analytics`

- Tag manager: `Google Tag Manager` or equivalent

- Query engine: `BigQuery`, `Snowflake`, or your SQL-ready warehouse

- Visualization: `Looker`, `Looker Studio` (formerly `Data Studio`), or a BI tool

Example SQL snippet for a simple conversion rate

```sql
-- Daily conversion rate: purchase events per view_item event.
SELECT
  date,
  -- 1.0 forces decimal division in engines that truncate integer division.
  SUM(CASE WHEN event_name = 'purchase' THEN 1.0 ELSE 0 END)
    / NULLIF(SUM(CASE WHEN event_name = 'view_item' THEN 1 ELSE 0 END), 0) AS conversion_rate
FROM events
WHERE date BETWEEN '2025-01-01' AND '2025-01-31'
GROUP BY date;
```

Presenting results

- Bold visualization: lead with a one-slide summary card that answers the business question.

- Context row: show expected vs. actual, confidence intervals, and known tracking caveats.

- Action layer: attach recommended experiments or content changes.

Interpret Results & Present Findings to Stakeholders

Begin by translating metrics into business impact: state the revenue uplift, change in CPA, or customer LTV delta before diving into technical attribution caveats. Stakeholders care about dollars and decisions, not raw charts. Be explicit about where results are confident and where attribution is probabilistic so decision-makers can act with appropriate risk assumptions.

Start here: convert outcomes into three headline metrics—revenue impact, cost-per-acquisition (CPA) movement, and predicted ROI—then walk through what drove those numbers.
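The three headline metrics can be derived from two period summaries, as in this sketch. The input figures are invented so that the output matches the illustrative +$45,000 revenue change and -12% CPA change used in the reporting table below; the dictionary keys are hypothetical, and the ROI shown is the realized figure for the current period, since a predicted ROI would need a forecasting model.

```python
def cpa(spend, acquisitions):
    """Cost per acquisition."""
    if acquisitions <= 0:
        raise ValueError("acquisitions must be positive")
    return spend / acquisitions


def headline_metrics(previous, current):
    """Compare two periods, each a dict with revenue, spend, and acquisitions."""
    prev_cpa = cpa(previous["spend"], previous["acquisitions"])
    curr_cpa = cpa(current["spend"], current["acquisitions"])
    return {
        "revenue_impact": current["revenue"] - previous["revenue"],
        "cpa_change": (curr_cpa - prev_cpa) / prev_cpa,
        "roi": (current["revenue"] - current["spend"]) / current["spend"],
    }


# Made-up period summaries for illustration.
previous = {"revenue": 100_000, "spend": 20_000, "acquisitions": 400}
current = {"revenue": 145_000, "spend": 22_000, "acquisitions": 500}
summary = headline_metrics(previous, current)
print(f"Revenue impact: {summary['revenue_impact']:+,}")
print(f"CPA change: {summary['cpa_change']:+.0%}")
print(f"ROI: {summary['roi']:.1f}x")
```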

Prerequisites

- Access: latest analytics exports (GA4/CSV), ad-platform spend reports, CMS engagement data.

- Alignment: agreed attribution model and reporting window.

- Audience: list of stakeholders and their decision scope.

- Data extracts: GA4, Google Ads, CRM revenue exports.

- Visualization: Tableau/Looker/Looker Studio (formerly Google Data Studio).

- Template: slide deck and appendix CSVs (prepared for audit).

How to present results (step-by-step)

Reporting and Recommendations table

| Slide Title | Content | Data Visual | Takeaway |
|---|---|---|---|
| Executive summary | Monthly revenue change: +$45,000; CPA change: -12% | Area chart: revenue vs. spend | Quick decision metric: invest in channel A |
| Methodology & attribution | Attribution: probabilistic MMM + last-click layer; confidence ±8% | Sankey + confidence band | Attribution uncertainty documented |
| Top performing assets | Top 5 posts: page, conversions, revenue | Bar chart: revenue by asset | Focus content refresh on top 3 |
| Recommendations & next steps | 3 steps: scale paid, refresh content, test landing pages; cost $12k | Gantt + ROI forecast | Expected 3x ROI in 90 days |
| Appendix: raw data | GA4 exports, ad spend CSVs, model code snippets | Tables & downloadable CSV | Auditable evidence for stakeholders |

When proposing next steps, tie each recommendation to a dollar forecast and required resources so leaders can approve quickly. Understanding these principles helps teams move faster without sacrificing rigor.

Troubleshooting Common Issues

Start by isolating the failure mode: whether tracking, attribution, or data ingestion is broken. Reproduce the symptom, capture logs, then run targeted diagnostics—this prevents chasing transient noise. Below are repeatable steps, validation techniques, and escalation paths that fit a production content measurement pipeline.

Prerequisites

- Access: analytics account with debug permissions, CRM import logs, publishing platform logs

- Tools: GA debug view or `gtm.debug` console, CRM CSV sample, network tab, API client `curl`

- Time estimate: 10–60 minutes per issue depending on complexity

Common Problems and Fixes

Map problem → symptom → diagnostic step → fix

| Problem | Symptom | Quick Diagnostic | Fix |
|---|---|---|---|
| Missing UTM | Sessions show `direct` or `referral` instead of campaign | Check landing page URL in server logs and GA debug view for absent `utm_*` params | Append `utm_source/medium/campaign`, enforce canonical redirects preserving query strings |
| Duplicate content IDs | Page-level events tied to two IDs; inflated event counts | Inspect page HTML and `data-content-id` with browser console; compare to CMS export | Normalize to single `content_id`, deploy redirect or CMS template fix |
| Analytics sampling | Reports show sampling warning; metrics unstable | Open GA report sampling indicator or check BigQuery query limits | Use unsampled exports (BigQuery), reduce date range, or increase property quota |
| CRM import mismatch | Contacts fail import or fields misaligned | Review CRM import logs and sample CSV rows vs. field map | Align header names/types, use consistent `email` or `external_id`, re-run import with dry-run first |
| Time zone discrepancies | Dayparting reports off by several hours | Verify GA property time zone and server timestamps in logs | Standardize on UTC for ingestion, adjust reporting layer timezone conversions |

- Confirm event appears in GA debug view within 30 seconds

- Compare pre/post row counts in CRM import summary

- Run the same test across 3 different networks/browsers

- Log a timestamped screenshot and network HAR for audit

- Analytics engineer: for tag/container regressions or sampling fixes

- CRM admin: for mapping, deduplication, or import reconfiguration

- Platform ops: for server-side redirects and time zone standardization
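The "Missing UTM" diagnostic in the table above can be partly automated by scanning landing-page URLs from server logs. This is a sketch only: the function name `missing_utm_params` and the sample URLs are hypothetical, and the required set mirrors the `utm_source/medium/campaign` trio named in the fix column.

```python
from urllib.parse import parse_qs, urlsplit

REQUIRED_UTM = ("utm_source", "utm_medium", "utm_campaign")


def missing_utm_params(url):
    """Return the required UTM parameters that are absent or empty in url."""
    # parse_qs drops blank values by default, so "utm_source=" counts as missing.
    query = parse_qs(urlsplit(url).query)
    return [param for param in REQUIRED_UTM if not query.get(param)]


# Made-up landing URLs, e.g. as they might appear after a redirect.
landing_urls = [
    "https://example.com/blog/post?utm_source=newsletter&utm_medium=email&utm_campaign=may_launch",
    "https://example.com/blog/post?utm_source=newsletter&utm_medium=email",
    "https://example.com/blog/post",
]
for url in landing_urls:
    print(missing_utm_params(url) or "ok", url)
```

Running the check on URLs captured before and after a redirect shows whether the redirect is the step that strips the query string.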


Tips for Success & Pro Tips

Start by treating content operations as a production system: define predictable inputs, remove bottlenecks, and measure quality at every handoff. Automate routine tasks, enforce clear naming and version control, and present results with uncertainty built into reporting so stakeholders make better decisions.

Prerequisites

- Team alignment: agree on KPIs, publishing cadence, and ownership.

- Baseline data: a 3–6 month performance snapshot of traffic, CTR, and conversions.

- Access controls: single source of truth for content assets (CMS + cloud storage).

- Version control: Git or a CMS with revision history.

- Automation platform: an orchestration tool or an AI content pipeline (consider `Scaleblogger.com` for accelerating workflows).

- Analytics: Google Analytics / GA4, Search Console, and a reporting spreadsheet or BI tool.

- Naming convention template: a short `site-section_slug_v01_yyyymmdd` spec.

- Automate QA checks: run spellcheck, broken-link scans, and metadata completeness checks on each pull request or before publishing.

- Automate scheduling: use an automated scheduler to avoid manual publish errors and to maintain a steady cadence.

- Outcome: lower human error and predictable throughput.

- Tag releases: tag content rollouts with `vX.Y` so performance experiments map to specific versions.

- Outcome: traceability between changes and performance.

- Include ranges: report metrics as `mean ± CI` where practical, or provide week-over-week ranges for small samples.

- A/B experiments: always accompany lift claims with sample size and p-values or Bayesian credible intervals.

- Outcome: stakeholders form realistic expectations and overreact less to noise.

- Use clear slugs: short, intent-driven URLs improve discoverability.

- Automate summaries: generate executive summaries from analytics weekly.

- Build topic clusters: group content by intent and internal-link systematically.
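For the "mean ± CI" reporting tip above, a normal-approximation interval is often enough for a first pass. The sketch below uses only the standard library; the function name and sample values are invented, and with small samples a t-distribution interval would be wider and more appropriate than this approximation.

```python
from statistics import NormalDist, mean, stdev


def mean_with_ci(values, confidence=0.95):
    """Return (mean, half_width) using a normal approximation to the CI."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(values) / len(values) ** 0.5
    return mean(values), half_width


weekly_conversions = [100, 110, 90, 105, 95]  # made-up weekly figures
m, hw = mean_with_ci(weekly_conversions)
print(f"{m:.1f} ± {hw:.1f} conversions per week (95% CI, normal approx.)")
```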

Next Steps: Operationalizing ROI Measurement

Start by assigning clear owners, automating as much of the pipeline as possible, and locking a review cadence that forces learning. That combination removes ambiguity about who tracks what, reduces the time analysts spend on repetitive reporting, and creates a regular feedback loop for improving attribution and content investments.

Prerequisites and tools

- Defined goals: revenue, leads, assisted conversions, or engagement.

- Instrumentation stack: `GA4` or server-side analytics, `BigQuery`/data warehouse, CRM integration, and campaign `UTM` standards.

- Automation platform: ETL tool or scheduling (Airflow, Fivetran, or built-in publisher APIs).

- Reporting layer: Looker Studio, Tableau, or a lightweight dashboarding tool.

90-day rollout: step-by-step

- Automation first: Move routine reporting to scheduled queries and dashboards within 30 days.

- Review cadence: Weekly slice reviews, monthly stakeholder reviews, quarterly strategy adjustments.

- Content measurement playbook (roles, data sources, definitions).

- Scheduled dashboards with drill-downs to campaigns and landing pages.

- Training session for PMs and writers on reading ROI dashboards.

| Timeline | Milestone | Owner | Success Criteria |
|---|---|---|---|
| Week 1-2 | Define KPIs, owners, and instrumentation | Content Lead | KPIs documented; `UTM` taxonomy set; ownership sheet signed |
| Week 3-4 | Build ETL to warehouse and baseline dashboards | Data Engineer | Automated ETL runs; dashboard shows last 30 days data |
| Month 2 | Implement attribution model and CRM join | Analytics Lead | First attribution model validated; CRM match rate > 80% |
| Month 3 | Automate weekly reports and stakeholder training | Growth Manager | Weekly reports auto-delivered; 2 training sessions completed |
| Ongoing | Recalibrate models and continuous optimization | Measurement Owner | Quarterly model updates; ROI-informed content roadmap live |
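The Month 2 success criterion (CRM match rate > 80%) needs an agreed definition before it can be checked. One possible definition, used in this sketch, is the share of distinct identifiers seen in analytics conversions that also exist in the CRM; the function name and sample identifiers are hypothetical.

```python
def crm_match_rate(analytics_ids, crm_ids):
    """Share of distinct analytics identifiers that also appear in the CRM."""
    analytics = set(analytics_ids)
    if not analytics:
        raise ValueError("no analytics identifiers to match")
    return len(analytics & set(crm_ids)) / len(analytics)


# Made-up identifiers: 4 of the 5 analytics conversions have a CRM record.
analytics_ids = ["u1", "u2", "u3", "u4", "u5", "u5"]
crm_ids = ["u1", "u2", "u3", "u4", "u9"]
rate = crm_match_rate(analytics_ids, crm_ids)
print(f"CRM match rate: {rate:.0%}, meets target: {rate > 0.80}")
```

Note that a rate of exactly 80% does not satisfy a strict "> 80%" target, so the threshold comparison is worth agreeing on with the measurement owner.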

Operationalizing ROI measurement is an execution problem as much as a technical one—clear roles and automation remove friction, while a steady review rhythm converts data into decisions. When teams adopt this approach, measurement becomes a driver of content strategy rather than a retrospective chore.

Conclusion

By aligning measurement with content production, teams stop guessing and start optimizing. Centralize attribution, automate repetitive tasks, and focus on topics that demonstrably move pipeline — those steps reduce wasted output and reveal growth opportunities. For example, teams that automated attribution and prioritized conversion-driving topics often reallocated budget to higher-ROI campaigns and shortened time-to-lead; another group that standardized briefs and templates cut content turnaround by weeks while improving engagement rates.

Expect initial setup to take a few weeks, with measurable wins appearing within one to two quarters. If you are wondering what to track first, prioritize leads influenced, conversion rate by topic, and content-to-pipeline velocity. Begin by auditing where manual work piles up, then standardize briefs, instrument attribution, and automate reporting so analysis becomes a routine deliverable rather than an afterthought. For teams looking to accelerate that transition, platforms like Scaleblogger can streamline orchestration and make measurement repeatable.

Next steps: run a two-week audit, create three standardized templates, and schedule the first automated attribution report. Those actions surface low-effort wins fast and create momentum for broader content transformation.