Key Takeaways

- How AI content insights turn raw metrics into actionable editorial decisions

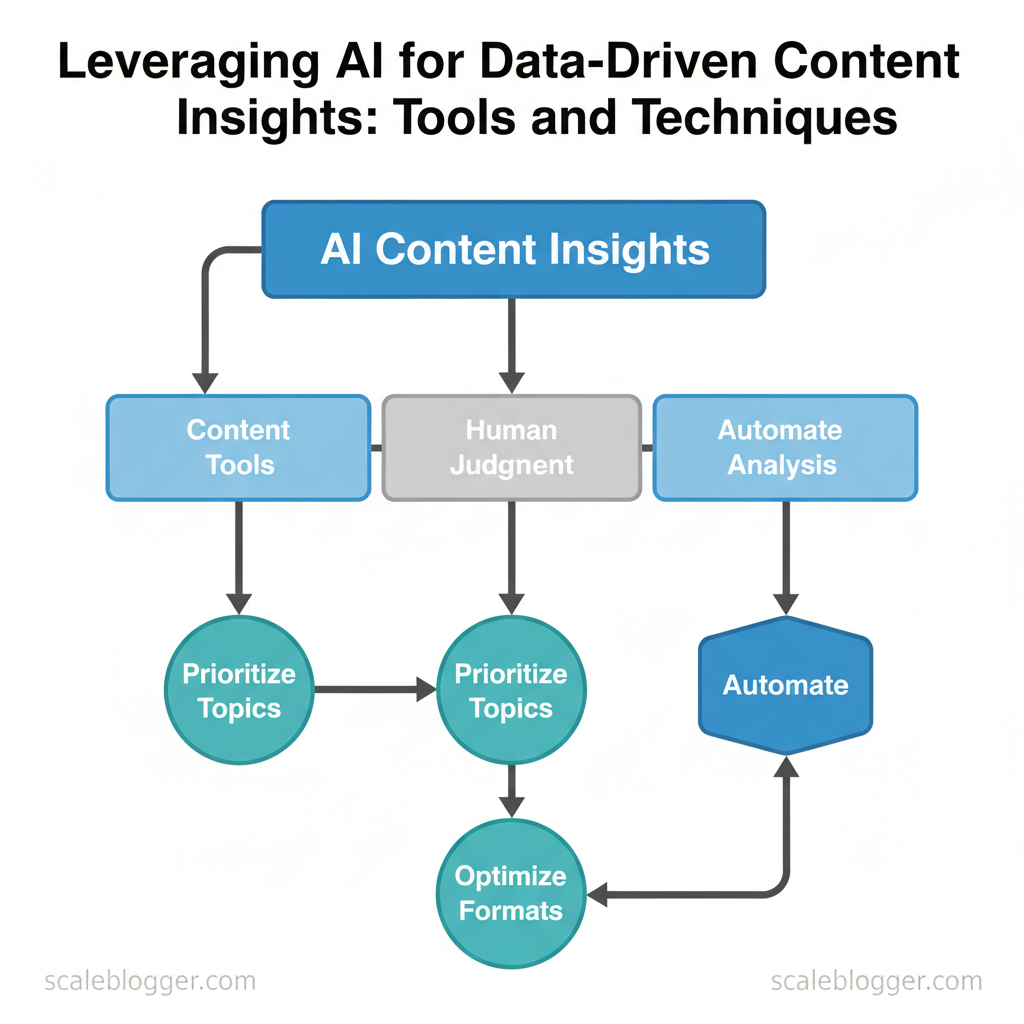

- Practical ways to combine content analytics tools with human judgment

- Techniques to scale testing and personalization without bloating workflows

- Measurable outcomes from data-driven content: traffic lift, engagement, conversion

- How Scaleblogger integrates AI and automation into content operations

These points matter because data-driven content can directly improve ROI: faster topic validation, smarter distribution, and personalized experiences that increase time on page and conversion rates. Picture a content program that uses AI to surface a recurring user intent, automatically tests three headline variants, and lifts click-through by double digits within weeks.

Industry practitioners recommend layering automated insight with editorial judgment to avoid overfitting to short-term trends. The following sections show concrete workflows, tool choices, and guardrails for scaling AI-driven content processes. Start an AI-driven content pilot with Scaleblogger: https://scaleblogger.com

— Why AI Changes the Game for Content Insights

AI transforms content insight from occasional intuition into continuous, measurable advantage. Advanced models and automation make it possible to scan keywords, competitor signals, user intent, and on-page performance at scale, then surface prioritized actions that editors can execute. That changes how teams plan, decide, and measure content: ideation stops being a guessing game and becomes a data-driven pipeline that still depends on human judgment to craft the final narrative.

What follows are the concrete ways AI shifts the work, along with realistic expectations and guardrails. The payoff is practical (faster ideation, clearer prioritization, and measurable ROI), but only when AI is paired with editorial craft and verification.

— Business benefits of AI-driven content insights

AI shortens research cycles, improves topic selection, and increases content throughput while preserving quality through automation and scoring. These systems typically combine NLP for topic clustering, SERP analysis for intent signals, and performance forecasting to rank opportunities by potential impact. Integrating an AI content pipeline lets teams move from low-confidence guesses to a prioritized backlog of high-probability wins.

- Faster ideation: Generate and validate dozens of topic angles in minutes.

- Data-backed prioritization: Rank topics by estimated traffic, difficulty, and commercial intent.

- Improved ROI: Focus resources where uplift probability is highest; reduce wasted briefs.
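The prioritization step can be sketched as a simple scoring function. This is a minimal illustration, not how any particular platform ranks topics: the `Topic` fields, the 0–1 scales for difficulty and commercial intent, and the formula itself (traffic discounted by difficulty, boosted by intent) are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Topic:
    name: str
    est_monthly_traffic: int  # estimated monthly search visits (from your SEO tool)
    difficulty: float         # assumed scale: 0 = easy to rank, 1 = very hard
    commercial_intent: float  # assumed scale: 0 = purely informational, 1 = ready to buy


def priority_score(topic: Topic, intent_weight: float = 0.5) -> float:
    """Illustrative score: traffic discounted by difficulty, boosted by intent."""
    reachable_traffic = topic.est_monthly_traffic * (1 - topic.difficulty)
    return reachable_traffic * (1 + intent_weight * topic.commercial_intent)


def rank_topics(topics: list[Topic]) -> list[Topic]:
    """Highest-priority topics first."""
    return sorted(topics, key=priority_score, reverse=True)


backlog = [
    Topic("ai content brief template", 900, 0.3, 0.6),
    Topic("what is content analytics", 2400, 0.8, 0.1),
    Topic("content scoring tool comparison", 600, 0.4, 0.9),
]

for topic in rank_topics(backlog):
    print(f"{priority_score(topic):7.1f}  {topic.name}")
```

With these made-up inputs, the high-volume but hard "what is content analytics" topic drops to last place, which is exactly the kind of trade-off a prioritization score is meant to surface.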

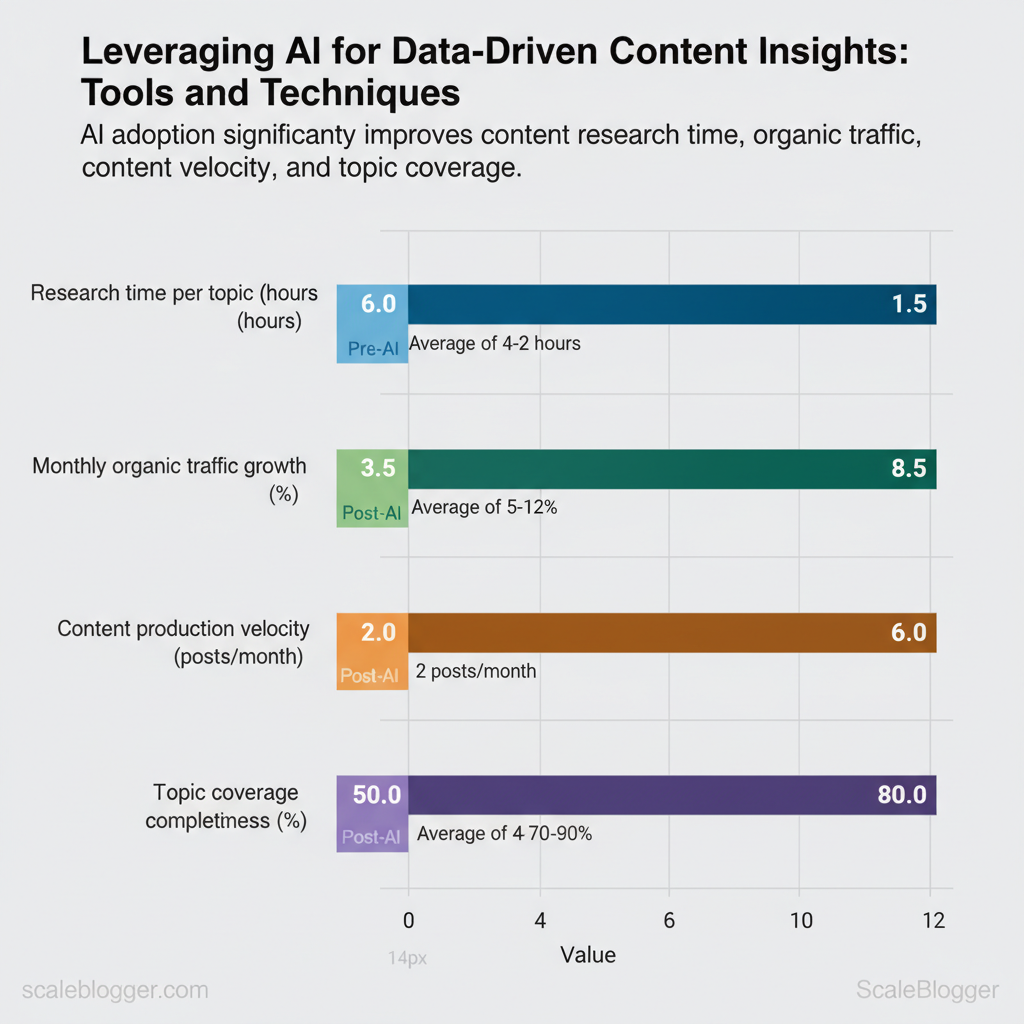

| Metric | Typical pre-AI value | Typical post-AI value | Notes |
|---|---|---|---|
| Research time per topic | 4–8 hours | 1–2 hours | AI-assisted briefs, source aggregation |
| Monthly organic traffic growth | 2–5% | 5–12% | Ongoing optimization and topic targeting |
| Content production velocity | 2 posts/month | 4–8 posts/month | Faster briefs + automated scheduling |
| Topic coverage completeness | 40–60% | 70–90% | Better cluster mapping and gap analysis |
| Time to identify content gaps | 30–60 days | 1–7 days | Continuous monitoring and alerts |

Practical example: a SaaS blog used an AI workflow to surface under-served long-tail topics, reducing research time per post from 6 hours to 90 minutes and doubling monthly publishing output. Tools that automate briefing and scheduling, including proprietary solutions like the AI content automation offered at Scaleblogger.com, accelerate that shift.

— Common misconceptions and realistic expectations

AI augments — it does not replace editorial judgment. Expect faster pattern detection, not perfect writing. Models reveal trends and surface opportunities, but human editors still shape voice, verify facts, and make nuanced strategic calls.

Practical verification step:

```bash
# Simple content-gap query pattern
# (query_model is a placeholder for your content-gap tool's CLI)
search_terms="brand keyword + intent phrase"
query_model --cluster --serp --traffic_estimate "$search_terms"
```

When implemented with clear processes and human oversight, AI-driven insights move teams faster and focus effort where it matters. Understanding these principles helps teams scale without losing editorial quality.
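Because `query_model` in the verification step is not a real tool, here is a tool-agnostic sketch of the underlying gap check. It assumes you have already extracted subtopic labels for your own page and for a few competing pages (for example, from SERP headings). It counts how many competitors cover each subtopic, reports the ones you are missing, and computes a simple coverage ratio comparable to the "topic coverage completeness" metric in the table. The `min_competitors` cut-off is an assumption.

```python
from collections import Counter


def content_gaps(ours: set[str], competitors: list[set[str]], min_competitors: int = 2):
    """Return (coverage ratio, [(missing subtopic, competitor count), ...])."""
    counts = Counter(topic for page in competitors for topic in page)
    gaps = [
        (topic, n)
        for topic, n in counts.items()
        if topic not in ours and n >= min_competitors
    ]
    gaps.sort(key=lambda item: (-item[1], item[0]))  # most widely covered first
    universe = set(counts) | ours                     # every subtopic anyone covers
    coverage = len(ours) / len(universe) if universe else 1.0
    return coverage, gaps


ours = {"pricing", "setup guide", "integrations"}
competitors = [
    {"pricing", "setup guide", "api limits", "migration"},
    {"pricing", "api limits", "security"},
    {"migration", "api limits", "integrations"},
]
coverage, gaps = content_gaps(ours, competitors)
print(f"coverage: {coverage:.0%}")
for topic, n in gaps:
    print(f"missing '{topic}' (covered by {n} competitors)")
```

Subtopics covered by only one competitor (here, "security") are filtered out by the threshold; lowering `min_competitors` to 1 would include them.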

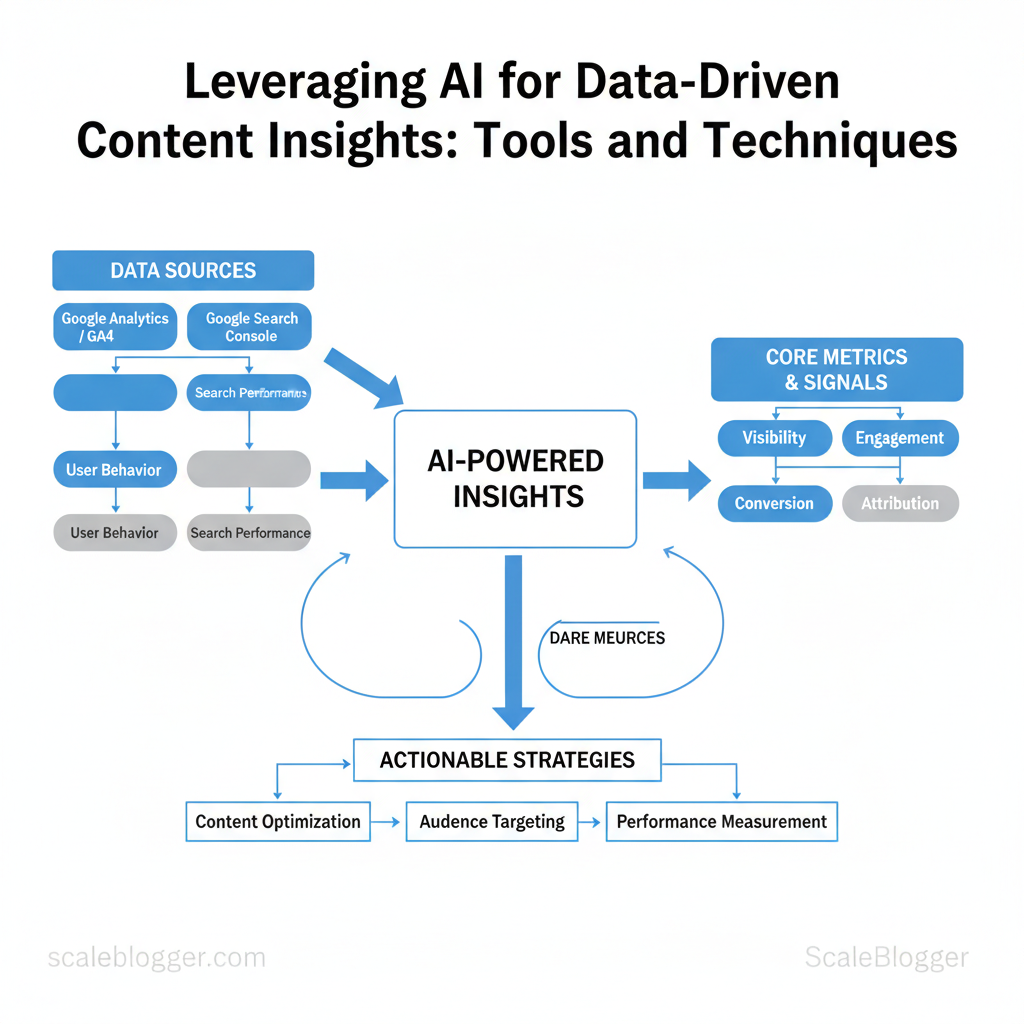

— Core Metrics and Signals for Data-Driven Content

Start by tracking a tight set of metrics that link visibility, engagement, and conversion; these are the signals that tell whether content is discoverable, useful, and aligned with intent. Focus on metrics that are measurable in Google Analytics 4 (GA4) and Google Search Console, because together they combine user behavior with search performance; then layer in content-quality signals derived from NLP and path analysis to prioritize work. Practical examples: a page with steady impressions but CTR below 1.5% usually needs a title and meta description rewrite; a page with high time on page but zero conversions may have a mismatched CTA or offer.

— SEO and engagement metrics to monitor

- Organic traffic: absolute visits from organic search; look for month-over-month trends and seasonal baselines.

- Impressions → CTR: how often your snippet shows vs how often it’s clicked; low CTR with high impressions signals poor metadata.

- Average time on page: indicates engagement depth; pair it with scroll depth so that idle time in an open tab is not mistaken for interest.

- Bounce rate / scroll depth: quick exits or low scroll depth often indicate mismatched intent or poor structure.

- Goal conversion rate: final measure of whether content drives business actions.

| Metric | What it measures | Actionable threshold / red flag | Recommended action |
|---|---|---|---|
| Organic traffic | Visits from search engines | Decline >15% MoM on core pages | Audit content + backlinks; update SERP-targeted headings |
| Impressions → CTR | Visibility vs clicks | CTR <1.5% with >1k impressions | Rewrite title/meta; test schema or FAQ snippets |
| Average time on page | Engagement duration | <40s on long-form >1,200 words | Improve intro, add TL;DR, restructure for skimmability |
| Bounce rate / scroll depth | Immediate exits vs engagement depth | Bounce >70% or scroll <25% | Fix intent mismatch, add internal links, visual anchors |
| Goal conversion rate | Business actions per visit | <0.5% on conversion pages | Optimize CTA, add social proof, A/B test forms |
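The thresholds in the table translate directly into rules. A minimal sketch, assuming you have already joined GA4 and Search Console exports into one record per page; the `PageMetrics` field names are hypothetical, and the table's "core pages" qualifier is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    url: str
    organic_mom_change: float  # -0.20 means organic traffic fell 20% month over month
    impressions: int
    ctr: float                 # 0.012 means 1.2%
    avg_time_on_page_s: float
    word_count: int
    bounce_rate: float         # 0-1
    scroll_depth: float        # share of the page scrolled, 0-1
    conversion_rate: float     # 0-1
    is_conversion_page: bool = False


def red_flags(p: PageMetrics) -> list[str]:
    """Apply the table's red-flag thresholds and return recommended actions."""
    flags = []
    if p.organic_mom_change < -0.15:
        flags.append("traffic decline: audit content and backlinks")
    if p.impressions > 1_000 and p.ctr < 0.015:
        flags.append("low CTR: rewrite title/meta, test schema or FAQ snippets")
    if p.word_count > 1_200 and p.avg_time_on_page_s < 40:
        flags.append("short engagement on long-form: improve intro, add TL;DR")
    if p.bounce_rate > 0.70 or p.scroll_depth < 0.25:
        flags.append("possible intent mismatch: add internal links, visual anchors")
    if p.is_conversion_page and p.conversion_rate < 0.005:
        flags.append("weak conversion: optimize CTA, A/B test forms")
    return flags


page = PageMetrics("/blog/ai-briefs", -0.22, 4_800, 0.011, 95, 1_800, 0.55, 0.60, 0.002)
for flag in red_flags(page):
    print(flag)
```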

— Behavioral and content-quality signals AI can detect

- Session-path analysis: reconstructs common click sequences; use it to find intent mismatches where users bounce to competitors.

- Topic-cluster detection with NLP: extracts subtopics and shows coverage gaps; useful when consolidating short articles into authoritative hubs.

- Content scoring: combines readability, topical depth, and entity coverage into a single `score` to rank pages by fix-priority.
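At its core, session-path analysis is counting page sequences. The sketch below assumes each session can be exported as an ordered list of page paths (GA4 path exploration or a BigQuery export are typical sources, but the input format here is an assumption). It counts the most frequent consecutive sequences and the pages where sessions most often end; a content page that frequently ends sessions is a candidate for an intent-mismatch review.

```python
from collections import Counter


def top_paths(sessions: list[list[str]], length: int = 2, n: int = 5):
    """Most common runs of `length` consecutive pages across all sessions."""
    counts = Counter()
    for pages in sessions:
        for i in range(len(pages) - length + 1):
            counts[tuple(pages[i:i + length])] += 1
    return counts.most_common(n)


def exit_pages(sessions: list[list[str]]):
    """Pages where sessions end, most frequent first."""
    return Counter(pages[-1] for pages in sessions if pages).most_common()


sessions = [
    ["/", "/blog/ai-insights", "/pricing"],
    ["/blog/ai-insights", "/pricing", "/signup"],
    ["/", "/blog/ai-insights", "/pricing", "/signup"],
    ["/blog/content-scoring"],
]
print(top_paths(sessions, length=2, n=1))
print(exit_pages(sessions))
```

In this toy data, "/blog/content-scoring" appears only as a single-page session, which is the pattern worth checking against the page's target intent.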

— Essential Tools: AI Platforms and Analytics Stacks

Start with the platforms that convert signals into action: pick AI systems for creative expansion and analytics stacks for measurable outcomes. Modern content teams separate ideation from validation—use generative AI to scale concept generation quickly, then apply pattern-based analytics to prioritize ideas with traffic and conversion potential. This two-step approach reduces waste: it produces a broad set of topical directions with `ChatGPT`-style models and then filters those ideas using keyword and gap analysis from tools like Ahrefs or SEMrush.

— Tools for topic discovery and content ideation

Generative models are best when novelty and speed matter; pattern-based tools win when you need evidence and positioning. Use AI to draft topic clusters, headlines, and angle variations; then run those outputs through analytics-driven tools to test search demand and competitive difficulty.

- Prompt and filter tips: Be specific—start prompts with `Write 10 blog topics for X audience targeting intent Y`; add constraints like target word count or competitor examples; use iterative prompting to refine intent.

- Operational workflow:
  1. Generate 30 raw ideas with an AI model.
  2. Deduplicate and cluster using NLP tools.
  3. Score by search demand and content gap.
  4. Create briefs for high-priority cluster items.
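The deduplicate-and-cluster step can be prototyped without a dedicated NLP tool. The sketch below swaps in a deliberately simple technique, token-overlap (Jaccard) similarity with greedy clustering, in place of the embedding-based clustering a real NLP tool would use. The stop-word list and the 0.5 threshold are assumptions to tune on your own idea lists.

```python
import re

STOP_WORDS = {"a", "an", "the", "for", "to", "of", "and", "in", "how", "with"}


def tokens(title: str) -> set[str]:
    """Lowercase word tokens minus common stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", title.lower()) if w not in STOP_WORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def cluster_ideas(ideas: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy single pass: join the first cluster whose seed is similar enough."""
    clusters: list[list[str]] = []
    seeds: list[set[str]] = []
    for idea in dict.fromkeys(i.strip() for i in ideas):  # drops exact duplicates, keeps order
        idea_tokens = tokens(idea)
        for seed, cluster in zip(seeds, clusters):
            if jaccard(idea_tokens, seed) >= threshold:
                cluster.append(idea)
                break
        else:
            seeds.append(idea_tokens)
            clusters.append([idea])
    return clusters


ideas = [
    "How to write an AI content brief",
    "AI content brief template",
    "How to write an AI content brief",  # exact duplicate from a second prompt run
    "Measuring content ROI with GA4",
    "Content ROI measurement in GA4",
]
for cluster in cluster_ideas(ideas):
    print(cluster)
```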

— Tools for content performance analysis and CRO

Predictive tools forecast potential ROI; descriptive tools explain what already happened. Use both: predictive AI models estimate traffic lift from targeting a keyword cluster, while analytics stacks (GA4, Looker, BigQuery) provide conversion paths and channel attribution.

- Predictive vs descriptive: Predictive tools use historical patterns and machine learning to estimate future impressions and conversions; descriptive tools report sessions, bounce, and funnel metrics.

- Integration tips: Sync your CMS with analytics via the GA4 Measurement Protocol, export content metadata to BigQuery, and feed performance labels back into your content pipeline for model retraining.

- AI alerts and workflows: Set thresholds for automated editorial alerts (e.g., pages whose traffic drops by more than 30%, or pages with conversion rate >X) and flag matching pages for priority updates. Use webhook-driven notifications to create editorial tickets automatically.
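A minimal sketch of the traffic-drop alert, using only the Python standard library. The webhook URL and payload fields are hypothetical placeholders (most ticketing tools accept a JSON POST, but each defines its own schema), and the request is built but not sent.

```python
import json
import urllib.request

DROP_THRESHOLD = 0.30  # alert when organic traffic falls by more than 30%


def traffic_drop(previous: int, current: int) -> float:
    """Fractional decline: 0.4 means traffic fell by 40%."""
    if previous <= 0:
        return 0.0
    return (previous - current) / previous


def build_ticket_request(webhook_url: str, page: str, previous: int, current: int):
    """Return a POST request that would open an editorial ticket, or None if no alert."""
    drop = traffic_drop(previous, current)
    if drop <= DROP_THRESHOLD:
        return None
    payload = {  # hypothetical schema; match your ticketing tool's webhook format
        "title": f"Refresh needed: {page}",
        "body": f"Organic sessions fell {drop:.0%} ({previous} -> {current}).",
        "labels": ["content-refresh", "priority"],
    }
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_ticket_request("https://example.com/hooks/editorial", "/blog/ai-insights", 5200, 3100)
if req is not None:
    print(req.get_method(), json.loads(req.data)["body"])
    # To actually send it: urllib.request.urlopen(req, timeout=10)
```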

— Techniques and Workflows to Turn Insights into Content

Signal-led content creation works best when insights are treated as actionable tasks instead of vague ideas. Start by converting signals — search intent changes, traffic dips, competitor wins, or trending questions — into prioritized work items, then move them through a short, repeatable pipeline that combines human judgment with AI accelerators. This reduces wasted drafts and keeps editorial focus on measurable outcomes: traffic, conversions, or engagement.

Workflow: From signal detection to editorial brief

Begin with prioritization using an impact × effort matrix: assign each signal an estimated traffic or revenue upside (impact) and a production cost score (effort). High-impact, low-effort items become immediate briefs; low-impact, high-effort items get deferred or bundled.
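The impact × effort matrix reduces to a few comparisons once both scores are normalized. A minimal sketch, assuming impact and effort are each scaled to 0–1 with a single 0.5 cut-off. The text only specifies the two extreme quadrants; the "schedule" and "quick-win backlog" labels for the middle two are assumptions.

```python
def triage(signals: dict[str, tuple[float, float]], cut: float = 0.5) -> dict[str, list[str]]:
    """Place each signal in an impact x effort quadrant.

    Values are (impact, effort), each normalized to 0-1; how you normalize
    (e.g. upside relative to the largest upside in the batch) is up to you.
    """
    quadrants = {"brief now": [], "schedule": [], "quick-win backlog": [], "defer or bundle": []}
    for name, (impact, effort) in signals.items():
        if impact >= cut and effort < cut:
            quadrants["brief now"].append(name)          # high impact, low effort
        elif impact >= cut:
            quadrants["schedule"].append(name)           # high impact, high effort
        elif effort < cut:
            quadrants["quick-win backlog"].append(name)  # low impact, low effort
        else:
            quadrants["defer or bundle"].append(name)    # low impact, high effort
    return quadrants


signals = {
    "refresh pricing FAQ": (0.8, 0.2),
    "new pillar guide": (0.9, 0.8),
    "fix meta on old post": (0.2, 0.1),
    "rebuild glossary": (0.1, 0.9),
}
for quadrant, items in triage(signals).items():
    print(f"{quadrant}: {items}")
```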

What an AI-augmented editorial brief should include:

- Title & working angle: one-line headline, plus one sentence on why it matters.

- Target intent & keywords: primary intent (informational/commercial) and 3–5 keyword clusters.

- Success metrics: target pageviews, CTR uplift, or lead targets.

- Content scaffold: H2/H3 outline, recommended word range, and required assets (data, screenshots).

- Voice & references: brand tone, audience persona, and 3 authoritative references.

- AI guardrails: banned claims, citation needs, and sections requiring human verification.

Assign review roles before drafting starts:

- Editor: fact-checks claims, data points, and sourcing.

- SME (subject-matter expert): approves technical details and sensitive statements.

- SEO analyst: confirms keyword mapping and internal linking plan.

A compact brief template:

```markdown
Title: [Working headline]
Angle: [Why it matters]
Primary keywords: [x, y, z]
Outline: H2/H3 skeleton
Metrics: [pageviews, leads]
Notes: [verify stats, do not claim X]
```

Takeaway: A disciplined brief converts noise into a clear execution plan and limits rework by defining success up front.
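The brief checklist can also be enforced in code before a brief enters the production queue. A minimal sketch, assuming briefs are stored as structured records; the `Brief` field names are hypothetical, while the rules (one intent type, 3–5 keyword clusters, at least 3 references, defined success metrics) come from the checklist above.

```python
from dataclasses import dataclass


@dataclass
class Brief:
    title: str
    angle: str
    intent: str                      # "informational" or "commercial"
    keyword_clusters: list[str]
    references: list[str]
    success_metrics: dict[str, int]  # e.g. {"pageviews": 3000}


def validate(brief: Brief) -> list[str]:
    """Return the problems that should block this brief from the production queue."""
    problems = []
    if brief.intent not in {"informational", "commercial"}:
        problems.append("intent must be informational or commercial")
    if not 3 <= len(brief.keyword_clusters) <= 5:
        problems.append("use 3-5 keyword clusters")
    if len(brief.references) < 3:
        problems.append("add at least 3 authoritative references")
    if not brief.success_metrics:
        problems.append("define at least one success metric")
    return problems


draft = Brief(
    title="AI content briefs editors can trust",
    angle="Cut research time without losing accuracy",
    intent="informational",
    keyword_clusters=["ai content brief", "content brief template"],
    references=["Source A", "Source B", "Source C"],
    success_metrics={"pageviews": 3000},
)
print(validate(draft))
```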

Workflow: Continuous optimization and A/B testing with AI

Run optimization as a steady loop rather than one-off audits. Design low-risk experiments focused on modular elements — headline, meta description, first 300 words, or a single CTA — so changes can be reversed quickly if they fail.

How to design low-risk tests:

- Start small: change one variable per test.

- Use holdouts: test 10–20% of traffic before full rollout.

- Automate measurement: wire experiments into analytics for real-time monitoring.
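Sending only 10–20% of traffic to a variant requires a stable way to split visitors. One common approach, sketched here as an assumption rather than a feature of any specific testing tool, hashes the experiment name together with a visitor ID so each visitor always lands in the same group. The 15% share and the ID format are illustrative.

```python
import hashlib


def in_test_group(user_id: str, experiment: str, test_share: float = 0.15) -> bool:
    """Deterministically assign roughly `test_share` of visitors to the variant.

    Hashing experiment + user_id keeps a visitor's assignment stable across
    visits and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < test_share


users = [f"user-{i}" for i in range(10_000)]
share = sum(in_test_group(u, "headline-test-01") for u in users) / len(users)
print(f"{share:.1%} of users see the variant")
```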

When to roll out changes broadly:

- Statistical confidence: the observed lift is statistically significant and holds for 7–14 days with consistent sample sizes.

- Business signal alignment: lift aligns with KPI thresholds (e.g., ≥10% CTR improvement).

- Quality checks pass: no negative impact on user behavior or brand voice.
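For CTR experiments, the statistical-confidence check is often done with a two-proportion z-test. The source does not name a test, so treat this as one reasonable choice: it computes the relative lift and a two-sided p-value using only the standard library, then applies the example ≥10% lift threshold with an assumed 0.05 significance level. It does not check the 7–14 day persistence criterion, which still needs a look at daily results.

```python
from math import erf, sqrt


def ctr_test(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> tuple[float, float]:
    """Two-proportion z-test: returns (relative lift of B over A, two-sided p-value)."""
    p_a, p_b = clicks_a / views_a, clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / std_err
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return (p_b - p_a) / p_a, 2 * (1 - normal_cdf)


def ready_to_roll_out(lift: float, p_value: float, min_lift: float = 0.10, alpha: float = 0.05) -> bool:
    """Both conditions must hold: the KPI threshold and statistical significance."""
    return lift >= min_lift and p_value < alpha


# Control: 600 clicks / 20,000 impressions (3.0%); variant: 700 / 20,000 (3.5%).
lift, p_value = ctr_test(600, 20_000, 700, 20_000)
print(f"lift {lift:.1%}, p = {p_value:.4f}, roll out: {ready_to_roll_out(lift, p_value)}")
```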

Scaleblogger.com’s AI content automation fits naturally in these steps for brief generation and scheduled optimization, but teams should always keep editorial verification layers intact. When implemented correctly, this approach reduces overhead by moving decision-making to the team level and freeing creators to focus on high-value storytelling.

— Measurement, Governance, and Ethical Considerations

Measurement and governance are not optional add-ons; they determine whether an AI-augmented content program actually drives business value while staying compliant and credible. Start by mapping a small set of outcome-focused KPIs to business goals, run regular review cadences that mix automated dashboards with human interpretation, and enforce governance checkpoints around accuracy, sourcing, and data privacy. Teams that treat measurement as a continuous feedback loop—rather than a quarterly audit—scale faster and make safer automation choices.

— Measurement framework and KPIs

A compact measurement framework ties content outputs to engagement and commercial outcomes. Use a layered KPI stack: baseline SEO health, content engagement, and direct business signals. Reviews should be frequent enough to catch regressions but not so frequent they create noise.

- Baseline SEO metrics: track visibility and click-through trends to detect algorithm shifts.

- Engagement signals: capture time on page, scroll depth, and return visits for quality signals.

- Business conversions: connect content to leads, MQLs, or revenue where possible.

| KPI | Definition | Review cadence | Benchmark / target |
|---|---|---|---|
| Organic sessions | Visits from unpaid search | Monthly | +5–15% YoY for active programs |
| SERP CTR | Click-through rate from search results | Monthly | 3–10% site average; top pages >15% |
| Average time on page | Mean time users spend on the page | Monthly | 1.5–3 minutes depending on content depth |
| Content-generated leads | Leads attributed to content (form fills, demos) | Quarterly | 5–20 leads/month for mid-size programs |
| Pages refreshed per month | Content updates performed per month | Monthly | 10–50 pages depending on site size |
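Benchmark ranges like these are easiest to review when the comparison is automated. A small sketch, assuming you supply this period's and last year's organic sessions, Search Console clicks and impressions, and average time on page in minutes; the ranges are copied from the table and should be replaced with your own targets.

```python
BENCHMARKS = {
    "organic_sessions_yoy": (0.05, 0.15),  # +5-15% YoY for active programs
    "serp_ctr": (0.03, 0.10),              # 3-10% site average
    "avg_time_on_page_min": (1.5, 3.0),    # minutes, depending on content depth
}


def status(value: float, low: float, high: float) -> str:
    if value < low:
        return "below"
    if value > high:
        return "above"
    return "within"


def kpi_report(sessions_now: int, sessions_last_year: int, clicks: int,
               impressions: int, avg_time_min: float) -> dict[str, tuple[float, str]]:
    """Compare actual KPI values against the benchmark ranges."""
    actuals = {
        "organic_sessions_yoy": (sessions_now - sessions_last_year) / sessions_last_year,
        "serp_ctr": clicks / impressions,
        "avg_time_on_page_min": avg_time_min,
    }
    return {name: (round(value, 4), status(value, *BENCHMARKS[name]))
            for name, value in actuals.items()}


report = kpi_report(46_000, 42_000, 2_400, 100_000, 1.2)
for name, (value, verdict) in report.items():
    print(f"{name}: {value} ({verdict})")
```

"Above" is not necessarily bad for growth or CTR; it simply marks values outside the expected range for a closer look.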

— Governance, accuracy checks, and privacy

Governance must combine automated checks with human judgment. Implement a `human-in-the-loop` step for any content affecting legal, medical, or financial decisions, and require clear citation rules for facts and quotes. Use data-minimization principles when handling analytics or personalization.

- Human review cadence: editorial sign-off for published pieces, expert review for high-risk topics.

- Citation policy: require inline citations for data points and record each source's type (primary/secondary).

- Privacy checklist: minimize PII capture, anonymize analytics, document retention periods.
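Two common data-minimization techniques, sketched with the Python standard library: replacing user IDs with a keyed hash (HMAC) and truncating IP addresses before events reach your analytics store. The field names and the hard-coded key are placeholders; in practice the key belongs in a secrets manager. Keyed hashing is pseudonymization, not full anonymization, so the output may still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac
import ipaddress

# Placeholder key: load the real one from a secrets manager and rotate it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id: str) -> str:
    """Keyed hash, so raw user IDs never reach the analytics store."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def truncate_ip(ip: str) -> str:
    """Zero the host bits: keep a /24 for IPv4 and a /48 for IPv6."""
    prefix = 24 if ipaddress.ip_address(ip).version == 4 else 48
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False).network_address)


def minimize(event: dict) -> dict:
    """Copy only the fields analysis needs; PII such as email is dropped."""
    return {
        "user": pseudonymize(event["user_id"]),
        "ip": truncate_ip(event["ip"]),
        "page": event["page"],
        "ts": event["ts"],
    }


raw = {"user_id": "u-1234", "email": "reader@example.com", "ip": "203.0.113.57",
       "page": "/blog/ai-insights", "ts": "2024-05-01T10:00:00Z"}
print(minimize(raw))
```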

Tools and services that automate parts of this pipeline—like AI-assisted drafting with mandatory editor approval—reduce repetitive work while keeping accountability. Platforms such as Scaleblogger.com fit naturally where teams want an AI-powered content pipeline combined with governance hooks and performance benchmarking. Understanding these principles helps teams move faster without sacrificing quality.


— Next Steps: Implementing an AI-Driven Content Program

Start by defining a narrow pilot with measurable outcomes and the simplest tech that proves value quickly. Successful pilots focus on one content type (e.g., SEO blog posts or product guides), a clear performance metric (organic traffic, conversion lift, time-to-publish), and a repeatable process for drafting, editing, and publishing using AI-assisted tools. This approach limits risk, surfaces integration issues early, and produces the case studies leadership needs to fund wider rollout.

How to structure the pilot and early scaling decisions:

- Scope: pick a single channel and 10–20 target topics to test content quality and workflow.

- Success metrics: choose 2–3 KPIs such as organic sessions, average time-on-page, and content ROI.

- Minimum viable stack: lightweight CMS integrations, an LLM workspace, SEO research tool, and simple analytics—avoid heavy engineering work up front.

- Governance: assign a pilot owner and a reviewer who understands brand voice and compliance.

- Feedback loop: capture editor prompts and model outputs to refine `prompt` templates and publishing rules.
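A versioned prompt library plus a log of prompts, outputs, and editor verdicts is enough to start the feedback loop. A minimal sketch using `string.Template`; the template name, its placeholders, the verdict labels, and the JSON Lines log file are all assumptions.

```python
import json
from datetime import datetime, timezone
from string import Template

# Versioned prompt templates; bump the suffix when the wording changes.
PROMPTS = {
    "topic_ideas_v1": Template(
        "Write $n blog topics for $audience targeting $intent intent. "
        "Exclude topics we already cover: $exclude."
    ),
}


def render(name: str, **params) -> str:
    """Fill a template; substitute() raises KeyError if a placeholder is missing."""
    return PROMPTS[name].substitute(**params)


def log_run(path: str, template: str, prompt: str, output: str, verdict: str) -> None:
    """Append one prompt/output pair and the editor's verdict as a JSON line."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "template": template,
        "prompt": prompt,
        "output": output,
        "editor_verdict": verdict,  # e.g. "accepted", "edited", "rejected"
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


prompt = render("topic_ideas_v1", n=10, audience="B2B SaaS marketers",
                intent="informational", exclude="AI content briefs")
print(prompt)
```

Comparing how often each template version earns an "accepted" verdict then becomes a simple query over the log file.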

— 30/60/90 day pilot roadmap

| Timeframe | Objective | Key deliverables | Owner |
|---|---|---|---|
| Weeks 1–2 | Setup and research | Keyword list (20 topics), `prompt` templates, tooling checklist | Content Lead |
| Weeks 3–4 | First draft loop | 5 AI-assisted drafts, editorial guidelines, publishing workflow doc | Editor |
| Weeks 5–8 | Optimize and publish | 10 published posts, on-page SEO mapped to GA4 goals, CRO test ready | SEO Specialist |
| Weeks 9–12 | Measure and iterate | Performance dashboard, content scoring report, updated prompt library | Analytics Lead |
| Post-pilot evaluation | Decide scale path | ROI report, scale recommendations, staffing needs | Pilot Sponsor |

— Resources, training, and scaling tips

Start training on concrete skills and clear operational decisions to avoid common traps.

- Essential training topics

- Centralize vs decentralize

- Budget and hiring signals

Practical templates and a `prompt` library accelerate adoption; consider partnering with a vendor or service like Scaleblogger.com to jumpstart pipeline automation and performance benchmarking.

Conclusion

You now have a clear path from metrics to editorial decisions: use content analytics to spot opportunity gaps, apply human judgment to prioritize high-impact topics, and run rapid experiments to validate what resonates. Practical examples earlier showed how teams that layered search-intent signals with performance metrics accelerated traffic growth, and how editorial A/B tests clarified which headlines and formats scaled. Keep these three actions front and center:

- Combine quantitative signals with qualitative checks to avoid chasing noise.
- Prioritize topic clusters that map to buyer intent for sustainable organic growth.
- Pilot small experiments, then scale winners to preserve velocity without sacrificing quality.

If accuracy or workflow disruption feels like a blocker, start with a narrow pilot that automates data collection but keeps editorial control — that addresses both reliability and change management without heavy upfront cost. For teams ready to streamline execution and scale faster, platforms that automate content ops and measurement can remove manual friction; for a practical next step, consider testing that approach in a focused program. When ready, take action: Start an AI-driven content pilot with Scaleblogger. This gives a structured way to validate hypotheses, align teams, and turn insights into repeatable content wins.