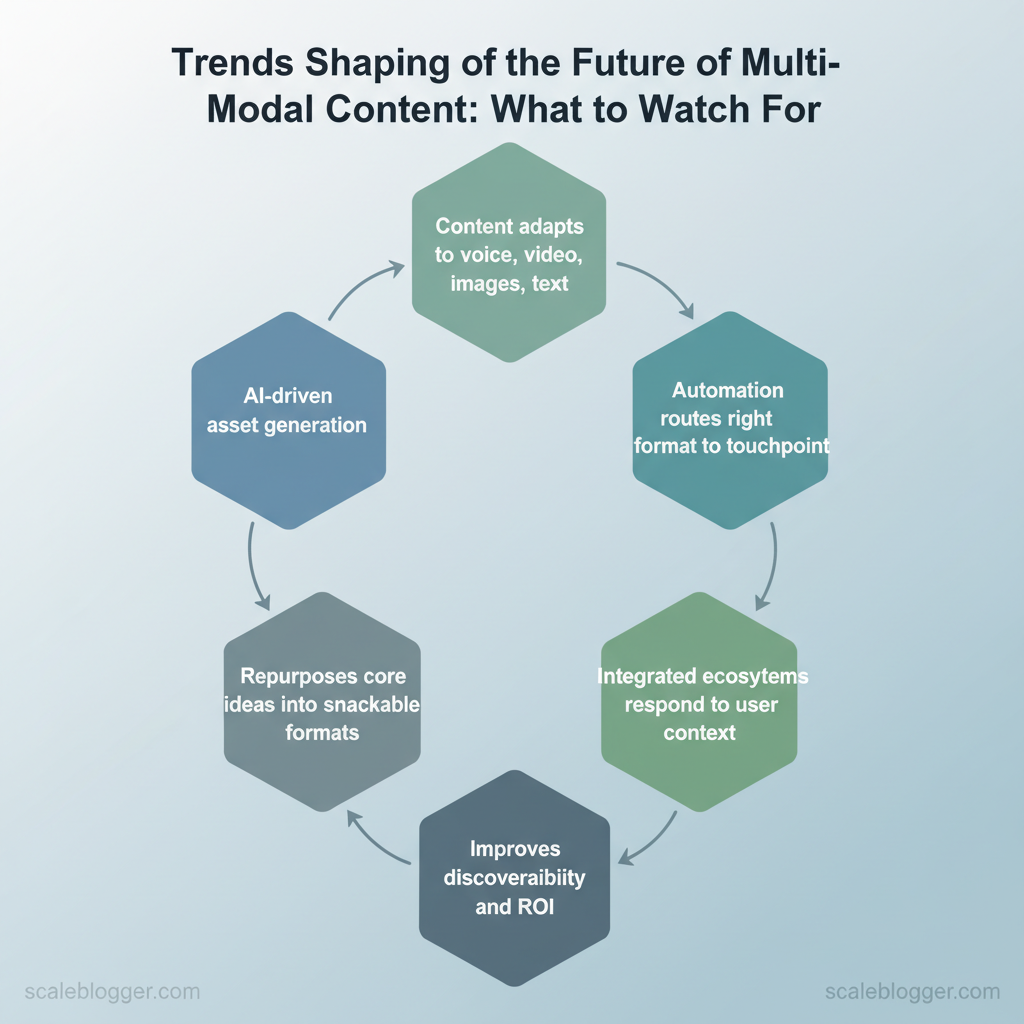

The move toward multi-modal content rewires both workflows and measurement. Modern production pipelines pair AI-driven asset generation with automation that routes the right format to the right touchpoint, reducing time-to-publish and improving relevance. This matters for discoverability and ROI because search and social platforms increasingly reward rich, interactive signals over plain text alone.

Picture a brand that uses short-form video, interactive transcripts, and adaptive images to lift conversion across channels, while its content engine automatically repurposes core ideas into snackable formats. Applied this way, the emerging content landscape becomes a source of repeatable advantage rather than a series of one-off experiments.

- How automation integrates with creative workflows to speed production

- Ways emerging content formats improve discoverability and engagement

- Metrics that reveal cross-format performance, not just vanity counts

- Practical steps to convert text-first assets into multi-modal experiences

Trend 1 — AI-Generated Multi-Modal Creative

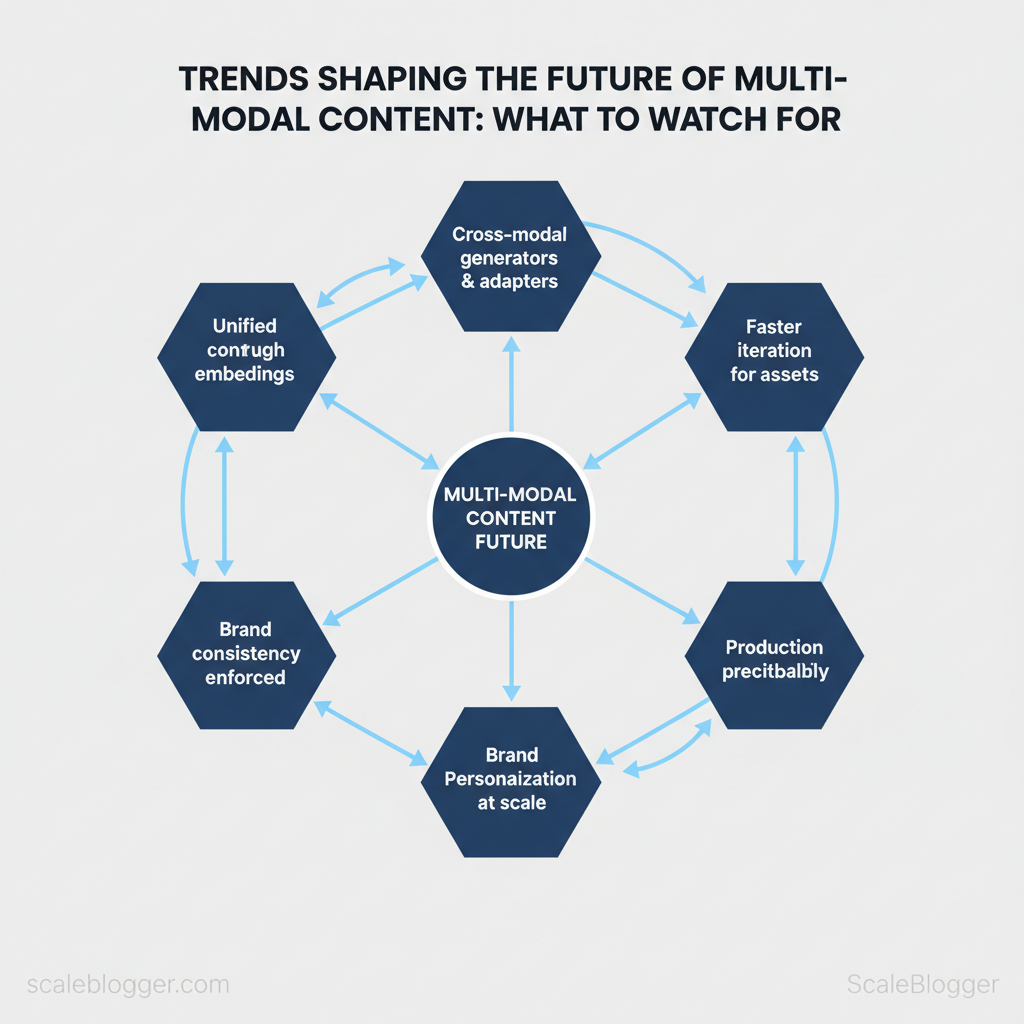

Generative models now bridge formats, turning a single idea into coordinated text, image, audio, and video assets with far less manual effort. Rather than treating visuals, audio, and copy as separate deliverables, modern pipelines use cross-modal transformations and unified `embeddings` so context and intent persist across outputs. This lets content teams scale campaigns, A/B test formats quickly, and keep brand voice consistent while producing more personalized creative.

How modalities get tied together

Unified context through embeddings

Multimodal embedding spaces map text, images, and sometimes audio into a shared vector space, so semantically related items land close together regardless of format. That means a headline, an accompanying hero image, and the voiceover for a short video can all derive from a single semantic representation.

Cross-modal generators and adapters

“Multimodal models let creators repurpose a single brief across formats, cutting production time and inconsistencies.”

Practical benefits for content teams

- Faster iteration: create dozens of asset variants from one prompt.

- Brand consistency: shared `embeddings` help keep tone and visual cues aligned across assets.

- Personalization at scale: programmatic swaps for names, locations, or imagery.
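The "dozens of variants from one prompt" and "programmatic swaps" ideas can be sketched as a single templated brief expanded over swap slots. This is a minimal Python illustration. The brief text, slot names, and slot values are hypothetical, and each rendered prompt would still need to go to whichever generator you use.

```python
from itertools import product
from string import Template

# Hypothetical brief with swap slots for personalization.
BRIEF = Template("Hero image for $product, shown in $location, styled for a $audience audience")

# Hypothetical slot values; in practice these would come from audience data.
SLOTS = {
    "product": ["trail running shoe"],
    "location": ["Seattle", "Denver", "Austin"],
    "audience": ["beginner", "competitive"],
}

def expand_brief(template: Template, slots: dict) -> list:
    """Return one variant per combination of slot values, with its rendered prompt."""
    keys = list(slots)
    variants = []
    for values in product(*(slots[k] for k in keys)):
        params = dict(zip(keys, values))
        variants.append({**params, "prompt": template.substitute(params)})
    return variants

if __name__ == "__main__":
    for v in expand_brief(BRIEF, SLOTS):
        print(v["prompt"])
```

Keeping the slot values alongside each prompt makes it straightforward to tag generated assets for later A/B analysis.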

| Approach / Tool | Supported Modality Pairs | Strengths | Typical Use Cases |
|---|---|---|---|
| Stable Diffusion | text→image, image→image | Open models, fine-tuning | Concept art, social visuals |
| DALL·E (OpenAI) | text→image | High-quality compositing, coherent scenes | Marketing hero images |
| Midjourney | text→image | Artistic stylization, fast iterations | Brand moodboards |
| GPT-4 with Vision | image→text; text→image only by drafting prompts for a separate image model | Strong context, reasoning across modalities | Captioning, brief-to-asset |
| CLIP / Embedding platforms | image↔text (similarity) | Robust semantic matching | Asset search, tagging |
| ElevenLabs | text→audio (TTS) | Natural prosody, voice cloning | Podcasts, ads |
| Descript / Overdub | audio→audio, text→audio | Editing-first workflow, multitrack | Voice edits, tutorials |
| Runway | text→video, image→video | Rapid prototyping, toolchain integrations | Short-form video ads |
| Synthesia | text→video (avatar) | Script-to-video, multilingual | Training videos, spokespeople |
| Custom multimodal pipelines | any via orchestrators | Tailored controls, data privacy | Enterprise-grade campaigns |
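The asset-search use case in the CLIP / embedding-platforms row can be sketched with plain cosine similarity. The vectors below are tiny hand-made stand-ins, assumed for illustration. In practice you would get them from a multimodal encoder such as CLIP, whose real embeddings have hundreds of dimensions.

```python
import math

def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_assets(query_vec: list, assets: dict, top_k: int = 3) -> list:
    """Return (asset_id, score) pairs sorted by similarity to the query, best first."""
    scored = [(asset_id, cosine(query_vec, vec)) for asset_id, vec in assets.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical 3-dimensional embeddings; real encoders produce far larger vectors.
IMAGE_LIBRARY = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "office_team.jpg": [0.1, 0.9, 0.2],
    "mountain_trail.jpg": [0.7, 0.0, 0.6],
}
headline_vec = [0.6, 0.05, 0.5]  # hypothetical embedding of an outdoor-adventure headline

if __name__ == "__main__":
    for asset_id, score in rank_assets(headline_vec, IMAGE_LIBRARY):
        print(f"{asset_id}: {score:.3f}")
```

For large libraries, a vector index usually replaces this linear scan. The ranking logic stays the same.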

Trend 2 — Personalization at Modality-Level

Personalization is moving beyond audience segments into the modality mix itself: different users prefer different combinations of text, audio, images, and video depending on context, device, and intent. Modality-level personalization means mapping behavioral and contextual signals to content formats (for example, short audio summaries for commuters, long-form interactive guides for desktop researchers) and continually testing which mixes drive engagement and conversions. This approach reduces wasted content effort and increases relevance by delivering the right format at the right moment.

Modality profiling and audience signals

- Session length: short sessions → concise formats (summaries, bullets)

- Device type: mobile → vertical video, snackable audio; desktop → interactive longreads, dashboards

- Time of day: commute hours → audio-first; late-night browsing → long-form reading

- Accessibility needs: screen readers → semantic HTML, transcripts, captions

- Behavioral patterns: repeat readers → deeper, progressive disclosure content; first-time visitors → clear, fast paths

Industry analyses suggest that users who receive their preferred modalities spend more time with content and show higher conversion intent, especially when accessibility and context are respected.

Practical profiling uses analytics platforms and simple heuristics (e.g., `avg_session_duration < 90s` → prefer `audio-summary` or `infographic`). Privacy and consent are non-negotiable: collect only necessary signals, honor do-not-track, and provide clear opt-outs.
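The `avg_session_duration < 90s` heuristic, combined with the consent rule, might look like this as a first-pass rules function. The field names, modality labels, and rule order are assumptions for illustration. A production system might load rules from configuration and log which rule fired.

```python
from dataclasses import dataclass

@dataclass
class SessionSignals:
    """Hypothetical signal bundle; field names are illustrative, not an analytics API."""
    consented: bool                   # user opted in to personalization
    do_not_track: bool                # browser or account do-not-track preference
    avg_session_duration_s: float
    device: str                       # "mobile" or "desktop"
    accessible_formats: bool = False  # explicit user preference, not inferred

DEFAULT_MIX = ["article"]

def preferred_modalities(s: SessionSignals) -> list:
    """Map a few signals to a modality mix with simple threshold heuristics."""
    if not s.consented or s.do_not_track:
        # Respect privacy choices: serve the non-personalized default.
        return list(DEFAULT_MIX)
    mix = list(DEFAULT_MIX)
    if s.accessible_formats:
        mix += ["transcript", "captions"]
    if s.avg_session_duration_s < 90:   # threshold from the heuristic above
        mix += ["audio-summary", "infographic"]
    elif s.device == "desktop":
        mix.append("interactive-longread")
    if s.device == "mobile":
        mix.append("vertical-video")
    return mix
```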

Implementing modality-level tests

```yaml
# Example test config
test_name: audio_vs_image_signup
cohorts:
  - mobile_commuters
variants:
  - article + audio_90s
  - article + hero_image
primary_kpi: newsletter_signup_rate
duration: 14_days
```

Practical tips: prioritize low-friction modalities first (transcripts, short audio), measure both immediate and downstream conversion, and respect privacy signals when personalizing.
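To run a test like the config above, each user in the `mobile_commuters` cohort needs a stable variant. One common approach, sketched here as an assumption rather than a prescribed method, hashes the user ID together with the test name. This keeps assignment random-looking but repeatable across sessions. The user IDs are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, test_name: str, variants: list) -> str:
    """Deterministically bucket a user into one variant of a named test."""
    key = f"{test_name}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]

VARIANTS = ["article + audio_90s", "article + hero_image"]

if __name__ == "__main__":
    counts = {v: 0 for v in VARIANTS}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "audio_vs_image_signup", VARIANTS)] += 1
    print(counts)  # counts per variant; should be close to 50/50 for large samples
```

Including the test name in the hash means the same user can land in different arms of different tests, which avoids correlated assignments across experiments.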

| Audience Signal | Inferred Preference | Recommended Modalities | Measurement KPI |
|---|---|---|---|
| Mobile, short sessions | Quick answers, skim-friendly | Snackable text, vertical video, 60–90s audio | CTR, bounce rate, micro-conversions |
| Desktop, long sessions | Deep research, multi-step tasks | Interactive longreads, data visualizations, downloadable PDFs | Time on page, task completion, lead form fills |
| Commuting behavior | Hands-free consumption | Podcast episodes, audio summaries, chapterized content | Audio completion rate, subscribe rate |
| Accessibility needs | Non-visual access, clear structure | Semantic HTML, captions, full transcripts, alt text | Screen reader usage, accessibility compliance checks |
| Repeat readers/subscribers | Deeper content, personalization | Progressive series, personalized recs, gated deep dives | Repeat visit rate, subscription upgrades |

When implemented well, modality-level personalization shifts work from one-size-fits-all publishing to format-first experiences that respect context and accessibility. Creators can focus on substance while automation handles format delivery. For teams ready to operationalize this, AI content automation such as Scaleblogger’s AI-powered content pipeline can speed up mapping signals to format rules and scaling winning mixes across the blog estate.

Trend 3 — Immersive and Spatial Formats (AR/VR/3D)

Immersive formats are moving from novelty to practical business channels: augmented reality and 3D viewers let customers try and customize products before buying, while VR and mixed reality create controlled environments for training, storytelling, and experiential marketing. These formats change the content relationship from passive consumption to active interaction — content becomes a product utility as much as messaging.

Business use cases and how they map to outcomes

- Product try-ons & configurators: Virtual try-ons, furniture placement, and color/configuration selectors can raise conversion intent and reduce returns.

- Interactive storytelling: Branded micro-worlds and location-based AR campaigns can boost dwell time and social sharing.

- Training & simulations: VR flight decks, industrial maintenance sims, and safety drills can lower training costs and accelerate skill transfer.

- Sales enablement: 3D demos and AR overlays help reps explain complex products during remote pitches.

- Event & retail experiences: Mixed reality installations create memorable, shareable moments that can drive earned media.

Practical tooling notes

- Pilot tools: WebAR platforms for no-app experiences; 3D marketplaces for reusable assets.

- Prototype tools: Unity/Unreal for interactivity; glTF with `draco` compression for performance.

- Scale considerations: CDN hosting, device performance testing, custom analytics for interaction metrics.
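For device performance testing, one lightweight pre-publish check is to confirm that each binary glTF (`.glb`) asset stays under a size budget and declares Draco mesh compression (the `KHR_draco_mesh_compression` extension). The sketch below parses the GLB header and first JSON chunk according to the glTF 2.0 binary layout. The 5 MB default budget is a hypothetical number, not a recommendation from this article.

```python
import json
import struct

GLB_MAGIC = 0x46546C67        # ASCII "glTF" read as a little-endian uint32
JSON_CHUNK_TYPE = 0x4E4F534A  # ASCII "JSON" read as a little-endian uint32
DRACO_EXT = "KHR_draco_mesh_compression"

def inspect_glb(data: bytes, budget_bytes: int = 5 * 1024 * 1024) -> dict:
    """Parse a GLB header and JSON chunk; report size budget and Draco usage."""
    if len(data) < 20:
        raise ValueError("too short for a GLB header")
    # 12-byte header: magic, container version, total length.
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    # First chunk header: chunk length, chunk type (must be JSON).
    chunk_len, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != JSON_CHUNK_TYPE:
        raise ValueError("first chunk is not JSON")
    gltf = json.loads(data[20:20 + chunk_len])  # trailing space padding is fine for json
    return {
        "version": version,
        "size_bytes": length,
        "within_budget": length <= budget_bytes,
        "draco_compressed": DRACO_EXT in gltf.get("extensionsUsed", []),
    }
```

Usage would look like `inspect_glb(open("model.glb", "rb").read())`, where `model.glb` is a hypothetical file. The check only reads metadata, so it is cheap enough to run on every asset in a CI step.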

| Format | Best Use Cases | Technical Complexity | Typical Time-to-Launch |
|---|---|---|---|
| Mobile AR (WebAR) | Quick try-ons, location AR | Low; `WebXR` friendly | 2–8 weeks |
| App-based AR | High-fidelity product demos | Medium; SDK integration | 2–4 months |
| VR experiences | Training, deep storytelling | High; hardware & UX design | 3–6 months |
| 3D product viewers | E-commerce product pages | Low–Medium; optimization | 2–6 weeks |
| Mixed reality installations | Events, retail flagship | Very high; custom hardware | 3–9 months |

Trend 4 — Contextual Distribution and Device Fragmentation

Content no longer lives in a single place; it must be engineered to perform across contexts and devices. Optimize for where and how audiences consume: short vertical clips for snackable discovery, long-form episodes for deep engagement, voice responses for transactional intent, and in-app microcontent for active users. Matching length, format, metadata, and progressive enhancement strategies to each context reduces friction and preserves the same underlying message across channels.

Start with content design that accepts fragmentation as the norm. Build a canonical asset (long-form article, episode, or report) and produce derived variants tuned for each distribution context. Technical enablers include `content_id` conventions, consistent metadata schemas, and progressive enhancement so experiences degrade gracefully on older devices or lower-bandwidth networks.
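A `content_id` convention can be as simple as deriving every variant's ID from the canonical asset's ID plus its distribution context. Analytics can then roll variants back up to one source. The `--` separator, ID format, and field names below are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Variant:
    content_id: str      # e.g. "guide-017--short-social"
    canonical_id: str
    context: str
    metadata: dict = field(default_factory=dict)

def derive_variants(canonical_id: str, base_metadata: dict, contexts: list) -> list:
    """Create one variant per distribution context; each inherits the canonical metadata."""
    return [
        Variant(
            content_id=f"{canonical_id}--{ctx}",
            canonical_id=canonical_id,
            context=ctx,
            metadata={**base_metadata, "context": ctx},
        )
        for ctx in contexts
    ]

def canonical_of(content_id: str) -> str:
    """Recover the canonical ID from a variant ID (assumes canonical IDs never contain "--")."""
    return content_id.split("--", 1)[0]
```

Because the canonical ID is recoverable from any variant ID, reporting can aggregate performance per idea without a separate lookup table.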

- Short-form social: prioritize vertical, under-60s clips with on-screen captions and a clear hook.

- Long-form platforms: chapters, timestamps, and structured show notes boost discoverability and session time.

- Voice assistants: surface concise answers with schema markup and conversational snippets.

- Email/newsletters: modular blocks and linked microsummaries increase click-throughs.

- In-app content: lean on personalization signals and lightweight HTML/CSS for fast rendering.

| Distribution Context | Recommended Length/Format | Primary Modalities | Indexing / Discovery Tip |
|---|---|---|---|
| Short-form social (TikTok/Reels) | 15–60s vertical clips, 1–3 hooks | Video, captions, stickers | Use on-screen captions, trending sounds, and short post copy |
| Long-form platforms (YouTube/Podcast) | 10–60+ minutes, chapters | Video, audio, transcripts | Add timestamps, full transcripts, structured show notes |
| Voice assistants (Alexa/Google) | 1–30s response snippets | Spoken answer, SSML | Provide concise answers + `FAQ` schema, SSML for prosody |
| Email/newsletters | 50–250 words modular blocks | Text, images, links | Use preheader text, content IDs, linked microsummaries |
| In-app content | 5–90s micro-interactions | HTML, AMP-like pages | Use lightweight markup, local caching, personalization tags |
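For the voice-assistant row, "FAQ schema" refers to schema.org `FAQPage` markup, usually embedded in a page as JSON-LD. A minimal generator is sketched below. The question and answer text are placeholders, and whether a given assistant or search engine uses this markup is decided by that platform.

```python
import json

def faq_jsonld(pairs: list) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    doc = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(doc, indent=2)

if __name__ == "__main__":
    snippet = faq_jsonld([
        ("What is multi-modal content?",
         "Content delivered as a coordinated mix of text, audio, images, and video."),
    ])
    # Embed the JSON-LD in the page head or body.
    print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```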

Operationalizing this—consistent IDs, UTMs, and a centralized analytics layer—lets teams attribute multi-touch journeys and optimize where each variant produces the best return. When implemented correctly, this approach reduces wasted effort and makes decisions about format and channel measurable. This is why modern content strategies invest in automation and standardized metadata: they let creators focus on narrative quality while systems handle distribution complexity.
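Consistent UTMs are easiest to keep consistent when links are generated rather than typed. The sketch below appends the standard `utm_source`, `utm_medium`, and `utm_campaign` campaign parameters with Python's `urllib.parse`, preserving any existing query string. Putting the variant's content ID in `utm_content` is an assumed convention, and the campaign values in the example are hypothetical.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def add_utm(url: str, source: str, medium: str, campaign: str,
            content: Optional[str] = None) -> str:
    """Append UTM parameters to a URL, keeping any query parameters already present."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    if content:
        query["utm_content"] = content  # e.g. the variant's content_id
    return urlunsplit(parts._replace(query=urlencode(query)))

if __name__ == "__main__":
    print(add_utm("https://example.com/guide?ref=home", "newsletter", "email",
                  "multimodal_launch", "guide-017--newsletter"))
```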

Trend 5 — Accessibility and Inclusive Design as Competitive Advantage

Accessibility and inclusive design are no longer optional extras: they expand reach, strengthen SEO signals, and reduce legal and reputational risk. Making content usable for people with disabilities (readable text, meaningful alt text, accurate captions, and navigable immersive experiences) also improves machine readability, since search engines can index transcripts, captions, and semantic headings. Brands that prioritize accessibility reach underserved audiences, avoid costly remediation and legal exposure, and build long-term trust.

- Improved discoverability: Transcripts and captions create indexable text that drives long-tail search traffic.

- Better user engagement: Clear headings and readable copy reduce bounce rates and increase time-on-page.

- Risk mitigation: Meeting accessibility standards lowers the chance of compliance penalties and class-action suits.

- Brand differentiation: Inclusive experiences signal reliability and broaden market reach.

- Operational efficiency: Accessibility-first content is easier to localize, repurpose, and automate.

Modality-specific accessibility checklist

| Modality | Accessibility Action | Implementation Time (estimate) | Priority (High/Medium/Low) |
|---|---|---|---|
| Text / Articles | Use semantic headings, readable fonts, at least 4.5:1 text contrast (WCAG AA), `aria` landmarks | 1–3 hours per article | High |
| Images / Graphics | Add descriptive `alt` text, provide detailed captions, include data tables as text | 15–30 minutes per image | High |
| Video | Add captions, provide verbatim transcripts, include audio descriptions for visuals | 1–4 hours per video | High |
| Audio / Podcasts | Publish episode transcripts, chapter markers, show notes with links | 30–90 minutes per episode | Medium |
| AR/VR experiences | Ensure keyboard/navigation alternatives, adjustable speed and text size, spatial audio cues | 1–2 weeks per experience | Medium |
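The first step in the image row, descriptive `alt` text, is easy to audit automatically. This sketch uses Python's standard `html.parser` to list images that have no `alt` attribute at all. It treats `alt=""` as an intentional marker for decorative images. It cannot judge whether existing alt text is actually descriptive, so that still needs a human.

```python
from html.parser import HTMLParser

class AltTextAudit(HTMLParser):
    """Collect <img> tags with no alt attribute (empty alt is allowed for decorative images)."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            if "alt" not in attr_map:
                self.missing.append(attr_map.get("src") or "(no src)")

def images_missing_alt(html: str) -> list:
    """Return the src of every <img> lacking an alt attribute, in document order."""
    audit = AltTextAudit()
    audit.feed(html)
    audit.close()
    return audit.missing
```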

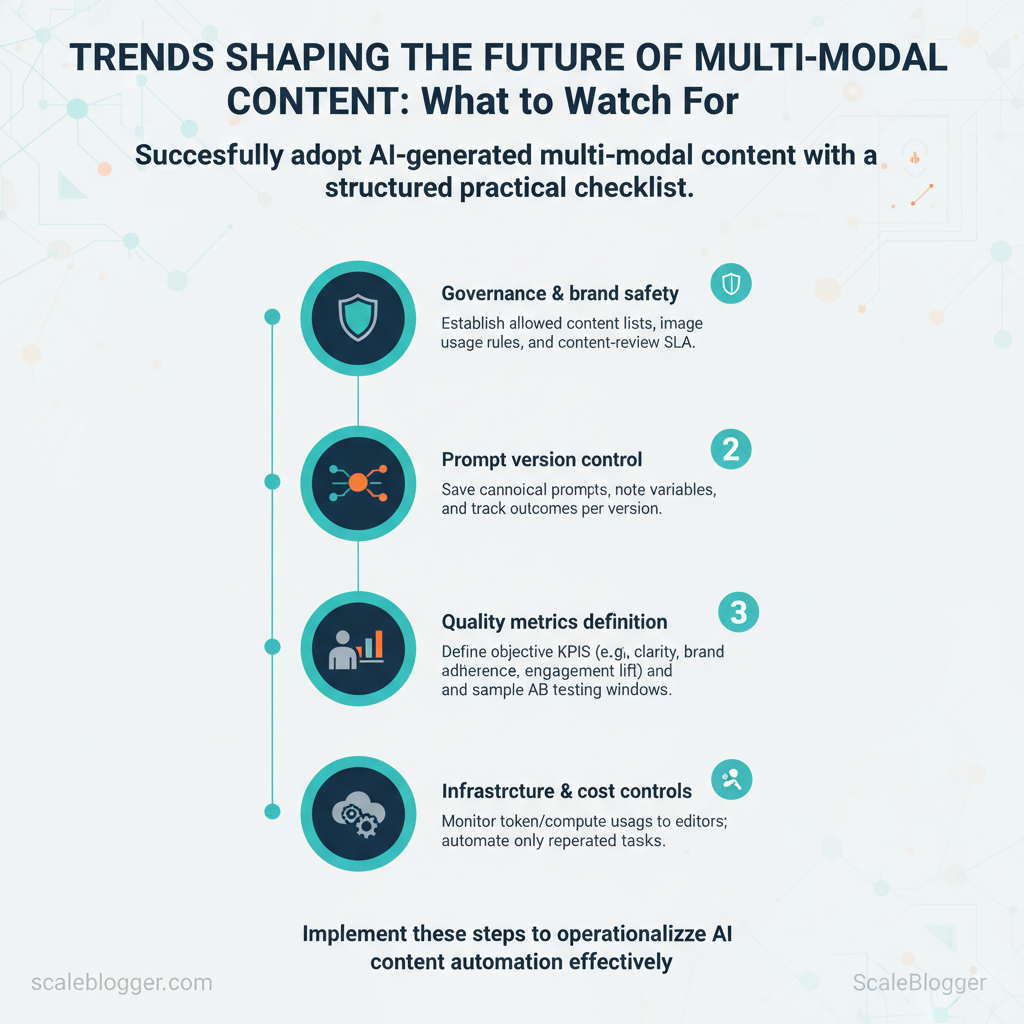

Integration tip: automate repetitive steps such as caption generation, alt-text suggestions, and contrast checking so creators can focus on quality. AI-powered tools can handle the mundane parts of accessibility while teams refine voice and context, which speeds production without sacrificing usability or SEO gains.
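Contrast checking is the most mechanical of these steps. Below is a minimal sketch of the WCAG 2.x contrast-ratio formula: it computes relative luminance from sRGB, takes (L1 + 0.05) / (L2 + 0.05) with L1 as the lighter color, and applies the AA thresholds of 4.5:1 for normal text and 3:1 for large text. It assumes six-digit hex colors and ignores transparency.

```python
def _channel(c8: int) -> float:
    """Convert one 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)

def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """True if the pair meets WCAG AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, mid-gray `#767676` on white just clears 4.5:1, while the slightly lighter `#777777` falls short for body text.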


Trend 6 — Measurement and Monetization of Multi-Modal Experiences

Measuring multi-modal experiences requires treating each modality as both a cost center and a revenue vector—track production and distribution costs, then connect engagement-weighted outcomes to revenue or lifetime value (LTV) uplift. Start by quantifying `engaged minutes`, leads attributed to each format, and incremental conversion rate change; then attribute a dollar value to those increases. That lets teams compare the marginal return of a podcast episode versus a short-form video or an interactive infographic and choose where to scale.

Why this matters: brands that map engagement to revenue can prioritize modalities that deliver higher LTV per dollar spent instead of guessing based on vanity metrics.

Core framework: measuring multi-modal ROI

- Define cost buckets: production, post-production, distribution, and platform fees.

- Measure engagement-weighted outcomes: engaged minutes, repeat visits, shares, lead quality.

- Calculate incremental conversion uplift: A/B test variants with and without the modality to isolate effect.

- Translate to revenue/LTV: assign `average order value (AOV)` and `LTV` to incremental conversions.

- Track net ROI and payback period: include depreciation of content (evergreen value).
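The uplift and revenue steps above can be sketched as a small calculation over A/B test counts. The function name and the sample counts are hypothetical. `value_per_conversion` stands in for AOV or LTV, depending on the business model. The sketch does not test statistical significance, which you would want before trusting the uplift.

```python
def incremental_revenue(control_visitors: int, control_conversions: int,
                        test_visitors: int, test_conversions: int,
                        value_per_conversion: float) -> dict:
    """Estimate revenue attributable to a modality from an A/B test with and without it."""
    cr_control = control_conversions / control_visitors
    cr_test = test_conversions / test_visitors
    abs_uplift = cr_test - cr_control                  # absolute change in conversion rate
    incremental_conversions = abs_uplift * test_visitors
    return {
        "cr_control": cr_control,
        "cr_test": cr_test,
        "relative_lift": abs_uplift / cr_control if cr_control else float("inf"),
        "incremental_conversions": incremental_conversions,
        "incremental_revenue": incremental_conversions * value_per_conversion,
    }

if __name__ == "__main__":
    # Hypothetical test: 2.0% vs 2.6% conversion, $50 value per conversion.
    print(incremental_revenue(10_000, 200, 10_000, 260, 50.0))
```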

Industry analyses suggest that engagement quality matters more than raw reach for monetization: deeply engaged audiences tend to convert at materially higher rates than passive impressions.

Worked ROI example: illustrative sample numbers for production, distribution, engagement, and revenue uplift

| Line Item | Assumed Value | Notes | Impact on ROI |
|---|---|---|---|
| Content production (multi-modal) | $12,000 | 4 videos + 2 podcasts + interactive asset | Largest upfront cost; enables repurposing |
| Distribution & hosting | $1,500 | CDN, hosting, platform promotion | Ongoing monthly + paid placements |
| Engagement uplift (value) | $18,000 | +40% engaged minutes → higher ad / sponsorship CPM | Converted to ad/sponsorship revenue |
| Conversion uplift (value) | $6,000 | +1.2% conversions from gated leads | Based on AOV and lead-to-sale rates |
| Net ROI | $10,500 (78%) | (Revenue uplift $24,000 − Costs $13,500) / Costs | Positive payback, justifies scale |
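The table's arithmetic can be reproduced in a few lines, which makes it easy to swap in your own figures. The function name is illustrative. The numbers are the sample values from the worked example.

```python
def net_roi(revenue_uplift: float, costs: float) -> tuple:
    """Return (net return, ROI as a fraction of costs)."""
    net = revenue_uplift - costs
    return net, net / costs

# Sample figures from the worked example table.
costs = 12_000 + 1_500            # production + distribution & hosting
revenue_uplift = 18_000 + 6_000   # engagement value + conversion value
net, roi = net_roi(revenue_uplift, costs)
print(f"Net: ${net:,.0f}  ROI: {roi:.0%}")  # Net: $10,500  ROI: 78%
```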

Conclusion

Turning a set of isolated assets into a living, context-aware content system changes how audiences discover and engage with your work. Integrating structured content, automated distribution, and multimodal adaptation reduces production friction, improves relevance, and shortens time-to-value. Teams that standardize their content pipeline tend to see faster iteration loops and clearer performance signals. Editorial groups that layer AI-driven tagging onto legacy archives can unlock renewed traffic from evergreen pieces. Focus on three practical moves: map the content lifecycle, automate repetitive distribution tasks, and measure outcomes by audience journeys rather than page counts.

For immediate next steps, audit one high-value workflow, replace its manual touchpoints with automation, and run a two-week pilot to compare engagement and efficiency. For teams looking to scale that pilot into an operational system, platforms that unify AI, orchestration, and analytics can cut implementation time. To explore a production-ready approach, see Scaleblogger’s AI-driven content strategy and automation resources. They offer examples to help translate the strategies above into concrete processes, so teams can move from experimentation to predictable content ROI.