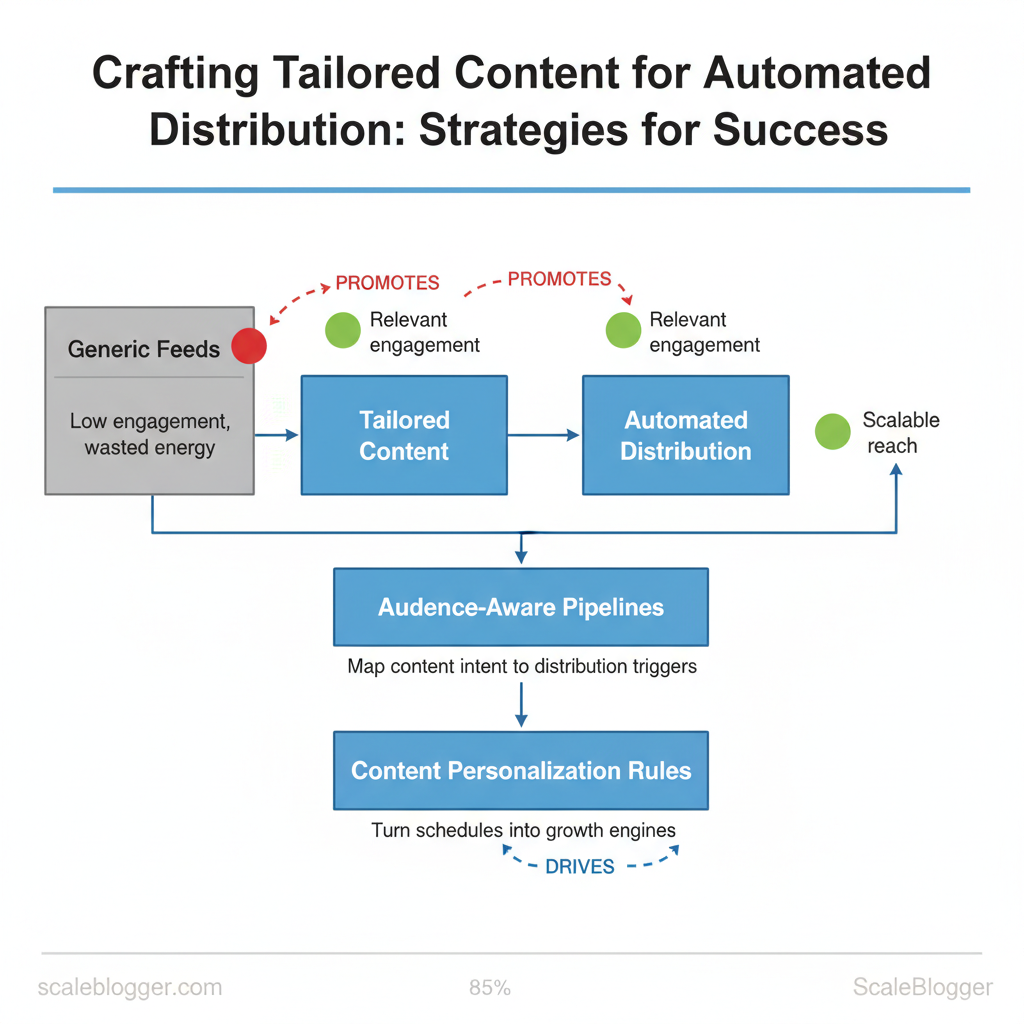

Marketing teams still spend too much time converting one-off assets into scalable programs, and that friction kills both reach and relevance. Industry practice now favors tailored content delivered through automated distribution, yet many implementations produce generic feeds rather than meaningful engagement. This gap costs attention, lowers conversion rates, and wastes creative energy.

Successful programs shift from one-to-many publishing to audience-aware pipelines that map content intent to distribution triggers. Picture a product team that routes feature explainers to high-intent segments, then uses automation to generate short-form variants across channels; engagement rises while manual hours fall. Layering content personalization rules into automation, rather than merely batching posts, turns schedules into growth engines.

- What an audience-aware pipeline looks like and why it outperforms simple scheduling

- How to translate content intent into distribution triggers and personalization rules

- Small technical patterns that unlock scalable variants without bloated workflows

- Metrics that reveal whether automation improves attention or just increases output

Understand the Foundations of Tailored Content

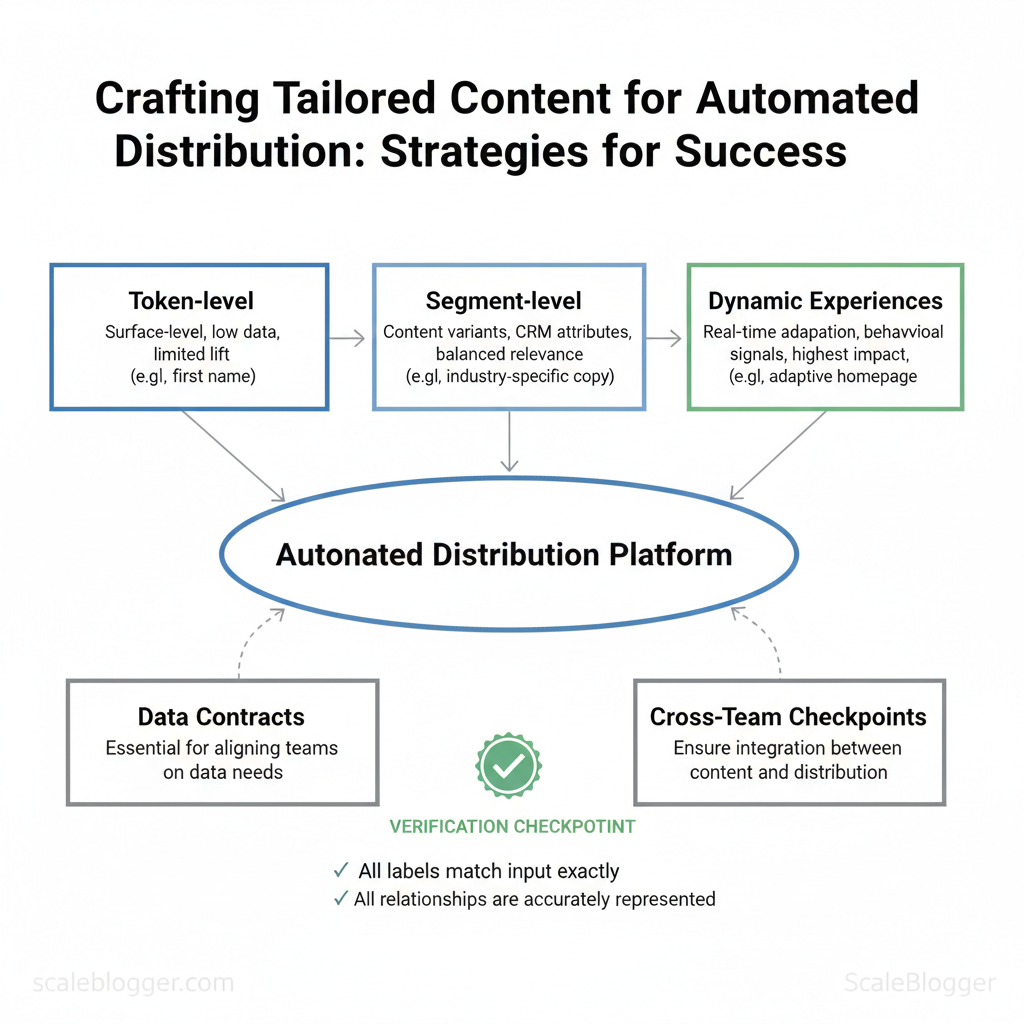

Start by treating personalization as a spectrum, not a binary switch. At one end are simple `token` substitutions (names, locations); in the middle sit segment-based variants (industry, persona); and at the far end are fully dynamic experiences driven by real-time behavior. Defining these levels early prevents scope creep, clarifies data needs, and sets realistic performance expectations for stakeholders.

Key concepts and a practical three-tier model

- Token-level personalization: surface-level replacements like `{{first_name}}`. Example: “Hi Maria, here’s your local guide.”

- Segment-level personalization: content variants based on audience buckets. Example: product page copy for “ecommerce managers” vs “site owners.”

- Dynamic experiences: content that adapts in real time to behavior or context. Example: a homepage hero that changes after detecting return visits or cart abandonment.

Why precise terminology matters for planning

Using consistent terms avoids cross-team misalignment: data engineers need to know whether a “personalized campaign” requires a single `name` field or streaming events. Product, content, and analytics teams should map features to these tiers during prioritization so data contracts, testing windows, and success metrics line up.

Why integration between content and distribution matters

Integration prevents wasted impressions and inconsistent user experiences. When content personalization is designed independently from distribution, teams risk sending the wrong variant to the wrong channel, or timing messages at the wrong stage of the funnel. Common failures include:

- Wrong segment: tailored article sent to a generic newsletter list.

- Timing mismatch: behavior-triggered content pushed before tracking activates.

- Channel mismatch: long-form, personalized pages repurposed into short SMS snippets without rework.

Personalization planning that ties content to distribution reduces wasted scale and improves user trust. When teams agree on definitions, data, and checkpoints up front, campaigns scale more predictably and with fewer surprises.

| Personalization Tier | What it changes | Data required | Typical use cases |

|---|---|---|---|

| Token-level (name, location) | Minor copy swaps, greeting lines | First name, city | Welcome emails, confirmation pages |

| Segment-level (industry, persona) | Variant headlines, CTAs, content blocks | CRM attributes, firmographics | Nurture sequences, landing pages |

| Dynamic experiences (real-time behavior) | Layout, offers, microcopy adapt live | Session events, behavioral signals | Cart recovery, personalized homepages |
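
To make the tiers usable in planning, one option is to encode each tier's data requirements and check which tier a given profile can actually support. The sketch below is illustrative only: the field names (`first_name`, `city`, `industry`, `persona`, `session_events`) are hypothetical stand-ins for whatever your data contract specifies, and it assumes tiers are cumulative (a profile must satisfy the lower tiers first).

```python
from enum import IntEnum


class Tier(IntEnum):
    NONE = 0
    TOKEN = 1
    SEGMENT = 2
    DYNAMIC = 3


# Hypothetical field names; a real data contract would list your own.
REQUIRED_FIELDS = {
    Tier.TOKEN: {"first_name", "city"},
    Tier.SEGMENT: {"industry", "persona"},
    Tier.DYNAMIC: {"session_events"},
}


def highest_supported_tier(profile: dict) -> Tier:
    """Return the highest tier whose fields (and all lower tiers' fields) are populated."""
    # Treat None, empty strings, and empty lists as missing data.
    present = {k for k, v in profile.items() if v not in (None, "", [])}
    supported = Tier.NONE
    for tier in (Tier.TOKEN, Tier.SEGMENT, Tier.DYNAMIC):
        if REQUIRED_FIELDS[tier] <= present:
            supported = tier
        else:
            break  # assumption: tiers are cumulative, so stop at the first gap
    return supported
```

A check like this can run before a campaign is scheduled, so a "dynamic" variant is never promised to profiles that only carry token-level data.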

Suggested tools and templates: a content-variant checklist, a `data-contract` template for engineers, and an editorial branching map. Scaleblogger.com’s AI content automation can accelerate variant creation and scheduling when teams are ready to scale.

Map Audiences and Signals for Automated Distribution

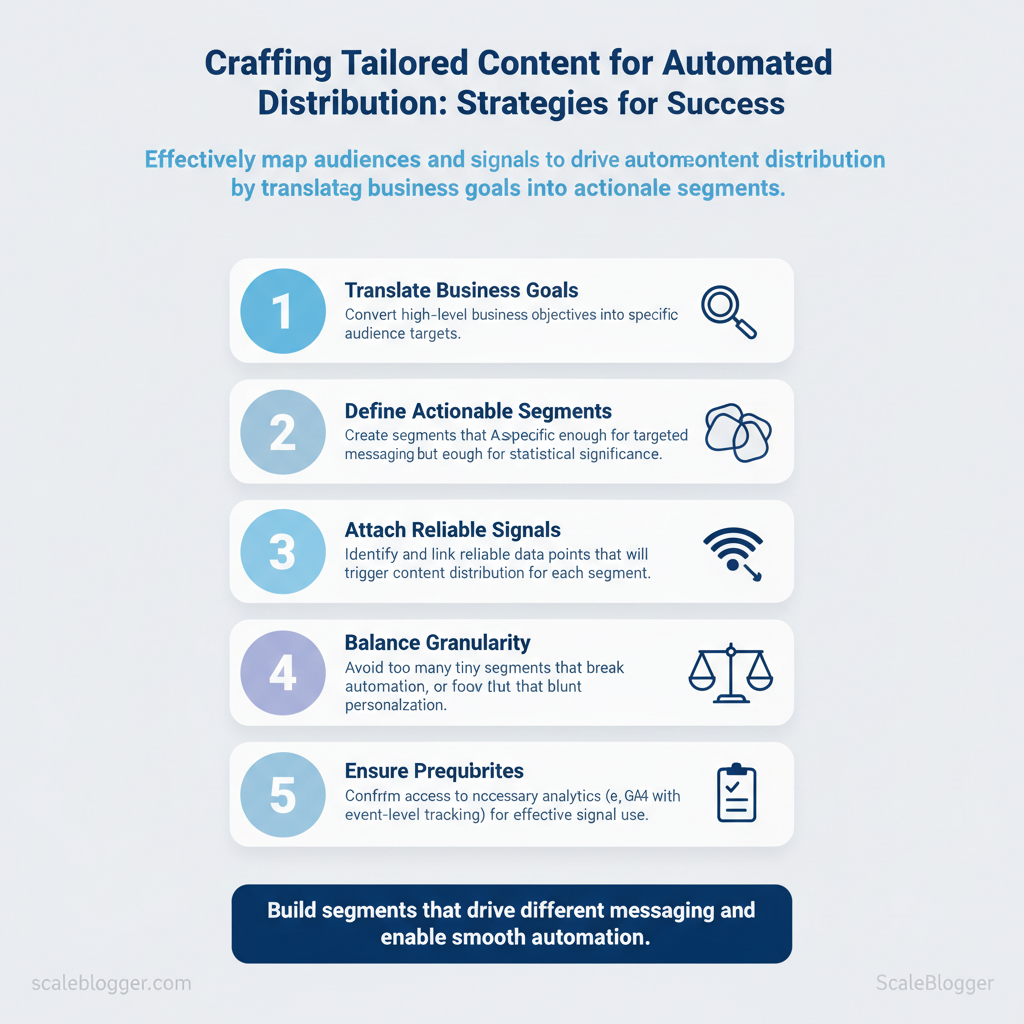

Start by treating audience mapping as a translation problem: convert business goals into actionable segments, then attach reliable signals that trigger distribution. Balance granularity with usable volume—too many tiny segments break automation; too few blunt personalization. Build segments that are specific enough to drive different messaging but large enough for statistically meaningful engagement and smooth automation.

Prerequisites

- Analytics access: GA4 or equivalent with event-level tracking.

- CRM/CDP connection: Contact-level fields and recent activity synced.

- Email/platform lists: Segmented and consent-verified.

- Team alignment: Clear conversion definitions (lead, MQL, purchaser).

Tools and materials

- Analytics platform: GA4 or server-side events.

- CRM/CDP: Salesforce, HubSpot, or a CDP like Segment.

- Automation engine: Native email/SMS or orchestration tools; consider integrating AI content automation such as `Scaleblogger.com` for dynamic blog-to-channel workflows.

- Export templates: CSV/JSON templates for syncing segments.

Signals to prioritize

- Behavioral recency: Visits or events in last 7–30 days — predicts intent.

- Conversion history: Purchases or trials — indicates value.

- Engagement depth: Pages per session, time on content — signals content fit.

- Channel responsiveness: Email open/click rates — guides distribution priority.

- Lifecycle stage: CRM stage or lead score — tailors messaging urgency.

Verification checklist to avoid noise

- Signal freshness: Is the timestamp accurate and recent?

- Uniqueness: Deduplicate by user ID or email.

- Completeness: ≥90% of target users have the required fields.

- Stability: Signal rate variance <30% week-over-week.

- Privacy/consent: Confirm lawful use and opt-ins.
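
Several of the checklist items above can be automated before a segment is synced. The following is a minimal sketch, assuming records arrive as plain dictionaries and that "variance" means the relative change between consecutive weekly signal counts; the function names and record shape are hypothetical, not part of any specific platform.

```python
def dedupe_by_key(records, key="user_id"):
    """Keep the first record per key; records without the key are dropped."""
    seen, unique = set(), []
    for record in records:
        value = record.get(key)
        if value is not None and value not in seen:
            seen.add(value)
            unique.append(record)
    return unique


def completeness(records, required_fields):
    """Share of records in which every required field is populated."""
    if not records:
        return 0.0
    complete = sum(
        all(record.get(field) not in (None, "") for field in required_fields)
        for record in records
    )
    return complete / len(records)


def is_stable(weekly_counts, max_change=0.30):
    """True when every week-over-week relative change stays below max_change."""
    for previous, current in zip(weekly_counts, weekly_counts[1:]):
        if previous == 0 or abs(current - previous) / previous >= max_change:
            return False
    return True
```

A segment would pass this gate when `completeness(...) >= 0.9` and `is_stable(...)` is true; freshness and consent checks still need their own sources of truth.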

| Segment | Definition / Criteria | Primary Data Source | Ideal Channels |

|---|---|---|---|

| New Visitor | First session within 14 days | Google Analytics / GA4 events | Social ads, On-site banners |

| Returning Visitor | >1 session in 30 days, no purchase | GA4 user_id, cookies | Email nurture, Paid social |

| Recent Purchaser | Purchase within 90 days | CRM transactions, CDP | Post-purchase email, SMS |

| Churn-risk | LTV decline or no activity 60+ days | CRM engagement, CDP flags | Win-back email, Retargeting |

| High-value Prospect | Lead score >75 or predicted LTV | CRM lead score, CDP model | Sales outreach, Personalized content |
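
The criteria in the table translate fairly directly into rules. Here is a rough sketch, assuming a user record with hypothetical field names (`first_session_date`, `sessions_last_30d`, and so on); it covers only the rule-based parts of each definition and leaves out the "LTV decline" and "predicted LTV" criteria, which would need a model or CDP flag.

```python
from datetime import date


def assign_segments(user: dict, today: date) -> list[str]:
    """Return every segment from the table whose rule-based criteria the user meets."""
    segments = []
    first_session = user.get("first_session_date")
    last_purchase = user.get("last_purchase_date")
    last_activity = user.get("last_activity_date")

    if first_session and (today - first_session).days <= 14:
        segments.append("New Visitor")
    if user.get("sessions_last_30d", 0) > 1 and last_purchase is None:
        segments.append("Returning Visitor")
    if last_purchase and (today - last_purchase).days <= 90:
        segments.append("Recent Purchaser")
    if last_activity and (today - last_activity).days >= 60:
        segments.append("Churn-risk")
    if user.get("lead_score", 0) > 75:
        segments.append("High-value Prospect")
    return segments
```

Because a user can match more than one rule, the function returns a list; deciding which segment takes priority for a given channel is a separate orchestration choice.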

Industry analysis shows that automation without clean signals creates noise rather than reach.

A simple `SQL` snippet for extracting recent purchasers:

```sql
-- MySQL-style date arithmetic; adjust DATE_SUB for other SQL dialects.
SELECT user_id, email, MAX(purchase_date) AS last_purchase
FROM purchases
WHERE purchase_date >= DATE_SUB(CURRENT_DATE, INTERVAL 90 DAY)
GROUP BY user_id, email;
```

Understanding these principles helps teams move faster without sacrificing quality. When implemented correctly, the approach reduces manual decision-making and lets creators focus on message and creative.

Design Content Templates and Modular Assets

Design modular templates so teams assemble pages and posts like building blocks: predictable, reusable, and easy to personalize without breaking brand voice. Start with a small library of core blocks—headline, intro hook, value proposition, social proof, CTA—each with explicit variables, naming rules, and fallback copy so automation can stitch content reliably.

| Module Name | Variables / Fields | Fallback Text | Best Use Case |

|---|---|---|---|

| Headline | `{{topic}}`, `{{metric}}`, `{{audience}}` | “Quick wins for modern teams” | Blog H1, social card |

| Intro Hook | `{{problem}}`, `{{timeframe}}` | “Many teams struggle with momentum.” | Post lead paragraph |

| Value Proposition Block | `{{feature}}`, `{{benefit}}`, `{{evidence}}` | “Delivers measurable improvements.” | Product/solution section |

| Social Proof / Testimonial | `{{name}}`, `{{role}}`, `{{quote}}`, `{{company}}` | “Trusted by peers.” | Case study excerpt, sidebar |

| Primary CTA | `{{action}}`, `{{target}}`, `{{urgency}}` | “Learn more” | End of article, conversion module |

{{headline}}

{{intro_hook}}

{{testimonial_quote}} — {{testimonial_name}}
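
Placeholders like the ones above need a renderer that never lets an unresolved variable reach the reader. The sketch below shows one way to do that with the standard library; the `render` function and the `FALLBACKS` mapping are hypothetical, and the fallback strings are taken from the module table. A production setup would more likely use an established template engine.

```python
import re

# Fallback copy from the module table, keyed by placeholder name (assumed mapping).
FALLBACKS = {
    "headline": "Quick wins for modern teams",
    "intro_hook": "Many teams struggle with momentum.",
    "testimonial_quote": "Trusted by peers.",
}

_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: dict, fallbacks: dict = FALLBACKS) -> str:
    """Replace {{name}} placeholders with a value, else the fallback, else fail loudly."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        value = values.get(name)
        if value:
            return str(value)
        if name in fallbacks:
            return fallbacks[name]
        # No value and no fallback: refuse to publish a broken variant.
        raise KeyError(f"No value or fallback for placeholder '{name}'")

    return _PLACEHOLDER.sub(substitute, template)
```

Raising on a placeholder with no fallback is a deliberate choice here: it routes the variant to review instead of publishing literal braces.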

Rules for personalization, tone, and consistency

- Personalization threshold: Only personalize when `audience_confidence_score >= 0.7` and at least two corroborating signals exist (behavioral + demographic).

- Avoid awkward personal references: Do not include location or life-event personalization unless consent and recent engagement exist.

- Variable integrity: All `{{}}` variables resolved or replaced with fallbacks.

- Tone match: Verify tone profile tag matches channel.

- Length checks: Headline ≤ 70 chars, intro ≤ 40 words.

- Accessibility: Alt text present for images, CTA keyboard-focusable.
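
Most of these rules are mechanical enough to run as a pre-publish check. The following sketch assumes the confidence score and signal types are already available on the audience record; the function names are hypothetical, and it reads "two corroborating signals" as requiring both a behavioral and a demographic signal.

```python
import re


def should_personalize(confidence: float, signal_types: set) -> bool:
    """Apply the threshold rule: score >= 0.7 plus behavioral and demographic signals."""
    return confidence >= 0.7 and {"behavioral", "demographic"} <= set(signal_types)


def qa_issues(headline: str, intro: str, body: str) -> list[str]:
    """Return a list of rule violations; an empty list means the variant passes."""
    issues = []
    if len(headline) > 70:
        issues.append("headline longer than 70 characters")
    if len(intro.split()) > 40:
        issues.append("intro longer than 40 words")
    if re.search(r"\{\{.*?\}\}", headline + intro + body):
        issues.append("unresolved {{variable}} found")
    return issues
```

Tone matching and accessibility checks are left out because they depend on channel metadata and rendered markup that this sketch does not model.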

Build Automation Workflows and Orchestration

Automation should behave like a reliable pipeline: predictable triggers, deterministic processing, clear delivery, and measurable verification. Start by defining each workflow as a timeline—what kicks it off, which systems transform the data, which template or channel publishes it, and how success is verified. That structure keeps orchestration maintainable and testable, and it makes rollback and A/B testing straightforward when something goes wrong.

Practical blueprint plus examples

Integration and data hygiene best practices

- Required endpoints: CDP ingest endpoint, ESP transactional/send endpoint, CMS publish API, Social platform post API, Analytics event ingestion—each must accept a canonical `event_id` and `user_id`.

- Data cadence: daily canonical sync for user profiles, real-time event streaming for triggers, hourly content freshness checks.

- Hygiene steps: validate payloads (schema + required fields), normalize keys (snake_case or camelCase consistently), deduplicate by `event_id`, TTL stale profiles (e.g., mark profiles inactive after 90 days).

- Fallback strategies: use template defaults when tokens missing, route to human queue for critical failures, skip non-blocking channels and log for retry when assets are unavailable.
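
The hygiene steps above (validate, normalize, deduplicate) can be sketched as a single pass over incoming events. This is a simplified illustration that assumes events are flat dictionaries and that snake_case is the chosen convention; `clean_events` and `to_snake_case` are hypothetical helper names, and a real pipeline would typically add full schema validation.

```python
import re

REQUIRED_FIELDS = ("event_id", "user_id")


def to_snake_case(key: str) -> str:
    """Convert camelCase or kebab-case keys to snake_case."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1_\2", key)
    return spaced.replace("-", "_").lower()


def clean_events(raw_events):
    """Normalize keys, reject events missing required fields, dedupe by event_id."""
    seen_ids = set()
    accepted, rejected = [], []
    for raw in raw_events:
        event = {to_snake_case(key): value for key, value in raw.items()}
        if any(not event.get(field) for field in REQUIRED_FIELDS):
            rejected.append(raw)  # candidates for a retry or human-review queue
            continue
        if event["event_id"] in seen_ids:
            continue  # duplicate delivery; first occurrence wins
        seen_ids.add(event["event_id"])
        accepted.append(event)
    return accepted, rejected
```

Keeping the rejected payloads, rather than silently dropping them, supports the fallback strategy of routing critical failures to a human queue.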

| Workflow | Trigger | Processing / Orchestration | Delivery Action | Verification Step |

|---|---|---|---|---|

| Welcome email series | New account created (webhook) | Enrich from CDP, dedupe, apply persona rules | Send via SendGrid transactional API (`/mail/send`) | ESP delivery receipt + sample open rate check |

| On-site hero personalization | Page load for logged-in user | Query CDP segment, pick hero variant, cache variant | Server-side render via CMS API, edge cache | Visual QA + analytics event `hero_view` |

| Segment-triggered social post | User reaches milestone (event stream) | Assemble post copy, attach image, queue moderation | Post via Twitter/X API or Buffer API | API success response + link click tracking |
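
Each row in the table follows the same shape: a trigger event is processed, delivered, and then verified. One way to keep that shape explicit in code is a small workflow record with one callable per stage. This is a sketch under that assumption; the `Workflow` class and `run_workflow` function are hypothetical, and the callables would wrap your real CDP, ESP, or CMS clients.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Workflow:
    name: str
    process: Callable[[dict], dict]  # enrich, dedupe, pick variant
    deliver: Callable[[dict], Any]   # send or publish; returns a receipt
    verify: Callable[[Any], bool]    # check the receipt or a follow-up signal


def run_workflow(workflow: Workflow, trigger_event: dict) -> str:
    """Run one trigger through the pipeline and report whether delivery was verified."""
    payload = workflow.process(trigger_event)
    receipt = workflow.deliver(payload)
    return "verified" if workflow.verify(receipt) else "needs_review"


# Usage with stand-in callables (no real API calls are made here).
welcome = Workflow(
    name="welcome_email",
    process=lambda event: {**event, "persona": "default"},
    deliver=lambda payload: {"status": "accepted", "to": payload["user_id"]},
    verify=lambda receipt: receipt.get("status") == "accepted",
)
```

Separating the stages this way makes each one testable on its own and gives rollback logic a clear place to hook in.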

For teams scaling content, automation plug-ins such as Scaleblogger.com's AI content automation can simplify template management and performance benchmarking, particularly when orchestrating many channel-specific variants.

Test, Measure, and Iterate for Continuous Improvement

Start by treating experiments as a product: define the outcome first, pick the smallest viable change that could move that metric, and design tests so results inform the next move. Channel-specific KPIs drive what “moving the needle” looks like — email needs opens and conversions, paid social needs CTR and CPA, on-site personalization needs conversion rate and revenue per visitor. Design experiments so they answer one question at a time; conflate too many variables and decisions become noisy.

Metrics, KPIs, and experiment design

Industry benchmarks put average email open rates at roughly 21%, so detecting a small relative lift in email requires a larger sample before the result can be trusted.

```text
Basic sample-size formula (approx.):
n ≈ (Z_{α/2} + Z_β)^2 * (p1(1 - p1) + p2(1 - p2)) / (p1 - p2)^2
```
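
The sample-size approximation can be evaluated with Python's standard library. The sketch below assumes a two-sided test at 5% significance and 80% power, which are common defaults but not values stated in this article; `sample_size_per_variant` is a hypothetical helper name, and the result is the approximate number of recipients needed in each variation.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p1: float, p2: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-variation sample size for comparing two proportions."""
    if p1 == p2:
        raise ValueError("p1 and p2 must differ to detect a lift")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # Z_{alpha/2}
    z_beta = NormalDist().inv_cdf(power)           # Z_beta
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# Baseline open rate of 21% and a 5% relative lift (21% -> 22.05%).
needed = sample_size_per_variant(0.21, 0.21 * 1.05)
```

Under these assumptions a 5% relative lift on a 21% open rate needs on the order of 24,000 recipients per variation, which is why small email lifts call for large lists.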

Scale safely: rollout strategies and governance

- Primary KPI met for two consecutive cycles.

- Secondary KPIs stable (no >5% negative drift).

- No unintended downstream harms (tech, legal, or privacy flags).

- Monitoring in place with alerting for rapid rollback.
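
The four criteria above can be combined into a single go/no-go check before widening a rollout. This is a minimal sketch with hypothetical inputs: a list of per-cycle booleans for the primary KPI, a mapping of secondary KPI names to relative change, a list of open risk flags, and a monitoring switch.

```python
def ready_to_scale(primary_kpi_met: list, secondary_kpi_changes: dict,
                   open_flags: list, monitoring_enabled: bool) -> bool:
    """True only when every rollout gate from the checklist passes."""
    # Primary KPI met in the two most recent cycles.
    two_cycles = len(primary_kpi_met) >= 2 and all(primary_kpi_met[-2:])
    # No secondary KPI has drifted more than 5% in the negative direction.
    secondary_stable = all(change >= -0.05 for change in secondary_kpi_changes.values())
    # No open tech, legal, or privacy flags, and alerting is in place.
    return two_cycles and secondary_stable and not open_flags and monitoring_enabled
```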

| Channel | Primary KPIs | Benchmark Lift to Watch For | Sample Size Note |

|---|---|---|---|

| Email | Open rate, CTR, Conversion rate | 5–15% relative lift (meaningful) | Use Mailchimp averages (open ≈21%); for <5% lift, target 10k+ recipients/variation |

| On-site Personalization | Conversion rate, Revenue per visitor | 10–30% lift possible for targeted segments | Start with 1–3k sessions/variant; larger for broad audiences |

| Paid Social | CTR, CPA, ROAS | 10–25% CTR lift; CPA reductions of 5–15% | WordStream benchmarks suggest CTRs low; plan 2–10k impressions/variant |

| Organic Social | Engagement rate, Referral traffic | 15–40% engagement lift for creative changes | Smaller samples ok (1k+ engagements); track downstream site conversions |


Ethical, Privacy, and Operational Considerations

Design personalization and automation around a consent-first model so personalization improves engagement without exposing users or the brand to legal and reputational risk. Start by treating data uses as product features: list them, show them at the point of collection, and make opt-outs simple. Operational rules must enforce fairness: audit training signals, log decisions, and set clear escalation paths when models behave unexpectedly.

Privacy and consent best practices

- Consent-first personalization: Collect explicit permission before using behavioral or profile data for individualized recommendations.

- Retention and access rules: Set retention windows by signal type (e.g., 30 days for session data, 24 months for subscription info) and enforce role-based access to PII.

- Practical example: At signup, present a short toggle for `recommendations based on activity` with a link to the `data-uses` page and a one-click revoke action in account settings.

- Policy enforcement: Automate deletion requests and maintain an audit log of consent changes.
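
Retention windows and a consent audit log can be sketched in a few functions. The example below assumes the retention values mentioned above (30 days for session data, 24 months for subscription info, approximated here as 730 days) and an in-memory list as the audit log; the function names are hypothetical, and a real system would persist the log and handle deletion requests separately.

```python
from datetime import datetime, timedelta

# Retention windows from the text; 24 months is approximated as 730 days.
RETENTION_WINDOWS = {
    "session": timedelta(days=30),
    "subscription": timedelta(days=730),
}


def is_expired(signal_type: str, collected_at: datetime, now: datetime) -> bool:
    """True when a stored signal has outlived its retention window."""
    return now - collected_at > RETENTION_WINDOWS[signal_type]


def record_consent_change(audit_log: list, user_id: str, purpose: str,
                          granted: bool, now: datetime) -> None:
    """Append-only log so every grant or revoke can be audited later."""
    audit_log.append({"user_id": user_id, "purpose": purpose,
                      "granted": granted, "at": now.isoformat()})


def has_consent(audit_log: list, user_id: str, purpose: str) -> bool:
    """Latest entry wins; no entry means no consent (consent-first default)."""
    for entry in reversed(audit_log):
        if entry["user_id"] == user_id and entry["purpose"] == purpose:
            return entry["granted"]
    return False
```

Defaulting to "no consent" when the log has no entry keeps the behavior aligned with the consent-first rule: personalization is off until the user turns it on.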

| Consent Model | Description | Pros | Cons |

|---|---|---|---|

| Implicit consent | User actions imply consent (e.g., continued site use after notice) | Low friction, easy UX | Weak legal fit under GDPR, ambiguous audit trail |

| Explicit consent | Clear affirmative action (checkbox/opt-in) | Strong regulatory fit, good auditability | Higher drop-off, more UX friction |

| Granular consent | Separate toggles per use (analytics, personalization) | User trust, flexible marketing | Complex UI, higher implementation cost |

Bias, fairness, and operational safeguards

Example audit checklist (short):

- Data provenance: source + collection date

- Fairness metrics: outcome parity, false positive rates by cohort

- Access log: who queried model outputs
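
For the fairness metrics in the checklist, a false positive rate per cohort is one of the simpler numbers to compute. The sketch below assumes each row is a `(cohort, predicted, actual)` tuple, where `predicted` is the model's decision (for example, "flag as churn-risk") and `actual` is the observed outcome; the function name and row shape are hypothetical.

```python
from collections import defaultdict


def false_positive_rate_by_cohort(rows):
    """Return {cohort: FP / actual negatives} for cohorts with at least one negative."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for cohort, predicted, actual in rows:
        if not actual:
            negatives[cohort] += 1
            if predicted:
                false_positives[cohort] += 1
    return {cohort: false_positives[cohort] / count
            for cohort, count in negatives.items()}
```

Large gaps between cohorts in this output are a prompt for review, not proof of bias on their own; sample sizes per cohort matter as much as the rates.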

Conclusion

Scaling tailored content requires three coordinated moves: align audience signals with topic frameworks, automate repetitive production steps, and measure engagement to iterate quickly. Teams that combine clear topic models with automation tend to reduce time-to-publish and improve relevance across segments. For example, a content ops team that standardized templates and automated distribution saw consistent lift in click-through rates within weeks, and a product marketing group that paired personalization rules with batched production reclaimed significant editorial capacity. Start by mapping your highest-value audience segments, then automate the content transforms that consume the most time.

- Map segments and priorities.

- Automate templated production workflows.

- Measure outcomes and refine weekly.

If questions remain, such as how to preserve voice at scale or which workflows to automate first, focus on the smallest repeatable unit you can standardize and pilot it for one channel. Platforms like Scaleblogger can automate tailored content and integrate with existing workflows. As a practical next step, explore automation options and run a short pilot to validate assumptions; a pilot of this kind can speed implementation while preserving editorial control.