What if the efficiency gains from AI start to erode trust in your brand faster than they boost productivity? Marketing teams increasingly rely on automation to scale content, and AI ethics issues—bias, misinformation, opaque decision-making—now surface in campaign audits and customer feedback.

Balancing scale with integrity shapes long-term audience relationships and legal exposure. Content marketing ethics isn’t an abstract compliance check; it influences conversion, retention, and brand reputation. Picture a campaign that reaches millions but triggers complaints because an automated persona echoed harmful stereotypes. That scenario costs more than edits—it damages audience trust.

- How to map ethical risk across the content lifecycle

- Practical guardrails for training and validating models

- Workflow changes that preserve creativity while enforcing responsible AI controls

- Metrics that track trust alongside reach and engagement

The following sections break down practical steps, common pitfalls, and governance patterns that embed ethics into day-to-day content operations.

What Is AI Ethics in Content Marketing?

AI ethics in content marketing is the set of principles, practices, and guardrails that ensure AI-generated or AI-assisted content is truthful, fair, responsible, and aligned with brand and regulatory expectations. At its simplest, it answers: How do we use automation and machine learning to scale content without causing harm, misleading audiences, or degrading long-term brand trust? That question shapes editorial choices, data practices, and how teams validate AI outputs before publication.

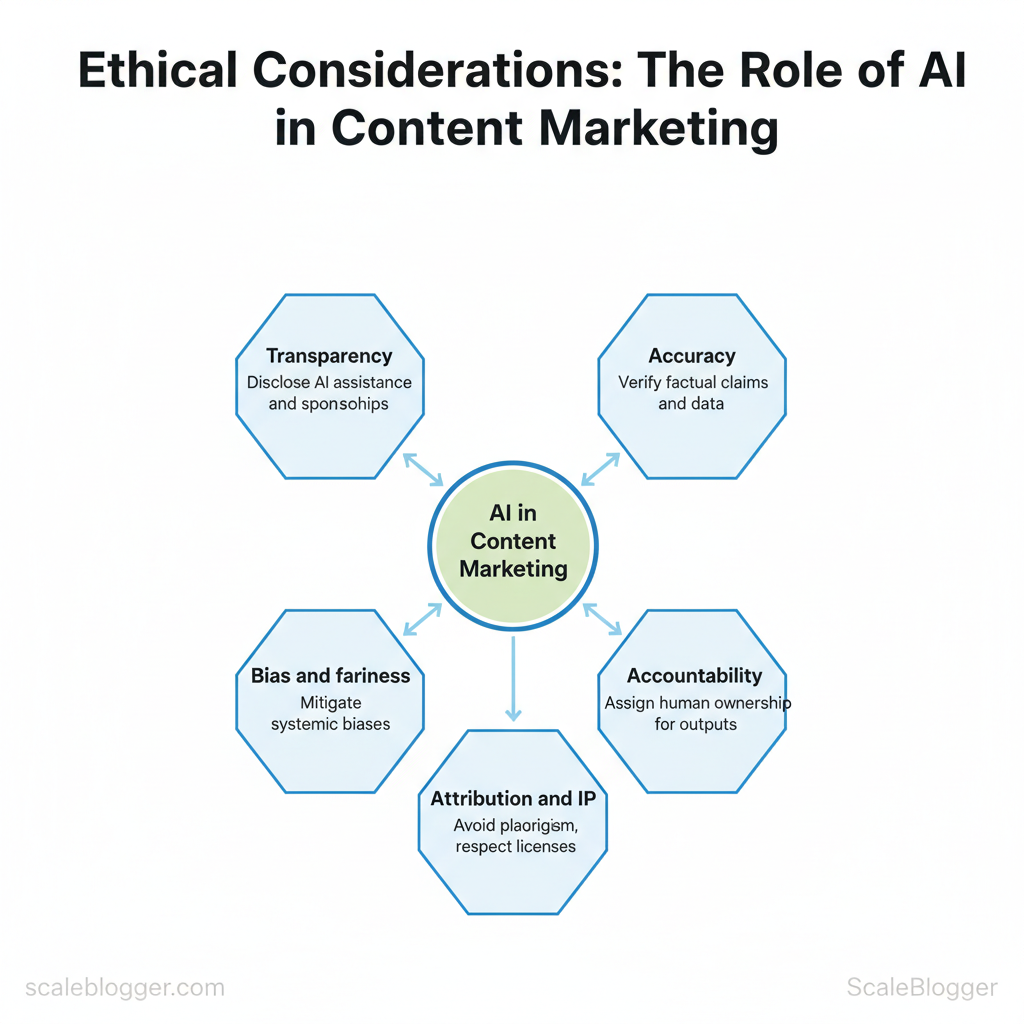

Core ethical dimensions

- Transparency: Be clear when content is AI-assisted or generated, and disclose material sponsorships.

- Accuracy: Ensure factual claims, citations, and data are verified before publishing.

- Bias and fairness: Detect and mitigate systemic biases in language, imagery, and targeting.

- Privacy: Protect personal data used for personalization and comply with consent requirements.

- Attribution and IP: Avoid plagiarism, respect licenses, and disclose model training constraints where relevant.

- Accountability: Assign human ownership for final outputs and remediation when issues arise.

“Transparency builds trust in AI outputs.”

How ethics plays out in practice

Practical editorial workflow (sequence)

Teams adopting AI content automation like AI content automation should bake these steps into pipelines so tools increase velocity without increasing risk. Tools can auto-run plagiarism checks and source-trace outputs, but human judgment remains the final control.

Understanding these principles helps teams move faster without sacrificing quality. When ethics are integrated into the workflow, content scales responsibly and sustains audience trust.

How Does Responsible AI Work in Content Creation?

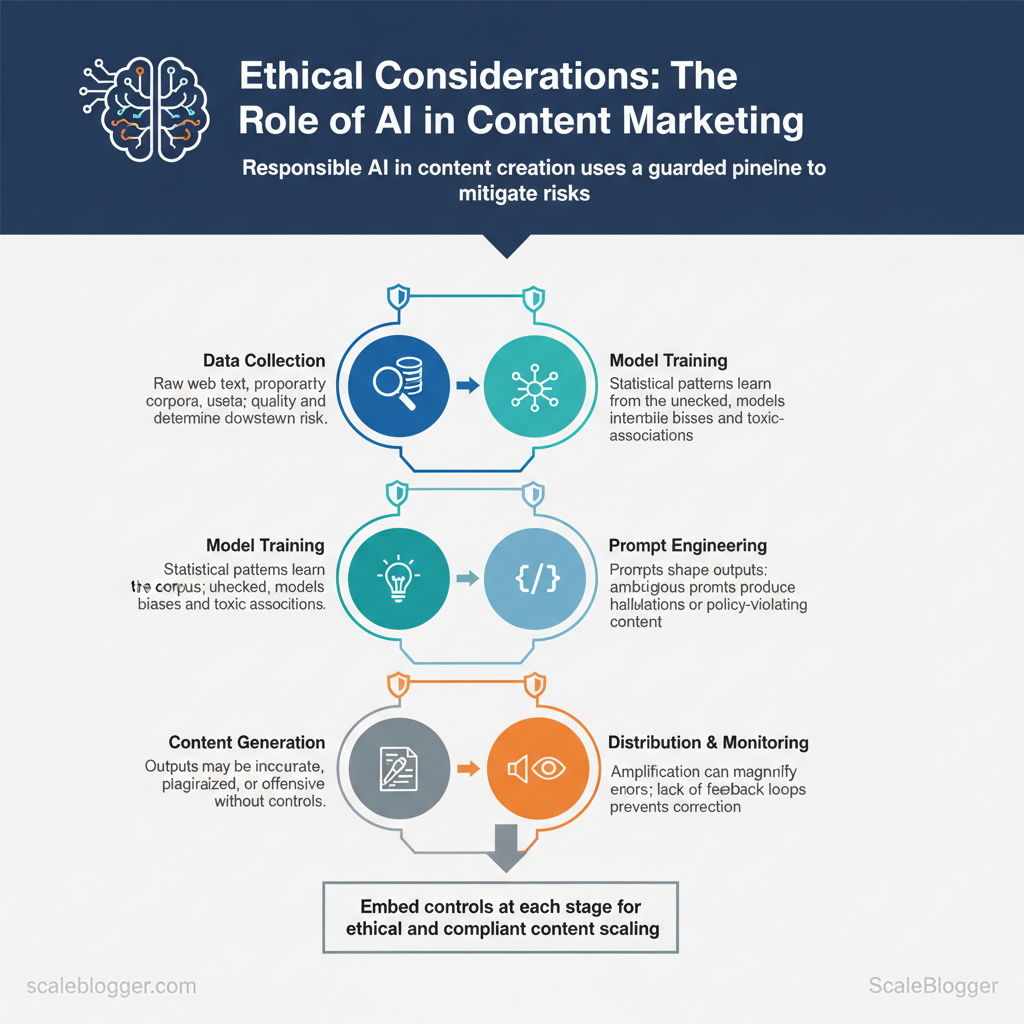

Responsible AI in content creation operates as a guarded pipeline: data enters, models learn, prompts guide outputs, content is produced, and distribution is monitored — with controls at each handoff to prevent bias, misinformation, IP violations, and reputational harm. Teams implement layered checks: provenance and consent during data collection, bias audits during model training, guarded prompt design, automated and human review on generation, and real-time monitoring after publication. This approach treats ethical safeguards as part of engineering and editorial workflows rather than occasional audits, so creators can scale content confidently while retaining accountability and legal compliance.

Pipeline overview and how risks appear

Practical controls and examples

- Data provenance: Maintain `dataset_manifest.json` with source, license, and sampling notes.

- Bias testing: Run targeted probes for demographic skew and word-embedding associations.

- Constrained prompts: Use templates that require sources and tone constraints (e.g., `–cite=sources –tone=neutral`).

- Human-in-the-loop: Gate high-risk content behind editor approval; low-risk content can follow automated QA.

- Audit logging: Keep immutable logs for model inputs/outputs and reviewer decisions to support remediation and compliance.

| Pipeline stage | What happens | Ethical risks | Mitigation controls |

|---|---|---|---|

| Data collection | Gather web text, licensed corpora, first-party data | Privacy breaches, unlicensed use, sampling bias | Data manifest, consent tracking, license checks, diversity sampling |

| Model training | Train or fine-tune LLMs on corpus | Bias amplification, toxic generation, memorized PII | Differential privacy, debiasing layers, remove PII, validation suites |

| Prompt engineering | Design instructions and templates | Ambiguous prompts → hallucinations, prompt injection | Constrained templates, prompt sanitization, temperature limits |

| Content generation | Produce drafts, summaries, ads | Misinformation, plagiarism, harmful claims | Source attribution, plagiarism checks, automated fact-checkers |

| Distribution & monitoring | Publish and propagate content | Reputation risk, unchecked amplification, feedback gaps | Real-time monitoring, performance & harm dashboards, escalation workflows |

Understanding these mechanisms helps teams move faster without sacrificing quality. When implemented correctly, responsible controls become part of the content workflow, freeing creators to focus on strategy and storytelling while compliance and safety run in the background.

Why It Matters: Business, Legal, and Brand Risks

Responsible AI in content isn’t optional—it’s central to maintaining revenue, compliance, and customer trust. When AI-driven content goes wrong the consequences cascade: lost customers, regulatory fines, and long-term brand damage. Conversely, responsible implementation reduces operational friction, improves targeting accuracy, and protects reputation—turning AI from a liability into a strategic asset.

Miscalculated AI deployment creates five visible business risks and five corresponding benefits when handled correctly:

- Top 5 risks of getting it wrong

- Reputation erosion: Brand credibility declines after widely shared incorrect claims.

- Regulatory exposure: Noncompliance with advertising or data rules can trigger fines.

- Customer harm: Biased recommendations or misinformation damages user outcomes.

- Operational disruption: Poor automation increases manual review costs and slows publishing.

- Security incidents: Unauthorized data exposure leads to legal claims and remediation costs.

- Top 5 benefits of ethical AI adoption

- Trust preservation: Accurate, transparent content sustains customer lifetime value.

- Regulatory resilience: Built-in governance reduces audit risk and remediation spend.

- Inclusive targeting: Bias mitigation expands addressable audiences and conversion rates.

- Efficiency gains: Automated, quality-checked workflows lower time-to-publish.

- Competitive differentiation: Clear policies and measurable outcomes strengthen market positioning.

| Scenario | Risk if uncontrolled | Benefit if responsibly managed | Business impact |

|---|---|---|---|

| Misinformation in content | Widely shared inaccuracies, viral backlash | Verified facts, editorial sign-off workflow | Protects brand equity; prevents churn |

| Biased targeting | Exclusion of groups; discrimination claims | Inclusive models, bias audits | Expands market reach; reduces legal exposure |

| Unauthorized data exposure | Data breach notifications, fines | Data minimization, encryption, consent logs | Lowers breach costs; maintains compliance |

| Lack of transparency | Consumer mistrust, regulatory scrutiny | Clear labeling, provenance metadata | Improves trust metrics; eases audits |

| Automated decision errors | Wrong offers, customer harm | Human-in-loop checks, rollback controls | Reduces refunds/claims; stabilizes ROI |

Practical next steps include building `model cards`, enforcing access controls, and adding human review gates near high-risk outputs. For teams scaling content operations, platforms that combine automation with governance—such as an AI-powered content pipeline—make it easier to implement these controls without slowing delivery. Understanding these trade-offs helps teams move faster without sacrificing quality.

Practical Frameworks and Policies for Responsible Use

Teams should adopt a lightweight, repeatable governance approach that treats AI as a teammate—not an oracle. Start with a simple operational checklist that enforces guardrails, assigns clear roles, and embeds review cadence into existing content workflows. This keeps creators fast while ensuring quality, compliance, and measurable improvement.

- Content Owner: owns strategy, approves intent and scope.

- AI Safety Lead: drafts prompt standards, maintains banned-topics list.

- Data Steward: maintains provenance logs and model metadata.

- Editor: verifies accuracy, readability, and legal compliance.

- Analytics Lead: defines KPIs and runs the post-publish audit.

Industry analysis shows teams that formalize review cadence find fewer downstream legal or brand issues and faster remediation.

Adaptable policy blurb to copy into org docs “`text Policy: AI-assisted content must include provenance metadata, meet editorial accuracy thresholds, and receive human editor sign-off before publication. Exceptions require documented approval by the Content Owner and AI Safety Lead. “`

Operational tools that support this model include provenance logging, automated prompt templates, and content scoring dashboards. For teams wanting to scale the pipeline, consider integrating an `AI content automation` provider such as https://scaleblogger.com to handle orchestration and monitoring. When governance is simple, repeatable, and aligned to roles, teams move faster without sacrificing trust or quality.

Common Misconceptions and Myth-Busting

Most objections to AI and automation in content come from misunderstandings about what these tools actually do, not from flaws in the tools themselves. AI is not a replacement for thinking; it’s a mechanism for shifting repetitive, low-value work out of human hands so creators can focus on higher-impact decisions. Below are six persistent myths, concise rebuttals, and practical checks to change behavior rather than just beliefs.

Industry analysis shows automation often increases content output without proportionally increasing headcount, unlocking more time for strategy and differentiation.

Practical next steps: map which tasks consume the most time, apply automation to those tasks first, and require human-led checkpoints for creative and strategic decisions. For teams wanting a repeatable pipeline, consider solutions that help you `Build topic clusters` and `Scale your content workflow` to preserve quality while increasing velocity. Understanding these myths shifts behaviors in ways that improve both efficiency and creative impact.

Real-World Examples: Case Studies and Use Cases

Companies deploying AI in content and marketing face clear wins and avoidable failures. Below are four concise, concrete case studies—two where AI caused harm and two where it produced measurable gains—each with a clear lesson and a direct action teams can implement this week.

Case A — Misinformation spread A major media publisher syndicated AI-generated summaries that unintentionally amplified a false claim; article went viral before corrections. Lesson: automated content requires verification workflows. Immediate action: 1) implement a human fact-check gate for high-traffic items; 2) add `source` metadata to each AI draft.

Case B — Biased targeting An ad-tech experiment used automated audience models that excluded demographic groups, reducing reach and causing reputational damage. Lesson: training data bias manifests in targeting. Immediate action: 1) run fairness tests on cohort outputs; 2) enforce minimum inclusion thresholds in lookalike audiences.

Case C — Transparent AI adoption (positive) A B2B brand used labeled AI-assisted drafts and editor notes to scale thought leadership without losing brand voice, improving CTR and time-on-page. Lesson: transparency builds trust and scale. Immediate action: 1) publish an editorial note when AI assists content; 2) use `AI_edit_history` fields in CMS for auditability.

Case D — Privacy-aware personalization (positive) An e‑commerce team implemented on-device personalization that used hashed, consented signals to tailor recommendations, increasing revenue per user while staying GDPR-compliant. Lesson: privacy-first design and consented signals scale safely. Immediate action: 1) switch to aggregated cohort signals for testing; 2) implement consent banners that map to personalization flags.

- Automated audit logs: capture `prompt`, `model_version`, `editor_id`.

- Fairness checklist: run for each campaign pre-launch.

- Consent mapping: link UI opt-ins to personalization logic.

| Case | Outcome | Root ethical issue or success factor | Recommended immediate action |

|---|---|---|---|

| Case A — Misinformation spread | Article amplified false claim; correction cycle | Lack of verification in automated summaries | Add human fact-check gate; include `source` metadata |

| Case B — Biased targeting | Reduced reach; reputational complaints | Training-data bias in audience models | Run fairness tests; set inclusion thresholds |

| Case C — Transparent AI adoption | Higher CTR; consistent brand voice | Transparency & traceability of AI edits | Label AI-assist; store `AI_edit_history` in CMS |

| Case D — Privacy-aware personalization | Increased revenue; compliant with consent | Privacy-first design using consented signals | Use cohort/hashed signals; map consents to flags |

Understanding and applying these practical controls lets teams move faster while preserving credibility and compliance. When done correctly, automation becomes a lever for responsible growth rather than a liability.

📥 Download: Ethical AI Content Marketing Checklist (PDF)

Practical Tools, Checklists and Resources

Practical governance and tooling reduce guessing and accelerate safe, repeatable content production. Below are vetted resources organized by function so teams can plug into an existing pipeline or build a lightweight governance layer quickly. The emphasis is on tools that surface bias, document provenance, monitor model behavior in production, and teach practical mitigation strategies — plus a hands-on pre-publish checklist that works with any editorial workflow.

| Resource | Category | Primary use-case | Notes / alternatives |

|---|---|---|---|

| NIST AI Risk Management Framework | Governance template | Risk assessment, policy baseline | Free, framework for enterprise governance |

| Model Card Toolkit (Google) | Governance template | `model_card` documentation, transparency | Open-source, integrates with training pipelines |

| IBM AI Fairness 360 | Bias detection tool | Bias metrics, remediation algorithms | Open-source, preprocessing/in-processing/postprocessing methods |

| Google What-If Tool | Bias detection tool | Visual counterfactuals, dataset probing | Free, integrates with `TensorBoard` |

| Adobe Content Authenticity (Content Credentials) | Content provenance tool | Image/video provenance, tamper-evidence | Adoption by publishers; Adobe integration |

| Project Origin | Content provenance tool | Provenance for news/media | Industry consortium; complements Adobe CAI |

| Weights & Biases | Monitoring / dashboard | Model monitoring, experiment tracking | Paid plans, free tier for small teams |

| Evidently AI | Monitoring / dashboard | Drift detection, performance reports | Open-source core, enterprise features paid |

| Fiddler AI | Monitoring / dashboard | Explainability, model risk analytics | Commercial; strong enterprise controls |

| Fast.ai courses | Training resource | Practical ML ethics & robustness | Free course material, community-driven |

| Coursera – AI For Everyone | Training resource | Non-technical governance primer | Paid certificate option, audit available free |

| Partnership on AI | Community / standards | Multi-stakeholder guidance, best practices | Membership + public resources |

Pre-publish checklist (drop into your CMS as a pre-publish step):

“`text Pre-publish Checklist — Responsible Content

Notes on free vs paid alternatives:

- Free/open-source: Best for experimentation and transparent workflows — Model Card Toolkit, AI Fairness 360, Evidently, Fast.ai.

- Paid/commercial: Offer polish, SLAs, integrations, and enterprise controls — Weights & Biases, Fiddler AI, Adobe Content Credentials.

- Hybrid approach: Use open-source for validation and a commercial service for production monitoring and compliance reporting.

Conclusion

Bringing AI-driven content into regular marketing workflows demands balancing speed with accountability: build clear governance, keep humans in the loop for critical decisions, and measure trust signals alongside efficiency metrics. A mid-market publisher that introduced a two-stage human review cut fact-check errors by half while maintaining output volume, and an enterprise marketing team that phased a generative copy pilot across one product line preserved brand voice while scaling seasonal campaigns. Those examples show the pattern: governance, phased rollout, and continuous measurement prevent short-term gains from turning into long-term reputation risk.

Start with a lightweight audit of existing automations, define who owns model outputs, and run a constrained pilot before expanding. Expect questions like how much oversight is enough or when to replace manual steps with automation; answer them by setting risk thresholds and tracking brand-safety KPIs during the pilot. For teams looking to automate responsibly at scale, consider tools that enforce policy, audit trails, and approval workflows—these make it easier to pilot and then scale. When ready to explore a platform built for that purpose, Learn how Scaleblogger helps teams enforce responsible AI workflows — it’s a practical next step for turning the governance practices described here into repeatable operations.