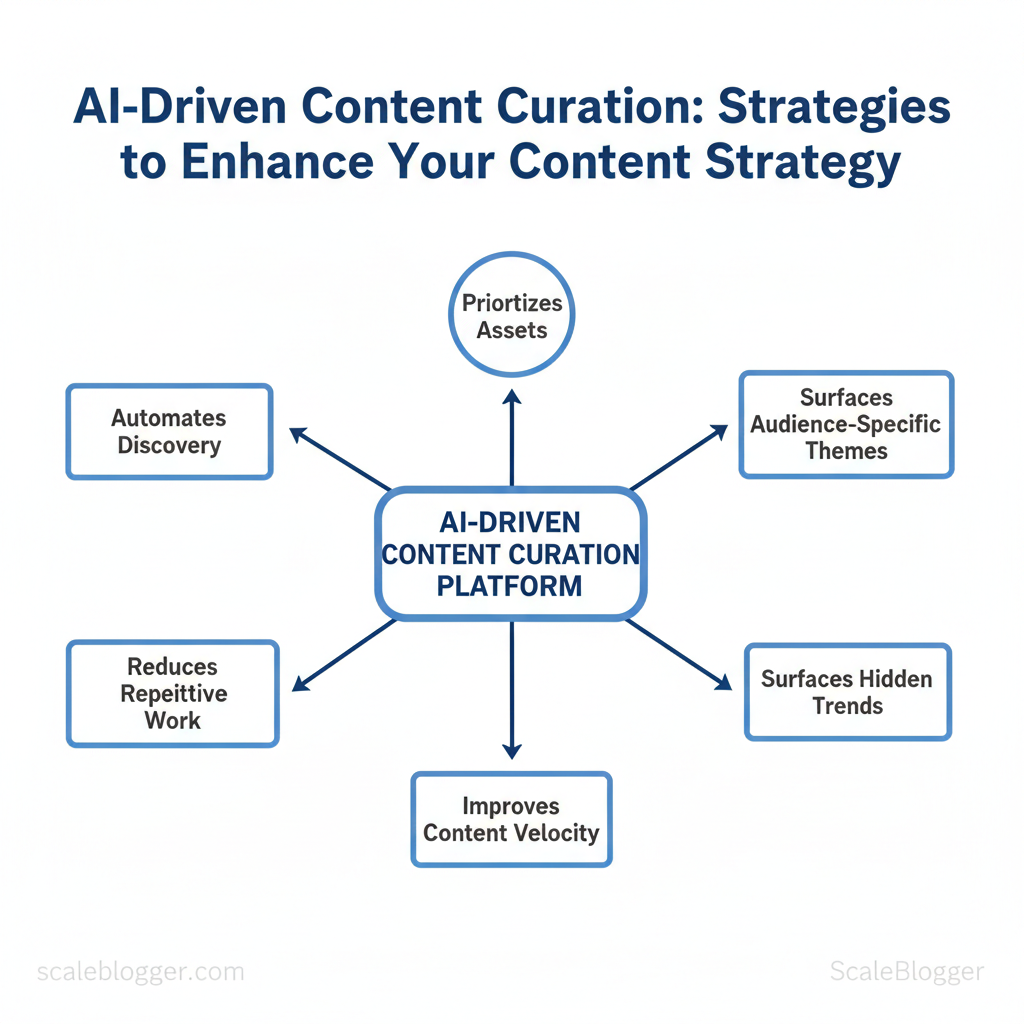

Marketing teams waste hours sifting through feeds and spreadsheets to find content that actually moves metrics. AI-driven content curation automates discovery, prioritizes high-impact assets, and surfaces audience-specific themes so teams spend time on strategy, not triage.

Applied correctly, AI reduces repetitive work, surfaces hidden trends, and improves content velocity while preserving brand voice. Industry research and practitioner guides highlight gains in efficiency and personalization when models are tuned to business KPIs and editorial rules.According to Jasper these systems also streamline SEO and performance tracking.

- How to map AI outputs to commercial goals and editorial standards

- Simple workflows that let humans approve or refine machine suggestions

- Metrics to track so automation actually improves ROI

Explore automated content curation workflows with Scaleblogger: https://scaleblogger.com

Foundations of AI-Driven Content Curation

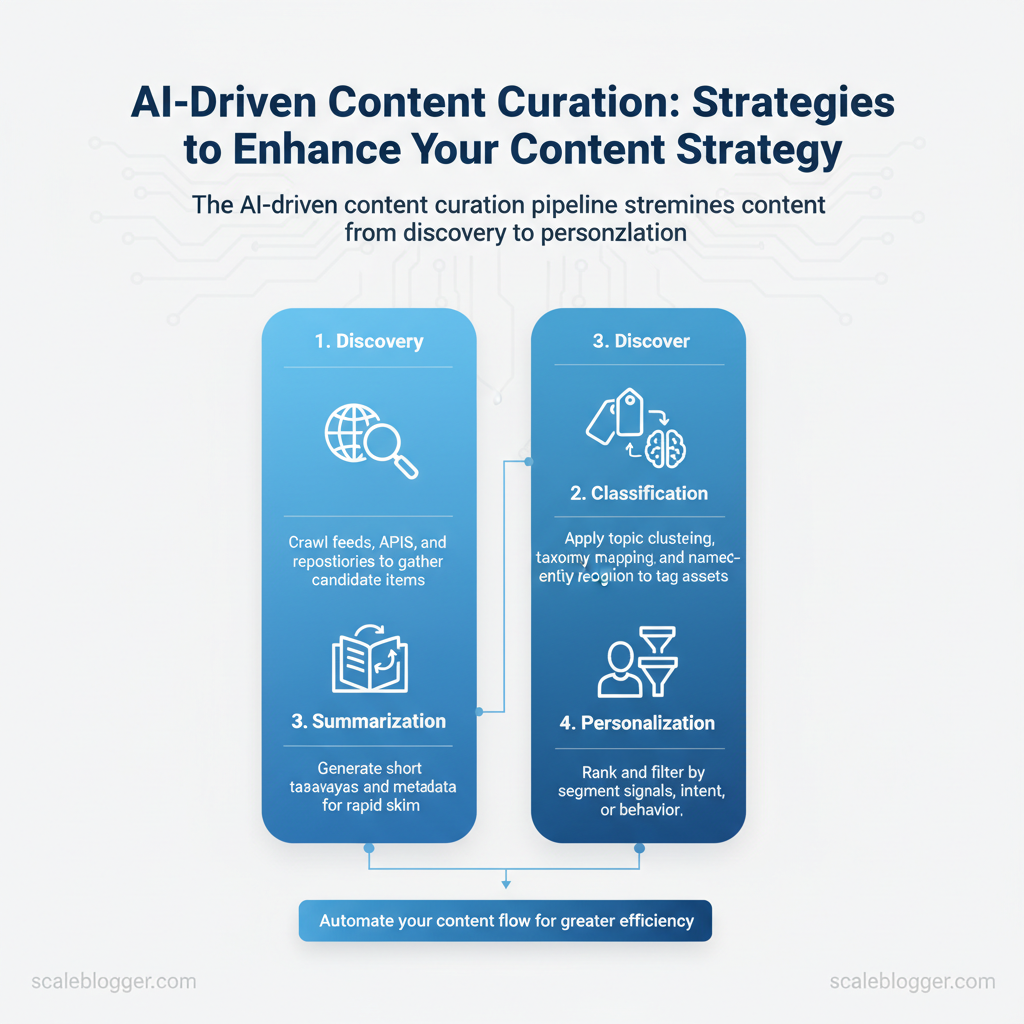

AI-driven content curation automates discovery, organization, and delivery of the most relevant assets for an audience. At its core it replaces manual sifting with models that surface, tag, summarize, and personalize content at scale. That means teams spend less time hunting for sources and more time shaping narrative and distribution.

- Discovery at scale — continuous ingestion across RSS, social, and internal archives.

- Automated classification — unsupervised `topic clustering` and supervised tagging.

- Concise summarization — extractive or abstractive summaries for fast consumption.

- Behavioral personalization — recommendations tuned to segments and funnels.

- Integrations — CMS, scheduling, analytics, and compliance checkpoints.

- Use AI for high-volume streams, real-time feeds, and recurring newsletters.

- Reserve manual curation for high-stakes editorial voice, legal/medical compliance, or nuanced thought leadership.

- Combine both — an assisted workflow where AI pre-filters and editors approve yields the best throughput-quality balance (this is consistent with practice recommended in AI content strategy discussions such as the Jasper AI content strategy guide and comparative overviews like Nightwatch on AI-driven strategies).

| Decision Factor | Manual Curation | Assisted Curation | Automated Curation |

|---|---|---|---|

| Best use case | High-touch thought leadership | Editorial + AI triage | Real-time feeds, large volumes |

| Speed | Minutes–hours per item | Seconds–minutes (with human review) | Sub-second to seconds |

| Consistency | Variable by editor | Higher (guidelines + AI) | Very high (model-driven) |

| Editorial control | Full control (human) | Shared control (human oversight) | Low control (rules/models) |

| Resource requirements | Skilled editors, time | Editor + AI subscription | Engineering + model / vendor |

Understanding these principles helps teams move faster without sacrificing quality. When implemented correctly, this approach reduces overhead by making decisions at the team level.

Building the Data Pipeline for Curation

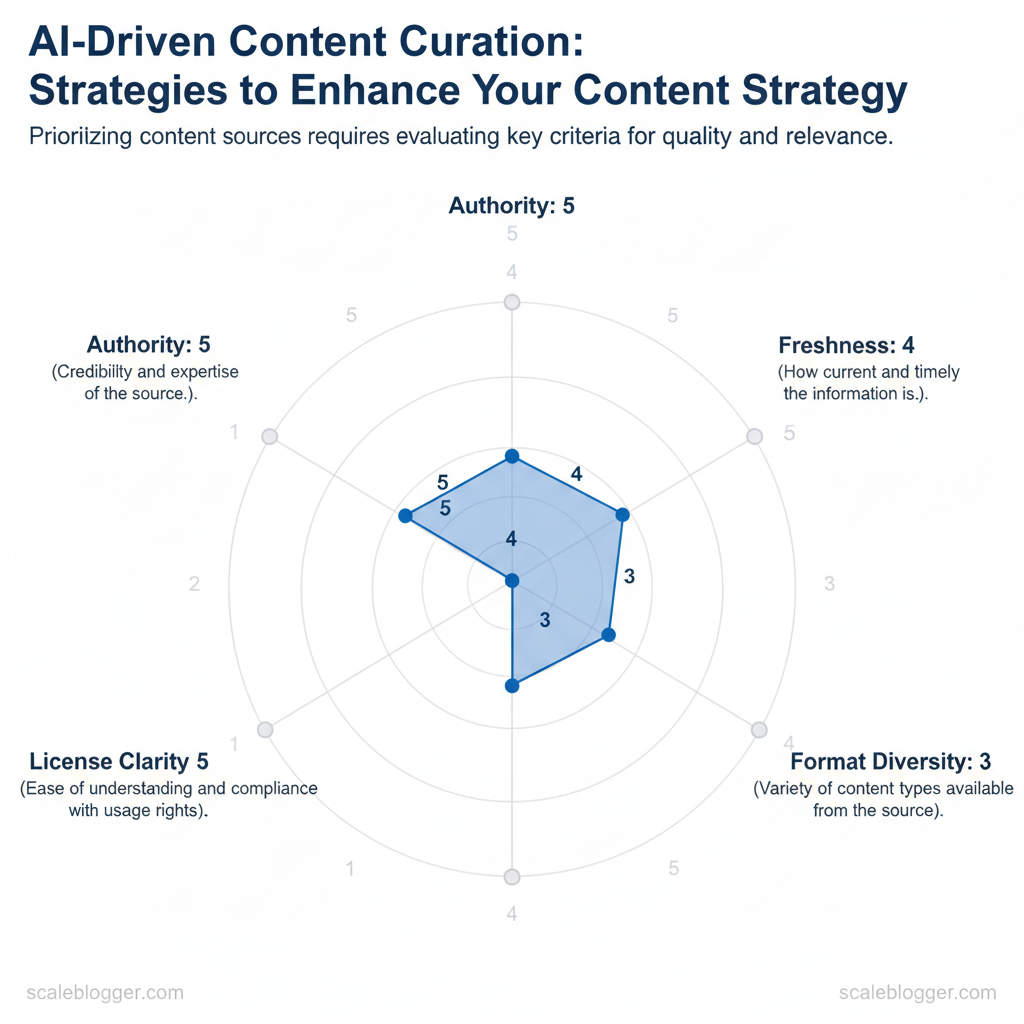

Begin by treating source selection and ingestion as product requirements: what outputs do editors and models need, and what guarantees must the pipeline provide for freshness, provenance, and reuse. Prioritize sources that consistently deliver signal — not just volume — then automate collection and normalization so downstream models and teams consume predictable records.

Example normalized schema: “`json { “title”:”Example Title”, “author”:”Jane Doe”, “publish_date”:”2025-06-12T08:00:00Z”, “canonical_url”:”https://example.com/article” “source_id”:”forbes.com”, “license”:”CC-BY-NC-4.0″, “tags”:[“ai”,”content strategy”], “intent_score”:0.82, “provenance”:{“fetched_at”:”2025-11-24T10:00:00Z”,”fetch_method”:”api”} } “`

- Use rate-limited workers and backoff to avoid API bans.

- Store license URLs and archive snapshots (Wayback or raw HTML) for legal audits.

- Re-score content periodically to capture evolving relevance.

| Source | Authority Score | Freshness (update freq) | Formats | License/Use Notes |

|---|---|---|---|---|

| Industry publications | High (DA 70–90) | Weekly–Daily | Articles, long-form, analysis | Often restrictive; check syndication/licensing |

| Academic papers | High (Citations/peer-reviewed) | Quarterly–Ongoing | PDFs, preprints | Usually copyright; some open access (CC) |

| Competitor blogs | Medium (DA 40–70) | Weekly–Daily | Case studies, posts | Copyrighted; use excerpts + attribution |

| Social posts (X/LinkedIn) | Variable (low–high) | Real-time | Short posts, threads, media | Platform TOS; capture author metadata |

| User-generated forums | Low–Medium | Real-time | Q&A, comments | User content rights vary; verify before reuse |

Following these steps makes curation predictable and scalable while preserving legal safety and editorial quality. Understanding these principles helps teams move faster without sacrificing reliability.

AI Techniques and Tools for Effective Curation

Start by mapping problems to techniques: use NLP for extraction and summarization, embeddings for semantic search and clustering, topic modeling for editorial grouping, and ranking models for personalized feeds. These components combine into pipelines that find, normalize, and surface the right content to the right audience.

- NLP (summarization & entity extraction): Use `transformers` or managed APIs to generate abstracts, extract named entities, and tag content for taxonomy alignment.

- Embeddings (semantic similarity): Encode documents and queries into vectors (e.g., OpenAI/Cohere/Pinecone) to power `nearest-neighbor` search and content deduplication.

- Topic modeling (LDA, BERTopic): Group large corpora into editorial buckets to build evergreen calendars and cluster ideas for pillar pages.

- Ranking models (learning-to-rank): Combine signals — recency, engagement, personalization score — to rank content for users or newsletters.

- Hybrid pipelines: Combine rule-based filters with ML to control quality and reduce hallucination risk.

Industry analysis shows adoption favors platforms with easy CMS connectors and clear data policies.

| Criteria | Small teams | Mid teams | Enterprise |

|---|---|---|---|

| Budget considerations | Low: Free tiers / $20–$50/mo (ChatGPT Plus, StoryChief) | Moderate: $39–$200/mo (Jasper plans, StoryChief growth) | High: Custom pricing, enterprise contracts |

| Integration complexity | Low: Plug-ins, Zapier | Medium: APIs, partial dev resources | High: Full API, SSO, custom connectors |

| Customization needs | Basic: Templates, prompt tuning | Advanced: Fine-tuning, model ops | Full: Fine-tune, private models, MLOps |

| Support and SLAs | Community: Docs, forums | Business: Email support, onboarding | Enterprise: 24/7 SLAs, dedicated CSM |

| Data privacy controls | Limited: Shared infra | Improving: Dedicated projects, opt-outs | Strong: VPCs, SOC2, data residency |

Understanding these pieces makes it practical to assemble a curation pipeline that balances speed, control, and compliance. When implemented correctly, this approach reduces overhead and lets content teams focus on strategy rather than manual wrangling.

Workflow Design: From Discovery to Publication

Start by treating content as a repeatable production line: discovery informs briefs, briefs feed creation, drafts move to QA, then scheduling and publication. The value of a designed workflow is removing friction at handoffs so creators spend time on craft, not coordination.

Templates for handoffs “`markdown Brief ID: B-2025-045 Title: Intent: Primary sources (with URLs): SEO target: Writer: Editor: Due dates: Automated checks run: [plagiarism, fact-check, license] Notes: “`

| QA Item | Automated Check | Human Review | Frequency |

|---|---|---|---|

| Factual accuracy | `Fact-checker` matches claims to cited URLs, flag inconsistencies | Verify nuance, context, and interpretation | Per-article |

| Source licensing | Metadata scan for copyright/CC tags, vendor API checks | Legal/editor review for paid/partner assets | Per-asset |

| Tone/style alignment | Style linter enforces `voice`, sentence length, passive voice | Editor adjusts brand voice, idioms, and nuance | Per-article |

| Plagiarism/duplication | Plagiarism engine (Copyscape/Turnitin) exact and paraphrase checks | Confirm attribution, rewrite or cite properly | Per-article |

| Sensitive content flags | Safety classifier detects hate, medical/legal flags | Senior editor/legal decides on edits/avoidance | Per-article |

Understanding these principles helps teams move faster without sacrificing quality. When implemented correctly, this approach reduces overhead by making decisions at the team level.

Personalization, Distribution, and Measurement

Prerequisites

Tools and materials

- Data: CRM export, event stream, content metadata.

- Systems: CMS, email platform, social scheduler, recommendation engine.

- AI: predictive models for scoring and topic matching (see industry playbooks like Building a Robust AI-driven Content Strategy for Enterprise for design patterns).

- Channel matching: newsletters for curated depth, social for discovery, in-app for contextual nudges.

- Engagement KPIs: CTR, `time_on_content`, scroll depth, and downstream conversions (free trial, MQL, purchase).

- Attribution: use first-touch for discovery insight, last-touch for conversion mapping, and multi-touch/assisted conversion for channel influence.

- Reporting cadence: daily for operational KPIs, weekly for channel performance, monthly for strategic shifts.

Market playbooks show AI-driven workflows reduce production friction and improve personalization velocity; adapt models incrementally and validate with A/B testing.

Channel-by-channel quick reference for distribution tactics, frequency, and KPIs

| Channel | Recommended Frequency | Best content format | Primary KPI |

|---|---|---|---|

| Email newsletter | Weekly (digest) | Long-form + curated links | Open rate / CTR |

| Social media | 3–7x/week | Short posts + link cards | Engagement rate / CTR |

| In-app recommendations | Real-time | Short summaries, CTAs | Click-through to content |

| Syndication partners | 1–4x/month | Republished articles | Referral traffic / Assisted conversions |

| RSS/aggregators | Daily | Full article feed | Clicks / New users |

Understanding these principles helps teams move faster without sacrificing quality. When distribution, personalization, and measurement are aligned, content becomes both more discoverable and more measurable.

📥 Download: AI-Driven Content Curation Checklist (PDF)

Scaling, Governance, and Ethical Considerations

Prerequisites

- Executive commitment to measurable KPIs and budget cadence.

- Baseline content pipeline: templates, taxonomy, and initial AI tooling.

- Clear legal touchpoints for data/privacy review.

- Content operations platform (CMS + scheduling).

- MLOps pipeline or access to `ML/data engineer` workflows.

- Audit logs, provenance ledger, and a licensing registry.

- Benchmarking dashboard (e.g., automated performance reporting).

Ethics, bias mitigation, and privacy-compliant practices

- Audit training and sources: sample training corpora and provenance for representation gaps; keep a ledger of datasets and their licensing.

- Human-in-the-loop for sensitive topics: require senior editor sign-off for legal, medical, or political content.

- Provenance tracking: attach source metadata to every curated item and retain licensing records for three years minimum.

- Privacy controls: strip PII at ingestion, limit model fine-tuning to compliant datasets, and document consent flows to align with platform TOS and data protection laws.

- Bias mitigation steps: run counterfactual tests, measure demographic parity in outputs, and maintain remediation tickets for systematic failures.

| Role | Primary responsibilities | Required skills | KPIs to measure |

|---|---|---|---|

| Content curator | Source selection, initial tagging, taxonomy mapping | Content research, SEO basics, CMS skills | Items curated/day, relevance score |

| Editor | Quality control, tone, legal checks | Editing, topical expertise, compliance awareness | Edit turnaround, publish-quality rate |

| ML/data engineer | Model training, feature pipelines, monitoring | Python, MLops, data pipelines | Model latency, drift rate, uptime |

| Product/analytics owner | Roadmap, ROI tracking, A/B testing | Analytics (GA4), prioritization, stakeholder mgmt | Page lift, conversion uplift, time-to-publish |

| Compliance/legal | Licensing, privacy review, TOS alignment | IP law, GDPR/CCPA knowledge | Compliance incidents, review cycle time |

Understanding these practices helps teams scale confidently while retaining editorial control. When governance is embedded early, automation becomes a force-multiplier rather than a risk vector.

Conclusion

After automating discovery, scoring, and distribution, marketing teams reclaim hours formerly spent on manual triage, focus on high-impact content, and close the loop on performance. The article showed how automated scoring surfaces shareable assets, how lightweight pilots reduce risk, and how feeding performance signals back into selection improves ROI over time. Teams concerned about quality or platform fit should start small: run a weeklong pilot, compare engagement KPIs, and iterate on scoring thresholds; this addresses integration and editorial control without large upfront change. As Jasper’s work on AI content strategy illustrates, a measured rollout accelerates learning while maintaining standards.

Take three concrete steps now: audit your content sources, define a simple relevance-and-impact scoring rule, and run a controlled pilot to measure lift. For a practical implementation path and demo-ready workflows, Explore automated content curation workflows with Scaleblogger.