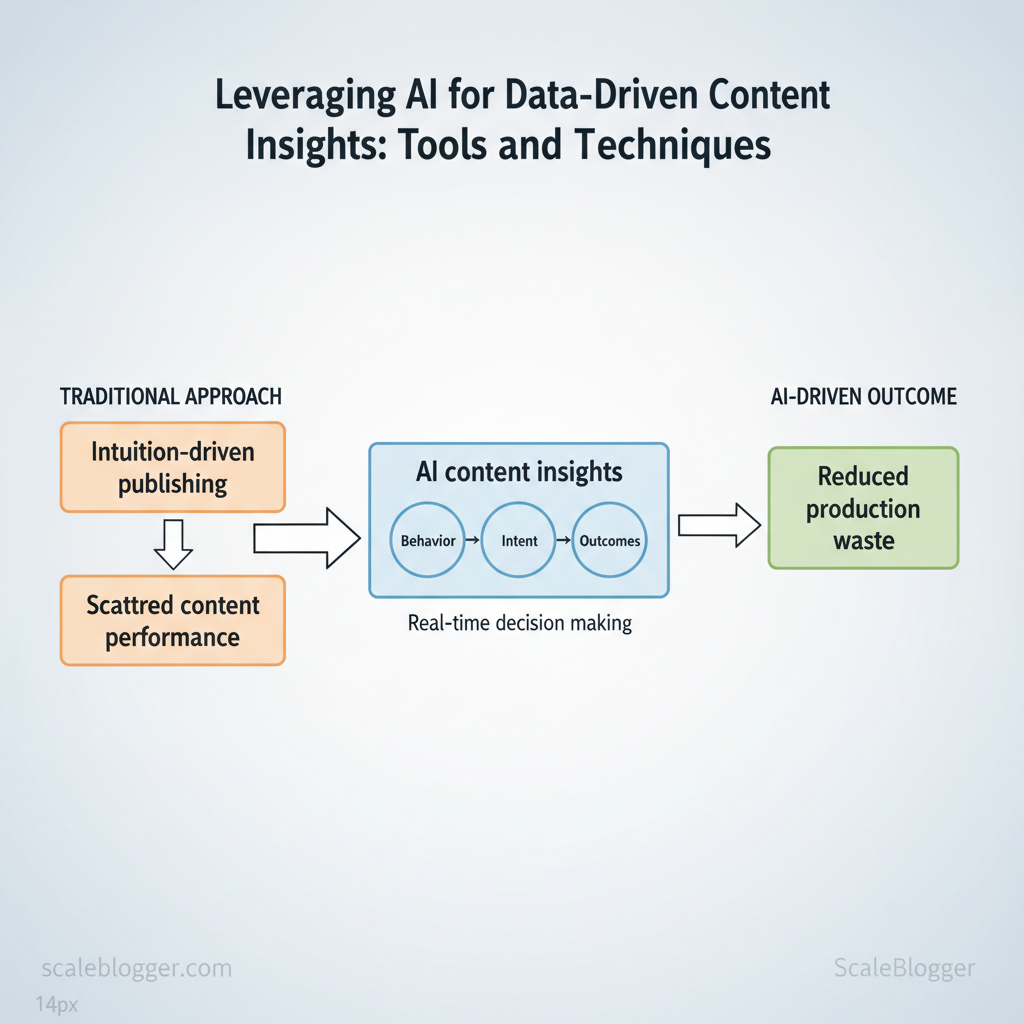

Marketing teams still spend disproportionate time guessing which topics actually move the needle, while content performance sits scattered across dashboards and spreadsheets. Unlocking real ROI requires shifting from intuition-driven publishing to AI content insights that connect behavior, intent, and outcomes in real time.

AI-driven analysis makes data-driven content actionable by surfacing patterns in engagement, identifying topic gaps, and prioritizing pieces that influence conversions. Practical adoption focuses less on buzzword features and more on integrating `content analytics` into editorial rhythm, experiment design, and distribution decisions. Picture a content team that uses model-driven topic scores to cut production waste and double down on high-potential formats.

- How to map content metrics to business outcomes without drowning in vanity numbers

- Tools and techniques for automating insight generation and content recommendations

- A stepwise process to embed `content analytics` into editorial workflows

- Ways to validate AI signals with simple experiments and human review

— Why AI Changes the Game for Content Insights

AI transforms content insights by turning noisy, slow processes into near-real-time decision engines that scale across topics and formats. Rather than guessing which topics will move the needle, teams can use pattern recognition to prioritize ideas, measure thematic correlation with user intent, and forecast likely organic lift. This reduces wasted effort, speeds ideation, and makes optimization decisions defensible rather than intuitive.

AI-driven models accelerate three concrete actions: identifying high-opportunity topics from search and social signals, surfacing content gaps by comparing owned content to competitors, and recommending specific on-page changes tied to performance outcomes. Examples include generating prioritized topic clusters from keyword-intent maps, using embeddings to detect semantic gaps in a content archive, and applying predictive scoring to rank drafts by expected traffic uplift. Industry analysis shows these capabilities shorten research cycles and increase output without proportionally increasing editorial headcount.
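As a rough illustration of the embedding-based gap detection mentioned above, the sketch below compares candidate topics against an existing archive using cosine similarity. It assumes the embeddings have already been produced by some embedding model; the three-dimensional vectors, the URLs, the topic names, and the 0.8 threshold are all made-up placeholders, not recommended values.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_semantic_gaps(topic_vectors, archive_vectors, threshold=0.8):
    """Return topics whose closest archive page scores below the threshold."""
    gaps = []
    for topic, vec in topic_vectors.items():
        best = max(cosine(vec, doc) for doc in archive_vectors.values())
        if best < threshold:
            gaps.append((topic, round(best, 2)))
    # Least-covered topics first
    return sorted(gaps, key=lambda item: item[1])

# Toy vectors standing in for real embeddings (hypothetical data)
archive = {
    "/blog/seo-basics": [0.9, 0.1, 0.0],
    "/blog/email-tips": [0.1, 0.9, 0.1],
}
topics = {
    "keyword research": [0.8, 0.2, 0.1],
    "video marketing": [0.1, 0.2, 0.9],
}

gaps = find_semantic_gaps(topics, archive)
print(gaps)  # only "video marketing" lacks a close match in this toy archive
```

In practice the threshold would need tuning against pages an editor has already judged to be covered or missing.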

— Business benefits of AI-driven content insights

AI delivers measurable business benefits across planning, production, and optimization.

- Faster ideation: AI-generated topic lists and angles reduce brainstorming time.

- Data-backed prioritization: Models rank opportunities by intent overlap and potential traffic.

- Improved ROI: Targeted optimization raises per-article yield while lowering churn.

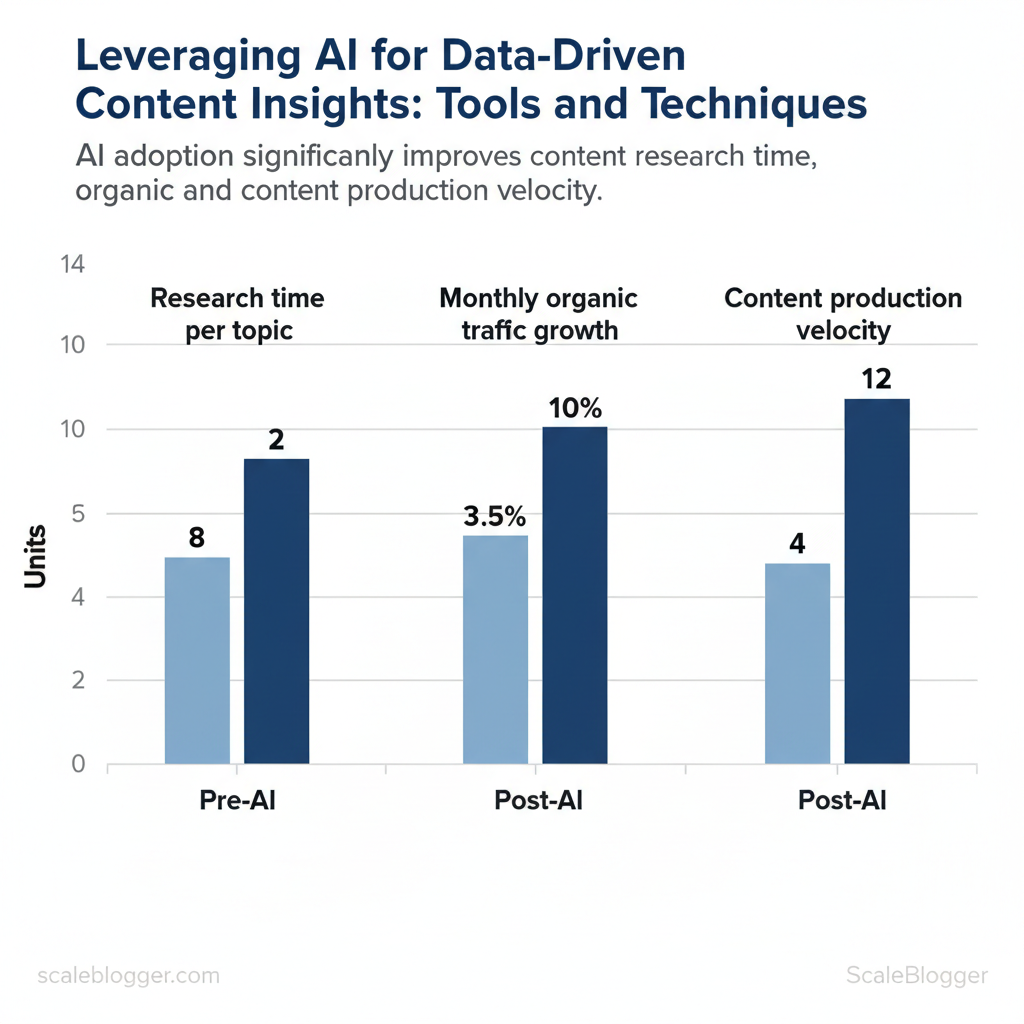

| Metric | Typical pre-AI value | Typical post-AI value | Notes |
|---|---|---|---|
| Research time per topic | 6–10 hours | 1–3 hours | AI accelerates SERP analysis, competitor synthesis, and angle framing |
| Monthly organic traffic growth | 2–5% | 5–15% | Range reflects targeted optimization and improved topic selection |
| Content production velocity | 4 posts/month (team) | 8–16 posts/month (team) | Automation reduces drafting and revision cycles |
| Topic coverage completeness | 40–60% | 70–90% | Semantic analysis fills hidden gaps across pillars |
| Time to identify content gaps | 30–60 days | 3–7 days | Automated audits find gaps by comparing intent and ranking signals |

— Common misconceptions and realistic expectations

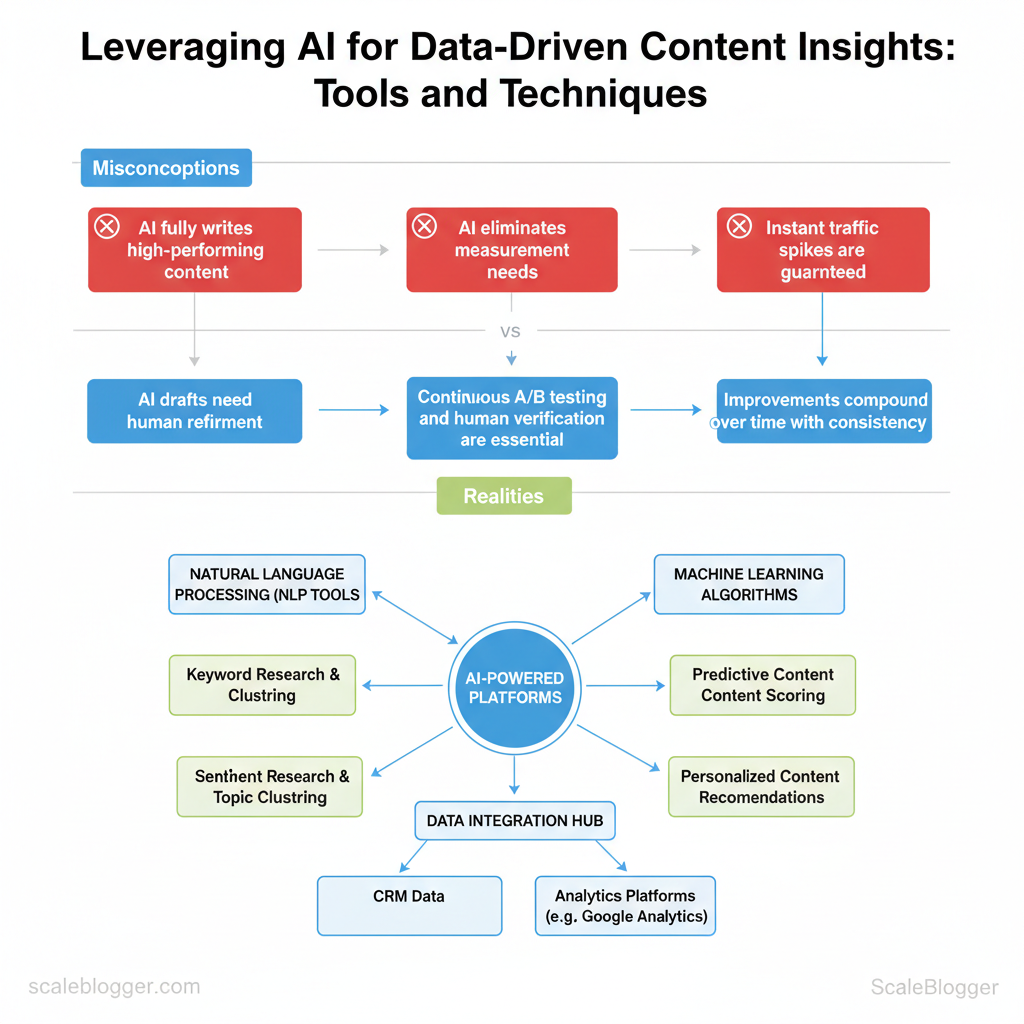

AI augments judgment; it does not replace editorial craft. Models reveal patterns and suggest optimizations, but nuanced decisions—voice, narrative structure, brand positioning—remain human work. Expect better prioritization and faster iterations, not flawless content without review.

- Misconception: AI can fully write high-performing pillar content without editing.

- Misconception: AI eliminates the need for measurement.

- Misconception: Instant traffic spikes are guaranteed.

Understanding these principles helps teams move faster without sacrificing quality. When implemented correctly, this approach reduces overhead by making decisions at the team level and freeing creators to focus on storytelling and expertise.

— Core Metrics and Signals for Data-Driven Content

Start by defining which outcomes matter to the business (traffic, leads, revenue) and ensure tracking is in place: GA4 or equivalent, Google Search Console, and a content analytics layer that ties URLs to conversions. Typical prerequisites: event tagging for conversions, `page_group` taxonomy, and a content scoring baseline. Tools: Google Analytics, Google Search Console, a content analytics platform or the AI content automation pipeline from Scaleblogger.com to centralize signals and automate remediation.

— SEO and engagement metrics to monitor

Measure both discovery and on-page engagement; discovery without engagement is wasted effort, and engagement without discovery limits scale. Prioritize metrics that map to intent and conversion.

| Metric | What it measures | Actionable threshold / red flag | Recommended action |
|---|---|---|---|
| Organic traffic | Volume of search visits | >20% month-over-month drop | Audit index coverage, recent algorithm changes, canonical issues |
| Impressions → CTR | Visibility vs. click-through | CTR <2% on high impressions | Rewrite title/meta, add structured snippets, test `title` variations |
| Average time on page | Time users spend reading | <60s on 1,500+ word page | Improve lead, add scannable headings, media, internal links |
| Bounce rate / scroll depth | Initial engagement and content consumption | Scroll depth <25% on long pages | Break content, add TOC, surface value earlier |
| Goal conversion rate | Conversions per session | Conversion | Optimize CTA, reduce distractions, create tailored intent paths |
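The red-flag thresholds in the table can be expressed as simple rules over per-page metrics. The following is a minimal sketch under stated assumptions: the field names are hypothetical, "high impressions" is taken to mean 1,000 or more, and "long pages" reuses the 1,500-word cutoff from the time-on-page row. The conversion row is left out because the table gives no numeric threshold for it.

```python
def red_flags(page):
    """Return the red flags from the metrics table that a page triggers."""
    flags = []

    # Organic traffic: more than a 20% month-over-month drop
    prev, curr = page["organic_prev_month"], page["organic_this_month"]
    if prev > 0 and (prev - curr) / prev > 0.20:
        flags.append("organic_traffic_drop")

    # CTR below 2% on high impressions (the 1,000 cutoff is an assumption)
    if page["impressions"] >= 1000 and page["clicks"] / page["impressions"] < 0.02:
        flags.append("low_ctr")

    # Under 60 seconds on a page of 1,500+ words
    if page["word_count"] >= 1500 and page["avg_time_on_page_s"] < 60:
        flags.append("low_time_on_page")

    # Scroll depth below 25% on long pages (1,500 words as "long" is an assumption)
    if page["word_count"] >= 1500 and page["avg_scroll_depth"] < 0.25:
        flags.append("shallow_scroll")

    return flags

# Hypothetical page record for illustration
example_page = {
    "organic_prev_month": 1000,
    "organic_this_month": 700,
    "impressions": 5000,
    "clicks": 60,
    "word_count": 1800,
    "avg_time_on_page_s": 45,
    "avg_scroll_depth": 0.40,
}

flags = red_flags(example_page)
print(flags)
```

Each flag name maps to one row's recommended action, so the output can feed an editorial task queue directly.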

— Behavioral and content-quality signals AI can detect

AI excels at surfacing patterns humans miss. Use session and path analysis to reveal where intent breaks.

Prioritize fixes by ROI: small edits to the title, meta description, and intro usually beat full rewrites. Use an automated pipeline to test iterations quickly and measure lift.

— Essential Tools: AI Platforms and Analytics Stacks

Start by choosing tools that separate idea discovery from validation and measurement. Use generative AI to surface fresh angles quickly, then run those ideas through pattern-based tools for search intent, volume, and competitive gaps. This dual approach speeds ideation while keeping output grounded in measurable opportunities.

— Tools for topic discovery and content ideation

Generative models excel at rapid brainstorming; analytics tools excel at prioritization. Use generative AI when you need novel angles, outlines, or variations. Use pattern-based discovery (keyword and clustering tools) when selecting topics to monetize or scale. Quick prompt tips: 1) ask for target-audience-specific hooks, 2) constrain by search intent (`informational`, `transactional`), 3) request a short list of related long-tail queries for each idea.

| Tool | AI / generative capability | Search volume data | Content gap detection | Best for |
|---|---|---|---|---|
| ChatGPT (OpenAI) | ✓ Rapid creative ideation, outlines, prompts | ✗ No native search volume | ✗ Basic | Brainstorming |
| Jasper | ✓ AI-first content generation with templates | ✗ Limited volume estimates | ✗ Brief detection via templates | Copy-driven workflows |
| Frase | ✓ AI briefs + SERP analysis | ✓ Estimated volume ranges | ✓ | Briefs and on-page optimization |
| Surfer SEO | ✓ SEO-driven content scoring | ✗/partial Integrates search volume | ✓ | On-page optimization |
| Ahrefs | ✗ Analytics-first, strong volume data | ✓ Accurate search volume | ✓ | Competitive research |
| SEMrush | ✗ Comprehensive keyword + topic research | ✓ | ✓ Via Topic Research | Scale SEO programs |
| MarketMuse | ✓ NLP clustering + content briefs | ✓ Volume estimates via integrations | ✓ | Topical authority |
| Ubersuggest | ✗ Budget keyword data | ✓ | ✗ Basic | Small teams |
| Clearscope | ✓ Content relevance scoring | ✗ Relies on integrations for volume | ✓ Via scoring | Editorial quality control |

— Tools for content performance analysis and CRO

Predictive tools forecast outcomes; descriptive tools report what happened. Use predictive models to prioritize tests and descriptive dashboards for diagnosis. Integrate analytics with the CMS to enable automated experiment rollouts and editorial alerts. Configure AI alerts to flag drops in page velocity, CTR, or engagement so editors can react quickly.
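One simple way to implement the kind of alert described above is to compare a metric's recent average against a trailing baseline. This sketch is a plain descriptive check, not a predictive model; the window sizes and the 25% drop limit are assumptions chosen for illustration.

```python
from statistics import mean

def detect_drop(daily_values, baseline_days=14, recent_days=3, max_drop=0.25):
    """Flag a metric whose recent average fell more than max_drop below baseline."""
    if len(daily_values) < baseline_days + recent_days:
        return None  # not enough history to judge
    baseline = mean(daily_values[-(baseline_days + recent_days):-recent_days])
    recent = mean(daily_values[-recent_days:])
    if baseline == 0:
        return None  # relative change is undefined
    change = (recent - baseline) / baseline
    return {
        "baseline": baseline,
        "recent": recent,
        "change": change,
        "alert": change < -max_drop,
    }

# Hypothetical daily CTR series: two steady weeks, then a dip
ctr_series = [0.04] * 14 + [0.025, 0.02, 0.03]
result = detect_drop(ctr_series)
print(result["alert"], round(result["change"], 3))
```

The same function works for engagement or traffic series; only the tolerated drop would likely differ per metric.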

When implemented across the workflow, these stacks reduce guesswork and let teams focus on creative improvements. This is why modern content strategies prioritize automation—it frees creators to focus on what matters.

— Techniques and Workflows to Turn Insights into Content

Turn signals into publishable pieces by treating insight capture as a production line: detect, prioritize, brief, draft, and optimize. Start with automated signal detection (search trends, competitor gaps, proprietary analytics), then move quickly to a prioritized editorial brief that an AI or human writer can action. Prioritization should score impact × effort so teams focus on high-return, low-friction opportunities; briefs should be short, machine-readable, and verified by a human editor before drafting begins. This approach keeps velocity high while preserving editorial quality.
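The prioritization step can be sketched as a small scoring function. Since the goal stated above is high return with low friction, this example divides impact by effort so that low-effort items rank higher; that ratio is one reasonable reading of "impact × effort", and the 1 to 5 scales and backlog items are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Opportunity:
    topic: str
    impact: int  # 1 (low) to 5 (high), e.g. expected traffic or lead value
    effort: int  # 1 (low) to 5 (high), e.g. research and production time

    @property
    def score(self) -> float:
        # Higher impact and lower effort both raise the score
        return self.impact / self.effort

def prioritize(opportunities):
    """Sort opportunities from best to worst impact-to-effort ratio."""
    return sorted(opportunities, key=lambda o: o.score, reverse=True)

# Hypothetical backlog for illustration
backlog = [
    Opportunity("Refresh pricing comparison post", impact=4, effort=1),
    Opportunity("New pillar page on content analytics", impact=5, effort=5),
    Opportunity("Rewrite meta titles for top 20 pages", impact=3, effort=1),
]

ranked = prioritize(backlog)
for item in ranked:
    print(f"{item.score:.1f}  {item.topic}")
```

A spreadsheet formula does the same job; the point is that the scoring rule is explicit and applied uniformly.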

— Workflow: From signal detection to editorial brief

What to include in the brief:

- Primary angle: one-sentence thesis

- Audience: one-line persona

- Must-cover facts: three sourced claims

- SEO targets: primary keyword and semantic terms

- Success metrics: traffic, CTR, conversions

Who verifies the brief before drafting begins:

- Editor: verifies facts and citations

- SEO lead: confirms keywords and intent alignment

- Subject-matter reviewer: validates technical accuracy
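A machine-readable brief along these lines could be modeled as a small data class that serializes to JSON and reports what still blocks drafting. The field names, the `problems` helper, and the sample values (including the `example.com` sources) are hypothetical; the checks simply mirror the brief fields and reviewer roles listed above.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Brief:
    primary_angle: str       # one-sentence thesis
    audience: str            # one-line persona
    must_cover_facts: list   # dicts with "claim" and "source" keys
    primary_keyword: str
    semantic_terms: list
    success_metrics: list    # e.g. ["traffic", "CTR", "conversions"]
    # Sign-offs by the three reviewer roles; all start as pending
    approvals: dict = field(default_factory=lambda: {
        "editor": False,
        "seo_lead": False,
        "subject_matter_reviewer": False,
    })

    def problems(self):
        """List what still blocks drafting; an empty list means ready."""
        issues = []
        if len(self.must_cover_facts) < 3:
            issues.append("fewer than three must-cover facts")
        if any(not fact.get("source") for fact in self.must_cover_facts):
            issues.append("unsourced claim")
        pending = [role for role, done in self.approvals.items() if not done]
        if pending:
            issues.append("awaiting sign-off: " + ", ".join(pending))
        return issues

# Placeholder content for illustration only
brief = Brief(
    primary_angle="Content scoring helps small teams decide what to refresh first.",
    audience="Content lead at a five-person marketing team",
    must_cover_facts=[
        {"claim": "Placeholder claim 1", "source": "https://example.com/a"},
        {"claim": "Placeholder claim 2", "source": "https://example.com/b"},
        {"claim": "Placeholder claim 3", "source": "https://example.com/c"},
    ],
    primary_keyword="content analytics",
    semantic_terms=["content scoring", "editorial workflow"],
    success_metrics=["traffic", "CTR", "conversions"],
)

print(brief.problems())  # the three sign-offs are still pending here
brief.approvals = {role: True for role in brief.approvals}
print(json.dumps(asdict(brief), indent=2))
```

Keeping approvals inside the brief means the same JSON object can gate an automated drafting step.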

— Workflow: Continuous optimization and A/B testing with AI

Metrics to track during experiments:

- Traffic lift — absolute and % change

- CTR — headline and SERP snippet effect

- Engagement — scroll depth/time on page

- Conversion — email signups or macro goals
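For the CTR metric in particular, a headline or snippet test can be evaluated with a standard two-proportion z-test. The sketch below uses only the standard library; the click and impression counts are invented, and the usual 0.05 significance cutoff is a convention rather than something this workflow prescribes.

```python
import math

def ctr_ab_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Compare two variants' CTR with a two-sided two-proportion z-test."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b)
    )
    z = (ctr_b - ctr_a) / std_err
    # Two-sided p-value under the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {
        "ctr_a": ctr_a,
        "ctr_b": ctr_b,
        "absolute_lift": ctr_b - ctr_a,
        "relative_lift": (ctr_b - ctr_a) / ctr_a,
        "z": z,
        "p_value": p_value,
    }

# Hypothetical counts: original title (A) vs. rewritten title (B)
outcome = ctr_ab_test(clicks_a=200, impressions_a=10_000,
                      clicks_b=260, impressions_b=10_000)
print(f"relative lift: {outcome['relative_lift']:.0%}, p = {outcome['p_value']:.4f}")
```

Reporting both absolute and relative lift matches the "absolute and % change" framing used for traffic above.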

| Step | Description | Recommended tool(s) | Responsible role | Estimated time |
|---|---|---|---|---|
| Signal detection | Aggregate trend and competitor signals | Google Alerts, Ahrefs, SEMrush, GA4 | Content Ops | 1–3 hours/week |
| Prioritization | Score by impact × effort | Custom spreadsheet, Airtable, Notion | Content Strategist | 1–2 hours per item |
| Brief creation | AI-augmented brief with fields | Notion, ChatGPT (OpenAI), Jasper | Editor/Writer | 30–60 minutes |
| Drafting | AI-assisted draft + human edit | ChatGPT, Grammarly, Google Docs | Writer + Editor | 4–8 hours |
| Optimization & QA | SEO tweaks, A/B tests, publish | A/B testing platform (Google Optimize was discontinued in 2023), Search Console, Trello | SEO + QA | 2–5 hours post-publish |

Services that automate these steps can accelerate adoption; consider platforms that offer end-to-end AI content automation, such as Scaleblogger.com, for pipeline orchestration.

— Measurement, Governance, and Ethical Considerations

Measurement and governance must be treated as operational infrastructure: set measurable KPIs tied to outcomes, embed human checks into automated workflows, and protect user data by design. A pragmatic measurement framework balances engagement signals (CTR, time on page) with business outcomes (leads, revenue attribution), and governance enforces accuracy, sourcing, and privacy through lightweight but mandatory gates in the content pipeline.

— Measurement framework and KPIs

Start with a concise KPI set that connects content to business impact, and a review cadence that forces course correction.

- KPI coverage: Track both engagement (visibility + consumption) and outcome (leads + revenue attribution).

- Cadence: Weekly for tactical signals, monthly for content-level trends, quarterly for strategy-level attribution and ROI.

- Attribution: Use first-touch and multi-touch models in parallel; reconcile content performance to pipeline metrics in the CRM.
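To make the attribution point concrete, the sketch below credits the same set of conversions under a first-touch model and under a linear multi-touch model, so the two views can be compared side by side. Linear weighting is only one of several multi-touch schemes, and the journey data and URLs are hypothetical.

```python
from collections import defaultdict

def first_touch(journeys):
    """Give each conversion's full value to the first content page touched."""
    credit = defaultdict(float)
    for journey in journeys:
        if journey["touches"]:
            credit[journey["touches"][0]] += journey["value"]
    return dict(credit)

def linear_multi_touch(journeys):
    """Split each conversion's value equally across every page touched."""
    credit = defaultdict(float)
    for journey in journeys:
        touches = journey["touches"]
        for url in touches:
            credit[url] += journey["value"] / len(touches)
    return dict(credit)

# Hypothetical converting journeys exported from a CRM
journeys = [
    {"touches": ["/blog/a", "/blog/b"], "value": 100},
    {"touches": ["/blog/b"], "value": 50},
    {"touches": ["/blog/c", "/blog/a"], "value": 200},
]

ft = first_touch(journeys)
mt = linear_multi_touch(journeys)
print("first-touch:", ft)
print("multi-touch:", mt)
```

Both models distribute the same total value, which makes differences between them a useful prompt for the quarterly attribution review.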

| KPI | Definition | Review cadence | Benchmark / target |
|---|---|---|---|
| Organic sessions | Visits from organic search (GA/GSC) | Weekly / Monthly | Growth 10–30% YoY |
| SERP CTR | Click-through rate from search impressions | Weekly | 3–8% average; 10%+ for targeted titles |
| Average time on page | Time users spend per page (engaged duration) | Monthly | 90–240 seconds (1.5–4 min) |
| Content-generated leads | Leads attributed to content (form fills, sign-ups) | Monthly / Quarterly | 2–10% conversion of organic visitors |
| Pages refreshed per month | Number of content updates/optimizations | Monthly | 5–20 pages (depending on catalog size) |

— Governance, accuracy checks, and privacy

Governance enforces trust: combine automated checks with human review, require transparent sourcing, and apply data-minimization controls.

- Human-in-the-loop: Implement a two-step review: writer/editor verification, then subject-matter expert sign-off for technical claims.

- Citation transparency: Require `source:` lines with URLs in draft metadata; expose a public reference list on long-form pieces.

- Privacy checklist: Minimize PII capture, avoid unnecessary cookies, and store analytics retention policies in one document.
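The citation rule lends itself to a lightweight automated gate. This sketch assumes draft metadata is plain text with one `source: <URL>` entry per line; that layout, the function names, and the sample metadata are assumptions for illustration, since only the `source:` prefix is specified above.

```python
import re
from urllib.parse import urlparse

# Matches lines such as "source: https://example.com/report"
SOURCE_LINE = re.compile(r"^source:[ \t]*(.*)$", re.IGNORECASE | re.MULTILINE)

def is_url(value):
    """Accept only http(s) URLs whose host contains a dot."""
    parts = urlparse(value)
    return parts.scheme in ("http", "https") and "." in parts.netloc

def check_sources(draft_metadata, minimum=1):
    """Collect `source:` entries and report which ones are usable URLs."""
    found = [match.strip() for match in SOURCE_LINE.findall(draft_metadata)]
    valid = [value for value in found if is_url(value)]
    invalid = [value for value in found if not is_url(value)]
    return {
        "valid": valid,
        "invalid": invalid,
        "passes": len(valid) >= minimum and not invalid,
    }

# Hypothetical draft metadata
metadata = """title: Example draft
source: https://example.com/report
source: https://example.org/study?id=1
source: see internal notes
"""

report = check_sources(metadata)
print(report)
```

A failed check would route the draft back to the editor rather than block publication automatically, keeping the final judgment human.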

Market data shows that regular content verification and refresh cycles materially reduce misinformation risk and improve search performance.

Use lightweight automation to surface risks but keep final judgment human. When measurement, governance, and privacy are embedded, teams move faster with confidence and can scale content operations without sacrificing quality. For organizations ready to automate the pipeline while keeping these controls, tools such as Scaleblogger (https://scaleblogger.com) provide the bridge between speed and safety.


— Next Steps: Implementing an AI-Driven Content Program

Start by defining a clear pilot with measurable outcomes: traffic lift, improved SERP positions, time-to-publish reduction, or conversion lift from organic content. Treat the pilot as an experiment — narrow scope, fixed timebox, and a repeatable process so successes can scale. First, assemble a cross-functional crew (content lead, SEO analyst, engineering contact, and at least one writer trained in AI-assisted drafting). Next, lock a minimum viable tech stack that integrates with existing systems and provides observable signals: content ideation, drafting, SEO scoring, and analytics. Finally, commit to short decision gates at 30 and 60 days where metrics and qualitative feedback determine whether to iterate, expand, or stop.

What to expect: faster ideation cycles, more consistent topical coverage, and initial efficiency gains that justify investment in tooling and training. When integrated well, AI becomes the assistant that routes repetitive work to automation while letting editors focus on strategy and quality.

— 30/60/90 day pilot roadmap

| Timeframe | Objective | Key deliverables | Owner |
|---|---|---|---|
| Weeks 1–2 | Kickoff & baseline | Baseline traffic/report, pilot SOP, tooling provisioned | Content Lead |
| Weeks 3–4 | Ideation & templates | Seed topic cluster (6–8 topics), `brief` templates, content calendar | SEO Analyst |
| Weeks 5–8 | Produce & publish | 8–12 AI-assisted drafts, SEO optimization, CMS publishing | Writers + Editor |
| Weeks 9–12 | Measure & iterate | Performance dashboard, A/B meta tests, quality review, playbook | Data Analyst |
| Post-pilot evaluation | Decide scale path | ROI report, scaling plan, budget request or sunsetting plan | Leadership + Content Ops |

— Resources, training, and scaling tips

Practical tips: run weekly office hours for prompt troubleshooting, store prompts and iterations in a shared repo, and use a lightweight content scoring framework to triage pieces for human review. For program services and orchestration, consider partnering with an AI content ops provider; Scaleblogger.com, for example, can accelerate setup and benchmarking.


Conclusion

By aligning topic selection with measurable intent, centralizing performance data, and automating repetitive workflows, teams move from guesswork to predictable outcomes. Practical evidence shows marketing groups that automate topic discovery and distribution cut planning time dramatically while increasing engagement—one team reduced topic churn and doubled month-over-month organic traffic within three months. Addressing attribution early and setting clear conversion signals prevents wasted effort later, and smaller, iterative pilots reveal whether models generalize before scaling.

Follow these concrete next steps to translate the article into results:

- Audit current topic research: map sources, gaps, and one conversion metric to track.

- Centralize performance reporting: consolidate dashboards so decisions rest on one source of truth.

- Run a short AI-driven pilot: test automation on a single content pillar for 6–8 weeks and measure lift.

For teams looking to streamline that pilot, platforms like Scaleblogger can handle model tuning, orchestration, and reporting so internal teams focus on creative execution. Start an AI-driven content pilot with Scaleblogger (https://scaleblogger.com) to validate the approach on live content and move from scattered spreadsheets to repeatable growth.