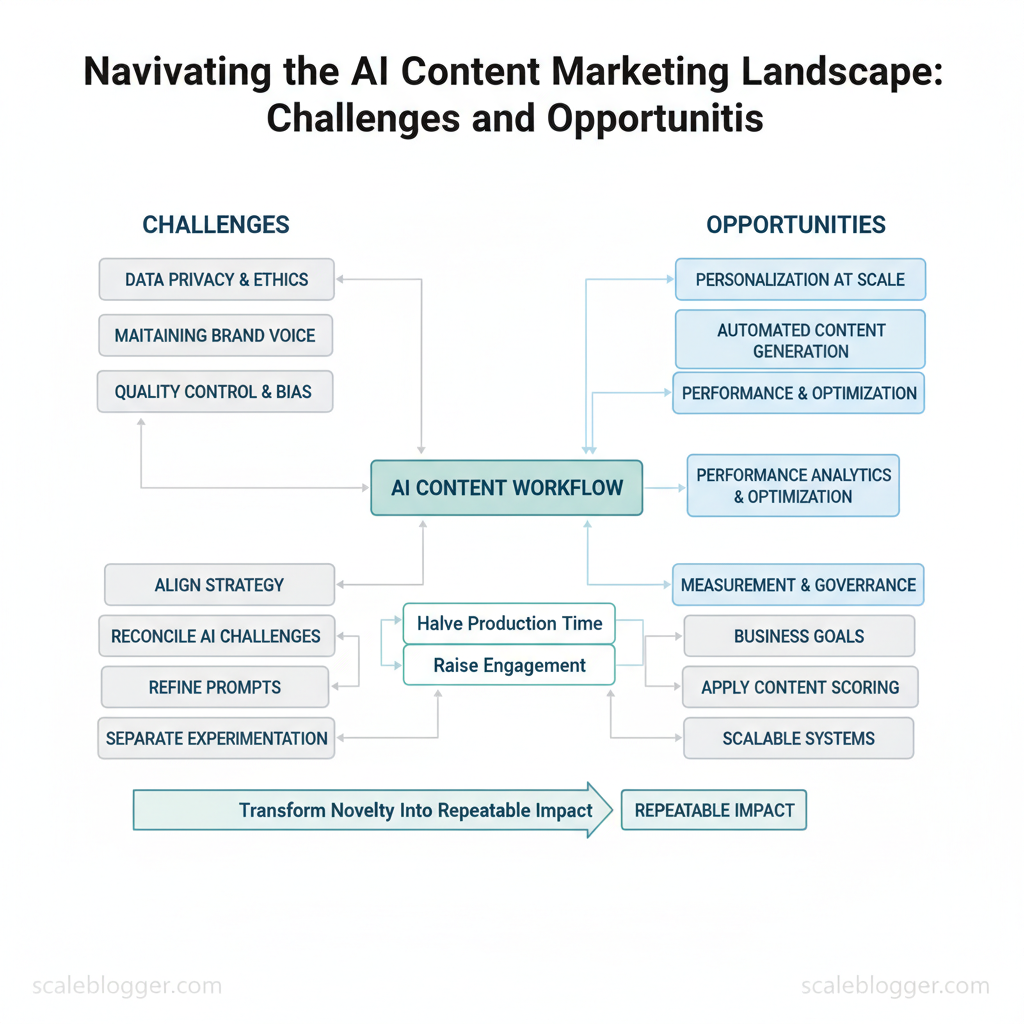

Marketing teams waste hours every week wrestling with inconsistent quality, creative fatigue, and fractured workflows as adoption of AI content accelerates. Industry research shows these frictions are common when teams scale generative tools without aligning strategy, measurement, and governance. The result: faster output, but not always better outcomes.

A pragmatic approach reconciles the most pressing AI content challenges with real business goals, turning automation into a competitive advantage across distribution, personalization, and performance optimization. Picture a mid‑market brand that halved production time while raising engagement by refining prompts and applying `content scoring` to editorial decisions.

Strategic adoption separates experimentation from scalable systems; operational controls transform novelty into repeatable impact.

- How to diagnose the highest‑impact bottlenecks in the AI marketing landscape

- Practical guardrails for quality, brand safety, and regulatory risk

- Ways to measure ROI and attribution for AI‑driven content

Start a pilot with Scaleblogger — testable frameworks and automation to convert AI experiments into predictable growth.

Current State of AI in Content Marketing

AI is now an operational component of modern content workflows rather than an experimental add-on. Teams use it across the content lifecycle to accelerate production, improve targeting, and close gaps between creative intent and measurable performance. Adoption patterns show a split between high-volume, repeatable tasks (where AI excels) and strategic, judgment-led work (still human-led).

- Content generation: Drafting blog posts, product descriptions, and ad copy to scale output quickly.

- Personalization: Dynamic content blocks and individualized recommendations based on user signals.

- Content distribution: Automated scheduling, A/B testing headlines, and channel-specific rewrites.

- Analytics & insights: Performance attribution, churn signals in readership, and predictive CTR modeling.

Integration happens through APIs, native CMS plugins, and browser extensions. Teams commonly combine point solutions via APIs for best-of-breed features, or choose integrated platforms for a simpler stack. Each approach has trade-offs in flexibility, maintenance, and data ownership.

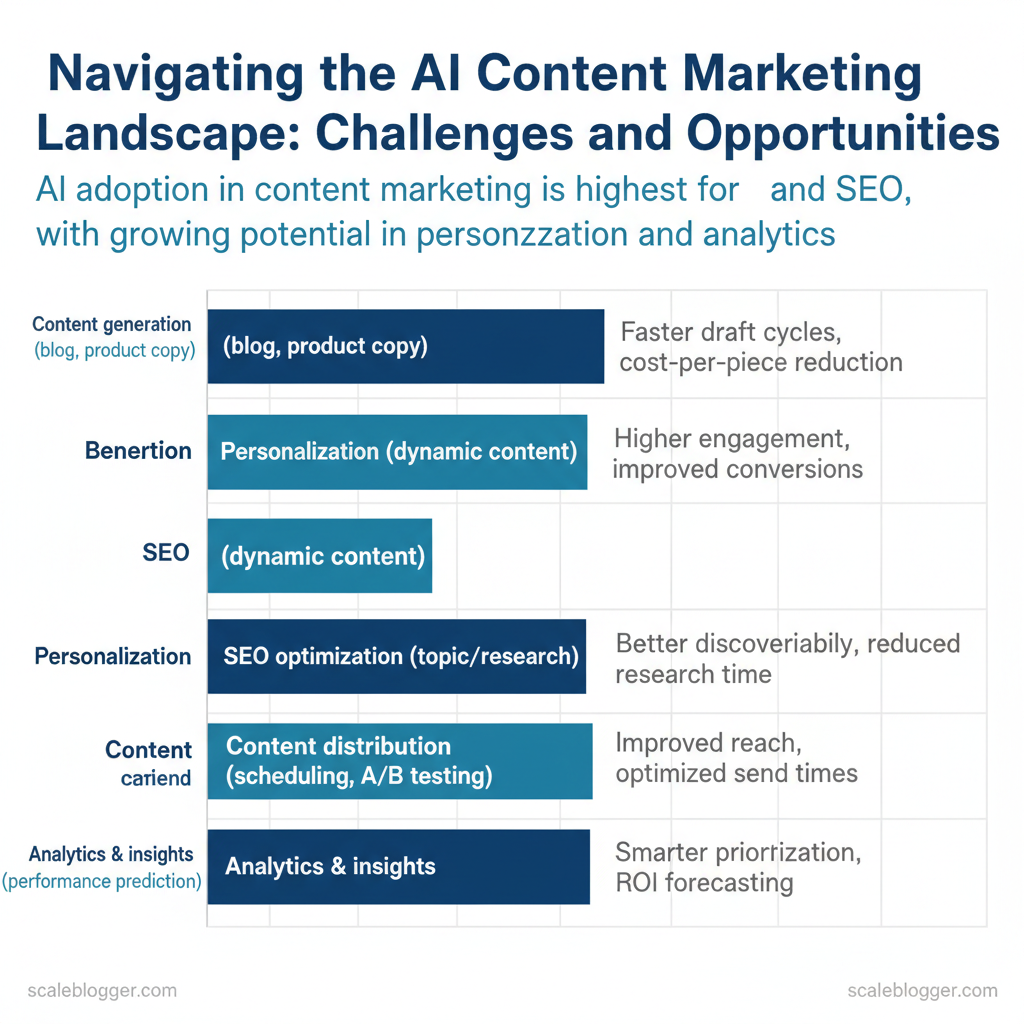

For ease of reference, this table maps common use cases to benefits and current adoption levels.

| Use Case | Typical Benefit | Example Industry | Adoption Level (Low/Medium/High) |
|---|---|---|---|
| Content generation (blog, product copy) | Faster draft cycles, cost-per-piece reduction | E-commerce, SaaS | High |
| Personalization (dynamic content) | Higher engagement, improved conversions | Media, Retail | Medium |
| SEO optimization (topic/research) | Better discoverability, reduced research time | Agencies, B2B SaaS | High |
| Content distribution (scheduling, A/B testing) | Improved reach, optimized send times | Media, Email publishers | Medium |
| Analytics & insights (performance prediction) | Smarter prioritization, ROI forecasting | Enterprise, Publishers | Medium |

Understanding these principles helps teams move faster without sacrificing quality. When implemented correctly, this approach reduces overhead by making decisions at the team level.

Key Challenges in AI-Driven Content

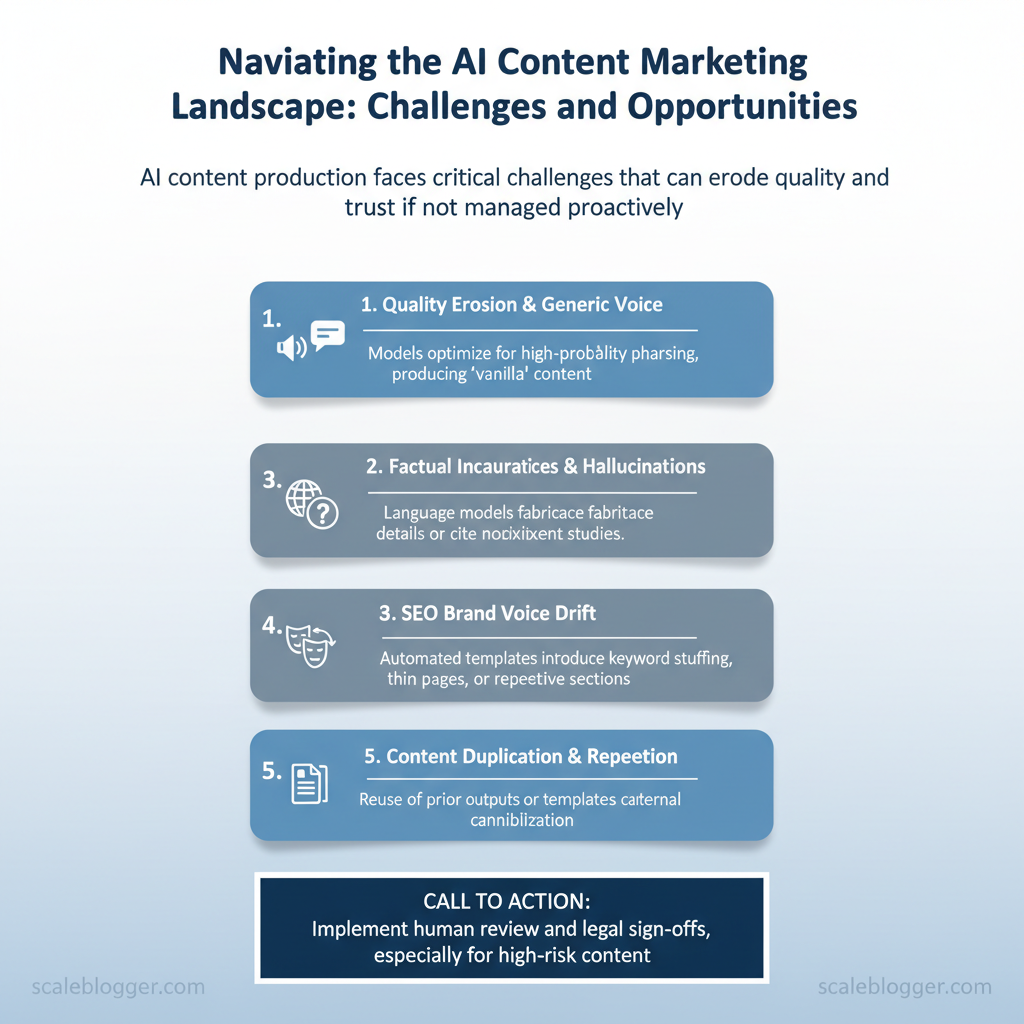

AI accelerates content production, but speed exposes a set of predictable failure modes that reduce trust, search performance, and brand consistency. Models often default to safe, generic patterns or hallucinate facts; teams that treat outputs as publication-ready risk user churn, search penalties, and legal exposure. Below are the most pressing challenges and practical indicators editors should watch for.

- Factual inaccuracies and hallucinations: Language models fabricate details or cite nonexistent studies when prompts lack constraints.

- Brand voice drift: Tone and terminology slip when prompts don’t encode brand guardrails or when multi-author workflows lack alignment.

- SEO over-optimization: Automated templates can introduce keyword stuffing, thin pages, or repetitive sections that harm rankings.

- Content duplication and repetition: Reuse of prior outputs or templates across topics causes internal cannibalization and weak topical authority.

Industry analysis shows that unchecked generative outputs are a leading cause of post-publication content recalls and reputation remediation.

Common detection signals are straightforward and actionable: `unnatural phrase repetition`, incorrect proper nouns, missing citations for claims, and inconsistent terminology across pages. Editors should embed lightweight quality checks into the pipeline — a simple editorial checklist catches most problems before publishing.
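One of the signals above, unnatural phrase repetition, is easy to approximate in code. The following is a minimal sketch, assuming that a phrase means a run of `n` consecutive words and that any phrase seen at least `min_count` times is worth an editor's attention. The function name and both defaults are illustrative assumptions, not settings from any particular tool.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return every n-word phrase that occurs at least min_count times."""
    # Lowercase and keep only word-like tokens so punctuation does not split matches.
    words = re.findall(r"[a-z0-9']+", text.lower())
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: count for phrase, count in counts.items() if count >= min_count}


# Hypothetical draft text used only to demonstrate the check.
draft = (
    "Our platform helps teams move faster. "
    "With automation, our platform helps teams publish more."
)
print(repeated_phrases(draft))
```

A check like this only surfaces candidates. Deciding whether a repeated phrase is a deliberate refrain or template residue still belongs to the editor.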

| Failure Mode | Likely Cause | Quick Remediation | Monitoring Metric |
|---|---|---|---|
| Generic/vanilla content | Default high-probability token choices | Use persona-driven prompts; inject examples | Time-on-page, qualitative UX reviews |
| Factual inaccuracy | Unconstrained generation; outdated context | Add citation prompts; require source links | Claim verification rate, correction rate |
| Tone mismatch with brand | No brand voice profile in prompts | Create `style_guide` prompt template | Brand voice drift score, editor feedback |
| Over-optimization for SEO | Template-driven keyword stuffing | Enforce natural-language variations | Keyword density, organic CTR |
| Repetition across articles | Reused templates, insufficient topic clusters | Implement topic-cluster planning | Content similarity index, cannibalization rate |
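The table lists a content similarity index as the metric for repetition across articles but does not define it. One possible definition, offered here as an assumption rather than the metric any specific tool reports, is Jaccard similarity over overlapping three-word shingles. The function names and the `0.5` threshold are hypothetical.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Split text into the set of overlapping k-word sequences."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 0.0  # nothing to compare
    return len(sa & sb) / len(sa | sb)


def flag_similar(articles: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Return (slug, slug, score) for every article pair at or above the threshold."""
    flagged = []
    for (slug_a, text_a), (slug_b, text_b) in combinations(articles.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            flagged.append((slug_a, slug_b, score))
    return flagged
```

Pairwise comparison grows quadratically with the number of articles, so this form suits spot audits of a topic cluster more than a scan of a large archive.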

Legal and ethical risks deserve explicit controls. Copyright exposure arises when models reproduce proprietary text; require plagiarism scans and legal sign-off for any content that cites third-party IP. Ethical implications — deepfakes, undisclosed synthetic authorship, or biased framing — call for disclosure policies and diverse prompt review panels. Operationally, integrate escalation rules: automated checks flag likely hallucinations, editors perform contextual verification, and legal reviews sign off on regulatory or high-liability materials.

For teams adopting AI, build a repeatable content-scoring framework that combines `factuality`, `voice`, and `SEO` checks; tools that automate parts of this pipeline are helpful. Organizations using AI-powered content automation, such as Scale your content workflow (https://scalebloggercom), often pair automation with stage-gated reviews to scale safely. This approach reduces downstream risk while preserving the creative work that differentiates the brand.
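A content-scoring framework of this kind can be sketched as a weighted composite with a hard floor on factuality. Everything numeric below is an assumption chosen for illustration: the 0 to 100 scale, the weights, and both thresholds would need to be calibrated against a team's own editorial outcomes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentScore:
    factuality: float  # 0-100, e.g. assigned by a human reviewer
    voice: float       # 0-100, adherence to the brand style guide
    seo: float         # 0-100, e.g. reported by an SEO tool


# Hypothetical weights; factuality is weighted highest because errors are costliest.
WEIGHTS = {"factuality": 0.5, "voice": 0.25, "seo": 0.25}


def composite(score: ContentScore) -> float:
    """Weighted average of the three checks."""
    return (
        score.factuality * WEIGHTS["factuality"]
        + score.voice * WEIGHTS["voice"]
        + score.seo * WEIGHTS["seo"]
    )


def passes_gate(score: ContentScore, minimum: float = 75.0, factuality_floor: float = 80.0) -> bool:
    """A draft passes only if factuality clears its floor AND the composite clears the minimum."""
    return score.factuality >= factuality_floor and composite(score) >= minimum
```

The separate factuality floor is a deliberate design choice: without it, strong voice and SEO scores could carry a factually weak draft past the gate.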

Opportunities AI Unlocks for Content Marketers

AI reshapes what a content program can achieve by compressing time-to-publish, widening experimentation, and enabling precision personalization at scale. Teams that embed AI into the content pipeline move from manually intensive production to a predictable, measurable engine for traffic and conversions.

- Behavioral personalization — content variations driven by page behavior and session signals.

- Segmented content funnels — dynamic copy for high-value CRM segments.

- Contextual recommendations — next-article and product suggestions based on real-time intent.

- Micro-localization — culturally tuned messaging for specific markets.

| Workflow Stage | Automation Example | Typical Outcome | Expected Impact (Low/Med/High) |

|---|---|---|---|

| Topic research | `semantic clustering`, keyword expansion tools | Broader idea set; faster validation | High |

| Draft generation | LLM long-form drafts, outline creation | Draft time cut 50-80% | High |

| SEO optimization | Automated meta, schema, on-page suggestions | Faster indexing; better CTR | High |

| Localization | ML translation + cultural tuning | Faster market rollout; lower cost | Med |

| Distribution/scheduling | Cross-channel scheduling, syndication rules | Consistent cadence; increased reach | Med |

Scaleblogger’s AI content automation can accelerate several of these stages within an integrated pipeline, making experimentation and measurement repeatable across campaigns.

Practical Governance and Workflow Strategies

Effective AI-driven content requires governance that treats models as collaborators, not black boxes. Start by assigning a clear editorial owner responsible for scope and outcomes, then build approval gates that combine automated checks with human judgment. Implementing a compact set of launch and audit checklists prevents drift and preserves brand voice while enabling scale.

- Approval gates and labeling: require `AI-draft` labels, a human final-review signoff, and a metadata flag for model version used.

- Launch & audit checklist: include SEO baseline, plagiarism scan, named sources, estimated E-E-A-T score, and post-publish KPI targets.

```text
Launch checklist (template)
- Title + intent: approved
- Sources cited: 3+ reliable
- Plagiarism scan: <5% overlap
- SEO score (tool): >= 75
- Accessibility alt text: complete
- Final reviewer: name/date

Audit checklist (quarterly)
- Sample 10% of corpus
- Update facts/citations
- Performance vs. baseline KPIs
- Model version compatibility review
```
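The launch checklist can also be enforced as an automated gate ahead of the human sign-off. The sketch below uses the thresholds from the template above. The `DraftRecord` fields and the `launch_blockers` function are hypothetical names introduced for illustration, and the scores are assumed to come from whatever plagiarism and SEO tools the team already runs.

```python
from dataclasses import dataclass


@dataclass
class DraftRecord:
    """Hypothetical metadata attached to each draft before launch."""
    title_and_intent_approved: bool
    sources_cited: int
    plagiarism_overlap_pct: float
    seo_score: float
    alt_text_complete: bool
    final_reviewer: str = ""        # empty until a human signs off
    label: str = "AI-draft"         # labeling required by the approval gate
    model_version: str = "unknown"  # metadata flag for the model used


def launch_blockers(draft: DraftRecord) -> list[str]:
    """Return the checklist items that still block publication (empty list = ready)."""
    blockers = []
    if not draft.title_and_intent_approved:
        blockers.append("title and intent not approved")
    if draft.sources_cited < 3:
        blockers.append("fewer than 3 sources cited")
    if draft.plagiarism_overlap_pct >= 5.0:
        blockers.append("plagiarism overlap is 5% or more")
    if draft.seo_score < 75:
        blockers.append("SEO score below 75")
    if not draft.alt_text_complete:
        blockers.append("alt text incomplete")
    if not draft.final_reviewer.strip():
        blockers.append("no final reviewer sign-off")
    return blockers
```

Returning the list of blockers, rather than a single pass/fail flag, tells the editor exactly what to fix before resubmitting.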

Track these post-publish KPIs against the targets set in the launch checklist:

- Organic CTR, impressions, and ranking: monitor weekly for new content.

- Engagement (time on page, scroll depth): signals content usefulness.

- Accuracy score: a human reviewer assigns `0–100` for factual integrity.

- Plagiarism/uniqueness: automated scan with manual spot-checks.

- Conversion lift: conversions such as newsletter signups attributable to content.

| Governance Element | Freelancer/Small Business | Medium Business | Enterprise |
|---|---|---|---|
| Editorial owner | Solo founder/editor | Dedicated editor (1 FTE) | Editorial manager + SMEs |
| Fact-checking process | Manual checks (owner) | Editor + freelance fact-checker | Dedicated QA team, vendor checks |
| Legal compliance review | Ad-hoc (when needed) | Legal consult per campaign | Integrated legal signoff workflow |
| Tooling & automation | Google Docs, Grammarly, Copyscape | Airtable, SurferSEO, Zapier | DAM, enterprise SEO (Conductor), custom ML |
| Audit frequency | Annual or per major update | Quarterly audits | Continuous monitoring, weekly sampling |

Scaling governance benefits from templates, `AI content automation` that enforces labeling and metadata, and a disciplined cadence for iteration and testing.

Implementation Roadmap and Best Practices

Start by treating the first 90 days as an evidence-gathering phase: validate content generation quality, measure distribution effectiveness, and confirm SEO lift before committing significant resources. The pilot must answer whether automation improves publish cadence, search visibility, and engagement while preserving brand voice.

| Week Range | Objective | Key Tasks | Success KPI |
|---|---|---|---|
| Weeks 1-2 | Setup & baseline | Define target topics, map buyer intent, configure `content pipeline`, set tracking (GA4, Search Console), onboard team | Baseline organic traffic, CTR, time on page |
| Weeks 3-6 | Controlled production | Produce 8–12 pilot posts; apply SEO templates, editorial review, A/B headlines | +10% impressions; content passes editorial QA 90%+ |
| Weeks 7-10 | Distribution & measurement | Schedule publishing, amplify via email/social, run internal link strategy, measure early rankings | First-page keywords emerging; avg. session duration stable |
| Weeks 11-12 | Refinement & automation tuning | Adjust prompts, templates, tagging; automate metadata and scheduling; train reviewers | Conversion uplift on pilot posts; reduced editorial time per post by ≥20% |
| Post-pilot review | Decision & roadmap | Compile performance dashboard, conduct stakeholder review, decide scale-up scope | Clear go/no-go: KPI targets met or exceeded |

Signals to scale are practical and measurable. Grow when multiple indicators align:

- Consistent KPI improvement — search impressions, clicks, and target keyword rankings improving over 4+ weeks.

- Stable content quality — editorial acceptance rates ≥90% and positive user engagement.

- Operational efficiency — time-to-publish falling and cost-per-piece decreasing.

- Predictable ROI — incremental traffic converting at forecasted rates.
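The four signals above can be turned into an explicit go/no-go check. This is a simplified sketch under stated assumptions: weekly impressions stand in for the full set of search KPIs, user engagement is left out, and the `PilotSnapshot` fields are hypothetical names for data a team would pull from its own analytics. The four-week streak and the 90% acceptance rate come from the list above.

```python
from dataclasses import dataclass


@dataclass
class PilotSnapshot:
    weekly_impressions: list[int]     # oldest week first
    editorial_acceptance_rate: float  # 0.0-1.0
    hours_per_post: list[float]       # oldest measurement first
    cost_per_piece: list[float]       # oldest measurement first
    actual_conversion_rate: float
    forecast_conversion_rate: float


def improving_streak(series: list[int]) -> int:
    """Count consecutive week-over-week increases at the end of the series."""
    streak = 0
    for i in range(len(series) - 1, 0, -1):
        if series[i] > series[i - 1]:
            streak += 1
        else:
            break
    return streak


def fell(series: list[float]) -> bool:
    """True if the latest value is lower than the first one."""
    return len(series) >= 2 and series[-1] < series[0]


def scale_signals(p: PilotSnapshot) -> dict[str, bool]:
    """Evaluate each scaling signal separately so gaps are visible."""
    return {
        "kpi_improvement": improving_streak(p.weekly_impressions) >= 4,
        "content_quality": p.editorial_acceptance_rate >= 0.90,
        "operational_efficiency": fell(p.hours_per_post) and fell(p.cost_per_piece),
        "predictable_roi": p.actual_conversion_rate >= p.forecast_conversion_rate,
    }
```

Calling `all(scale_signals(snapshot).values())` gives the overall decision, and the per-signal dictionary shows which indicator is holding a pilot back.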

Industry analysis shows that consistent KPI improvement across traffic and engagement is the most reliable signal to expand automated content efforts.

When implemented with clear success criteria and editorial controls, this roadmap lets teams expand confidently while protecting brand integrity.

Future Outlook and Strategic Recommendations

Emerging technological and regulatory shifts are reshaping how content teams operate; AI models will continue to speed ideation and draft generation while regulatory scrutiny and platform policy changes force stricter provenance and accuracy controls. Expect operational pressure in three areas: pipeline throughput, editorial quality control, and legal compliance. Early movers will be teams that combine lightweight automation with disciplined human oversight and measurable experiments.

- Generative models move into production: Widely available APIs and cheaper compute make `LLM`-assisted drafting a baseline capability.

- Content attribution and provenance demands: Platforms and regulators will increase requirements for disclosure and verifiability.

- Search engines favor E-E-A-T and intent alignment: Quality signals tied to expertise and experience will drive distribution.

- Automated SEO at scale: Semantic content optimization and entity-driven topic clusters become standard practice.

- Creative augmentation, not replacement: Human editing and original reporting remain differentiators.

- Data-driven personalization: Content tailored by intent segments delivers higher conversion with automation supporting execution.

| Recommendation | Rationale | First Step | Expected Impact |
|---|---|---|---|
| Run a controlled 90-day pilot | Limits risk while proving value | Select 3 topics, track traffic & time | +15–40% workflow speed |
| Implement editorial governance | Maintains quality at scale | Create checklist: accuracy, citations | Fewer reputational errors |
| Invest in training & human oversight | Humans catch nuance models miss | 2-day editor bootcamp on prompts | Better final output quality |
| Measure & iterate with experiments | Data-driven improvements scale | Establish A/B testing cadence | Continuous CTR/engagement gains |
| Monitor legal & ethical developments | Avoid compliance risk | Assign owner for policy updates | Reduced legal exposure |

Applied together, these recommendations reduce overhead and let creators focus on high-impact work. For organizations ready to scale, explore ways to scale your content workflow with automation and governance.

Conclusion

After exploring quality drift, creative fatigue, and workflow bottlenecks, the practical path forward is clear: standardize prompts, centralize review, and automate repetitive publishing tasks. Teams that adopt these steps see faster output and more consistent brand voice; evidence suggests small pilots reduce revision cycles by weeks and free senior writers for strategic work. Readers wondering whether to start small or overhaul systems can begin with a single content stream and measure cadence, quality, and time saved before scaling.

For teams ready to move from experimentation to repeatable production, run a focused pilot, assign a single owner for governance, and instrument KPI reporting from day one. To streamline execution and accelerate measurement, platforms like Scaleblogger can help operationalize prompt libraries and approval workflows. To take the next step, start a pilot with Scaleblogger.